Snowflake doesn’t fail quietly. When it’s happy, dashboards fly, and teams feel smart. When it’s not, you’re staring at half-loaded tables, “temporary” scripts that somehow became permanent, and a growing suspicion that your pipelines are being held together by optimism. That’s usually the moment ETL tools enter the conversation.

In 2026, picking the best Snowflake ETL tools is less about features on a pricing page and more about how much friction you’re willing to tolerate. Some tools are built for speed, some for control, and some for people who don’t want ETL to become their personality. The trick is knowing which one matches your reality.

This guide is your shortcut. We’ll break down the tools that work with Snowflake – not just on paper, but in production – and call out where each one shines, struggles, or quietly saves your sanity. No ceremony, no buzzword soup. Just solid options and honest trade-offs so you can spend more time using Snowflake, not feeding it.

Table of Contents

- Understanding ETL for Snowflake

- Benefits of Using Third-Party Snowflake ETL Tools

- 4 Things to Consider When Selecting ETL Tools for Snowflake

- Top 10 Snowflake ETL tools

- How to Import Data into Snowflake Using Skyvia in Minutes?

- Conclusion

- FAQ

Understanding ETL for Snowflake

What Is ETL?

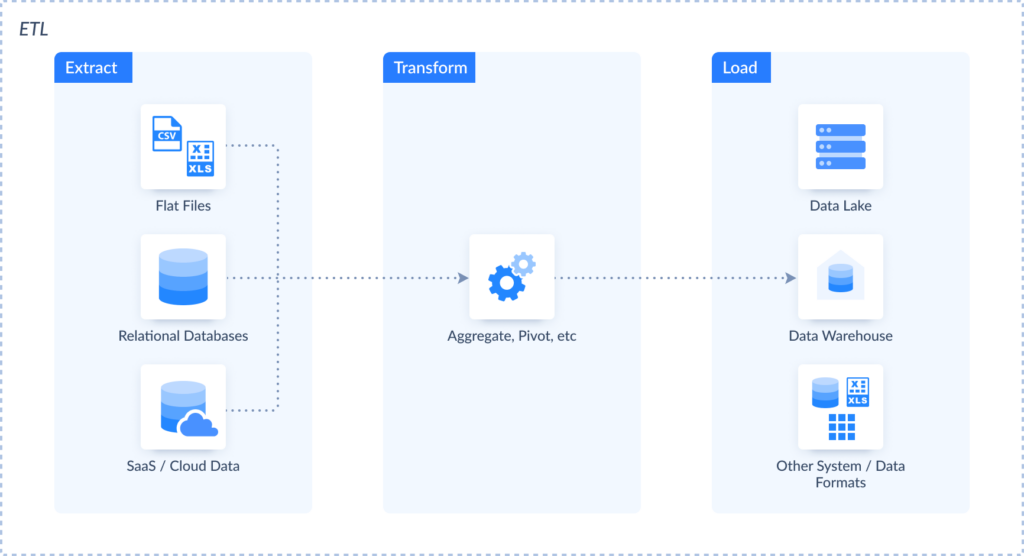

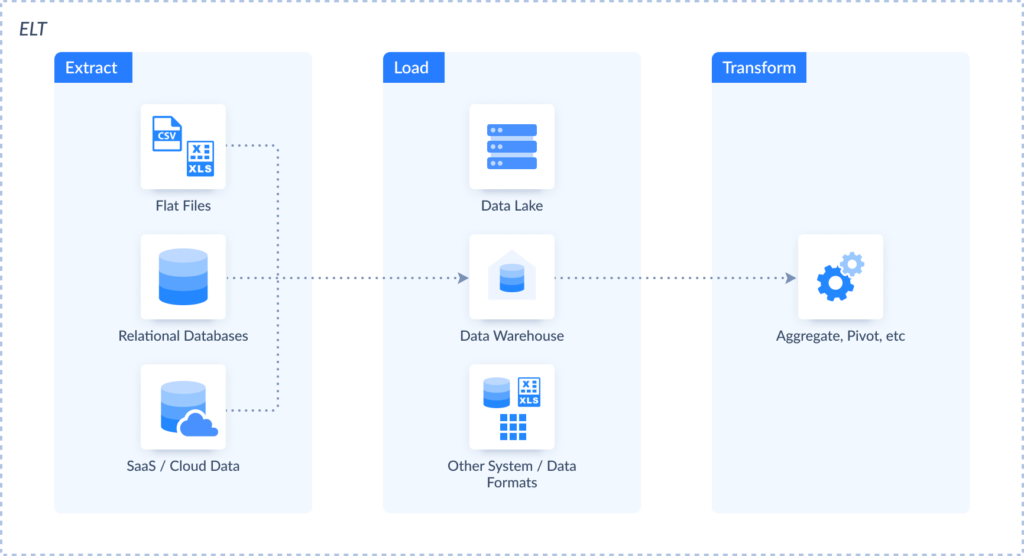

ETL stands for Extract, Transform, Load – the three-step dance for handling data:

- The Extract stage involves collecting records from multiple sources, including legacy systems and modern SaaS applications.

- The Transform step applies transformation techniques, basic or advanced, depending on the current requirements.

- The Load step transfers data to the specified destination, typically a database or data warehouse (DWH).

With Snowflake as the destination, that means building ETL pipelines that collect data from flat files, databases, and SaaS applications (some of which predate your employment) and then move it into Snowflake.
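To make the three stages concrete, here is a minimal sketch in Python. It is an illustration only: the sample CSV, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for Snowflake so the example runs anywhere.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this comes from a file, database, or SaaS API.
RAW_CSV = """id,email,amount
1, Alice@Example.com ,10.50
2,bob@example.com,7
3,,3.25
"""


def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an email, normalize emails, cast types."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # cleansing step: skip incomplete records
        cleaned.append((int(row["id"]), email, float(row["amount"])))
    return cleaned


def load(conn, rows):
    """Load: write the already-transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn, text=RAW_CSV):
    """Run the three stages in order and return what landed in the destination."""
    load(conn, transform(extract(text)))
    return conn.execute("SELECT id, email, amount FROM orders ORDER BY id").fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` leaves only the two complete, normalized rows in `orders`. The point is the ordering: data is cleaned before it ever reaches the warehouse.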

What Is ELT?

Sometimes loading comes before transformation: that’s ELT (Extract, Load, Transform). Snowflake handles it well because transformations run inside the warehouse, often with tools like dbt. That avoids the performance nightmare of extracting massive datasets, transforming them elsewhere, and then loading them back like some kind of data relay race.
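As a rough sketch of the ELT order of operations, the Python example below lands raw records first and then reshapes them with SQL inside the database. The table names and sample rows are hypothetical, and SQLite is used purely as a stand-in for Snowflake; in a real setup the SQL step would typically live in a dbt model or a Snowflake task.

```python
import sqlite3

# Hypothetical raw records, landed as-is (untrimmed strings) the way an ELT tool loads them.
RAW_ROWS = [
    ("1", " Alice@Example.com ", "10.50"),
    ("2", "bob@example.com", "7"),
    ("3", "", "3.25"),
]


def load_raw(conn, rows):
    """Load: copy source records into a raw table without changing them."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def transform_in_warehouse(conn):
    """Transform: build a clean table with SQL that runs inside the warehouse."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER)  AS id,
               LOWER(TRIM(email))   AS email,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE TRIM(email) <> ''
        """
    )


def run_elt(conn):
    """Load first, transform second, then return the cleaned rows."""
    load_raw(conn, RAW_ROWS)
    transform_in_warehouse(conn)
    return conn.execute("SELECT id, email, amount FROM orders ORDER BY id").fetchall()
```

Note that the raw table is still there after the transformation, so the cleaning logic can be changed and re-run later without extracting the data again.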

Here are the main differences between ELT and ETL.

| | ELT | ETL |
|---|---|---|
| Destinations | Mainly used to load data into a cloud DWH or a data lake. | Imposes no limitations on the destination: data can be loaded into any supported target. |
| Data types | Works with structured, semi-structured, and unstructured data. | Supports structured records. |
| Transformations | Performed on the destination side after loading; usually requires programming skills. | Performed after extraction and before loading to the destination. |

Note: Apart from ETL and ELT approaches, Reverse ETL is also often used. It allows you to move records from Snowflake to other business apps for data activation.

Why Use ETL for Snowflake?

When organizational data is dispersed across multiple platforms, it is challenging to get a clear picture of the company’s performance. Consolidating it in Snowflake gives you a unified view of business health. Both ELT and ETL approaches can help with this since they automate data collection, transform data in transit or after loading, and optimize its loading into Snowflake.

There are other good reasons to use ETL for Snowflake:

- Apply multistage transformations on data intended for analytical purposes.

- Employ elasticity, scalability, and flexibility for changing data loads.

- Configure ETL pipelines and automated workflows in a visual interface.

- Enjoy optimized cost and performance for large workloads.

- Take advantage of real-time or near-real-time processing.

- Obtain a centralized repository with no-code data movement.

- Extract insights by blending data from multiple sources.

- Ensure data security and privacy on transfer and storage.

Native ETL Capabilities of Snowflake

Snowflake’s creators recognized that ETL processes actually matter, so they embedded data integration features into the warehouse itself rather than pretending pipelines are someone else’s concern. You already have pipeline-building and configuration capabilities on hand to make routine work easier.

- Snowpipe. This service handles continuous, low-latency data ingestion, loading files in near real time as they arrive in a stage.

- Streams and Tasks. Streams record changes to a table (Change Data Capture, or CDC), and tasks execute SQL automatically on a schedule, so you can monitor and react to data modifications effectively.

- Zero-Copy Cloning. This feature creates a clone of a table, schema, or database without physically duplicating the underlying data. It can be used for testing and data manipulation without the extra overhead of replication.

- Stored Procedures. This option executes data manipulation logic directly in Snowflake.
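To give a feel for how these native pieces fit together, the Python sketch below only assembles the Snowflake SQL statements for a tiny pipeline; it does not connect to anything. Every object name (`RAW_EVENTS`, `EVENTS_STAGE`, `ETL_WH`, `EVENTS_CLEAN`, the `PAYLOAD` column) is hypothetical, and the pipe assumes an external stage with cloud event notifications already configured.

```python
def native_pipeline_statements(table="RAW_EVENTS", stage="EVENTS_STAGE",
                               warehouse="ETL_WH", target="EVENTS_CLEAN"):
    """Return Snowflake SQL for a small native pipeline (all names are hypothetical)."""
    stream = f"{table}_STREAM"
    return {
        # Snowpipe: continuously copy new files from a stage into the raw table.
        "snowpipe": (
            f"CREATE PIPE {table}_PIPE AUTO_INGEST = TRUE AS "
            f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = 'JSON')"
        ),
        # Stream: track row-level changes (CDC) on the raw table.
        "stream": f"CREATE STREAM {stream} ON TABLE {table}",
        # Task: every 5 minutes, move newly inserted rows if the stream has data.
        "task": (
            f"CREATE TASK {table}_TASK WAREHOUSE = {warehouse} "
            f"SCHEDULE = '5 MINUTE' "
            f"WHEN SYSTEM$STREAM_HAS_DATA('{stream}') AS "
            f"INSERT INTO {target} (PAYLOAD) "
            f"SELECT PAYLOAD FROM {stream} WHERE METADATA$ACTION = 'INSERT'"
        ),
        # Zero-copy clone: a test copy that shares storage with the original.
        "clone": f"CREATE TABLE {table}_DEV CLONE {table}",
    }
```

Each statement would be run once in a worksheet or through a client. Keep in mind that a newly created task starts out suspended and has to be resumed with `ALTER TASK ... RESUME` before it runs, which is one of the small details that makes hand-built pipelines more work than they first appear.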

Note that constructing pipelines with these features is complex. It takes a modular approach, where assembling integration dataflows is like building with LEGO bricks, and it demands a lot of time, effort, and regular maintenance. Third-party data integration tools spare you these complications, letting you set everything up in a few clicks. Some of these tools are available from Snowflake Marketplace.

Benefits of Using Third-Party Snowflake ETL Tools

Unlike the options mentioned above, the solutions by external providers don’t limit you to using only Snowflake. They support multiple sources and destinations, allowing you to build pipelines of different configurations. Such solutions also provide a range of additional features that simplify the integration process.

Pre-built Connectors

Data integration services come with pre-built connectors that allow users to connect to hundreds of sources (apps, databases, flat files, etc.). That streamlines the integration process since non-tech business users (marketers, salespeople, etc.) can build pipelines independently without IT experts’ help.

Transformation Options

All ETL tools include transformation options, though their complexity varies from one solution to another. Some offer simple transformations (cleansing, standardization, etc.), while others provide complex multistage operations (duplicate removal, lookup, etc.).
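For readers who have not seen these operations spelled out, here is a small Python sketch of the four transformations named above: cleansing, standardization, duplicate removal, and lookup. The field names, sample records, and the country-to-region table are hypothetical; real tools expose the same ideas through visual components or SQL.

```python
def cleanse(rows):
    """Cleansing: drop records that are missing a required field."""
    return [r for r in rows if (r.get("email") or "").strip()]


def standardize(rows):
    """Standardization: bring values to one consistent format."""
    return [
        {**r, "email": r["email"].strip().lower(), "country": r["country"].strip().upper()}
        for r in rows
    ]


def deduplicate(rows, key="email"):
    """Duplicate removal: keep the first record seen for each key value."""
    seen, unique = set(), []
    for r in rows:
        if r[key] not in seen:
            seen.add(r[key])
            unique.append(r)
    return unique


def lookup(rows, table, key="country", target="region"):
    """Lookup: enrich each record with a value from a reference table."""
    return [{**r, target: table.get(r[key], "UNKNOWN")} for r in rows]


# Hypothetical input records and reference data.
CONTACTS = [
    {"email": " Ann@Example.com", "country": "us"},
    {"email": "ann@example.com ", "country": "US"},
    {"email": "", "country": "de"},
    {"email": "li@example.com", "country": "sg"},
]
REGIONS = {"US": "AMER", "DE": "EMEA"}


def run_transformations(rows=CONTACTS, regions=REGIONS):
    """Chain the steps in a sensible order: clean, normalize, dedupe, enrich."""
    return lookup(deduplicate(standardize(cleanse(rows))), regions)
```

The order matters: deduplication only catches the two `ann@example.com` records because standardization runs first and makes them identical.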

Manual Input Reduction

Another benefit of modern ETL solutions is their advanced automation options. They simplify data management, eliminating manual input and minimizing the probability of human error.

Scheduling

Data integration tools usually contain scheduling options for regular data transfers. That way, you can select specific intervals at which records will travel from selected sources to Snowflake. Scheduling options ensure that your centralized repository always contains the freshest data ready for reporting tasks.

Scalability

When businesses grow rapidly, they experience spikes in data loads as well. Companies may also see data volumes drop, for instance due to external economic factors. Luckily, modern data platforms handle these changes by scaling up and down as needed.

Monitoring

Snowflake ETL tools embed logging and error-handling features for a better overview of the integration results. They also send notifications via email or SMS to help users address any issues quickly.

4 Things to Consider When Selecting ETL Tools for Snowflake

There are many ETL tools for Snowflake on the market these days, so it can be hard to tell which one suits you best. The list below covers the four CUPS criteria (Connectivity, Usability, Pricing, Scalability) to consider when evaluating data integration tools and selecting one for your organization.

- Connectivity. Validate that the platform supports Snowflake and your actual sources out of the box, because compatibility issues surfacing post-purchase spawn emergency meetings no one wants to attend.

- Usability. Determine if the tool is actually friendly or just pretending. Does it gate everything behind code, or offer visual interfaces where people can work without syntax errors haunting their dreams? Compare that against your team’s skills and what building pipelines will realistically require from them day-to-day.

- Pricing. Explore the ETL software’s pricing models and see which one matches your budget.

- Scalability. Investigate how a chosen tool can handle changing volumes without impacting performance.
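One simple way to apply the four criteria is a weighted scorecard. The Python sketch below is only an illustration of the arithmetic: the weights, the 1 to 5 scores, and the tool names are all made up, and you would replace them with your own assessment.

```python
# Hypothetical weights for the CUPS criteria; they should sum to 1.
WEIGHTS = {"connectivity": 0.4, "usability": 0.3, "pricing": 0.2, "scalability": 0.1}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (1 to 5) into a single weighted number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(weights[c] * scores[c] for c in weights), 2)


def rank(candidates, weights=WEIGHTS):
    """Return candidate names ordered from best to worst weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)


# Made-up scores for two placeholder tools.
CANDIDATES = {
    "Tool A": {"connectivity": 5, "usability": 3, "pricing": 2, "scalability": 4},
    "Tool B": {"connectivity": 3, "usability": 5, "pricing": 4, "scalability": 3},
}
```

With these placeholder numbers, `rank(CANDIDATES)` puts Tool B ahead of Tool A (3.8 versus 3.7), even though Tool A has better connectivity. Shifting the weights toward the criterion your team cares about most can flip the result, which is the main reason to write the weights down before looking at vendors.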

Top 10 Snowflake ETL Tools

Let’s look in detail at the most popular Snowflake ETL solutions, starting with this comparison table:

| Platform | G2 Rating | Pros | Cons |
|---|---|---|---|
| Skyvia | 4.8 out of 5 | No-code interface; 200+ sources; data manipulation features; automatic schema detection and mapping | No phone support |
| Integrate.io | 4.3 out of 5 | Drag-and-drop, no-code interface; 150+ pre-built connectors | Error messages are unclear; debugging is time-consuming |
| Apache Airflow | 4.4 out of 5 | Create ETL pipelines with Python | Requires Python coding skills |
| Matillion | 4.4 out of 5 | Transformations can be performed with SQL queries or via a GUI | Lack of documentation |
| Stitch | 4.4 out of 5 | Custom connectors | Lacks data transformation options |
| Fivetran | 4.2 out of 5 | Data transformation options with dbt Labs; governance options | Lack of data management options |
| Hevo Data | 4.4 out of 5 | 150+ built-in connectors | No way to organize or categorize pipelines |
| Airbyte | 4.4 out of 5 | 400+ connectors with the possibility of customization | Limited transformation capabilities |
| StreamSets | 4.0 out of 5 | 50+ data systems; custom sources and processors with JavaScript, Groovy, Scala, etc. | Library dependency issues |
| Astera | 4.3 out of 5 | Pre-built transformation functions | Resource-intensive |

Skyvia

Skyvia is a leading cloud data aggregation platform that pulls records from hundreds of sources, cleans and standardizes them, and delivers analysis-ready datasets to Snowflake or other destinations through a visual, no-code interface built for real production workloads.

Let’s explore the Data Integration product in detail and see how it can help you with moving data from and to Snowflake.

- Import uses a wizard interface to create ETL and Reverse ETL pipelines, conduct transformations, and align source and destination schemas, without requiring you to write connections or mapping logic.

- Export converts Snowflake data to CSV and stores it on cloud platforms or your machine through a visual tool that doesn’t assume you have command syntax memorized or enjoy opening text editors.

- Synchronization automatically manages two-way data sync between SaaS platforms and databases, keeping everything aligned without scheduled jobs, manual checks, or discovering mismatches three weeks later during a presentation.

- Replication provides a guided interface for constructing ELT pipelines that move data from applications to databases or warehouses, replacing the usual process of coding integrations with a wizard that works on the first attempt.

- Data Flow offers a visual workspace for designing pipelines with actual depth. It allows you to integrate data from systems that store information completely differently, apply layered transformations that clean, enrich, and reshape as data flows through, all without writing nested functions that make you want to quit.

- Control Flow provides a visual workspace for choreographing integration scenarios, allowing you to define execution sequences for imports, exports, replications, and synchronizations without writing orchestration scripts.

Reviews

G2 Rating: 4.8 out of 5 (based on 250+ reviews).

Best for

- Businesses of any size and any industry.

Key features

- Skyvia is an official technology partner in Snowflake’s ecosystem.

- A no-code interface lets users build pipelines visually.

- Simple and advanced data integration scenarios.

- Skyvia supports over 200 sources (CRM systems, e-commerce platforms, payment processors, databases, DWHs, marketing automation platforms, etc.).

- Data manipulation features (sorting, filtering, searching, expressions, and others) to enhance data accuracy.

- Web-based access from any browser and platform.

- Automatic schema detection and mapping.

Disadvantages

- Data volume and update frequency limitations in the Freemium plan.

- No phone support yet.

Pricing

Skyvia offers plans from free to enterprise-level, with paid Data Integration subscriptions starting at $79 per month when your needs exceed what the free tier supports.

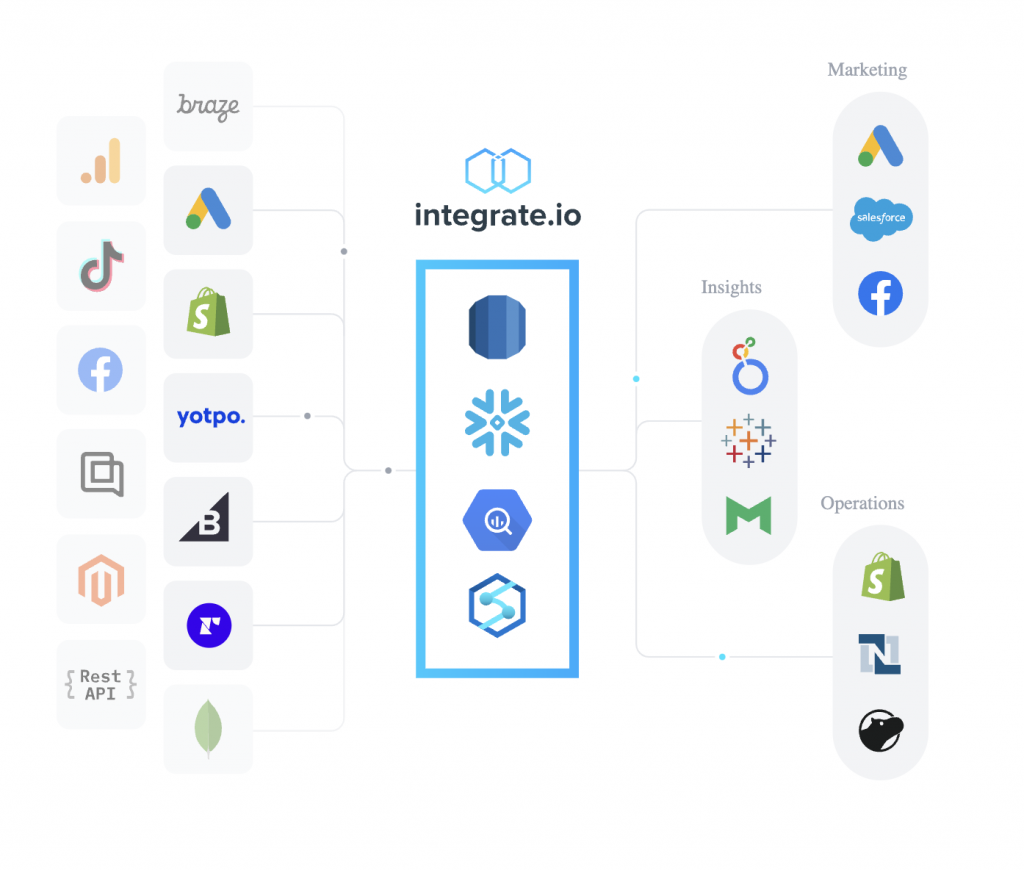

Integrate.io

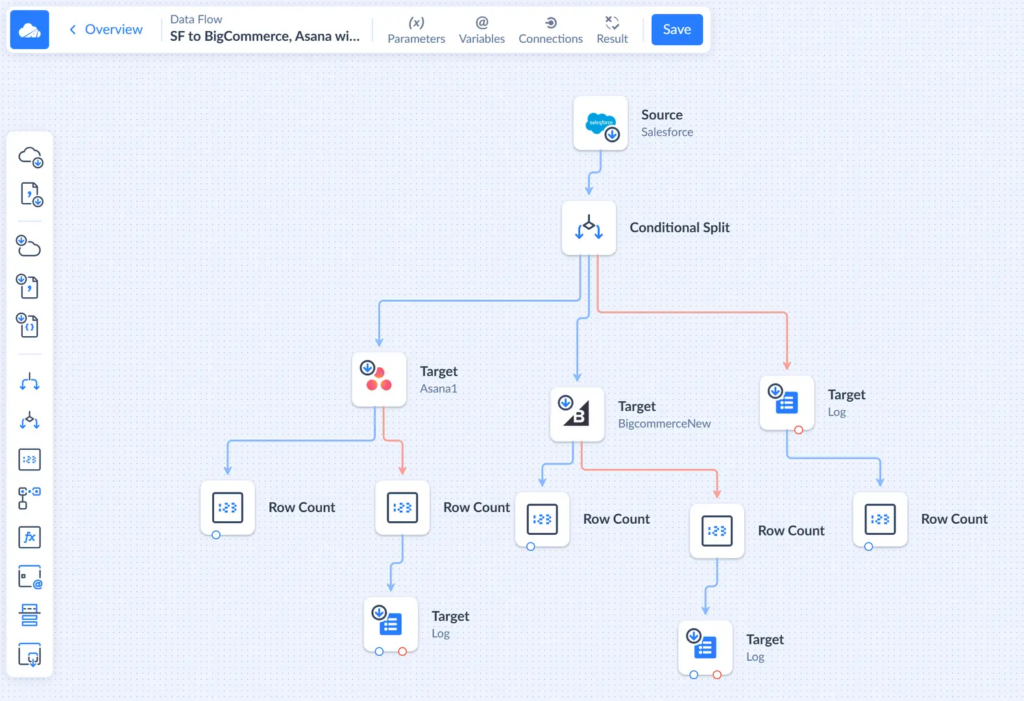

Integrate.io is a no-code, visual data integration platform that allows teams to combine cloud inputs, transformations, and destinations on a single canvas, making it simple to construct and fine-tune batch or real-time pipelines without turning integration into a full-fledged engineering project.

Reviews

G2 Rating: 4.3 out of 5 (based on 200+ reviews).

Best for

- Best suited for organizations with complex data integration and transformation needs.

Key features

- The drag-and-drop, no-code interface simplifies pipeline creation. It allows users to set up connections and define transformations visually.

- There are 150+ pre-built connectors, including Snowflake and SaaS services.

- Integrate.io provides 360-degree support via chat, email, phone, and Zoom.

Disadvantages

- Debugging can be time-consuming since it requires a detailed check of logs.

- Error messages are unclear.

Pricing

There are four different pricing plans for this solution, and each considers cost per credit, feature set, expected data volume, and some other principal aspects.

Apache Airflow

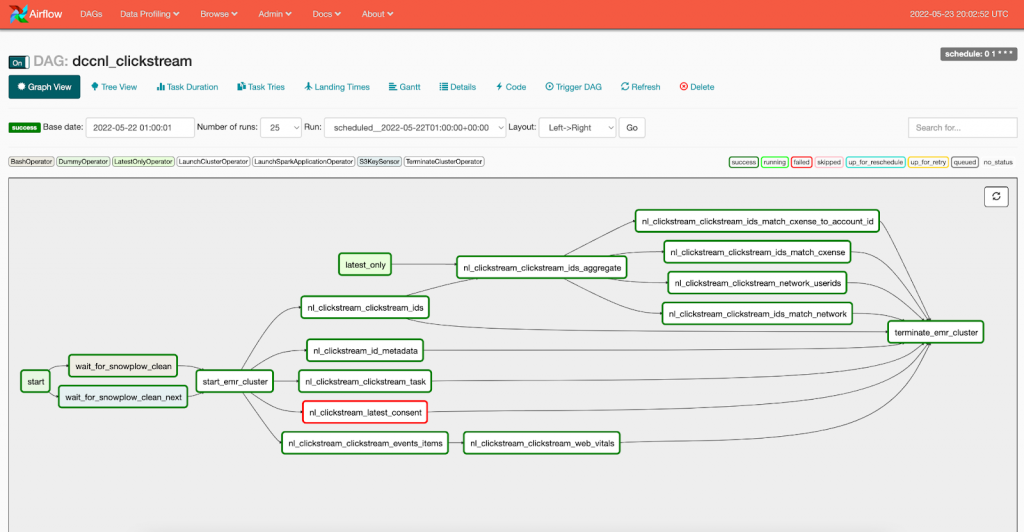

Apache Airflow is an open-source orchestration platform often used as a Snowflake ETL tool, where teams define batch data pipelines as code and use DAGs to control exactly how data is loaded, transformed, and scheduled inside Snowflake at scale.
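If the term DAG is new, the sketch below shows the core idea using only the Python standard library. It is deliberately not Airflow code and uses none of Airflow’s API: the task names are hypothetical, and `graphlib` simply demonstrates how declared dependencies determine a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "load_to_snowflake": {"extract_orders", "extract_customers"},
    "transform_in_snowflake": {"load_to_snowflake"},
    "refresh_dashboard": {"transform_in_snowflake"},
}


def execution_order(dag):
    """Return one valid run order in which every task follows its dependencies.

    Raises graphlib.CycleError if the dependencies form a loop, which is
    exactly what the "acyclic" in Directed Acyclic Graph rules out.
    """
    return list(TopologicalSorter(dag).static_order())
```

`execution_order(PIPELINE)` always places both extract tasks before the load and ends with the dashboard refresh. An orchestrator like Airflow adds scheduling, retries, and monitoring on top of this ordering.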

Reviews

G2 rating: 4.4 out of 5 (based on about 100 reviews).

Best for

- Companies with complex business logic orchestrated in Python.

Key features

- Write ETL workflows in Python – the language with millions of tutorials.

- Hooks into Snowflake and cloud warehouses while still tolerating on-premises databases.

- Version control with Git means pipelines become trackable code rather than mysteries.

Disadvantages

- Requires Python coding skills and technical expertise.

- It’s difficult to modify data pipelines once they are launched.

Pricing

Since Apache Airflow is an open-source tool, it can be installed and used for free.

Matillion

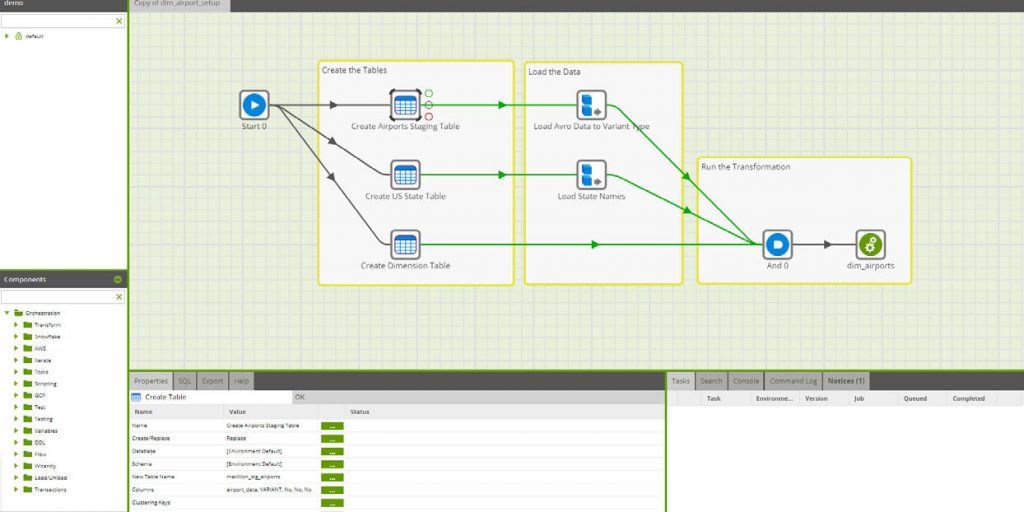

Matillion is a cloud-native Snowflake ETL tool that lets teams build and run pipelines through a visual, drag-and-drop interface, handling both data loading and in-warehouse transformations without turning pipeline work into a coding project.

Reviews

G2 rating: 4.4 out of 5 (based on 80 reviews).

Best for

- Better for enterprises than SMBs.

Key features

- Offers connectors to data systems on premises and in the cloud.

- Has tools for managing and orchestrating data.

- Transformations can be carried out via a graphical user interface (GUI) or SQL queries.

Disadvantages

- Lack of documentation describing features and instructions for their configuration.

- There is no option to restart tasks from the point of failure. The job needs to be restarted from the beginning.

Pricing

The cost of Matillion ETL is credit-based, meaning that it depends on the data units processed.

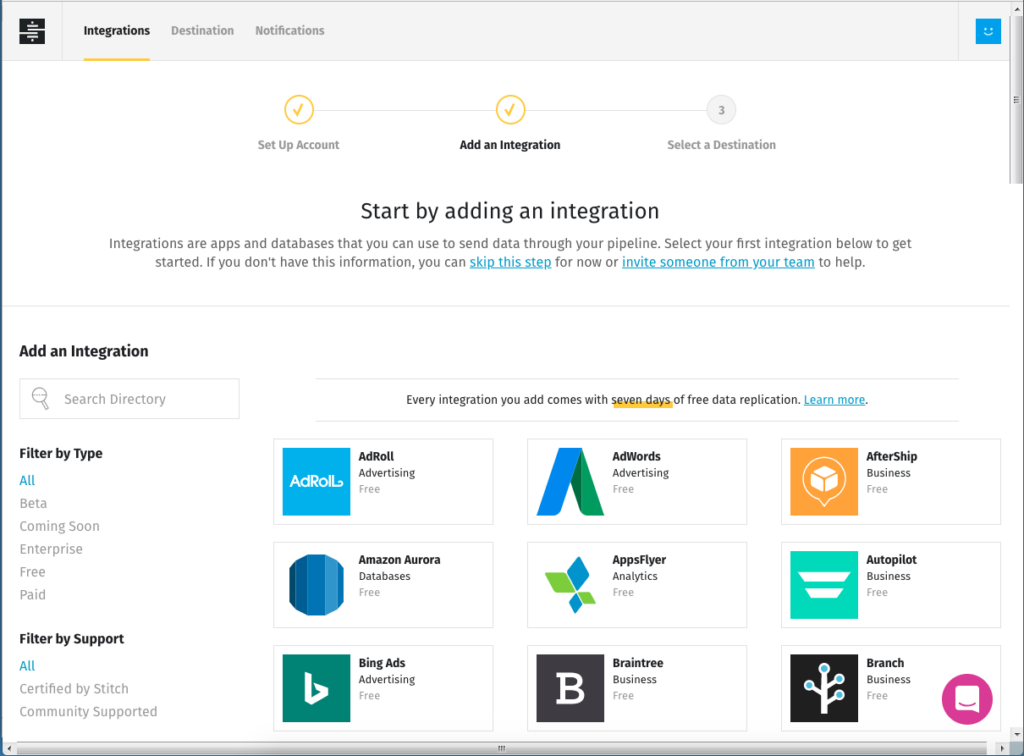

Stitch

Stitch is a lightweight Snowflake-friendly ETL tool that focuses on reliably extracting data from SaaS apps and databases, handling the plumbing, monitoring, and security for you, then delivering clean, ready-to-load data with minimal setup and very little control needed from a user’s side.

Reviews

G2 Rating: 4.4 out of 5 (based on 60+ reviews).

Best for

- Perfect for developers in small to medium-sized businesses.

Key features

- Allows you to create custom connectors to sources.

- Provides a graphical UI to set up Stitch and configure ETL pipelines.

- Includes a dashboard for pipeline tracking and monitoring.

- Schedules data loading at predefined times.

Disadvantages

- Lacks data transformation options.

- Supports only a limited number of data destinations, depending on the selected subscription.

Pricing

Stitch offers three pricing tiers. The cost starts at $100 per month.

Fivetran

Fivetran is a Snowflake-ready ELT tool built for teams that want data consolidation without the daily upkeep, automatically pulling data from hundreds of sources, handling schema drift behind the scenes, and keeping Snowflake fed with fresh, reliable data as a single source of truth.

Reviews

G2 Rating: 4.2 out of 5 (based on 380+ reviews).

Best for

- Mid-size and large enterprises in the financial industry.

Key features

- Implements data governance options for role-based access controls and metadata sharing.

- Provides transformation options with dbt Labs.

- Ensures advanced security with private networks, column hashing, and other approaches.

- Offers automated pipeline setup and monitoring with minimal maintenance.

Disadvantages

- Limited support for Amazon Kinesis and Amazon Aurora data services.

- Lack of data management options.

Pricing

Fivetran provides four pricing plans, including a free tier. The price depends on the data volumes, number of users, available features, and connectors.

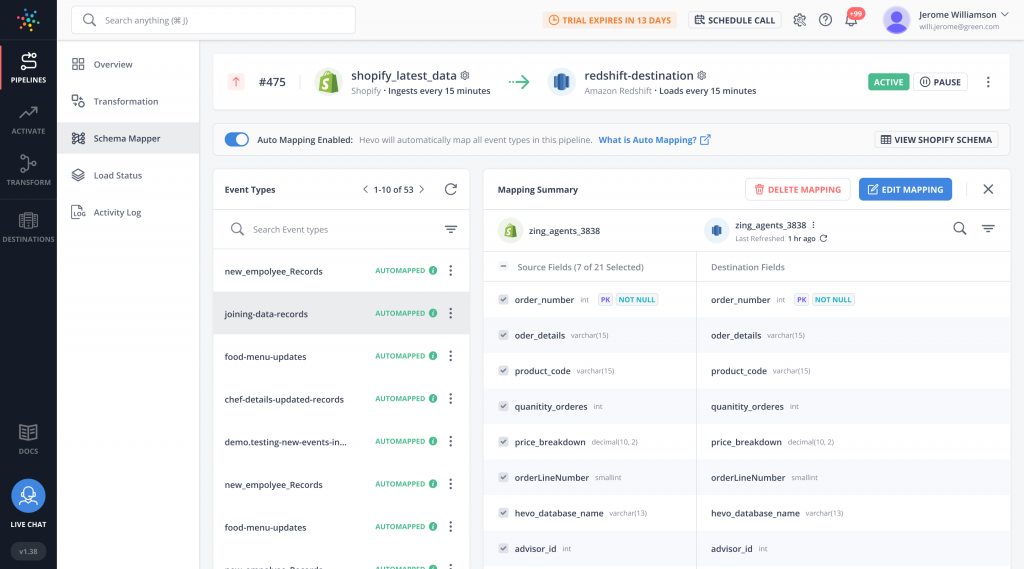

Hevo Data

Hevo Data is a no-code ETL platform that helps teams move and shape data across a wide range of warehouses and analytics platforms, with strong support for Snowflake, among others. It handles schema mapping and drift automatically, while letting you mix visual transformations with Python when pipelines need more control.

Reviews

G2 Rating: 4.4 out of 5 (based on 200+ reviews).

Best for

- Companies with limited technical resources can benefit from Hevo’s no-code interface.

Key features

- Anonymizes data before loading it to the destination.

- Provides 150+ built-in connectors to databases, SaaS platforms, etc.

- Near real-time data loading to certain destinations.

Disadvantages

- There is no way to organize or categorize data pipelines.

- Although Hevo Data supports real-time data streaming, latency can sometimes be an issue, especially for large datasets.

Pricing

Hevo Data offers four pricing plans, including a free tier with up to 1M events per month. For paid plans, the price starts at $239 per month.

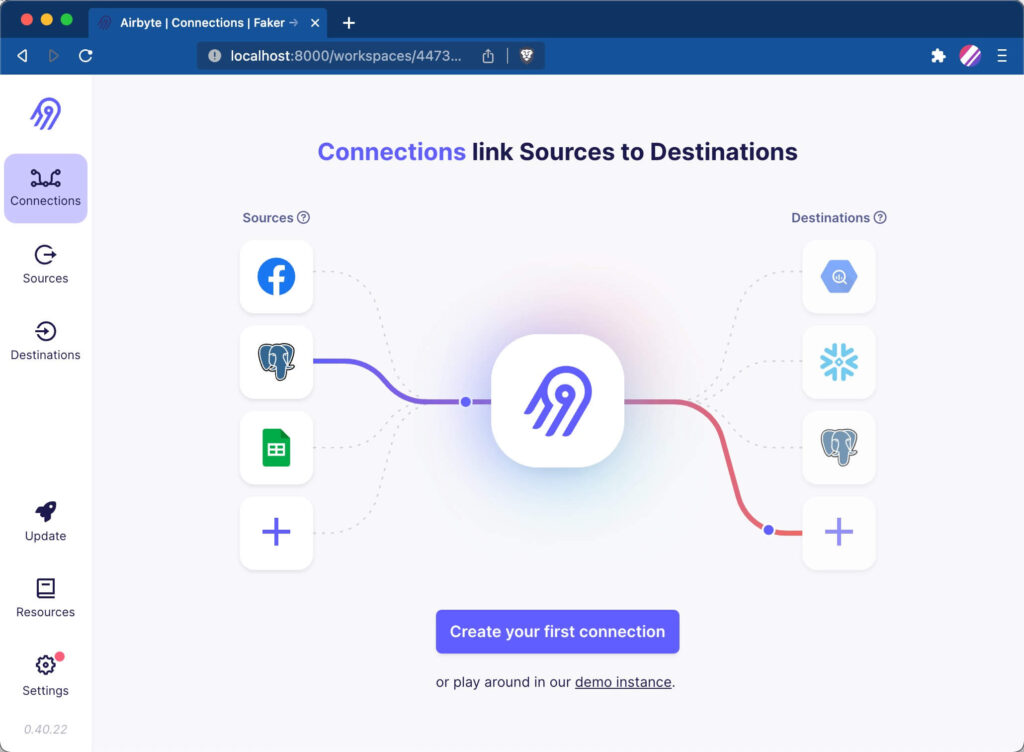

Airbyte

Airbyte is an open-source data movement platform often used to load data into Snowflake, especially when teams want control over how raw records land in the warehouse. Its growing connector ecosystem and flexible deployment options make it a solid fit for ELT-style workflows, where data is loaded first and shaped later using SQL or transformation tools.

Reviews

G2 rating: 4.4 out of 5 (based on about 70 reviews).

Best for

- It’s an open-source solution suitable for both SMBs and enterprises.

Key features

- Offers 400+ connectors with the possibility of customization.

- Supports incremental data loading.

- Provides detailed logging and error detection options.

Disadvantages

- Limited transformation capabilities.

- Data-intensive pipelines can be resource-consuming and thus costly.

Pricing

Open-source deployment on your host is free. The cost of the cloud-hosted deployment can be discussed with sales.

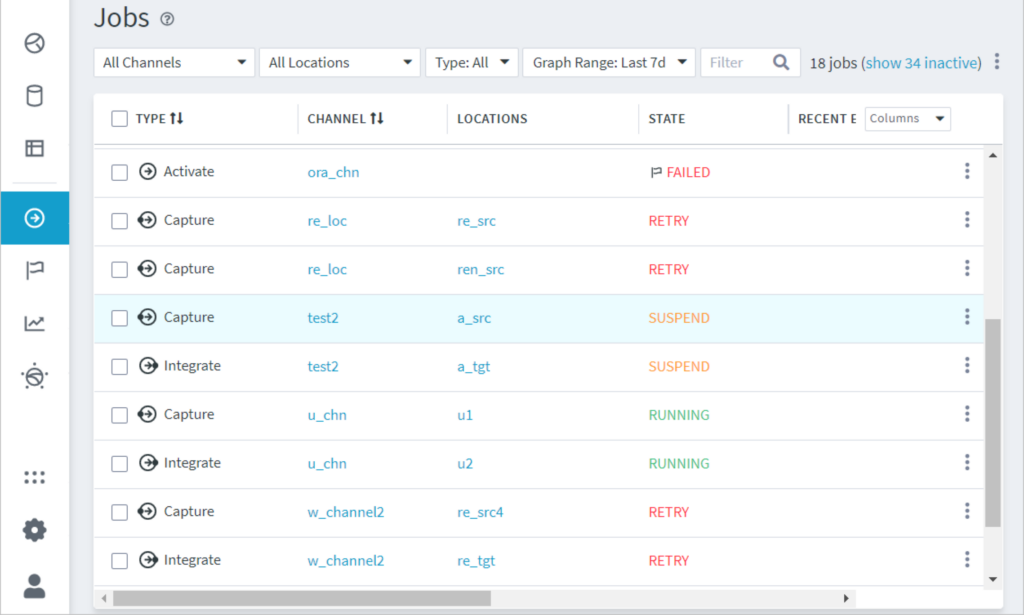

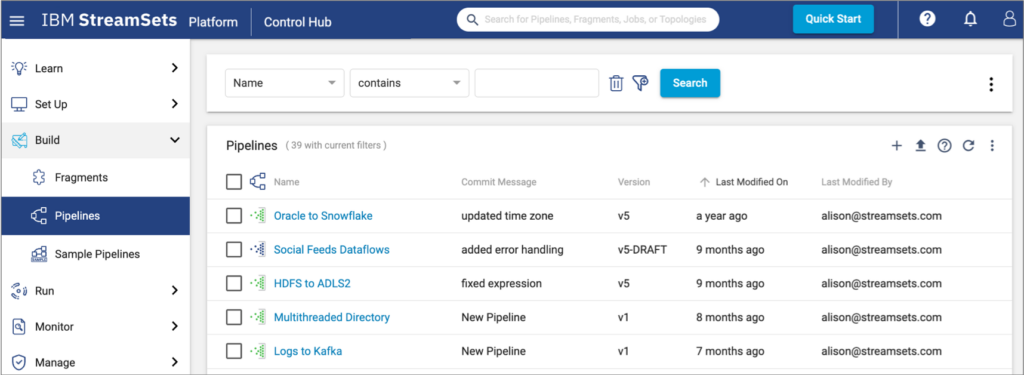

StreamSets

StreamSets is a cloud-managed data integration platform from IBM that teams use to move and prepare data for Snowflake across hybrid and multi-cloud environments. Its visual pipeline builder and strong streaming support make it a good fit when data arrives continuously and needs to stay reliable as schemas and sources change.

Reviews

G2 rating: 4.0 out of 5 (based on 100+ reviews).

Best for

- Perfect for large enterprises.

Key features

- Provides the possibility to add custom data sources and processors with JavaScript, Groovy, Scala, etc.

- Has extensive documentation with a thorough description of product functionality.

- Supports 50+ data systems, including streaming sources such as Kafka and MapR.

Disadvantages

- Lacks extensive coverage of SaaS input sources.

- Copying the same pipelines to different servers might cause library dependency issues.

Pricing

The cost of StreamSets can be discussed with IBM’s sales managers.

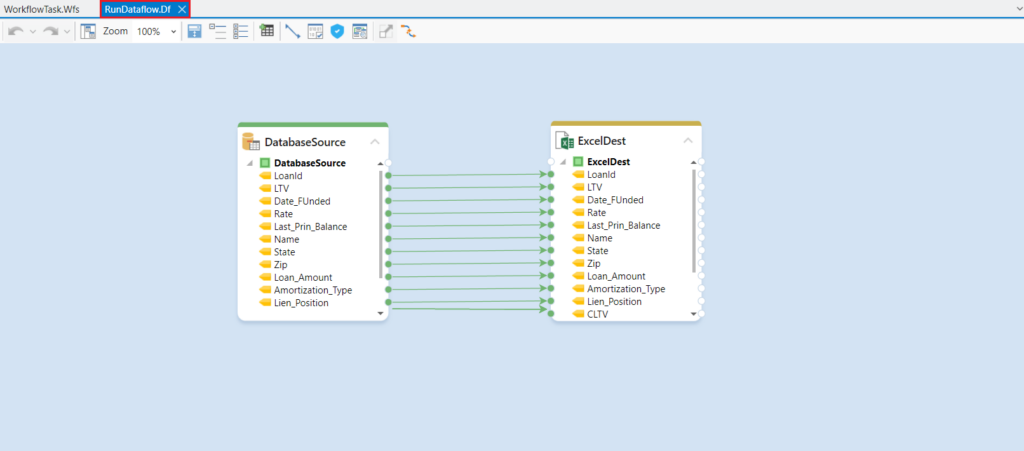

Astera

Astera is a full-cycle data management platform built for teams that want to design ETL pipelines without stitching tools together. With 50+ connectors, built-in transformations, visual mapping, and scheduling baked in, it’s well-suited for recurring aggregation jobs where data accuracy and consistency matter more than tinkering with low-level plumbing.

Reviews

G2 rating: 4.3 out of 5 (based on nearly 50 reviews).

Best for

- Best suited for SMBs and enterprises.

Key features

- Has built-in connectors to databases, cloud storage, flat files, and data warehouses, including Snowflake.

- Offers pre-built transformation functions for shaping data with no code.

- Applies AI-powered and role-based mapping.

- Provides automated data quality management to prepare data before loading it into Snowflake.

Disadvantages

- Steep learning curve for non-technical users.

- This tool can be resource-intensive, especially when handling large datasets or complex data transformations.

Pricing

Astera’s pricing is available on request from its sales representatives.

How to Import Data into Snowflake Using Skyvia in Minutes?

Let’s explore an example of configuring an integration pipeline using one of the Snowflake ETL tools – the Skyvia platform.

Note: Skyvia supports both ETL and ELT workflows, so you can select the scenarios that currently meet your business requirements.

Step 1. Configure Source Connector

Skyvia supports 200+ data sources, including cloud apps, databases, data warehouses, storage systems, and flat files. To set up the required connectors, take the following steps:

- Log into your Skyvia account.

- Navigate to +Create New -> Connectors.

- Select the source you need from the list.

- Follow the step-by-step instructions provided within the setup screen of the selected connector.

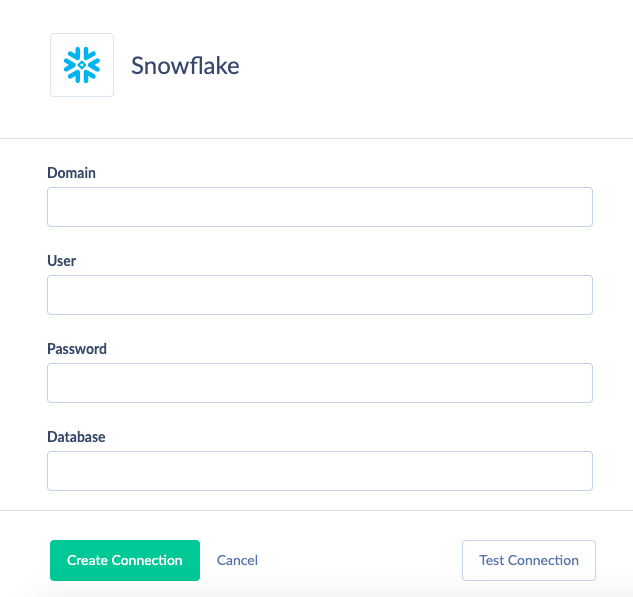

Step 2. Configure Snowflake Connector

In your Skyvia account, go to +Create New -> Connectors and select Snowflake from the list.

You need to specify the following required connection parameters:

- Domain is the Snowflake account domain.

- User is the username to log in with.

- Password is the password to log in with.

- Database is the database name.

The Snowflake connector also has the following optional connection parameters:

- Schema is a current schema name in the database. Although this parameter is optional, you need to specify it when using replication or import in the Bulk Load mode.

- Warehouse is the name of the warehouse used for a database.

- Role is a role name used to connect.
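The required and optional parameters above can be captured in a small Python structure, which is handy if you keep connection settings in code or want to check them before filling in a form. This is a sketch, not Skyvia’s API: the class, its method names, and the sample values are hypothetical, and the schema rule simply encodes the note about replication and Bulk Load import.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class SnowflakeConnection:
    # Required parameters.
    domain: str
    user: str
    password: str
    database: str
    # Optional parameters.
    schema: Optional[str] = None
    warehouse: Optional[str] = None
    role: Optional[str] = None

    def validate(self, needs_schema=False):
        """Check required values; set needs_schema=True for replication or Bulk Load import."""
        for name in ("domain", "user", "password", "database"):
            if not getattr(self, name):
                raise ValueError(f"{name} is required")
        if needs_schema and not self.schema:
            raise ValueError("schema is required for replication or Bulk Load import")

    def as_params(self):
        """Return only the parameters that were actually set."""
        return {key: value for key, value in asdict(self).items() if value is not None}


# Hypothetical values for illustration only.
EXAMPLE = SnowflakeConnection(
    domain="example.snowflakecomputing.com",
    user="etl_user",
    password="change-me",
    database="ANALYTICS",
)
```

`EXAMPLE.validate()` passes, while `EXAMPLE.validate(needs_schema=True)` raises a `ValueError` until a schema such as `PUBLIC` is supplied. In real use, the password would come from a secrets manager or environment variable rather than source code.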

Step 3. Set Up Integration Scenario

As mentioned above, Skyvia provides several tools for moving data to and from Snowflake. In this example, we use the Replication tool to create an ELT pipeline and transfer data from Salesforce to Snowflake.

- In the top menu, go to +Create New -> Replication.

- Select Salesforce as a source connector and Snowflake as a destination.

- Select the fields for replication.

- Schedule the replication by specifying the exact time and date at which it should take place or indicate the intervals. Make sure that the Incremental Updates checkbox is selected.

- Save the replication and click Run to start it.

- Check the status of the integration scenario in the Monitor tab.

Conclusion

If Snowflake is your warehouse, your ETL tool is the part that decides whether working with it feels effortless or like a constant scramble.

- If you want maximum control and you already have developers living in Airflow DAGs and Git, a code-first stack (Airflow, open-source ELT, native Snowflake pieces) can be a great fit – you’ll get flexibility, but you’ll also own the upkeep.

- If your goal is “get the data in, keep it fresh, don’t make it a lifestyle,” the no-code and managed options (Skyvia, Integrate.io, Hevo, Matillion) win on speed-to-value.

- And if you’re mostly consolidating SaaS data into Snowflake with minimal drama, ELT-first platforms like Fivetran or Stitch are built exactly for that lane.

- If you want the “set it up once, monitor it, move on” path, start with Skyvia. Create your connections, map your data visually, schedule incremental loads, and let Snowflake stay fed without turning ETL into your side project.

The key takeaway is simple: the best Snowflake ETL tools aren’t the ones with the most buzz. They’re the ones your team can run without firefighting, and that still keep your warehouse trustworthy when schemas shift and requirements change. Try Skyvia’s 14-day free trial and build your first pipeline in minutes.

FAQ for Snowflake ETL Tools

Does Snowflake use SQL or Python?

Both. SQL is the primary language for querying and transforming data in Snowflake. Snowpark additionally lets you use Python directly within Snowflake to process data, run complex data analytics scenarios, and execute machine learning tasks.

What is an ETL pipeline?

An ETL pipeline is a set of coordinated processes for data collection, transformation, and loading. It aims to move data from sources into Snowflake or other data warehouses or databases.

How to build an ETL pipeline in Snowflake?

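You can assemble one from Snowflake’s native features (Snowpipe, Streams and Tasks, and stored procedures) or use a third-party tool. For example, Skyvia’s Replication and Import tools let you set up data pipelines in a GUI with no coding.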