Hitting a wall with complex queries or struggling to scale your MySQL database? You’re not alone. With constantly growing data volumes and application requirements, many organizations stumble on MySQL’s limits in areas like analytics, advanced data types, and concurrency. That is why more and more teams are switching from MySQL to PostgreSQL, an open-source database with a robust feature set, rigorous standards conformance, and the ability to support transactional and analytical workloads in scale.

In this tutorial, we’ll walk you through everything you need to know to migrate MySQL to PostgreSQL successfully. From planning and tool selection to post-migration optimization. We’ll explore multiple migration methods, go through practical steps, tool comparisons, and validation tips to ensure a smooth transition.

Table of Contents

- Why Migrate from MySQL to PostgreSQL?

- Pre-Migration Checklist: Setting Yourself Up for Success

- Methods to Migrate Data from MySQL to PostgreSQL

- Method 1: The Power of pgloader for One-Time Migrations

- Method 2: Using a Foreign Data Wrapper (FDW) for a Phased Migration

- Method 3: The No-Code Approach with Skyvia

- Post-Migration: The Job’s Not Done Yet

- Conclusion

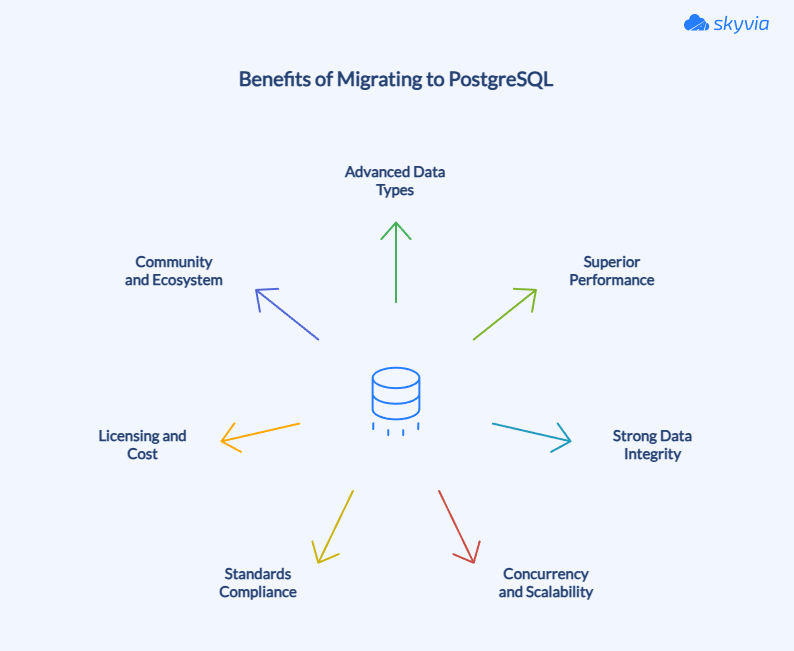

Why Migrate from MySQL to PostgreSQL?

PostgreSQL is a feature-rich, standards-compliant platform built to handle both transactional and analytical workloads at scale. Here are the main reasons why migrating from MySQL to PostgreSQL can be a strategic upgrade for your data infrastructure.

1. Advanced Data Types and Features

PostgreSQL offers extensive support for well beyond the most common data types like JSON/JSONB for query-friendly storage, arrays, hstore for key-value information, XML, and user-defined types. Robust geospatial capabilities are extended through add-ons like PostGIS, which can handle sophisticated location-based analytics. MySQL has introduced JSON capabilities, but it is less efficient, and native materialized views for performance optimization are not a feature as in PostgreSQL.

2. Superior Performance for Complex Queries

If your workloads involve large data sets, sophisticated joins, or calculations with heavy analytics, PostgreSQL’s query planner, parallel query execution, and dynamic indexing (B-tree, GIN, GiST, BRIN, and more) can decrease query time. MySQL is adequate for light OLTP and read-intensive workloads, but will break under high query complexity.

3. Strong Data Integrity and Reliability

PostgreSQL provides complete ACID compliance, strong constraints, cascading foreign keys, and guaranteed transactions. MySQL provides weak enforcement. Check constraint support is not complete, depending on the engine. PostgreSQL is far more suitable for mission-critical systems.

4. Concurrency and Scalability

PostgreSQL uses Multi-Version Concurrency Control (MVCC), meaning reads and writes can be executed at the same time without blocking each other, resulting in good performance while other processes are using the same database. These strengths are further bolstered through partitioning and replication options, or even scaling out with load balancing tools like PGpool and Slony, providing additional configurations for applications running OLAP and OLTP workloads.

5. Standards Compliance and Extensibility

PostgreSQL, closely aligned with the ANSI SQL standard, reduces surprises when porting queries or applications. Developers can also extend the database with custom functions, operators, and procedural languages, such as PL/pgSQL or PL/Python.

6. A Growing Community and Ecosystem

PostgreSQL benefits from an active global community, which continues to support improvements and growing documentation. Major cloud vendors are now providing fully managed PostgreSQL services which is making it even easier to adopt PostgreSQL.

When PostgreSQL Might Not Be the Right Move

Although PostgreSQL is a good option in many contexts, it does have some drawbacks. The learning curve can be steep, and MySQL can still outperform it for ultra-high-write workloads, or highly specialized OLTP use cases. In addition, PostgreSQL’s case-sensitive identifiers can cause issues when migrating a MySQL-based application that uses case-insensitive identifiers.

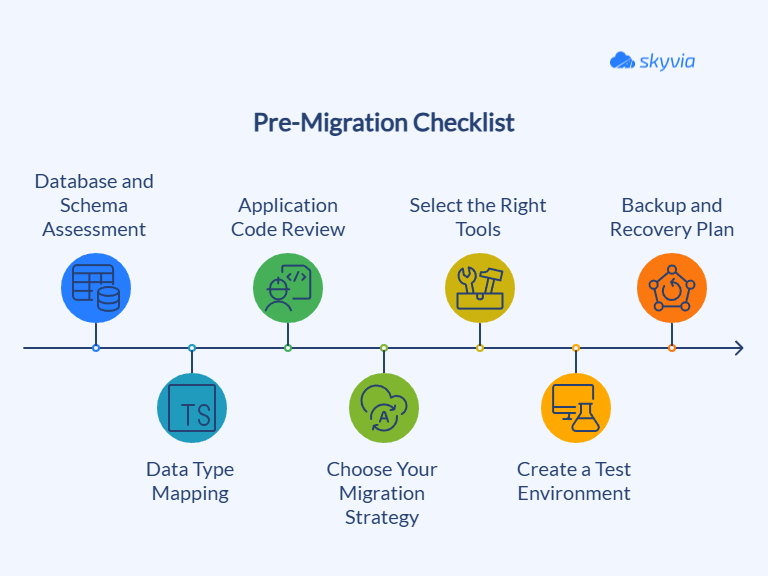

Pre-Migration Checklist: Setting Yourself Up for Success

Before starting a MySQL to PostgreSQL migration, proper planning can prevent costly downtime, data issues, or failed cutovers. The steps below will help you anticipate compatibility challenges, prepare the right tools, and reduce migration risks.

Database and Schema Assessment

Start by taking a complete inventory of your existing MySQL environment:

- List all tables, views, stored procedures, triggers, and indexes.

- Identify MySQL-specific features such as AUTO_INCREMENT, ENUM, or GROUP_CONCAT.

- Check for naming conflicts (reserved words, case sensitivity) and object name length limits (PostgreSQL enforces 63 characters).

Data Type Mapping

Map datatypes in MySQL and PostgreSQL. Some data types have direct matches, while others require conversion.

| MySQL Data Type | PostgreSQL Equivalent | Notes |

|---|---|---|

| TINYINT(1) | BOOLEAN | MySQL uses TINYINT for booleans |

| DATETIME | TIMESTAMP or TIMESTAMPTZ | Adjust for timezone handling |

| TEXT | TEXT | Similar usage in both systems |

| ENUM | CHECK constraint or TEXT | ENUM is not native in PostgreSQL |

| BLOB | BYTEA | Use BYTEA for binary data |

| DOUBLE | DOUBLE PRECISION | Ensure correct precision settings |

| JSON | JSONB | JSONB offers better indexing and performance |

Application Code Review

Your applications may contain MySQL-specific SQL that won’t work in PostgreSQL:

- Search for proprietary functions (NOW(), STR_TO_DATE, CONCAT_WS, etc.) and replace them with PostgreSQL equivalents.

- Review ORM configurations for database-specific optimizations.

- Update query syntax differences (e.g., LIMIT + OFFSET works similarly, but some JOIN behavior differs).

Choose Your Migration Strategy

- Big Bang Migration. Move all data at once. This approach is simple, but requires longer downtime.

- Trickle Migration. Move data incrementally. Requires less downtime but is more complex in sync and validation.

- Offline vs. Online. Offline means the source database is unavailable during migration; online approaches (with tools like FDW or CDC) minimize disruption but require extra monitoring.

Select the Right Tools

There are several opportunities:

- pgloader – Best for automated, one-time migrations with schema and data conversion.

- Foreign Data Wrapper (FDW) – Ideal for phased migrations and live validation.

- Skyvia – A no-code, cloud-based option for fast setup and recurring syncs.

Create a Test Environment

Never migrate directly to production without testing:

- Set up a PostgreSQL staging instance with the same specs as production.

- Run trial migrations and measure performance.

- Test your applications against the staged PostgreSQL database.

Backup and Recovery Plan

Always have a rollback strategy:

- Create a full MySQL backup using mysqldump or native backup tools.

- Test restoring the backup to confirm it works.

- Keep snapshots of both source and target systems before the final cutover.

Methods to Migrate Data from MySQL to PostgreSQL

There is no one-size-fits-all approach to take MySQL databases to PostgreSQL databases. What is best for you will depend on your business requirements, the size of your database, downtime options, and the technical skills of your team. Whether you’re moving a small development database or a mission-critical production system, the goal remains the same: ensure data integrity, minimize disruption, and resolve schema differences before cutover.

| Method | Price | Complexity | Requirements | Features |

|---|---|---|---|---|

| Pgloader | Low | High | Requires PostgreSQL role setup, Linux/macOS/Docker, and command-line skills | Automated schema conversion, bulk data load, parallel processing, error handling |

| Foreign Data Wrapper (FDW) | Low | High | PostgreSQL extension setup, MySQL connectivity, DBA knowledge | Query MySQL directly from PostgreSQL, supports phased migration with minimal downtime |

| Skyvia | Medium | Low | Web browser, valid DB credentials | Cloud-based migration, mapping, automatic schema creation, and scheduling |

Method 1: The Power of pgloader for One-Time Migrations

What is pgloader?

Pgloader is a popular open-source command-line tool designed to migrate data from MySQL to PostgreSQL in one streamlined operation. It automates the creation of tables, indexes, sequences, and constraints, while intelligently handling data type conversions.

When to Use pgloader

Ideal for one-time, “big bang” migrations where downtime is acceptable. Works best for medium to large databases and teams comfortable with command-line tools.

Step-by-Step pgloader Tutorial

Step1. Installation

Choose your installation method based on your operating system.

Ubuntu/Debian

sudo apt update # Updates package lists

sudo apt install pgloader # Installs pgloader from the package repositorymacOS (via Homebrew)

brew install pgloader # Installs pgloader using HomebrewDocker (no installation on host required)

docker run --rm dimitri/pgloader:latest \

pgloader mysql://user:pass@mysql_host/source_db \

postgresql://user:pass@pg_host/target_dbdocker run --rm– Runs a temporary containerdimitri/pgloader:latest– Official pgloader image- The two connection strings specify the MySQL source and PostgreSQL target

Step 2. Create Target Database and Role in PostgreSQL

The commands below create a new PostgreSQL database for migration, add a user (migrator) with login privileges, and grant full privileges on the new database.

CREATE DATABASE target_db;

CREATE ROLE migrator WITH LOGIN PASSWORD 'pass';

GRANT ALL PRIVILEGES ON DATABASE target_db TO migrator;Step 3. Running the Migration

Simple Command-Line Migration

This command transfers schema and data from MySQL to PostgreSQL in one go. It works well for small to medium databases without custom transformations.

pgloader mysql://user:password@mysql_host:3306/source_db \

postgresql://user:password@pg_host:5432/target_dbUsing a Load File for More Control

For better control and customization, you can create a .load file.

LOAD DATABASE

FROM mysql://user:pass@mysql_host/source_db

INTO postgresql://migrator:pass@pg_host/target_db

WITH include drop, create tables, create indexes, reset sequences,

workers = 4, prefetch rows = 1000

SET work_mem to '512MB', maintenance_work_mem to '512MB'

CAST type datetime to timestamptz drop default drop not null using zero-dates-to-null;include drop– Drops existing target tables before migrationcreate tables, create indexes– Rebuilds schema in PostgreSQLreset sequences– Ensures sequence numbers match the dataworkers– Sets parallel workers for faster migrationCAST– Defines custom type conversion (e.g., zero MySQL dates become NULLs)

Run it with:

pgloader migration.loadStep 4. Verifying the Migration

When the migration is completed, check how it went.

The command below confirms a successful connection and basic data integrity.

You can repeat the COUNT(*) check for multiple tables to ensure completeness.

psql -U migrator -d target_db # Connect to the PostgreSQL database

SELECT COUNT(*) FROM some_table; # Verify row count for a migrated tableMethod 2: Using a Foreign Data Wrapper (FDW) for a Phased Migration

What is a Foreign Data Wrapper (FDW)?

A Foreign Data Wrapper lets PostgreSQL query and interact with an external database. In this case, MySQL acts like a part of the local PostgreSQL database. With FDW, you can pull data in gradually, validate it alongside live MySQL data, and cut over when ready.

When to Use an FDW

FDW is ideal for phased migration without extended downtime. It allows you to validate data in parallel before the complete cutover.

Step-by-Step mysql_fdw Guide

Step 1. Enable the FDW Extension

On Ubuntu/Debian

The command below installs the MySQL FDW package for your PostgreSQL version.

sudo apt update

sudo apt install postgresql-<version>-mysql-fdwIn PostgreSQL

Load the FDW extension so PostgreSQL can communicate with MySQL.

CREATE EXTENSION mysql_fdw;Step 2. Create a Foreign Server and User Mapping

- Define an external MySQL server that PostgreSQL can connect to:

CREATE SERVER mysql_svr

FOREIGN DATA WRAPPER mysql_fdw

OPTIONS (host 'mysql_host', port '3306'); - Map the PostgreSQL postgres user to a MySQL account:

CREATE USER MAPPING FOR postgres

SERVER mysql_svr

OPTIONS (username 'mysql_user', password 'mysql_password');Step 3. Import the MySQL Schema into PostgreSQL

The following command creates PostgreSQL table definitions that point to MySQL table metadata. It doesn’t copy data.

IMPORT FOREIGN SCHEMA mysql_db

FROM SERVER mysql_svr

INTO public;Step 4. Query MySQL Data from PostgreSQL

SELECT * FROM some_mysql_table LIMIT 10;Step 5. Migrate Data in Phases

Copy data from the foreign MySQL table into a local PostgreSQL table using the command below with your table names. Repeat this for each table, possibly in batches, until all data is migrated.

INSERT INTO local_table

SELECT * FROM some_mysql_tableStep 6. Drop FDW Connections After Migration

Remove the foreign server definition and associated objects once migration is completed.

DROP SERVER mysql_svr CASCADE;Method 3: The No-Code Approach with Skyvia

As you can see, the methods described above require SQL skills and involve manual intervention that can lead to human errors. However, migrating databases doesn’t always require coding and manual edits. Skyvia provides a fast and no-code way to migrate MySQL to PostgreSQL.

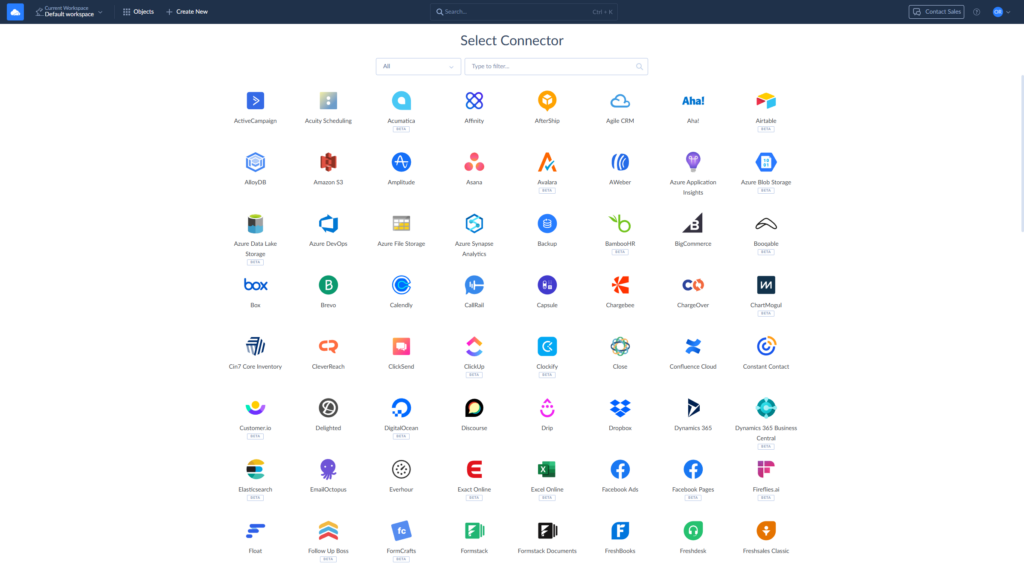

Skyvia is a versatile, no-code cloud data integration platform. It provides ETL, ELT, Reverse ETL, data migration, one-way and bi-directional synchronization, workflow automation, and real-time connectivity services. It enables users to visually manage data flows between 200+ supported sources, including cloud apps, databases, data warehouses, and file storage systems, entirely through a web browser, and with no installation required.

Skyvia Replication tool allows you to copy schema and data from your MySQL database to PostgreSQL and keep it up to date in several simple steps. You just need an active Skyvia account and valid connections to the source and target.

Migrating from MySQL to PostgreSQL with Skyvia

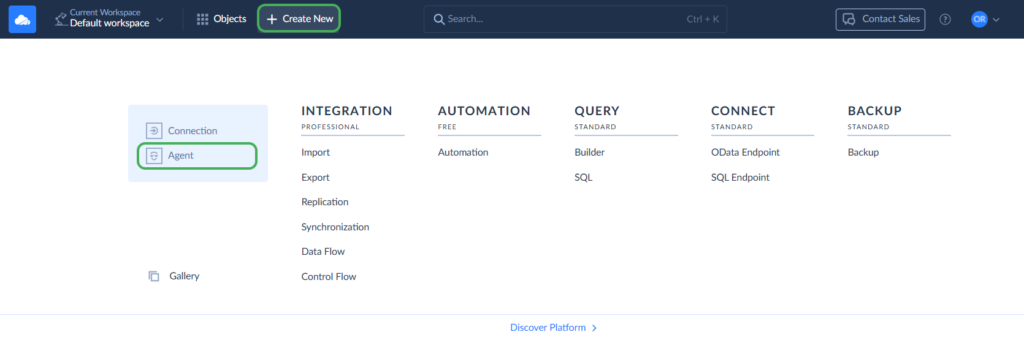

There are two ways to connect to a database with Skyvia: Direct connection and Agent connection. If your database is available from the Internet, use a direct connection. To connect to local database servers, use the Agent connection. Agent is an application that acts as a secure layer between Skyvia and your database.

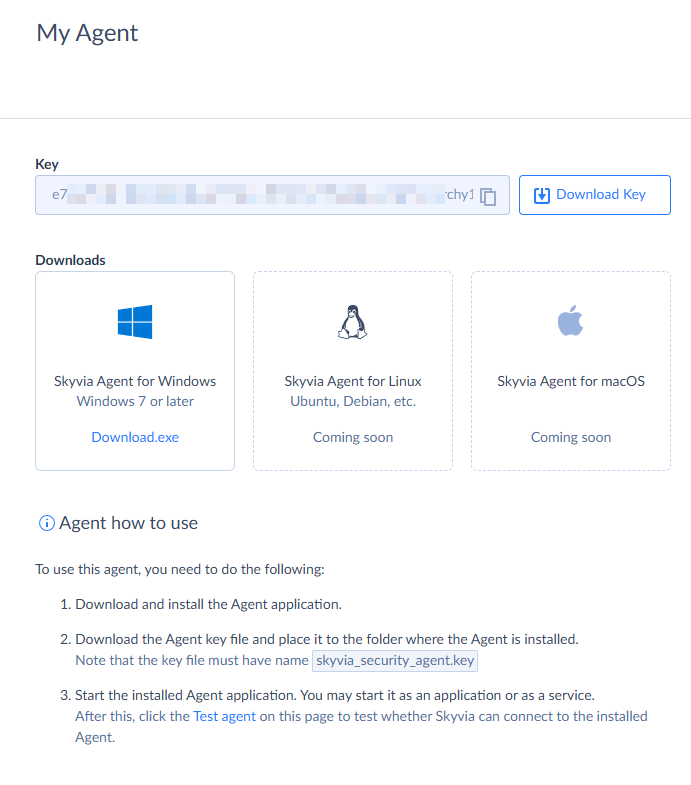

Step 1. Create an agent.

- Click + Create New -> Agent.

- Download the Agent app and install it on the computer where the database is located.

- Copy the Agent’s key and paste it into the Agent’s UI once you run the Skyvia Agent app. Alternatively, download the key file and place it in the Agent app folder, allowing the Agent to detect the key automatically.

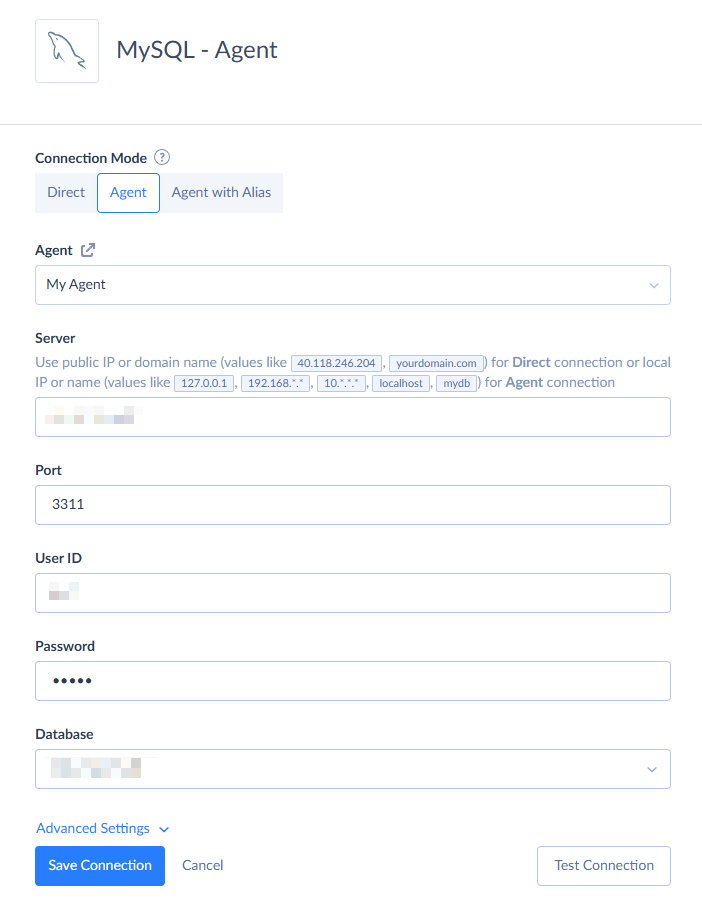

Step 2. Connect to MySQL

- Click + Create New -> Connection and look for MySQL.

- Select the Agent connection mode at the top.

- Choose your Agent from the drop-down list and specify the server, user ID, password, and database to which to connect.

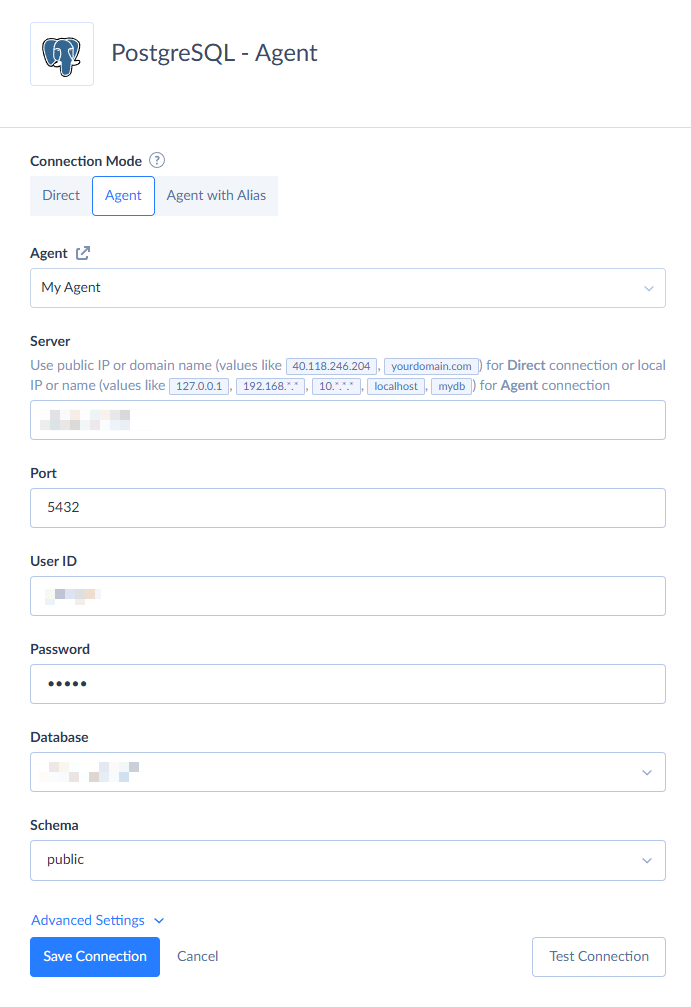

Step 3: Connect to PostgreSQL

- Click + Create New -> Connection and look for PostgreSQL.

- Select the Agent connection mode at the top.

- Choose your Agent from the drop-down list and specify the server, port, user ID, password, database, and schema.

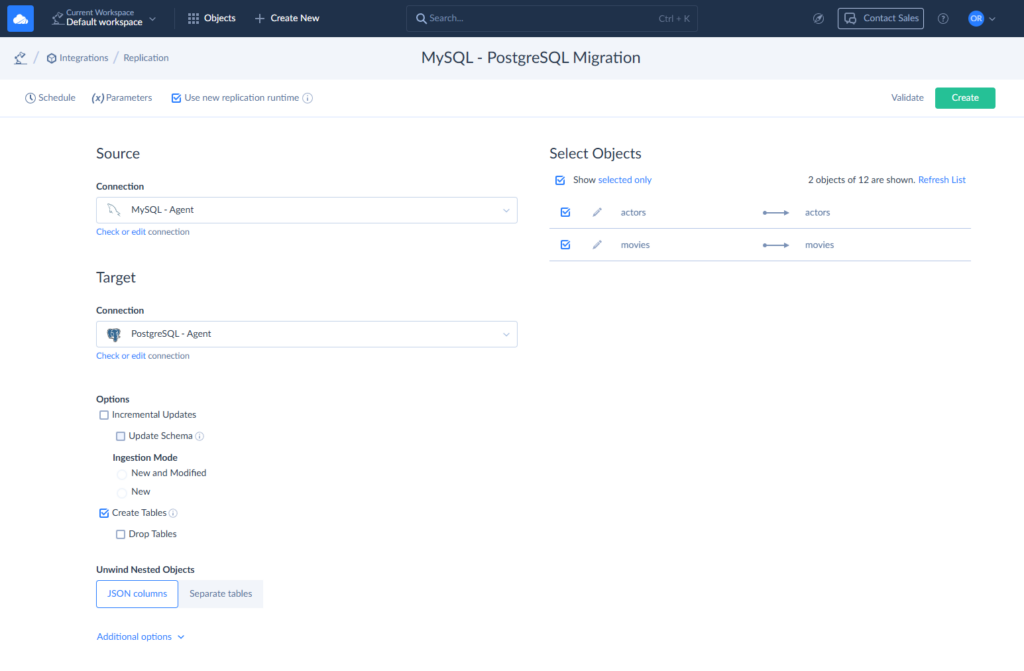

Step 4. Configure the migration

- Create a new replication from the menu.

- Select MySQL as Source and PostgreSQL as Target. Adjust the replication options according to your business needs.

- With the Incremental Updates option enabled, Skyvia detects record changes since the last integration run and applies these changes to your database every run. Use this option with recurrent migrations. We demonstrate a one-time migration, so we disable this option.

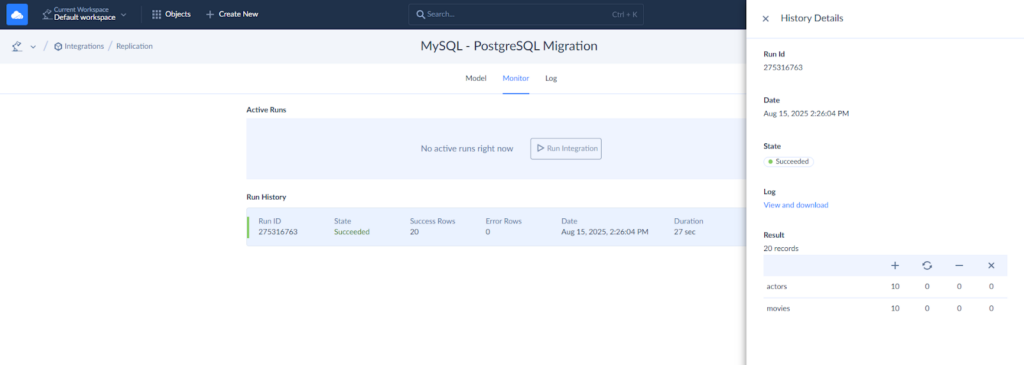

Step 5: Run & Monitor

- Run the replication manually by clicking the corresponding button in the top right corner. Or schedule the integration to run automatically. You can schedule it to run every day or on specific weekdays, every few hours or minutes.

- Check the migration results on the Monitor or Log tabs. Just click the needed run.

Why Choose Skyvia?

- Skyvia is cloud-based, so you don’t have to install anything on your servers or local computer.

- It requires no coding and has a user-friendly UI. Thus, the manual involvement is minimal.

- It allows you to set up scheduled integrations that pull only updates without risk of data duplication.

- You can build complex flows and implement advanced scenarios involving more than two databases.

Post-Migration: The Job’s Not Done Yet

A successful cutover to PostgreSQL isn’t the end of the migration story. You need to ensure everything went smoothly.

Data Validation

After migration, your first task is to confirm that the data in the source matches the data in the target.

- Check the row counts. Use SELECT COUNT(*) on both systems for each table.

- Randomly sample rows and compare field-by-field between old and new databases.

- Ensure foreign keys, unique constraints, and check constraints are intact in PostgreSQL.

Performance Testing

Now that your data is in PostgreSQL, it’s time to ensure queries and transactions run efficiently.

- Analyze query execution plans and adjust indexes or rewrite queries if necessary. Use EXPLAIN / EXPLAIN ANALYZE.

- Run REINDEX to optimize indexes post-migration.

- Execute ANALYZE to refresh optimizer statistics, improving query planning accuracy.

- Configure tools like PgBouncer for efficient connection handling in production.

Application Cutover

Migrating your applications to PostgreSQL entails more than just updating a connection string.

- Update Drivers & Connection Strings. First, you will need to replace your MySQL drivers with PostgreSQL-compatible drivers.

- Ensure that SQL syntax updates are captured in any ORM queries, stored procedures, or the like.

- Test with production workloads to ensure you can do what you need, before migrating.

- And finally, keep your MySQL instance in read-only mode for a while, just in case a rollback is necessary.

Decommission MySQL

Once PostgreSQL is validated in production:

- Disable writing permissions in MySQL to prevent accidental data changes after the migration.

- Keep final MySQL backups for compliance and historical queries.

- Decommission MySQL servers to reduce maintenance and costs.

- Record lessons learned, configuration changes, and performance improvements for future reference.

Conclusion

Transitioning from MySQL to PostgreSQL in 2025 is a tactical decision with the potential to scale better, acquire additional functionality, and save you money over the long term. Ultimately, whether you prefer a quick transfer with pgloader, a phased transition with Foreign Data Wrapper (FDW), or a no-code migration with Skyvia, the right option to choose depends on your use case, the size of your database, and the technical skill level of your in-house staff.

As long as you follow a solid pre-migration checklist, choose the migration method based on your needs, and complete a solid post-migration validation discussion, you will be assured that your new PostgreSQL data ecosystem will serve your current workloads and future-proof your growth.

For teams seeking a low-risk, fast, and user-friendly option, Skyvia offers an intuitive, cloud-based way to convert MySQL to PostgreSQL with no coding required.

Next step: If you’re ready to start your migration, try Skyvia’s database migration solution and see how quickly you can move your MySQL database to PostgreSQL.

What are the most common challenges?

Data type incompatibilities, SQL syntax differences, invalid data formats, and case sensitivity issues.

Is zero-downtime migration possible?

Yes, with FDW or Skyvia recurring syncs.

How long does it take to migrate a MySQL database to PostgreSQL?

From hours for small databases to several days for large, mission-critical ones.

What is the easiest way?

Using Skyvia’s no-code platform for quick, automated migration.