Every year, the amount of data companies push into AWS seems to double. S3 buckets fill up with logs and event streams, Redshift clusters power more analytics, and RDS, Aurora, and DynamoDB quietly run the backbone of day-to-day operations. AWS makes it incredibly easy to store data.

But getting that data into the right shape, in the right place, at the right time is where things get tricky.

And this is why choosing the right ETL tool matters so much. The wrong choice can drag down performance, blow up your AWS bill, or lock you into workflows that are painful to unwind later.

The right one helps you:

- Scale smoothly.

- Keep costs predictable.

- Build pipelines that don’t collapse the moment your traffic spikes or the schema shifts.

With so many AWS-native services and third-party platforms in the mix, the landscape can feel a bit overwhelming.

That’s what this article is here to solve.

We’ll walk through the leading AWS ETL tools, both Amazon’s own offerings and popular third-party alternatives, and break them down by the criteria teams actually care about:

- Scalability.

- Cost.

- Ease of use.

- Maintenance load.

- Transformation power.

- Long-term flexibility.

By the end, you’ll have a much clearer picture of which tool actually fits your AWS environment and the way your team works, whether you’re running a lean startup or a multi-petabyte enterprise stack.

Table of contents

- What is AWS ETL

- Top AWS ETL Tools: A Comparative Review (2026)

- How to Choose the Best AWS ETL Tool

- Native AWS ETL Tools Included in the ETL Process

- Conclusion

- FAQ

What is AWS ETL

In data processing, Extract, Transform, and Load (ETL) means extracting data from various sources, transforming it to make it usable and insightful, and loading it into a destination like a database, data warehouse, or data lake. A key advantage of running ETL in the cloud is scalability, often at a lower cost than on-premises alternatives.

Amazon Web Services (AWS) provides many native services for extracting, transforming, and loading data within the AWS ecosystem. Each tool is designed for different purposes and provides its own set of supported data sources, use cases, and pricing models.

Let’s discuss the most popular AWS ETL tools, both native and third-party, in detail and look at their advantages and limitations.

Top AWS ETL Tools: A Comparative Review (2026)

With a growing mix of native AWS services and third-party platforms, choosing the right ETL tool can be overwhelming. This section breaks down the top options, comparing their strengths, limitations, and ideal use cases.

| Tool | Ease of Use | Primary Pattern (ETL/ELT) | Pricing Model | Key AWS Integrations | Best Use Case |

|---|---|---|---|---|---|

| Skyvia | Very High — visual builder, minimal setup | ETL / ELT hybrid | Subscription (predictable) | S3, Redshift, RDS, Aurora + 200+ SaaS & DBs | No-code cross-source integrations and automated data pipeline |

| AWS Glue | Medium — requires Spark/PySpark skills | ETL (Spark) | Pay-as-you-go (DPUs & runtime) | S3, Redshift, Athena, Glue Catalog | Large-scale batch ETL with custom logic |

| AWS Data Pipeline | Low–Medium | ETL orchestration | Activity-based pricing | EC2, EMR, RDS, DynamoDB | Legacy batch workflows |

| Fivetran | Very High | ELT | Subscription (rows/month) | S3, Redshift, Snowflake, BigQuery | Zero-maintenance ingestion |

| Stitch | High | ELT | Subscription (Monthly Active Rows) | S3, Redshift, Athena | Zero-maintenance ingestion |

| Hevo Data | Very High — visual pipelines | ELT | Subscription (events/rows) | S3, Redshift, RDS, Aurora | SaaS-centric real-time pipelines |

| Talend Data Fabric | Moderate-low — enterprise | ETL/ELT with governance | Tiered enterprise pricing | 80+ AWS connectors available | Complex integration + governance |

| Informatica | Moderate — enterprise product | ETL (broad patterns) | Enterprise license | Broad cloud support incl AWS | Large enterprise, data governance |

| Integrate.io | High — drag-drop UI | ETL/ELT + CDC + Reverse ETL | Tiered or fixed fee | Integrates with AWS targets via connectors | Unified pipelines with transformation |

| Airbyte | Medium | ELT | Open-source or cloud pricing | S3, Redshift, Snowflake | Teams needing customizable connectors |

1. Skyvia

Skyvia is a leading cloud-native, no-code platform that streamlines ETL workflows between diverse cloud applications and the AWS ecosystem, including Redshift, S3, and RDS.

While Fivetran focuses primarily on ELT and AWS Glue targets enterprise Spark-based workloads, Skyvia stands out with its intuitive visual interface, wide connector coverage (200+), and all-in-one integration toolkit for AWS and SaaS ecosystems. It’s especially suited for teams without deep data engineering resources who still need robust ETL capabilities.

Pros

- No-code visual interface with drag-and-drop pipeline builder

- Supports 200+ connectors, including AWS Redshift, RDS, and S3

- Includes reverse ETL and cloud backup features — unlike Fivetran, which focuses only on ELT

- Free tier available with full-featured functionality for small-scale needs

Cons

- Focused on scheduled and incremental syncs rather than real-time streaming

- Advanced features like custom scripting and logging require a Professional plan

- Fewer community tutorials and templates than older, enterprise-focused platforms

Pricing

Starts at $79/month for Standard Data Integration

Professional plans start at $399/month, and a tailor-made offer is available for enterprise needs.

Best For

SMBs and mid-sized teams looking for a visual, all-in-one ETL/ELT platform with deep AWS + SaaS integration — especially when dedicated data engineering resources are limited.

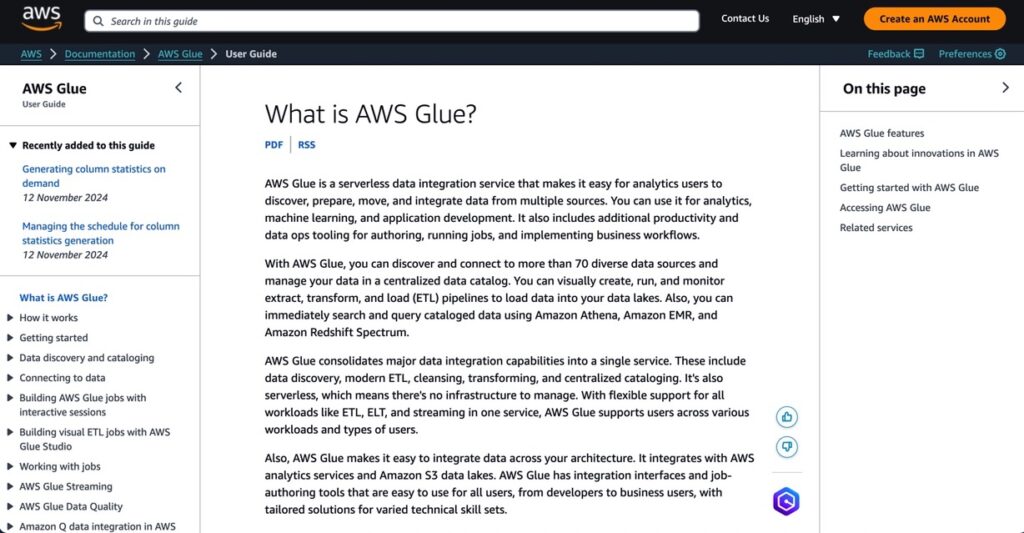

2. AWS Glue

G2 Crowd: 4.2/5

AWS Glue is Amazon’s heavy-duty, Spark-based ETL engine built for large-scale processing inside the AWS ecosystem. It really comes into its own when you’re crunching big volumes of data across S3, Redshift, Athena, or Lake Formation and need distributed compute that scales on demand. Unlike visual, no-code tools such as Skyvia or Matillion, Glue assumes an engineering-first setup. You’re writing PySpark or Scala, tuning jobs, and keeping an eye on DPUs yourself. And while Fivetran focuses on hands-off ELT into warehouses, Glue gives you far more control over transformations, at the cost of added complexity. It’s powerful, but it’s not the kind of tool you pick if you want quick wins or minimal setup.

Pros

- Serverless architecture means no cluster provisioning

- Deepest integration with AWS storage and cataloging tools

- New Spark 3.5 engine in Glue 5.0 improves job performance by ~32%

- Built-in metadata management through Glue Data Catalog

Cons

- Steep learning curve and AWS-specific complexity

- Costs can grow quickly — $0.44 per DPU-hour can add up with large workloads

- No built-in reverse ETL or direct SaaS destination support

Pricing

Pay-as-you-go model starting at $0.44 per DPU-hour

Glue 5.0 introduces optimizations that can reduce costs by up to 22% compared to earlier versions
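To see how DPU-hour billing adds up, here is a rough back-of-the-envelope sketch. It uses the $0.44 rate quoted above; the job size, runtime, and schedule are hypothetical, and it ignores details such as minimum billing durations and regional price differences, so treat the numbers as an estimate rather than a quote.

```python
# Rate quoted in this article; actual rates vary by region and job type.
GLUE_RATE_PER_DPU_HOUR = 0.44


def glue_job_cost(dpus, runtime_minutes, rate=GLUE_RATE_PER_DPU_HOUR):
    """Approximate cost in USD of a single job run."""
    dpu_hours = dpus * (runtime_minutes / 60)
    return round(dpu_hours * rate, 2)


def monthly_cost(dpus, runtime_minutes, runs_per_day, days=30,
                 rate=GLUE_RATE_PER_DPU_HOUR):
    """Approximate monthly cost in USD for a job on a fixed schedule."""
    dpu_hours = dpus * (runtime_minutes / 60) * runs_per_day * days
    return round(dpu_hours * rate, 2)


# Hypothetical workload: 10 DPUs, 30-minute runs, once per hour.
per_run = glue_job_cost(dpus=10, runtime_minutes=30)
per_month = monthly_cost(dpus=10, runtime_minutes=30, runs_per_day=24)
print(f"per run: ${per_run}, per month: ${per_month}")
```

Under these assumptions a single run costs about $2.20, but the hourly schedule brings the month to roughly $1,584, which is why run frequency often matters more than the per-hour rate.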

Best For

Large-scale enterprises already embedded in AWS needing powerful, serverless ETL with Spark — ideal for batch processing at petabyte scale and tight AWS-native integration.

3. AWS Data Pipeline

G2 Crowd: 4.1/5

AWS Data Pipeline is one of AWS’s oldest native tools, designed mainly for scheduling and orchestrating batch data movement. Compared to AWS Glue, it’s far less about heavy transformations and more about controlling when and how jobs run. And unlike visual platforms such as Skyvia, it comes with a dated interface and no real SaaS connectivity. Where it still makes sense is in stable, legacy setups that rely on predictable schedules, retries, and dependency management, but for anything modern or evolving, its limitations show up pretty quickly.

Pros

- Works well for legacy AWS batch environments

- Reliable for periodic ingestion and movement tasks

- Good for teams already invested in EMR pipelines

Cons

- UI and tooling are outdated

- Limited modern connector support

- Lacks the automation and no-code experience offered by newer tools

Pricing

$1.00/month for low-frequency pipelines; $3.00/month for high-frequency ones

Additional AWS resource usage (EC2, S3, etc.) billed separately

Best For

Teams already committed to AWS, looking for reliable, low-cost batch ETL orchestration that doesn’t require real-time delivery or frequent scaling.

4. Fivetran

G2 Crowd: 4.2/5

Fivetran is a fully managed, ELT-first platform built around the idea of getting data into the warehouse as fast and hands-off as possible. Unlike AWS Glue, there’s no Spark to manage, and compared to Skyvia, transformations don’t really happen before load — data lands in Redshift first and gets shaped downstream. Where Fivetran really shines is in its low-maintenance connectors and automatic schema handling, but that convenience comes with less flexibility when you need custom or pre-load transformations.

Pros

- True “set it and forget it” managed pipelines

- Large library of prebuilt SaaS and database connectors

- Automatic schema drift handling and incremental syncs

- Strong reliability and minimal operational overhead

Cons

- Pricing based on Monthly Active Rows (MAR) can escalate quickly with frequent updates/deletes

- Limited transformation capabilities compared to Matillion or Skyvia

- Warehouse-dependent ELT may increase compute costs for heavy workloads

Pricing

Starts at ~$120/month for low-volume usage

Enterprise plans vary based on usage and support needs; can reach several thousand per month at scale

Best For

Organizations with a mature data stack prioritizing automation, reliability, and low-lift integration — especially for syncing cloud apps into Redshift or Snowflake.

5. Stitch

G2 Crowd: 4.4/5

Stitch is a lightweight, cloud-native ELT tool that keeps things intentionally simple. Unlike broader platforms like Talend or more flexible ETL tools like Skyvia, Stitch focuses almost entirely on ingestion, making it easy to roll out when all you need is data flowing into Redshift or S3. You won’t get deep transformations or governance controls here, but you will get quick setup, predictable pricing, and solid compliance — a fair trade-off for teams that value speed over customization.

Pros

- Very simple, no-code setup with 140+ connectors

- Predictable, row-based pricing model

- Strong compliance posture (SOC 2, HIPAA, GDPR, ISO 27001)

- Built on the open-source Singer standard, allowing custom extensions

Cons

- Minimal built-in transformation; better suited for ELT pipelines — complex logic needs external tools

- No support for row-level filtering or advanced schema validation

- Some connectors may experience sync lags with large data volumes or changing APIs

- No free tier beyond trial; entry pricing may deter smaller teams

Pricing

- Standard Plan: $83.33–$100/month (up to 5M rows)

- Advanced: $1,250/month

- Premium: $2,500/month (annual billing)

- Enterprise: Custom pricing

- Free Trial: 14 days (no credit card)

Best For

Data teams prioritizing simplicity, compliance, and broad SaaS/app connector coverage over complex transformation needs. Ideal for mid-sized companies replicating data to AWS Redshift or S3 on a regular cadence.

6. Hevo Data

G2 Crowd: 4.3/5

Hevo Data is a fully managed, no-code ETL/ELT tool with a strong focus on near real-time CDC. Compared to Skyvia, which combines CDC with scheduled syncs, transformations, and broader pipeline control, Hevo keeps the experience simple and stream-oriented. It’s a good choice when data freshness is the top priority, though more complex transformation logic may still require extra work.

Pros

- Real-time CDC with automated schema handling

- 150+ sources across SaaS, databases, and cloud platforms

- Built-in transformations for basic enrichment

- Strong monitoring, alerting, and failure recovery

Cons

- UI can feel crowded as pipelines grow more complex

- Advanced transformations may require external processing

- Event-based pricing can grow quickly with high-frequency data

Pricing

- Free Tier: 1M events/month

- Starter Plan: ~$239/month (20M events/month)

- Business & Enterprise: Custom quotes based on volume, SLA, and support

- Offers scaling based on events, not rows — good for sync-heavy use cases

Best For

Teams needing real-time or near-real-time data pipelines into AWS services like Redshift, S3, or Snowflake — especially for analytics dashboards and live ops monitoring. Strong fit for data-driven orgs without in-house ETL engineering.

7. Talend Data Fabric

G2 Crowd: 4.4/5

Talend Data Fabric is an all-in-one data integration and governance platform built for companies dealing with complex or regulated data. While tools like Stitch or Fivetran focus mainly on getting data from A to B, Talend adds data quality checks, lineage, and master data management into the mix. It’s powerful, but that power comes with more setup, more learning, and a higher price tag — best suited for teams that really need that level of control.

Pros

- Complete data management suite: ETL + Data Quality + Governance + MDM

- Supports multi-cloud and hybrid deployments across AWS, Azure, GCP, and on-prem

- Strong data transformation capabilities, including cleansing, masking, and enrichment

- Includes data lineage and audit trails, critical for compliance-heavy industries

- Backed by a large open-source community (Talend Open Studio) and enterprise support

Cons

- Higher complexity and longer onboarding time compared to no-code tools like Hevo or Skyvia

- Total cost of ownership is high — includes licensing, infrastructure, and support costs

- Some features gated behind premium tiers or available only in enterprise edition

Pricing

- No public pricing; contact sales for custom quotes

- Talend Open Studio (free, open source) available with limited support/features

Best For

Enterprises needing deep transformation, strict data governance, and hybrid cloud control — especially in regulated industries (finance, healthcare, manufacturing) with compliance and audit requirements.

8. Informatica

G2 Crowd: 4.4/5

Informatica is an enterprise-grade platform with decades of data integration expertise. Unlike open-source options like Airbyte or lightweight solutions like Stitch, Informatica excels in complex, governed ETL/ELT pipelines for large-scale enterprises.

Its suite includes AI-powered automation, granular security controls, and deep metadata management — ideal for hybrid and multi-cloud environments requiring bulletproof compliance and end-to-end governance.

Pros

- Proven enterprise leader with robust ETL and data governance capabilities

- Extensive connector ecosystem covering AWS, SaaS, legacy, and on-prem systems

- Advanced features for data quality, lineage, masking, and cataloging

- Highly scalable and secure, built for enterprise and regulated industries

Cons

- Steep learning curve and complex architecture not suited for smaller teams

- High total cost of ownership; requires dedicated IT/data ops resources

- Some modules/features are gated behind premium tiers

Pricing

- Contact sales for quote

- Cloud Integration entry-level plans start around $2,000/month

- Free trials available for select products

Best For

Large enterprises that require robust ETL + governance + security, particularly across hybrid or multi-cloud deployments. Ideal for regulated sectors like healthcare, finance, or government.

9. Integrate.io

G2 Crowd: 4.3/5

Integrate.io is a no-code/low-code ETL platform that emphasizes ease of use for cloud data movement. While Talend offers more enterprise-grade governance, Integrate.io shines with its drag-and-drop simplicity and support for real-time streaming, which makes it appealing to data teams without heavy engineering overhead. It’s a flexible middle ground between lightweight tools like Stitch and heavyweight platforms like Informatica.

Pros

- Intuitive visual interface with no-code pipeline builder

- Supports 140+ data sources, including AWS, cloud apps, and SQL/NoSQL databases

- Handles both batch ETL and real-time streaming workflows

- Transparent pricing model with usage-based scaling

- Built-in transformation and enrichment capabilities

Cons

- Advanced custom workflows may require scripting or API workarounds

- UI can feel constrained for very complex, branching data logic

- Pricing can escalate with high-volume or high-frequency jobs

Pricing

- Starts at $1,250/month

- Free trial available

- Usage-based scale-up available for high-volume needs

Best For

SMBs and mid-market orgs seeking a visual, low-maintenance ETL tool to move data into AWS services (Redshift, S3, etc.) — without building pipelines from scratch.

10. Airbyte

G2 Crowd: 4.5/5

Airbyte is an open-source ELT platform that gives teams a lot of freedom under the hood. Unlike fully managed tools such as Hevo Data, Airbyte lets you self-host, customize connectors, and control how pipelines run. It’s a solid pick for teams with engineering muscle that want flexibility and don’t want to feel boxed in by vendor limits as they scale.

Pros

- Highly customizable, especially for niche APIs

- Open-source version avoids SaaS subscription costs

- Cloud option adds automated scaling and easier management

Cons

- Open-source version requires self-hosting and monitoring

- Not as turnkey as Fivetran or Skyvia

- Cloud pricing is usage-based and can become unpredictable

Pricing

- Open Source: Free (self-hosted)

- Cloud: Starts at $2.50/credit; includes free tier for low-volume usage

- Enterprise: Custom pricing available

Best For

Teams seeking open-source extensibility, or companies with unique connector needs not met by proprietary tools. Ideal for orgs that want to own their ETL pipeline architecture and scale affordably with internal DevOps support.

How to Choose the Best AWS ETL Tool

Selecting the right AWS ETL tool is more than a technical decision — it’s a strategic choice that can shape your data infrastructure’s scalability, agility, and total cost of ownership.

In 2026, with more tools and hybrid architectures than ever, it’s crucial to align your choice with your data volumes, team capabilities, and long-term goals.

Here are the key factors to consider when evaluating your ETL options:

1. Assess Your Data Volume and Load Frequency

Start with the basics: how much data do you process, and how often?

- If you’re working with real-time data streams (e.g., IoT sensors, in-app user events), prioritize ETL tools with streaming or near real-time ingestion support like Hevo or Airbyte.

- For scheduled batch jobs, such as daily sales reports or weekly data syncs, tools like AWS Glue or Skyvia may offer a better price-performance balance.

The right tool should scale seamlessly as your data grows — without hitting performance bottlenecks.

2. Identify the Complexity of Your Transformations

Not all ETL pipelines are created equal.

- If your workflows involve simple data cleaning, type conversions, or formatting, lightweight tools with visual designers (e.g., Skyvia, Stitch) will suffice.

- For complex joins, aggregations, or advanced logic, choose platforms like Informatica or Talend, which offer powerful transformation engines and support for custom scripting.

Some tools are ELT-first (like Fivetran), while others offer true in-pipeline ETL, which may better suit data governance and compliance workflows.

3. Consider Where Your Data Lives

Where your data lives — and where it’s going — matters.

- If you’re fully embedded in AWS (Redshift, S3, RDS), native services like Glue or Data Pipeline offer tight integration and minimal friction.

- For hybrid or multi-cloud environments, look for tools with a broad range of connectors (e.g., Hevo, Airbyte, Skyvia) and support for cross-platform transfers.

Choose based on current architecture and future platform flexibility.

4. Prioritize Ease of Use and Maintenance vs. Customization

Evaluate your team’s skill set and bandwidth.

- No-code or low-code tools like Integrate.io, Skyvia, or Hevo are great for teams with limited data engineering resources.

- Developer-focused platforms like Airbyte or AWS Glue offer deeper control but require more technical overhead.

The right balance depends on whether your team wants rapid delivery or fine-tuned customization.

5. Evaluate Cost and Flexibility

ETL pricing models vary widely — by row, by event, by compute usage.

- Tools like Stitch and Fivetran price by Monthly Active Rows or events, which can escalate rapidly with high-volume updates.

- Others like AWS Glue charge per DPU-hour, while Skyvia uses predictable flat-tier pricing.

Always consider not just base cost, but also scaling behavior, support costs, and overage risks.
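The difference between row-based and event-based metering is easier to see with a toy change log. The sketch below uses deliberately simplified definitions that are assumptions for illustration only: an "active row" is a distinct (table, primary key) pair touched during the month, and an "event" is every individual change. Real vendor billing rules are more detailed, so check each vendor's documentation before estimating a bill.

```python
from collections import namedtuple

# Hypothetical change record; the field names are illustrative only.
Change = namedtuple("Change", ["table", "primary_key", "op"])


def monthly_active_rows(changes):
    """Count distinct (table, primary_key) pairs touched at least once."""
    return len({(c.table, c.primary_key) for c in changes})


def event_count(changes):
    """Count every individual change, including repeats on the same row."""
    return len(changes)


changes = [
    Change("orders", 1, "insert"),
    Change("orders", 1, "update"),
    Change("orders", 1, "update"),
    Change("orders", 2, "insert"),
    Change("users", 1, "update"),
]

print(monthly_active_rows(changes), event_count(changes))
```

With this log, the simplified row-based count is 3 and the event-based count is 5. Repeated updates to the same row inflate the event count, while changes spread across many distinct rows inflate both.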

6. Look for Built-In Monitoring and Error Handling

When something breaks — and it will — visibility is key.

- Look for tools with built-in dashboards, logging, and retry mechanisms.

- Platforms like Hevo and Skyvia offer intuitive error tracking and alerting, while Airbyte’s open-source model gives full control over log pipelines.

This is essential for production-grade pipelines and SLA compliance.

7. Determine if You Need Real-Time Processing

If your use case involves live dashboards, customer behavior modeling, or fraud detection, batch ETL won’t cut it.

- Choose tools with real-time CDC (Change Data Capture) capabilities, such as Hevo, Airbyte, or Fivetran (for certain sources).

For less time-sensitive workloads, scheduled batch ETL tools may be more cost-efficient.

8. Consider How Much Control You Want Over the Infrastructure

Do you want to control the compute layer or let the tool handle it?

- Serverless options like AWS Glue minimize infrastructure management.

- If you prefer custom compute tuning or need to optimize cost/performance trade-offs, consider tools like Talend or self-hosted Airbyte.

This choice often depends on your organization’s DevOps maturity and compliance requirements.

9. Assess Integration with Analytics and Storage Services

Your ETL tool should fit cleanly into your broader data stack.

- Ensure compatibility with AWS-native storage (S3, Redshift, RDS) and analytics platforms (Athena, QuickSight, etc.).

- Some tools, like Skyvia and Hevo, also offer reverse ETL, enabling you to sync transformed data back into operational systems.

AWS ETL Best Practices

When teams start scaling ETL on AWS, most issues don’t come from the tools themselves – they come from small architectural choices that quietly compound over time. A few practical best practices can save you a lot of cleanup later.

- Whether you rely on AWS-native metadata services or maintain a shared catalog across tools, having a single source of truth for schemas, tables, and ownership makes everything downstream easier. It speeds up onboarding, reduces broken pipelines caused by silent schema changes, and keeps analytics teams from second-guessing which table is the “right” one. In practice, teams that invest in cataloging spend less time firefighting and more time shipping.

- Cost monitoring deserves just as much attention as performance. AWS ETL costs tend to creep rather than spike. Extra DPUs here, longer-running jobs there, and suddenly the bill looks very different. Set up budgets and alerts early, review job runtimes regularly, and don’t assume “serverless” means “cheap by default.” Optimizing job frequency and right-sizing workloads often has more impact than switching tools.

- IAM roles are another area where cutting corners comes back to bite. It’s tempting to reuse broad roles to move faster, but least-privilege access pays off quickly. Clear, well-scoped IAM roles make pipelines easier to audit, safer to operate, and far less painful to debug when something breaks. Think of IAM as part of your ETL design, not just security plumbing.

- Not every workload needs Spark, and not every pipeline benefits from heavy parallelism. Batch jobs, CDC pipelines, and transformation-heavy workloads all behave differently. Matching compute to the actual data pattern – not the hype – usually leads to better performance and lower costs.

- Favor incremental loads whenever possible. Full reloads might feel simpler at first, but they don’t scale well and tend to hide data quality issues. Incremental strategies, whether timestamp-based or CDC-driven, reduce load times, lower costs, and make pipelines more resilient. Once teams switch to incremental thinking, it’s rare they want to go back.
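As a minimal sketch of the timestamp-based incremental strategy mentioned above, the example below uses two in-memory SQLite databases as stand-ins for a source and a destination. The `orders` table, its columns, and the `incremental_load` helper are hypothetical; a real pipeline would persist the watermark somewhere durable and account for late-arriving or same-timestamp rows.

```python
import sqlite3


def incremental_load(src, dst, watermark):
    """Copy rows changed after `watermark` and return the new watermark."""
    rows = src.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE acts as an upsert keyed on the primary key.
    dst.executemany(
        "INSERT OR REPLACE INTO orders (id, amount, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    dst.commit()
    # Advance the watermark only if something was actually loaded.
    return rows[-1][2] if rows else watermark


src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
    )

src.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2026-01-01T00:00:00"), (2, 20.0, "2026-01-02T00:00:00")],
)

# First run: everything is newer than the initial watermark.
watermark = incremental_load(src, dst, "1970-01-01T00:00:00")

# Later, one source row changes; only that row is re-read on the next run.
src.execute(
    "UPDATE orders SET amount = 25.0, updated_at = '2026-01-03T00:00:00' WHERE id = 2"
)
watermark = incremental_load(src, dst, watermark)
```

The second run reads a single row instead of the whole table, which is where the savings in load time and cost come from. ISO 8601 timestamps are used here because they sort correctly as plain strings.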

Native AWS ETL Tools Included in the ETL Process

For organizations working primarily within the AWS ecosystem, native ETL tools offer tight service integration, scalability, and cost-efficiency — especially when building pipelines around Redshift, S3, or RDS. Here are five core AWS-native tools commonly used in ETL workflows, along with their ideal usage scenarios:

1. AWS Glue

Best for: Serverless, large-scale ETL, and data lake preparation

AWS Glue is AWS’s flagship ETL tool, purpose-built for transforming, cleaning, and moving data across the AWS stack. It automatically catalogs source data using the Glue Data Catalog and leverages Apache Spark under the hood for distributed processing.

Glue is serverless, so it handles job orchestration, scaling, and infrastructure, reducing DevOps overhead. It’s especially useful for preparing semi-structured data (JSON, Parquet, etc.) from S3 into analytics-ready formats for Redshift, Athena, or SageMaker.

When to use it:

- Batch ETL at scale

- Building data lakes or pipelines for ML/BI

- Transforming large semi-structured datasets

2. AWS Glue DataBrew

Best for: No-code data prep for analysts and citizen data users

AWS Glue DataBrew brings data transformation to non-technical users through a visual, no-code interface. With over 250 built-in operations (deduplication, normalization, joins, etc.), users can prep data without writing a line of code.

It integrates seamlessly with the Glue Data Catalog, S3, Redshift, and SageMaker — making it a great option for cleaning datasets before feeding them into ML workflows or BI dashboards.

When to use it:

- Analyst-friendly data prep with no-code UX

- Cleaning and standardizing structured or semi-structured data

- Preparing input datasets for machine learning pipelines

3. AWS Data Pipeline

Best for: Scheduled batch workflows with AWS-native orchestration

AWS Data Pipeline is a workflow automation tool for orchestrating ETL jobs across AWS and on-prem systems. You can schedule tasks, define dependencies, and add retry logic — similar to tools like Apache Airflow, but tightly coupled with AWS.

While more manual and less modern than Glue, it remains effective for regular batch processes like nightly backups, table syncs, or job chaining across EC2, EMR, RDS, and S3.

When to use it:

- Time-based ETL workflows

- Moving data between AWS services or from on-prem systems

- Lightweight orchestration of SQL scripts or EMR jobs
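The three ideas behind this kind of orchestration (ordering, dependencies, and retries) can be illustrated in a few lines of plain Python. This is not the AWS Data Pipeline API or its definition format; the `run_pipeline` helper and the task names are hypothetical and only show the concept.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each failed task.

    `dependencies` maps a task name to the set of tasks it depends on.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    completed = []
    for name in order:
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception:
                # Give up once the retry budget is exhausted.
                if attempt == max_retries:
                    raise
    return completed


attempts = {"transform": 0}


def extract():
    pass


def transform():
    # Simulate a transient failure on the first attempt only.
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise RuntimeError("transient failure")


def load():
    pass


tasks = {"extract": extract, "transform": transform, "load": load}
dependencies = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
result = run_pipeline(tasks, dependencies)
```

Here `transform` fails once, is retried, and `load` runs only after it succeeds. A managed orchestrator adds scheduling, resource provisioning, and persistence on top of the same basic model.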

4. Amazon EMR (Elastic MapReduce)

Best for: Custom, code-heavy big data ETL at scale

Amazon EMR is a managed cluster platform for running open-source big data frameworks like Apache Spark, Hadoop, Hive, and Presto. It offers full control over cluster configuration and supports highly customizable ETL workflows.

Unlike Glue’s abstracted serverless model, EMR is ideal when you need to fine-tune performance, install custom libraries, or migrate existing Spark/Hadoop jobs to AWS.

When to use it:

- Custom big data pipelines with complex transformations

- Migrating legacy Spark/Hadoop ETL jobs to the cloud

- Scenarios requiring full infrastructure control and optimization

5. Amazon Kinesis Data Firehose

Best for: Real-time ingestion and delivery to AWS targets

Kinesis Data Firehose is AWS’s real-time streaming ingestion tool, used to capture and load high-velocity data into S3, Redshift, or OpenSearch. It handles buffering, batching, and retry logic automatically and supports lightweight data transformations using AWS Lambda.

While not a full ETL tool, Firehose is a key component in streaming data architectures — particularly for log collection, clickstream analysis, or IoT event delivery.

When to use it:

- Real-time ingestion of streaming data

- Delivering event data to S3, Redshift, or analytics platforms

- Lightweight transformations at ingestion
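A Lambda-based transformation for Firehose might look roughly like the sketch below. The event and response shapes (base64-encoded `data`, a `recordId`, and a `result` of `Ok` or `ProcessingFailed`) follow the Firehose data transformation contract as I understand it; the enrichment itself, adding a `source` field, is a hypothetical example.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode each record, enrich it, and return it in the expected shape."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            payload["source"] = "firehose"  # hypothetical enrichment
            # Newline-delimit records so downstream readers can split them.
            encoded = base64.b64encode(
                (json.dumps(payload) + "\n").encode("utf-8")
            ).decode("utf-8")
            output.append(
                {"recordId": record["recordId"], "result": "Ok", "data": encoded}
            )
        except (ValueError, TypeError):
            # Malformed input: hand the original data back marked as failed.
            output.append(
                {
                    "recordId": record["recordId"],
                    "result": "ProcessingFailed",
                    "data": record["data"],
                }
            )
    return {"records": output}


# Local smoke test with one valid and one malformed record.
event = {
    "records": [
        {"recordId": "1", "data": base64.b64encode(b'{"user": "a"}').decode()},
        {"recordId": "2", "data": base64.b64encode(b"not json").decode()},
    ]
}
out = lambda_handler(event, None)
```

Keeping the function this small is deliberate: per-record logic in the ingestion path is best limited to light cleanup, with heavier transformations left to a downstream batch step.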

AWS Glue vs AWS Data Pipeline

| Aspect | AWS Glue | AWS Data Pipeline |

|---|---|---|

| Primary role | Full ETL service with transformation, cataloging, and execution | Workflow orchestration and scheduling |

| Core architecture | Serverless, Spark-based | Managed scheduler coordinating AWS resources |

| Typical workload | Large-scale batch ETL, analytics preparation | Predictable, low-frequency batch jobs |

| Transformation capability | Native Spark transformations (ETL and ELT patterns) | Limited – relies on external scripts or services |

| Scalability | Automatic scaling with workload size | Manual sizing via underlying AWS resources |

| Ease of use | Moderate – requires Spark and AWS knowledge | Lower – dated UI and configuration-heavy |

| AWS integration depth | Deep integration with S3, Redshift, Athena, Lake Formation | Native support for S3, RDS, DynamoDB, Redshift |

| Cost model | Pay-per-use ($ per DPU-hour) – can grow with heavy jobs | Flat monthly pipeline fee plus resource costs |

| Best fit | Modern data lakes, warehouses, growing analytics stacks | Legacy or stable batch pipelines with fixed schedules |

In short, Glue is the better choice when you need scalable transformations and expect your data workloads to evolve. Data Pipeline still works for simple, stable batch orchestration, but it’s rarely the right starting point for new AWS ETL projects today.

Conclusion

If there’s one thing worth taking away, it’s this: there’s no universally “best” AWS ETL tool. The right choice always depends on what you’re trying to get done, how fast you need to move, and how much complexity the team is ready to take on. A Spark-heavy, AWS-native setup that works great for one enterprise can be total overkill for a smaller team that just needs reliable data flows into Redshift.

That’s why the criteria matter more than the brand name. Ease of use, transformation flexibility, cost behavior, AWS integration depth, and long-term maintainability all play a role. Some teams are optimizing for raw performance, others for speed of setup or predictable pricing. Being honest about those priorities upfront saves a lot of rework later.

The practical approach is to start with your real constraints, not a feature checklist.

Look at:

- The data volumes.

- How often data changes.

- Whether you need real-time sync.

- Who’s actually going to maintain the pipelines six months from now.

Once those pieces are clear, the “right” tool usually stands out on its own.

AWS gives users plenty of solid options, and the ecosystem around it is mature enough to support almost any data strategy. The win comes from matching the tool to the job, not forcing the job to fit the tool.