Summary

- Apache Airflow - A Python-based platform for orchestrating complex data workflows using Directed Acyclic Graphs (DAGs).

- Hevo - A no-code data pipeline platform that automates the process of loading data into data warehouses.

- Integrate.io - A cloud-based data integration platform that offers ETL, ELT, and reverse ETL capabilities with a focus on scalability.

- Skyvia - A cloud-based, no-code data integration platform that simplifies ETL processes with a user-friendly interface.

- Talend Cloud - A cloud-based data integration platform that offers advanced features for data transformation and synchronization.

Are you struggling to keep your workflows running smoothly with PostgreSQL?

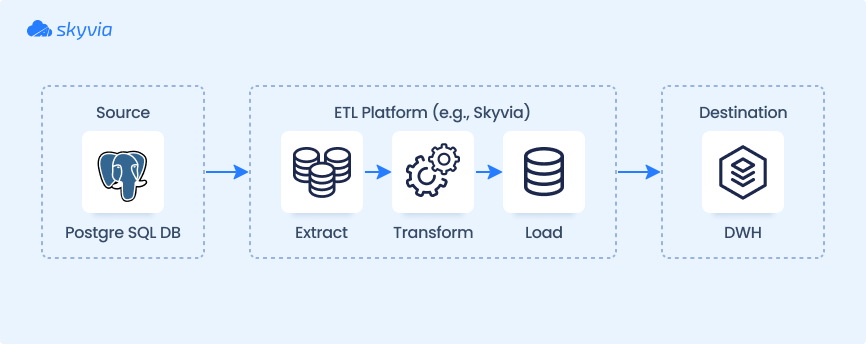

ETL (Extract, Transform, Load) tools for PostgreSQL are essential for automating the movement, transformation, and loading of data between your database and other systems.

These tools streamline data processing and integration, enabling businesses to focus on growth while ensuring data is properly managed and optimized.

When working with large volumes of data, ETL tools are crucial for data warehousing, analytics, and migration. They allow businesses to consolidate data from various sources, making it easier to generate actionable insights and improve decision-making.

While custom scripts can provide flexibility, using dedicated ETL tools offers greater scalability, reliability, and ease of use. These tools are designed to handle complex workflows and large datasets, providing robust features that custom scripts often lack.

In this guide, we’ll explore the best PostgreSQL ETL tools, comparing both open-source and paid solutions, and highlighting the strengths of each to help you choose the right fit for your business needs.

Table of Contents

- The Rise of ELT and Real-Time Data Integration for PostgreSQL

- Integrating Postgres with ETL Tools: Key Benefits

- How to Choose the Best PostgreSQL ETL Tool: Key Criteria

- Open Source ETL Tools for PostgreSQL

- Paid PostgreSQL ETL Tools

- Tips for a Successful PostgreSQL ETL Implementation

- Conclusion

The Rise of ELT and Real-Time Data Integration for PostgreSQL

Data integration processes for PostgreSQL are undergoing a significant transformation, shifting from the traditional ETL (Extract, Transform, Load) to ELT (Extract, Load, Transform). This shift is primarily driven by the increasing demand for scalability and faster data processing in modern cloud-based environments.

In ETL, data is first extracted from a source, transformed in a separate tool or server to fit the target system’s requirements, and then loaded into a database or data warehouse.

While this approach works well for smaller datasets and complex transformations, it can be slow and resource-intensive. The need for an external transformation layer often leads to higher costs and slower turnaround times.

On the other hand, ELT directly extracts data from sources and loads it into the target system first, often a cloud-based data warehouse. Transformations then occur in the target system, utilizing its processing power.

ELT aligns perfectly with modern cloud data platforms like Snowflake and Amazon Redshift, which provide in-place transformation capabilities, allowing for faster and more flexible data processing. The ELT approach reduces infrastructure costs and enables faster data availability, which is crucial for agile decision-making.
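To make the difference concrete, here is a minimal Python sketch that computes the same per-customer totals both ways. So that it runs anywhere, it uses an in-memory SQLite database as a stand-in for PostgreSQL or a cloud warehouse. The table and column names are hypothetical. In the ETL path, the aggregation happens in application code before loading. In the ELT path, the raw rows are loaded unchanged and the target's own SQL engine does the transformation. (`CREATE TABLE ... AS SELECT` is also valid PostgreSQL.)

```python
import sqlite3

# Hypothetical source rows: (order_id, customer, amount stored as text).
RAW_ORDERS = [
    (1, "alice", "20.00"),
    (2, "bob", "5.00"),
    (3, "alice", "7.50"),
]


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code first, then load only the result."""
    totals: dict[str, float] = {}
    for _order_id, customer, amount in RAW_ORDERS:
        totals[customer] = totals.get(customer, 0.0) + float(amount)
    conn.execute("CREATE TABLE etl_totals (customer TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO etl_totals VALUES (?, ?)", totals.items())


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw rows unchanged, then transform with SQL inside the target."""
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """CREATE TABLE elt_totals AS
           SELECT customer, SUM(CAST(amount AS REAL)) AS total
           FROM raw_orders
           GROUP BY customer"""
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    print(conn.execute("SELECT * FROM etl_totals ORDER BY customer").fetchall())
    print(conn.execute("SELECT * FROM elt_totals ORDER BY customer").fetchall())
```

Keeping `raw_orders` around in the ELT version is part of the appeal. If the business logic changes, you can rerun the SQL over the raw data without extracting it again.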

The growing adoption of cloud technologies has made ELT the go-to method for modern data integration. Unlike ETL, ELT leverages the cloud infrastructure’s ability to handle large, raw datasets and scale efficiently with growing data volumes.

This approach also supports real-time data loading and transformation, making it an ideal fit for PostgreSQL users who need to handle increasingly complex datasets.

As part of the trend toward real-time data processing, Change Data Capture (CDC) has gained significant traction in PostgreSQL environments.

Unlike batch processing, which works on large chunks of data at scheduled intervals, CDC works by reading transaction logs or using triggers to detect changes as they happen.

For modern applications like fraud detection, inventory management, or dynamic reporting, CDC enables near-instantaneous updates, often with sub-second latency. With CDC, PostgreSQL users can keep their analytics systems in step with the latest database state, bridging operational and analytical needs.
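The trigger-based flavor of CDC can be sketched in a few lines. To keep it self-contained, this example uses SQLite in place of PostgreSQL. Triggers append every insert, update, and delete to a change-log table. A consumer then polls that log for entries past its last-seen sequence number and replays them onto a replica, which is a plain dict here.

In PostgreSQL, the triggers would call a PL/pgSQL function. Log-based CDC would instead read the write-ahead log through logical decoding and a replication slot, which avoids the per-write trigger overhead. The table names and the polling approach are illustrative assumptions, not any particular tool's design.

```python
import sqlite3

# Source table plus a change-log table that triggers fill (trigger-based CDC).
SETUP_SQL = """
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, stock INTEGER);
CREATE TABLE products_changes (
    seq   INTEGER PRIMARY KEY AUTOINCREMENT,  -- preserves change order
    op    TEXT NOT NULL,                      -- 'I', 'U' or 'D'
    id    INTEGER NOT NULL,
    name  TEXT,
    stock INTEGER
);
CREATE TRIGGER products_ins AFTER INSERT ON products BEGIN
    INSERT INTO products_changes (op, id, name, stock) VALUES ('I', NEW.id, NEW.name, NEW.stock);
END;
CREATE TRIGGER products_upd AFTER UPDATE ON products BEGIN
    INSERT INTO products_changes (op, id, name, stock) VALUES ('U', NEW.id, NEW.name, NEW.stock);
END;
CREATE TRIGGER products_del AFTER DELETE ON products BEGIN
    INSERT INTO products_changes (op, id) VALUES ('D', OLD.id);
END;
"""


def make_source() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SETUP_SQL)
    return conn


def poll_changes(conn: sqlite3.Connection, last_seq: int):
    """Return changes recorded after `last_seq`, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT seq, op, id, name, stock FROM products_changes WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    return rows, (rows[-1][0] if rows else last_seq)


def apply_changes(replica: dict, rows) -> None:
    """Replay captured changes in order onto a target (assumes primary keys never change)."""
    for _seq, op, pk, name, stock in rows:
        if op == "D":
            replica.pop(pk, None)
        else:  # inserts and updates both overwrite the row
            replica[pk] = (name, stock)
```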

Integrating Postgres with ETL Tools: Key Benefits

When paired with ETL tools, PostgreSQL unlocks powerful opportunities to streamline and enhance operational workflows.

This combination allows businesses to automate processes, ensuring seamless integration with various platforms and applications. PostgreSQL is a central hub for managing diverse tasks, transforming raw inputs into structured, actionable insights.

Key Benefits

- Automated Workflows. Eliminate manual intervention by streamlining extraction, transformation, and loading processes.

- Consistency Across Systems. Maintain accurate, synchronized information across platforms and applications, avoiding human errors.

- Custom Transformations. Adapt structures and formats to specific business requirements with flexible mapping capabilities.

- Scalability. Efficiently handle different volumes and increasingly complex operations as your business grows.

- Improved Analytics. Deliver clean, well-organized inputs to analytics tools for better insights and decision-making.

- Cost and Time Savings. Minimize manual effort and reduce operational expenses with automated, repeatable workflows.

- Enhanced Security. Ensure secure transfers and compliance-ready configurations for handling sensitive information.
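Consistency across systems depends partly on loads being safe to repeat, because automated workflows retry failed runs. One common approach is an idempotent upsert. PostgreSQL's `INSERT ... ON CONFLICT (...) DO UPDATE` statement inserts new rows and updates existing ones, so reloading the same batch does not create duplicates.

The sketch below is a minimal illustration under stated assumptions. To stay self-contained, it runs the same statement shape against SQLite (version 3.24 or newer supports this syntax). The `customers` table is hypothetical.

```python
import sqlite3

# Same INSERT ... ON CONFLICT ... DO UPDATE shape that PostgreSQL uses.
UPSERT_SQL = """
INSERT INTO customers (id, email, plan) VALUES (?, ?, ?)
ON CONFLICT (id) DO UPDATE SET email = excluded.email, plan = excluded.plan
"""


def load_batch(conn: sqlite3.Connection, rows) -> None:
    """Idempotently load a batch: re-running it leaves the target unchanged."""
    with conn:  # one transaction per batch: commit on success, roll back on error
        conn.executemany(UPSERT_SQL, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, plan TEXT)")
    batch = [(1, "ann@example.com", "free"), (2, "ben@example.com", "pro")]
    load_batch(conn, batch)
    load_batch(conn, batch)  # a retry of the same batch creates no duplicates
    load_batch(conn, [(1, "ann@example.com", "pro")])  # a later change updates in place
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```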

How to Choose the Best PostgreSQL ETL Tool: Key Criteria

There are numerous factors to consider, each of which plays a vital role in determining how well the tool integrates with your existing systems and supports your business needs. Below, we break down the key criteria you should evaluate.

1. Connector Coverage

A robust ETL tool must support a wide range of data sources and destinations to integrate PostgreSQL seamlessly with your data ecosystem.

The tool should offer pre-built connectors for popular databases like MySQL, SQL Server, cloud platforms like Snowflake, and SaaS applications such as Salesforce or HubSpot.

Some tools also provide extensibility to create custom connectors for niche or emerging data sources. Having comprehensive connector coverage will significantly reduce the manual work involved in connecting disparate systems and ensure a more streamlined and efficient data flow across platforms.

2. Scalability & Performance

Scalability is crucial if you deal with large data volumes or expect rapid growth. The tool must handle your current data volume and scale efficiently as that volume grows.

Look for tools that provide parallel processing and optimization features to ensure high performance, even with large datasets. For enterprises, ensuring the ETL tool can support real-time processing and maintain reliability as the data volume increases is vital.
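For a large PostgreSQL table, parallel processing often means splitting the extract by primary-key range. The sketch below illustrates this: it divides a key range into chunks and extracts each chunk on its own worker thread. It rests on several assumptions. `extract_range` is a hypothetical stand-in for a `SELECT ... WHERE id BETWEEN ...` query, and a real implementation would give each worker its own database connection. Threads are a reasonable fit because database reads spend most of their time waiting on I/O.

```python
from concurrent.futures import ThreadPoolExecutor


def key_ranges(min_id: int, max_id: int, chunk: int) -> list[tuple[int, int]]:
    """Split the inclusive key range [min_id, max_id] into chunks of at most `chunk` keys."""
    return [(start, min(start + chunk - 1, max_id)) for start in range(min_id, max_id + 1, chunk)]


def extract_range(bounds: tuple[int, int]) -> list[int]:
    """Hypothetical stand-in for `SELECT ... WHERE id BETWEEN start AND end`."""
    start, end = bounds
    return list(range(start, end + 1))


def parallel_extract(min_id: int, max_id: int, chunk: int = 1000, workers: int = 4) -> list[int]:
    """Extract each key range on its own worker; map() returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(extract_range, key_ranges(min_id, max_id, chunk)))
    return [row for part in parts for row in part]
```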

3. Ease of Use (No-Code vs. Code-Based)

Consider your team’s technical expertise. Some solutions offer no-code or low-code interfaces, allowing non-technical users to create and manage data pipelines with ease.

These tools often feature drag-and-drop functionalities and visual designers, making them ideal for smaller teams or those without extensive technical backgrounds.

On the other hand, code-based tools give technical teams more control, offering greater flexibility and customization options for complex workflows.

4. Open-Source vs. Commercial

Choosing between open-source and commercial ETL tools depends on your team’s needs and resources. Open-source tools, such as Apache Airflow and Talend Open Studio (no longer supported since January 2024), are free to use, customizable, and come with community support.

However, they often require additional setup, maintenance, and resources. Commercial tools, like Fivetran or Hevo, offer enterprise-grade support, pre-built connectors, and advanced features, but come at a higher cost.

5. Cloud vs. On-Premise

Consider the deployment model that best suits your organization’s infrastructure and security needs. Cloud-based tools, such as Hevo or Skyvia, offer scalability, flexibility, and remote access, making them ideal for organizations seeking modern solutions.

They reduce the need for physical hardware and provide automatic updates. On-premise solutions, like Pentaho or Informatica, offer greater control, security, and compliance, making them suitable for industries with stringent data privacy requirements.

6. Support and Community

Support is an essential factor. Commercial tools typically offer dedicated customer support via chat, email, or phone, along with service-level agreements (SLAs).

Open-source tools often rely on community forums, documentation, and developer contributions for support. While community-driven tools like Airbyte can offer robust support, they may lack formal assistance.

7. Pricing

Pricing is a crucial factor when selecting an ETL tool. Open-source ones are free but may come with hidden costs for infrastructure or custom development.

Commercial tools typically use tiered pricing models based on factors like data volume, connectors, or features. Look for free trials or freemium plans to test tools before committing.

Open Source ETL Tools for PostgreSQL

If you chose PostgreSQL as the platform, it’s likely because of its open-source nature and cost-effectiveness.

Starting with these no-cost options is a practical way to explore powerful capabilities without a financial commitment. Such tools offer flexibility and customization, making them a great fit for developers and small businesses.

Let’s kick off our list with free ETL solutions, but first, here’s a short comparison table:

| Tool | Deployment | Ease of Use | Key Features | Best For |
|---|---|---|---|---|
| Apache Airflow | Cloud/On-Prem | Moderate | Python-based, DAGs for orchestration, extensive community support | Developers, complex workflows |
| Apache Kafka | On-Prem | Advanced | Distributed streaming, real-time data pipelines, high throughput | Real-time data processing, event-driven apps |
| Apache NiFi | On-Prem | Moderate | Data routing, transformation, and system mediation logic | Complex data flows, IoT integrations |
| HPCC Systems | On-Prem | Advanced | Big data processing, data lake management, scalable architecture | Big data analytics, enterprise environments |
| Singer.io | Cloud/On-Prem | Easy | Modular connectors, open-source, supports ELT pipelines | Developers, lightweight integrations |
| KETL | On-Prem | Moderate | Data transformation, batch processing, supports various data sources | Enterprises, batch data processing |
| Talend Open Studio | On-Prem | Moderate | Visual design, supports batch processing, large community | Developers, on-premise environments |

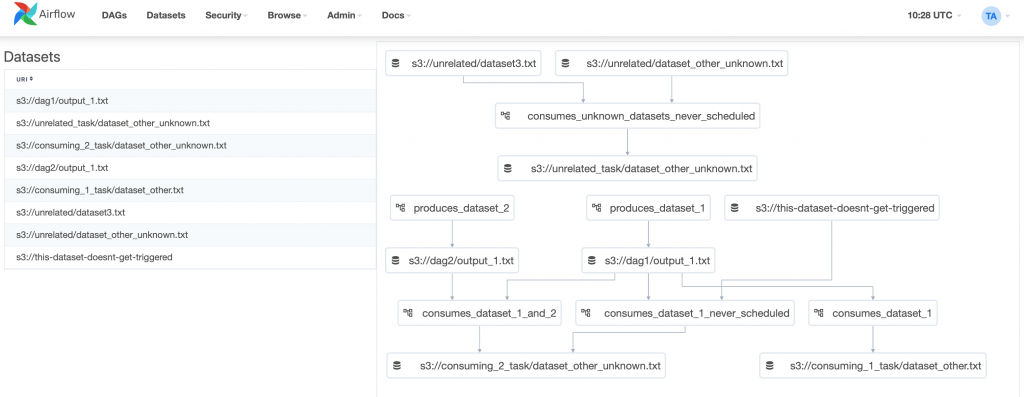

Apache Airflow

Apache Airflow is a platform for creating, scheduling, and monitoring batch processes. Its modular architecture makes it highly scalable, allowing it to handle even the most complex data pipelines. It relies on a message queue to coordinate its workers. A message queue is a mechanism for asynchronous communication that stores messages such as requests, responses, errors, or general information.

These messages remain in the queue until they are processed by a designated consumer, ensuring smooth coordination across distributed systems.

With Apache Airflow, you can use Python to define workflows and spell out each data extraction, transformation, and loading step. Each ETL pipeline then lives in code and runs reliably on a schedule.
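Under the hood, a DAG is simply a set of tasks plus "runs after" dependencies. The plain-Python sketch below uses the standard library's `graphlib` (Python 3.9+) to resolve a hypothetical extract/transform/load DAG into a valid run order.

This is not Airflow's API. In Airflow, you would define the same tasks as operators or `@task` functions inside a DAG and declare dependencies with `>>`. The scheduler would then handle execution, retries, and parallelism.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL tasks, each mapped to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_postgres": {"transform"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    order: list[str] = []
    while sorter.is_active():
        # Every task returned here has all of its upstream tasks finished.
        ready = sorted(sorter.get_ready())
        for task in ready:
            order.append(task)  # a scheduler would execute the task at this point
            sorter.done(task)
    return order
```

Tasks returned together by `get_ready()` do not depend on each other. That is exactly where an orchestrator like Airflow can run work in parallel.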

Best for

It suits organizations needing to orchestrate complex, multi-stage workflows with clear dependencies, making it ideal for PostgreSQL users managing scheduled data transformations.

Key features

- Python-based workflow management with Directed Acyclic Graphs (DAGs) for visualizing and managing tasks.

- Extensive scheduling and monitoring capabilities via a web-based user interface.

- Integration with cloud services (e.g., AWS, Azure) and databases like PostgreSQL.

- Supports over 75 provider packages for third-party integrations, enhancing extensibility.

Rating

G2 Crowd 4.3/5 (based on 87 reviews)

Pros

- A very extensible, plug-and-play platform.

- It can process thousands of concurrent workflows, according to Adobe.

- It can also be used for reverse ETL and other data-processing tasks.

- 75+ provider packages to integrate with third-party providers.

- Uses a web user interface to monitor workflows.

Cons

- Requires Python coding skills to create pipelines.

- There’s a steep learning curve if you are transitioning from a GUI tool to this setup.

- Installation is done from the command line.

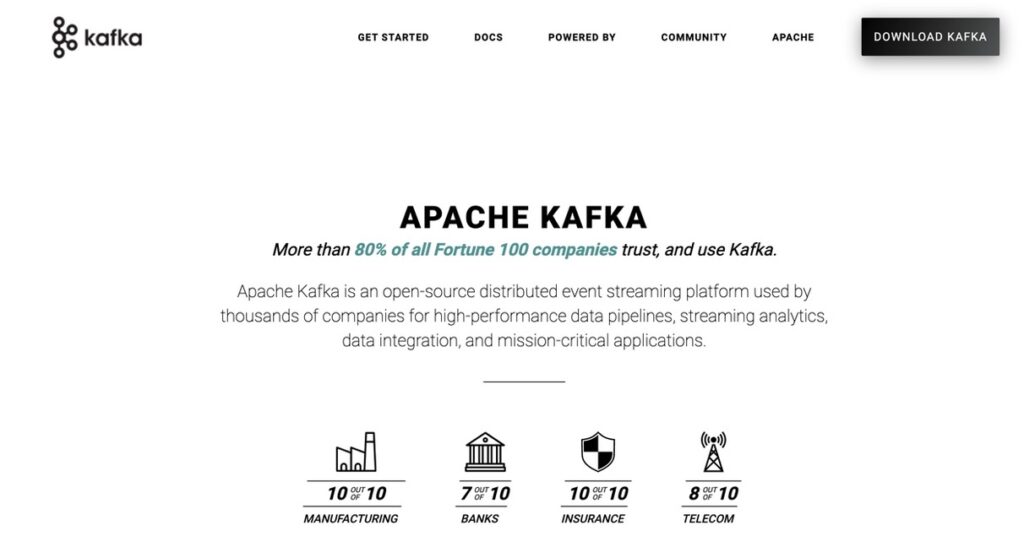

Apache Kafka

Apache Kafka is a distributed platform designed for real-time data streaming. It captures information from various sources, such as databases, sensors, mobile devices, and applications, and then directs it to various destinations.

Kafka operates with producers that write data to the system and consumers that read and process it. Client libraries are available for multiple programming languages, including Python and Go, and databases can be connected through JDBC- and ODBC-based connectors. This makes the platform a versatile option for working with PostgreSQL as both a source and a target for data.

Third-party GUI tools are also available to simplify management and monitoring. For those who enjoy coding and scripting, it’s an excellent choice for PostgreSQL ETL processes.
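The producer/consumer model rests on one idea: a topic partition is an append-only log, and each consumer tracks its own position (offset) in it. The toy Python class below models a single partition in memory. It shows that reading does not remove data, so an ETL consumer and, say, an audit consumer can read the same events independently.

This is a teaching sketch, not the Kafka client API. Real Kafka persists partitions to disk, replicates them across brokers, and stores committed offsets per consumer group.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Record:
    key: str
    value: str
    timestamp: float


@dataclass
class ToyPartition:
    """In-memory stand-in for one topic partition: an append-only, ordered log."""
    log: list[Record] = field(default_factory=list)

    def produce(self, key: str, value: str) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.log.append(Record(key, value, time.time()))
        return len(self.log) - 1

    def consume(self, offset: int, max_records: int = 100) -> tuple[list[Record], int]:
        """Read from `offset` without deleting anything; return the records and the next offset."""
        batch = self.log[offset : offset + max_records]
        return batch, offset + len(batch)
```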

Below is an example of a console script for writing data:

```
$ bin/kafka-console-producer.sh --topic quickstart-events --bootstrap-server localhost:9092
This is my first event
This is my second event
```

And here’s a sample script for reading events:

```
$ bin/kafka-console-consumer.sh --topic quickstart-events --from-beginning --bootstrap-server localhost:9092
This is my first event
This is my second event
```

Best for

Perfect for PostgreSQL users needing low-latency data pipelines or high-throughput applications like real-time analytics and monitoring.

Key Features

- Distributed event streaming platform with high scalability and fault tolerance.

- Handles millions of messages per second with latency as low as 2 ms.

- Stores streams of records in topics with keys, values, and timestamps for robust data handling.

- Supports real-time data ingestion from diverse sources (e.g., databases, sensors) and distribution to multiple destinations.

- Requires technical skills to configure producers and consumers, with a steep learning curve for GUI users.

Rating

G2 Crowd 4.5/5 (based on 121 reviews)

Pros

- 80% of all Fortune 100 companies trust and use Kafka.

- Handles millions of messages per second.

- Very fast and highly scalable, with latency as low as 2 ms.

- High availability and fault-tolerant.

- Large community for support.

Cons

- Requires technical skills to create producers and consumers.

- Like Apache Airflow, there’s a steep learning curve if you transition from a GUI ETL tool to this tool.

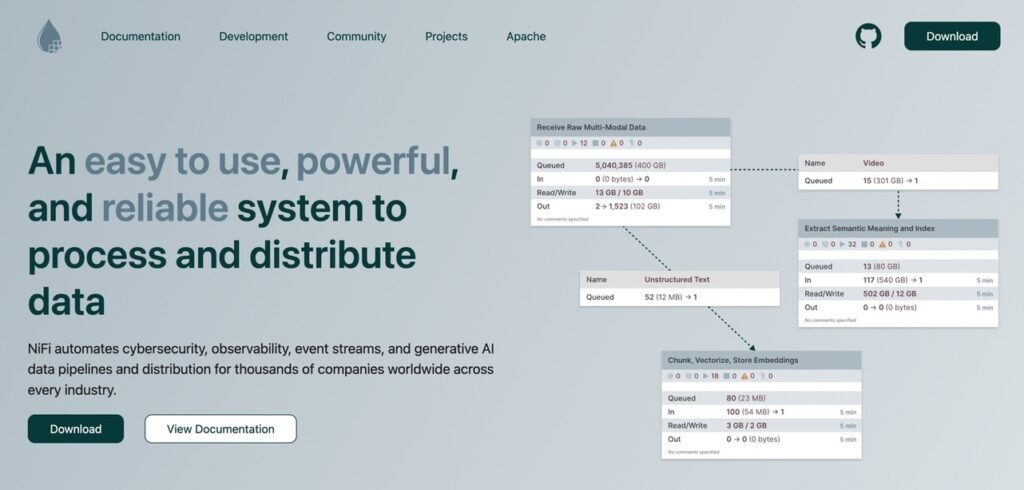

Apache NiFi

If you want a free, open-source ETL tool with a GUI, Apache NiFi is the best bet. It offers no-code data routing, transformation, and system mediation logic. The user-friendly platform allows even non-developers to create complex workflows easily.

NiFi supports various data sources and destinations, offering flexibility for different integration needs. Its drag-and-drop interface streamlines the process of building and managing pipelines, saving time and reducing the risk of errors.

So, if you hate coding and scripting but still need powerful ETL capabilities, this is the perfect open-source solution.

Best for

Suitable for PostgreSQL users seeking a no-code solution for complex workflows or real-time data routing.

Key Features

- Graphical user interface (GUI) with drag-and-drop flow-based programming for designing pipelines.

- Real-time data streaming and batch processing with dynamic prioritization schemas (e.g., oldest/newest first).

- Data provenance tracking for complete lineage from source to destination.

- Built-in security with 2-way SSL encryption and flexible authentication.
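In NiFi, prioritizers are configured on the connections between processors and decide which queued FlowFile moves next. The Python sketch below illustrates the oldest-first and newest-first ideas with a heap keyed on creation time. It is a conceptual model built on that assumption, not NiFi code, and the payload strings are placeholders.

```python
import heapq
import itertools


class PrioritizedQueue:
    """Queue that hands out items oldest-first or newest-first by creation time."""

    def __init__(self, newest_first: bool = False) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._newest_first = newest_first
        self._tiebreak = itertools.count()  # keeps insertion order for equal timestamps

    def put(self, created_at: float, payload: str) -> None:
        # heapq is a min-heap, so negate the timestamp to pop the newest item first.
        key = -created_at if self._newest_first else created_at
        heapq.heappush(self._heap, (key, next(self._tiebreak), payload))

    def get(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```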

Rating

G2 Crowd 4.2/5 (based on 24 reviews)

Pros

- Supports a wide variety of sources and targets, including PostgreSQL.

- Handles complex transformations without coding.

- Uses a drag-and-drop designer for your pipelines.

- It also has templates for the most common data flows.

- It can execute large jobs with multithreading.

- You can also use data splitting to reduce processing time.

- Supports data masking of sensitive data.

- Supports encrypted communication.

- You can use NiFi chat, Slack, and IRC for support.

Cons

- The user community is small but growing.

HPCC Systems

HPCC Systems uses a lightweight core architecture for high-speed data engineering, making it an excellent choice for efficiently working with large volumes of information. It offers a secure and scalable platform, ensuring integrity and protection while managing complex workflows.

With its unique Enterprise Control Language (ECL), users can perform powerful transformations and analytics with minimal coding effort.

The platform also includes integrated components for ingestion, processing, and querying, simplifying the entire pipeline. This blend of performance, simplicity, and security makes it a versatile solution for businesses looking to streamline their information management processes.

Best for

Ideal for PostgreSQL users in enterprises handling large-scale data warehousing or complex analytical workloads.

Key Features

- Uses Thor, an ETL engine with ECL (Enterprise Control Language) scripting for data manipulation.

- Configurable for both batch and high-performance data applications using indexed files.

- Runs on commodity shared-nothing computing clusters for scalability.

- Offers efficient data processing on large datasets, though it requires technical expertise to optimize.

- Lacks a graphical interface, relying on script-based configuration.

Rating

No review details are available.

Pros

- Secure, near real-time data engineering.

- Uses the Enterprise Control Language (ECL) designed for huge data projects.

- Provides a wide range of machine learning algorithms accessible through ECL.

- Provides documentation and training to develop pipelines.

Cons

- Must learn a new language (ECL) to design pipelines.

Singer.io

Singer.io is an open-source framework for building simple, flexible, scalable data pipelines. It follows a straightforward “tap and target” architecture, where taps extract information from sources, and targets load it into destinations.

The platform uses a standard JSON-based format for data exchange, ensuring compatibility across various systems and applications.

One of its standout features is the ability to handle diverse sources, including APIs, databases, and flat files, making it suitable for various ETL needs.

The system also supports incremental extraction, allowing only new or updated records to be processed, which improves efficiency and reduces resource consumption. Developers appreciate its simplicity and extensibility, as they can create custom taps or targets in Python to meet specific requirements.
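The tap/target contract and incremental extraction can be sketched in plain Python. Under the Singer specification, a tap writes JSON messages, one per line, and a target reads them. There are three message types: `SCHEMA` describes a stream with JSON Schema, `RECORD` carries a row, and `STATE` carries a bookmark for the next run.

Real taps and targets are separate programs joined by a shell pipe (`tap | target`); here both run in one process for brevity. The `users` stream, its fields, and the bookmark layout are hypothetical, since each tap defines its own state format.

```python
import json
from typing import Optional


def run_tap(rows: list[dict], bookmark: str) -> str:
    """Emit Singer-style JSON lines for rows updated after `bookmark` (incremental sync)."""
    messages = [{
        "type": "SCHEMA",
        "stream": "users",
        "key_properties": ["id"],
        "schema": {"type": "object", "properties": {
            "id": {"type": "integer"},
            "name": {"type": "string"},
            "updated_at": {"type": "string", "format": "date-time"},
        }},
    }]
    latest = bookmark
    for row in rows:
        # Same-format ISO-8601 UTC strings sort chronologically.
        if row["updated_at"] > bookmark:  # skip rows already replicated
            messages.append({"type": "RECORD", "stream": "users", "record": row})
            latest = max(latest, row["updated_at"])
    # STATE carries the new bookmark so the next run starts where this one stopped.
    messages.append({"type": "STATE", "value": {"bookmarks": {"users": {"updated_at": latest}}}})
    return "\n".join(json.dumps(m) for m in messages)


def run_target(stream: str) -> tuple[list[dict], Optional[dict]]:
    """Consume the tap's output line by line; keep the records and the last STATE value."""
    records, state = [], None
    for line in stream.splitlines():
        message = json.loads(line)
        if message["type"] == "RECORD":
            records.append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return records, state
```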

Here’s a sample Singer ETL script:

```
$ pip install target-csv tap-exchangeratesapi
$ tap-exchangeratesapi | target-csv
INFO Replicating the latest exchange rate data from exchangeratesapi.io
INFO Tap exiting normally
$ cat exchange_rate.csv
AUD,BGN,BRL,CAD,CHF,CNY,CZK,DKK,GBP,HKD,HRK,HUF,IDR,ILS,INR,JPY,KRW,MXN,MYR,NOK,NZD,PHP,PLN,RON,RUB,SEK,SGD,THB,TRY,ZAR,EUR,USD,date
1.3023,1.8435,3.0889,1.3109,1.0038,6.869,25.47,7.0076,0.79652,7.7614,7.0011,290.88,13317.0,3.6988,66.608,112.21,1129.4,19.694,4.4405,8.3292,1.3867,50.198,4.0632,4.2577,58.105,8.9724,1.4037,34.882,3.581,12.915,0.9426,1.0,2017-02-24T00:00:00Z
```

Best For

Lightweight data extraction and loading, particularly for PostgreSQL users extracting from APIs or databases and loading into data warehouses like Snowflake or Redshift.

Key Features

- JSON-based data streams, described by JSON Schema, supporting both batch and incremental data movement.

- Written in Python, making it accessible for developers to customize.

- Lightweight design minimizes resource use, though transformation requires additional tools.

- Supports multiple data sources and destinations with JSON-based scripts.

Rating

No review details are available.

Pros

- 100+ sources and targets, including PostgreSQL.

- JSON-based for language-neutral app communication.

- Develop your own taps (sources) and targets using Python.

- Uses scripts to process data.

Cons

- Requires technical skills to develop pipelines.

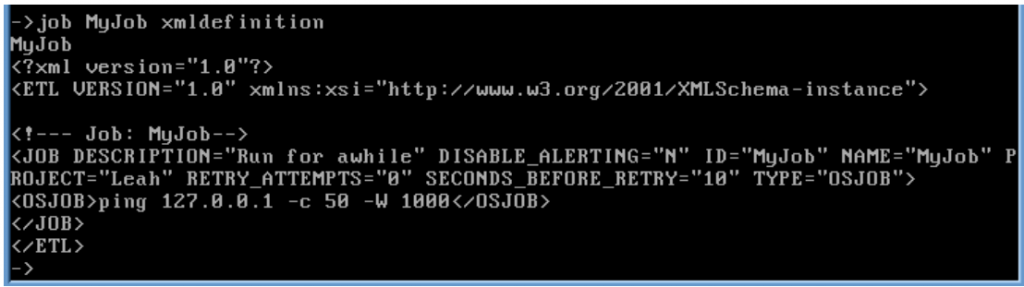

KETL

KETL is a powerful open-source ETL platform for high-performance data integration and processing. It is tailored to handle complex workflows, offering a scalable and robust architecture supporting enterprise-level operations.

One of KETL’s key features is its ability to manage large-scale transformations efficiently, making it suitable for businesses with substantial data volumes.

The platform provides built-in support for scheduling, monitoring, and logging, ensuring seamless operation and easier management of ETL processes.

It also includes many prebuilt connectors for databases, flat files, and other data sources, allowing for flexible and straightforward integration. Its modular design enables users to extend its capabilities with custom plugins, making it adaptable to unique business needs.

Best for

Suitable for PostgreSQL users needing a production-ready solution for structured workflows.

Key Features

- Multi-threaded server with job executors (SQL, OS, XML, Sessionizer, Empty) for diverse tasks.

- Manages complex data manipulations using an open-source data integration platform.

- Requires technical setup and maintenance, with limited documentation on advanced features.

Rating

No review details are available.

Pros

- Extract and load data from JDBC-compatible sources, such as relational databases like PostgreSQL, as well as from flat files.

- Manage the most complex data in minimal time.

- Integrates with security tools to keep your data safe.

Cons

- Hard to find ETL samples and documentation you can study.

- You may get confused with documentation from similar ETL tools named KETL (like Kogenta ETL (KETL) and configurable ETL (kETL)).

Talend Open Studio

Note: As of January 31, 2024, Talend has discontinued support for Talend Open Studio.

However, the platform is still powerful, free, and useful for data integration, transformation, and migration. Its easy-to-use drag-and-drop interface is perfect for anyone without a strong coding background. The platform supports various systems, seamlessly connecting to databases, APIs, and cloud services.

Its flexible design allows users to tackle anything from basic data tasks to more complex, multi-step workflows.

As an open-source tool, it also allows developers to customize and enhance its features, making it a highly adaptable solution for businesses with unique challenges.

Best for

For PostgreSQL users seeking a no-code solution with broad connectivity, though its open-source version is discontinued as of January 2024.

Key Features

- Drag-and-drop interface for building scalable ETL and ELT pipelines without coding.

- Supports batch processing and real-time integration with advanced data mapping tools.

- Offers data quality and governance features, though some components are reserved for paid tiers.

- Discontinued open-source support shifts focus to commercial Talend Data Fabric, with legacy users relying on existing installations.

Rating

G2 Crowd 4.3/5 (based on 46 reviews)

Pros

- 900+ data integration components. Includes connectors, data transformation, and many more.

- Graphical development of pipelines.

- Extend features with Java programming language.

- Great for small to large datasets.

Cons

- No scheduler for ETL pipelines.

- The app is CPU and memory-intensive, based on some user reviews.

Paid PostgreSQL ETL Tools

Now that we’ve covered the free solutions, let’s look at paid platforms that add value through ease of use, dedicated support, and more. First, the comparison table:

| Tool | Deployment | Ease of Use | Key Features | Best For |
|---|---|---|---|---|
| Skyvia | Cloud-based | Easy | Over 200 prebuilt connectors, reverse ETL, automated backup, visual data flow tools. | No-code, cloud-based integration and backup for PostgreSQL and cloud apps. |
| Talend Cloud | Cloud-native | Easy | Real-time/batch processing, data governance. | Scalable, cloud-native integration with extensive connectors. |
| Informatica PowerCenter | On-premise or hybrid | Advanced | Advanced transformations, parallel processing. | Advanced transformation in on-premise setups. |
| Stitch | Cloud-based | Easy | Automatic schema detection, Singer-based ELT. | Businesses needing simple, automated ELT with minimal customization. |
| Hevo | Cloud-based | Moderate | Automatic schema mapping, Python transformations. | No-code ELT with real-time PostgreSQL replication and scalable pipelines. |
| Integrate.io | Cloud-based | Moderate | Automated pipelines, real-time/batch processing. | Businesses needing low-code, cloud-based ETL/ELT. |
| Pentaho Data Integration | On-premise or cloud | Moderate | JDBC-based connectors (e.g., PostgreSQL), data cleansing, filtering, cluster deployment. | Teams preferring custom coding flexibility. |

Skyvia

Skyvia is a user-friendly, cloud-based ETL tool that makes working with PostgreSQL a breeze. Whether syncing data, importing files, or building complex integrations, its no-code interface ensures you can get the job done without being a tech wizard.

The platform supports 200+ different sources, connecting PostgreSQL to everything from CRMs to cloud storage services.

What really sets it apart is the ability to schedule recurring imports, synchronize information between platforms, and even set up workflows to keep everything running smoothly. The solution also offers powerful transformation capabilities to clean and reshape data before it lands in the DBMS.

Best For

Teams with limited technical expertise seeking a no-code, cloud-based solution for data integration, backup, and synchronization, especially those using PostgreSQL with cloud applications or databases.

Key Features

- No-code data integration with a user-friendly interface for ETL, ELT, and reverse ETL processes.

- Over 200 prebuilt connectors for CRMs, databases (including PostgreSQL), and cloud apps, with options for on-premise access.

- Automated backup and restore functionality with daily sync options.

- Scalable pricing plans, including a free tier, hosted on a secure Azure cloud infrastructure.

- Visual data flow and control flow tools for advanced transformations.

Rating

G2 Crowd 4.8/5 (based on 242 reviews)

Pros

- Free plans and trials.

- Simple, drag-and-drop designer.

- Simple and complex data transformations.

- Built-in pipeline scheduler.

- Detailed logs and failure alerts that you can easily comprehend.

- Near-zero-code querying of data sources.

- 100% cloud. No need to install it.

Cons

- Limits on free usage.

Pricing

- Flexible freemium model.

- Free plan for 10k records/month.

- Starts at $79/month for 1MB.

Talend Cloud

Talend Cloud brings the features you love in Open Studio to the cloud. It is a powerful, cloud-based ETL tool that takes the complexity out of managing PostgreSQL integrations.

It handles everything from simple data migrations to advanced workflows, all with a user-friendly interface that combines drag-and-drop simplicity with robust functionality. The solution supports 1,000 sources, making it easier to connect PostgreSQL with CRMs, ERPs, and cloud platforms.

One of its standout features is its real-time data integration abilities, ensuring your systems are always up-to-date. With built-in data transformation tools, you can clean, format, and prepare the information without additional software. Plus, its automation features let users schedule tasks, freeing up time and reducing manual effort.

Best For

Suitable for PostgreSQL users requiring extensive prebuilt integrations and data governance.

Key Features

- Cloud-based ETL and ELT with a drag-and-drop interface and support for Python, Java, and SQL.

- Real-time and batch processing with machine learning-based data quality checks.

- Data governance and quality management tools for compliance and analytics.

- Custom pricing based on deployment scale, with a shift from open-source roots to a commercial focus.

Rating

G2 Crowd 4.2/5 (based on 206 reviews)

Pros

- Easy and fast browser-based graphical pipeline designer.

- Extend or customize your pipeline using Python.

- Talend Trust Score checks data reliability at a glance, over time, or at any point in time.

Cons

- Pricey, according to Gartner reviews.

Pricing

It provides a wide range of plans. Contact Talend Sales for pricing.

Informatica PowerCenter

Informatica PowerCenter is a robust solution designed to handle even the most complex integration workflows. It’s an excellent choice for businesses utilizing PostgreSQL and other databases, offering advanced features to extract, transform, and load information across diverse systems.

Its scalability ensures it can accommodate large datasets and complex operations, making it a preferred option for enterprise-level organizations.

Its vast library of prebuilt connectors truly sets it apart, enabling seamless integration with various platforms, including databases and cloud-based applications.

Additionally, it provides powerful transformation options to ensure your information is accurate, consistent, and ready for analysis. With automation capabilities, users can easily schedule repetitive processes, reducing manual work and enhancing overall efficiency.

Best For

Large enterprises with complex data integration needs, particularly those using PostgreSQL in on-premise or hybrid environments.

Key Features

- Extensive library of prebuilt connectors for databases, cloud apps, and unstructured data formats (e.g., JSON, XML).

- Advanced transformation options, including parallel processing and pushdown optimization.

- Robust security features like encryption and role-based access, with grid computing for scalability.

- Custom pricing based on deployment size and features, with a steep learning curve and high cost.

Rating

G2 Crowd 4.4/5 (based on 85 reviews)

Pros

- Build formulas for data transformation instead of coding.

- Drag-and-drop designer and configuration with keyboard shortcuts.

- Uses parallel data processing to handle huge amounts of data.

- Granular access privileges and flexible permission management for security.

- 24/7 support is available.

Cons

- Runtime logs are a bit challenging to read.

- ETL experts coming from other tools need to learn the product’s terminology.

- Some G2 user reviews report that the app can become unresponsive.

Pricing

- Prepaid subscription based on Informatica Processing Unit (IPU).

- Contact sales for more details about IPUs.

Stitch

Stitch is a lightweight and easy-to-use ETL tool for modern data integration. It’s perfect for businesses working with PostgreSQL and other databases, offering a straightforward way to extract, transform, and load data across platforms. It handles small datasets and large-scale data pipelines with ease.

The solution stands out because of its vast selection of prebuilt integrations, connecting PostgreSQL to hundreds of popular apps, cloud platforms, and DWHs. It keeps transformations simple, offering the essentials needed to get data ready for analysis.

Best For

Ideal for teams needing automated pipelines and basic transformations.

Key Features

- Cloud-first ELT platform using the Singer open-source framework for data extraction.

- Self-service interface for quick pipeline setup, though advanced transformations require Talend integration.

- Focus on compliance and security, but limited customization for complex workflows.

Rating

G2 Crowd 4.4/5 (based on 68 reviews)

Pros

- Simple interface to quickly create pipelines.

- Enterprise-grade security and data compliance.

- Extensible platform with the Singer open-source framework.

Cons

- No free version.

Pricing

- Standard pricing starts at $100/month with 1 destination and 10 sources.

Hevo

Hevo is a no-code ETL tool that simplifies integration and makes working with PostgreSQL and other platforms effortless.

It provides a seamless way to extract, transform, and load data in real time, making it an excellent choice for businesses that value speed and efficiency. With its scalable architecture, Hevo can handle everything from small datasets to enterprise-level workflows.

What sets Hevo apart is its fully automated pipeline setup, enabling users to connect PostgreSQL with various sources without coding.

Its transformation abilities ensure your data is clean, consistent, and analysis-ready. It also offers robust scheduling and monitoring features, so data syncs happen on time without manual intervention.

Best For

Suitable for PostgreSQL users needing automated pipelines and scalable solutions.

Key Features

- Real-time data replication with automatic schema mapping and pre-load transformations using Python.

- Horizontal scaling for large datasets and robust scheduling/monitoring features.

- Limited destinations and advanced transformation options compared to some competitors.

Rating

G2 Crowd 4.4/5 (based on 254 reviews)

Pros

- 150+ connectors, of which 50 are free.

- Flexible transformation using Python.

- Single-row testing before deployment.

- Easy-to-use forms with a schema mapper and keyboard shortcuts.

- Process huge amounts of data with horizontal scaling.

- 24/7 support.

- Provides resource guides and video tutorials.

Cons

- PostgreSQL is not included in the free connectors.

- Registration is not allowed with personal email addresses (Outlook, Gmail) or .edu addresses.

- Requires knowing Python to do transformations.

- No drag-and-drop designer for pipelines.

Pricing

- The pricing is flexible and provides a 14-day free trial.

Integrate.io

Integrate.io is a cloud-based ETL tool that is an especially good fit for businesses using PostgreSQL and other platforms. Its no-code interface lets both technical and non-technical teams extract, transform, and load data with little effort, and it scales from small tasks to complex enterprise-level workflows.

Its vast library of prebuilt connectors enables seamless integration between PostgreSQL and various cloud applications, databases, and data warehouses. Its robust transformation tools help ensure that your data is accurate, consistent, and ready for analysis.

With automation features like scheduled workflows and real-time monitoring, the solution minimizes manual effort while maximizing efficiency.

Best For

Ideal for teams seeking visual workflow design.

Key Features

- Low-code interface with a visual workflow builder for designing data pipelines.

- Prebuilt connectors for databases (including PostgreSQL), SaaS apps, and cloud warehouses like Snowflake and BigQuery.

- Automated data pipelines with support for real-time and batch processing.

- Scalable architecture with a focus on ease of use and minimal maintenance.

Rating

G2 Crowd 4.3/5 (based on 201 reviews)

Pros

- Powerful, code-free data transformation.

- Connects to major databases, including PostgreSQL, and other sources.

- Supports ETL, reverse ETL, ELT, CDC, and REST API integrations.

- The UI is easy to use for beginners and experts alike.

Cons

- No on-premise solution.

- No real-time data synchronization capabilities.

- Does not support pure data replication use cases.

- Sign-up requires a business email address.

- Limited connectors.

Pricing

- The pricing is based on the number of connectors.

- A 14-day trial is available.

Pentaho Data Integration

Pentaho Data Integration (PDI) helps businesses seeking to simplify the integration process, especially with platforms like PostgreSQL.

It offers a rich set of ETL features for handling tasks of any complexity, from small projects to large-scale enterprise workflows. Its scalable and flexible architecture allows businesses to manage growing data needs efficiently.

One of PDI’s standout features is its intuitive drag-and-drop interface, which lets users build pipelines without extensive coding knowledge. It also provides advanced transformation abilities to easily clean, format, and prepare data for analysis.

Best For

Suitable for those comfortable with a GUI or custom coding.

Key Features

- Drag-and-drop GUI for no-code ETL, with options for custom transformations in Java.

- Wide range of JDBC-based connectors (e.g., MySQL, Salesforce, PostgreSQL) and a marketplace for third-party plugins.

- Supports data cleansing, filtering, and complex operations like pivoting and joining.

- Scalable for big data with cluster deployment, though performance may slow with large datasets.

Rating

G2 Crowd 4.3/5 (based on 15 reviews)

Pros

- Codeless pipeline development.

- Supports streaming data.

- Wide range of connectors.

- Enterprise-scale load balancing & scheduling.

- Use R, Python, Scala, and Weka for machine learning models.

- Uses Pentaho security or advanced security providers.

- 24/7 support.

Cons

- Technical documentation needs improvement.

- Pricey, according to some reviews in Gartner Peer Insights.

- No built-in data masking for sensitive data, although masking can be implemented with a scripting transformation.

Pricing

- Pentaho Community Edition is available for free.

- 30-day free trial of Pentaho Enterprise Edition.

- $100/user/month to process 5 million rows. You can also adjust your plan as you grow.

Tips for a Successful PostgreSQL ETL Implementation

Understand Your Data

Before beginning the ETL process, it’s crucial to thoroughly understand the data you’re working with. Data profiling helps you identify the structure, quality, and any potential issues within your PostgreSQL datasets.

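Run PostgreSQL's built-in ANALYZE command and inspect the resulting statistics in the pg_stats view (for example, null_frac and n_distinct for each column), or use third-party data profiling tools, to check your tables for data types, volumes, and inconsistencies such as missing values, duplicates, or outdated records.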
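As a minimal sketch of the checks a profiling step performs, the Python function below summarizes rows that are assumed to have already been fetched from a PostgreSQL table (for example, with a database driver) as a list of dicts. The function name `profile_rows` and the sample `customers` data are hypothetical; at scale, the same checks would normally run as SQL queries or in a dedicated profiling tool.

```python
from collections import Counter
from typing import Any


def profile_rows(rows: list[dict[str, Any]], key: str) -> dict[str, Any]:
    """Summarize row count, missing values per column, and duplicate key values.

    `rows` is assumed to be data already fetched from a PostgreSQL table,
    one dict per row keyed by column name.
    """
    columns = sorted({col for row in rows for col in row})
    # A value counts as missing if it is NULL (None) or the column is absent.
    missing = {col: sum(1 for row in rows if row.get(col) is None) for col in columns}
    # Any key value that appears more than once is reported as a duplicate.
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)
    return {"row_count": len(rows), "missing": missing, "duplicate_keys": duplicates}


# Hypothetical sample data: one NULL email and a duplicated id.
customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "b@example.com"},
]
print(profile_rows(customers, key="id"))
# {'row_count': 3, 'missing': {'email': 1, 'id': 0}, 'duplicate_keys': [2]}
```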

Incremental Loading

One of the most important ways to optimize the ETL process is through incremental loading. Rather than performing full data refreshes every time, incremental loading ensures that only changed or newly added data is moved.

The Change Data Capture (CDC) technique is an ideal way to achieve incremental loading. By reading changes from the PostgreSQL write-ahead log (WAL) through logical decoding, or by capturing them with triggers, CDC enables real-time or near-real-time updates.

For simpler setups, timestamp-based filtering (that is, moving only the rows whose last-modified timestamp is newer than the previous ETL run) can also be effective.
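Timestamp-based filtering usually relies on a stored "watermark": the newest timestamp loaded so far. The Python sketch below shows that logic in memory, assuming each row carries a hypothetical `updated_at` column that is kept current (for example, by the application or a trigger). The function name `extract_incremental` and the `orders` data are illustrative, not part of any specific tool.

```python
from datetime import datetime, timezone
from typing import Any

# Against PostgreSQL, the same filter would be a query along the lines of
#   SELECT * FROM orders WHERE updated_at > %s ORDER BY updated_at;
# where "orders" and "updated_at" are hypothetical names and %s is the
# driver's parameter placeholder for the stored watermark.


def extract_incremental(
    rows: list[dict[str, Any]], last_watermark: datetime
) -> tuple[list[dict[str, Any]], datetime]:
    """Return rows modified after `last_watermark`, oldest first, and the new watermark."""
    changed = sorted(
        (row for row in rows if row["updated_at"] > last_watermark),
        key=lambda row: row["updated_at"],
    )
    # Advance the watermark to the newest timestamp seen; keep the old one
    # when nothing changed so the next run starts from the same point.
    new_watermark = changed[-1]["updated_at"] if changed else last_watermark
    return changed, new_watermark


def jan(day: int) -> datetime:
    """Helper for the example: a UTC timestamp in January 2024."""
    return datetime(2024, 1, day, tzinfo=timezone.utc)


# Usage: the first run picks up two changed rows; a rerun finds nothing new.
orders = [
    {"id": 1, "updated_at": jan(1)},
    {"id": 2, "updated_at": jan(3)},
    {"id": 3, "updated_at": jan(2)},
]
batch, watermark = extract_incremental(orders, last_watermark=jan(1))
print([row["id"] for row in batch])  # [3, 2]
print(extract_incremental(orders, watermark)[0])  # []
```

Because the filter uses a strict "greater than" comparison, this approach assumes timestamps only move forward. A row committed late by a long-running transaction with an older `updated_at` would be skipped, which is one reason log-based CDC tends to be more reliable for critical pipelines.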

Monitor and Alert

Ongoing monitoring, with failure alerts sent through email, Slack, or SMS, lets you respond quickly to problems such as data mismatches or performance degradation before they escalate.

Regular monitoring not only helps catch problems early but also ensures that your ETL process runs smoothly and remains scalable.
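A minimal Python sketch of this idea: the wrapper runs a job, sends an alert if the job raises an exception, and also alerts when the number of rows loaded differs from the number extracted, a simple check for data mismatches. `run_with_alerts`, the `(source_rows, loaded_rows)` return convention, and the `alert` callback are all assumptions; in practice, `alert` would wrap whichever email, Slack, or SMS integration you use.

```python
from typing import Callable


def run_with_alerts(
    job: Callable[[], tuple[int, int]],
    alert: Callable[[str], None],
    job_name: str = "postgres_etl",
) -> bool:
    """Run `job` and alert on an exception or a source/target row-count mismatch.

    `job` is assumed to return (source_rows, loaded_rows); `alert` is any
    sender, e.g. a function that posts to email, Slack, or SMS.
    Returns True only if the job succeeded and the counts match.
    """
    try:
        source_rows, loaded_rows = job()
    except Exception as exc:  # catch any failure so it is reported through the alert channel
        alert(f"{job_name} failed: {exc!r}")
        return False
    if source_rows != loaded_rows:
        alert(f"{job_name} mismatch: {source_rows} source rows vs {loaded_rows} loaded")
        return False
    return True


# Usage: collect alerts in a list instead of sending them anywhere.
sent: list[str] = []
run_with_alerts(lambda: (1000, 998), alert=sent.append)
print(sent)  # ['postgres_etl mismatch: 1000 source rows vs 998 loaded']
```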

Schema Management

Source schema changes, such as new columns or renamed tables, can cause data mismatches or pipeline failures. To prevent this, having a schema management plan in place is essential.

Additionally, designing flexible ETL workflows that can adapt to schema changes, such as dynamically mapping new fields or flagging unmapped columns, can prevent disruptions.
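Here is a minimal sketch of such a flexible mapping step in Python, assuming each extracted row arrives as a dict and the column mapping is kept as a simple source-to-target dictionary (both hypothetical here). Known columns are renamed, new columns are flagged (and optionally passed through under their source name), and mapped columns that have disappeared, for example after a rename, are reported instead of breaking the load.

```python
from typing import Any, NamedTuple


class MappingResult(NamedTuple):
    row: dict[str, Any]  # values keyed by target column name
    unmapped: list[str]  # source columns the mapping does not cover (new fields)
    missing: list[str]   # mapped source columns absent from the row (renamed or dropped)


def apply_mapping(
    row: dict[str, Any], mapping: dict[str, str], passthrough: bool = False
) -> MappingResult:
    """Rename columns per `mapping` (source -> target) and flag schema drift."""
    out = {mapping[col]: value for col, value in row.items() if col in mapping}
    unmapped = sorted(col for col in row if col not in mapping)
    if passthrough:
        # Dynamic mapping: carry new fields through under their source name,
        # unless that name is already used by a mapped target column.
        for col in unmapped:
            out.setdefault(col, row[col])
    missing = sorted(col for col in mapping if col not in row)
    return MappingResult(out, unmapped, missing)


# Usage: "phone" is a new source column and "email" has disappeared.
mapping = {"id": "customer_id", "email": "email_address"}
result = apply_mapping({"id": 7, "phone": "555-0100"}, mapping, passthrough=True)
print(result.row)       # {'customer_id': 7, 'phone': '555-0100'}
print(result.unmapped)  # ['phone']
print(result.missing)   # ['email']
```

Returning the flagged columns rather than raising an error keeps the pipeline running while still surfacing the drift, so the unmapped and missing lists can feed the same alerting channel used for monitoring.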

Conclusion

When selecting the best ETL tool for PostgreSQL, it’s crucial to balance factors like usability, performance, security, and pricing. Whether you’re a beginner in data integration or an experienced user seeking a more powerful solution, the tools discussed in this article cater to a wide range of needs and budgets.

Choosing the right tool tailored to your specific requirements will streamline your data workflows, enhance operational efficiency, and improve data accuracy. Remember, the best tool for your business will not only support your current processes but also scale with your future data needs.

Ready to supercharge your PostgreSQL analytics? Explore Skyvia’s powerful, easy-to-use platform and see how seamless data integration can be for your business. Start with a free trial today!

FAQ for ETL Tools for PostgreSQL

Why do we need to integrate PostgreSQL with ETL tools?

Integrating PostgreSQL with ETL tools simplifies data extraction, transformation, and loading from multiple sources. ETL tools enhance automation, reduce manual errors, and improve the efficiency of managing complex data workflows.

Can PostgreSQL be used for data warehousing needs?

Yes, PostgreSQL is often used for data warehousing due to its robust querying capabilities, scalability, and support for large datasets. Features like table partitioning and indexing make it suitable for analytical workloads.

What are some key extensions for PostgreSQL ETL workflows?

Popular extensions include postgres_fdw (and other foreign data wrappers) for integrating external data sources, pg_partman for managing partitions, and pg_stat_statements for monitoring query performance during ETL operations.

What are the best ETL tools for PostgreSQL?

Top ETL tools for PostgreSQL include Skyvia, Apache Airflow, Talend, Hevo, and Integrate.io. Each offers unique features like automation, cloud integration, and user-friendly interfaces.