ETL architecture is the traffic control tower for your data: it routes, times, and guides each byte to the right destination. Inefficient ETL systems are a silent productivity killer for many businesses struggling with:

- Data silos.

- Lengthy processing times.

- Inconsistent data.

If your team wastes too much time resolving issues in the data pipeline, it’s a good idea to change the approach.

In this article, we’ll walk through ETL best practices and explore how to build a robust architecture that grows with your business. Whether you’re migrating to the cloud or scaling up, we’ve got you covered with real-world solutions to common ETL challenges like slow data flows, broken integrations, and scalability issues.

By the end, you’ll have a clear roadmap to:

- Build an agile and efficient ETL system that handles today’s data challenges.

- Cut down on bottlenecks, making your data move faster and more reliably.

- Harness cloud tools to scale your ETL architecture with ease.

Optimizing your ETL is a game changer for better, faster business decisions. Ready to dive in?

Table of contents

- What Is ETL Architecture?

- 12 ETL Architecture Best Practices

- Challenges in Building ETL Architecture

- Top Solutions for Better ETL Architecture

- ETL vs. ELT: Which Is Best for Your Architecture?

- Conclusion

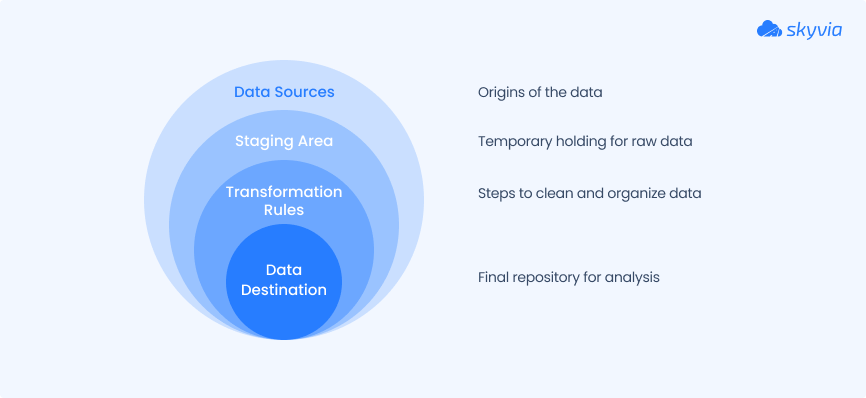

What Is ETL Architecture?

Think of it as the blueprint for how data moves within your business. It’s the behind-the-scenes work that gets the info from its source (a CRM, cloud storage, or API) to the destination, like a DWH or reporting tool.

ETL architecture ensures that everything is connected, organized, and flows smoothly.

Let’s consider how it works.

- Extract. The first step identifies the data and pulls it from internal and external sources, such as database tables, pipes, files, spreadsheets, and relational or non-relational databases.

- Transform. Once extracted, the data must be converted into a format that suits the target data warehouse. This step may include cleansing, joining, and sorting data.

- Load. The final step loads the transformed data into the target destination, like a data warehouse or a database. Once the data is in the DWH, it can be queried and used for analytics and business intelligence.
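To make the three steps concrete, here is a minimal sketch using only Python's standard library. The sample CSV, the `orders` table, and the function names are illustrative assumptions, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,not_a_number
3,carol,7
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and normalize names, cast amounts, skip rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would log or quarantine this row
        clean.append((int(row["id"]), row["name"].strip().title(), amount))
    return sorted(clean)

def load(rows, conn):
    """Load: write the prepared rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_etl(text, conn):
    """Run the three steps in order: extract, then transform, then load."""
    load(transform(extract(text)), conn)

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
run_etl(RAW_CSV, conn)
print(conn.execute("SELECT id, name, amount FROM orders ORDER BY id").fetchall())
# prints [(1, 'Alice', 10.5), (3, 'Carol', 7.0)]
```

The row with an unparseable amount never reaches the destination, which is the defining trait of ETL: data is cleaned before it is loaded.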

Modern Trends Impacting ETL Architecture

ETL isn’t static. As businesses move further into the cloud and deal with larger, more complex datasets, ETL work is changing with them.

The new trends are:

- Cloud-Native ETL. With it, companies gain scalable and cost-effective pipelines that grow in line with their needs, whether on AWS, GCP, or Azure.

- Real-Time Data. Forget the old-school batch processing. The future is about streaming ETL, where data is processed continuously as it arrives, enabling teams to work with real-time insights and stay ahead of the curve rather than waiting for reports to catch up.

- Serverless ETL. Businesses may no longer need to worry about the complexities of infrastructure with platforms like AWS Lambda and Google Dataflow. It’s about running jobs without managing servers, giving users more freedom and less hassle.

- No-Code ETL Solutions. The rise of tools like Skyvia changes the game. Such platforms let you set up, automate, and integrate data workflows without coding, so non-technical teams can manage processes quickly and flexibly.

12 ETL Architecture Best Practices

When we think about building a rock-solid ETL architecture, we need to ensure that data flows smoothly, efficiently, and is always ready for action. A great ETL pipeline is the foundation for making data-driven decisions quickly.

So, let’s break down 12 ETL architecture best practices that’ll help you build pipelines that work with your business, not against it. From reliable setups to tools that make life easier, we’ll give you actionable steps and real-world examples to ensure you’re set up for success in the long run.

1. Plan for Scalability from the Start

Building scalable ETL pipelines is a must. As your data grows, the system has to handle the increased volume without falling over.

Why it matters: Scalability allows your ETL system to grow with your data needs, keeping things fast and efficient.

Example: Teesing has harnessed Skyvia’s cloud-based integration to scale its ETL processes effortlessly. By automating data workflows between various applications and databases, they’ve eliminated manual tasks, reduced errors, and ensured real-time data availability across their systems.

2. Automate Data Transformation Where Possible

This approach can save companies tons of time and reduce human error. By using ETL platforms, you can set up workflows that automatically transform data as it flows through the pipeline.

Why it matters: Automated transformations streamline the process, ensuring consistency and speeding up data availability.

How to do it: Use Skyvia’s transformation features to automatically clean and format data without manual intervention.
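For teams that script their own pipelines, the same idea can be sketched as a fixed list of transformation steps applied to every record. This is plain Python, not Skyvia's transformation feature; the step functions, field names, and country aliases are hypothetical.

```python
from functools import reduce

def strip_strings(record):
    """Remove surrounding whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}

def lowercase_email(record):
    """Store emails in one canonical form."""
    return {**record, "email": record["email"].lower()}

def normalize_country(record):
    """Map a few hypothetical aliases to codes; uppercase anything else."""
    aliases = {"usa": "US", "united states": "US", "uk": "GB"}
    country = record["country"]
    return {**record, "country": aliases.get(country.lower(), country.upper())}

# The order matters: whitespace is stripped before values are compared.
TRANSFORM_STEPS = [strip_strings, lowercase_email, normalize_country]

def apply_transformations(record, steps=TRANSFORM_STEPS):
    """Run every step on the record, feeding each result into the next step."""
    return reduce(lambda rec, step: step(rec), steps, record)

print(apply_transformations({"email": " Ann@Example.COM ", "country": " usa "}))
# prints {'email': 'ann@example.com', 'country': 'US'}
```

Because the steps live in one list, adding or reordering a rule changes every run the same way, which is where the consistency comes from.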

3. Use Cloud-Based Solutions for Flexibility

Cloud-based tools offer more flexibility and cost savings than on-premise ones. Whichever provider you choose (AWS, Google Cloud, or Azure), migrating your ETL processes to the cloud makes scaling and maintenance easier.

Why it matters: Cloud platforms are flexible, reliable, and cost-saving, with minimal infrastructure management.

Example: Bakewell Cookshop has used Skyvia’s cloud-based integration to streamline its ETL processes. By connecting multiple data sources (including their BigCommerce platform and cloud storage) without worrying about maintaining on-premise infrastructure, they’ve saved time and resources.

4. Focus on Data Quality at Every Stage

Data quality can be a major headache in ETL. If you want your data to be trustworthy and useful at every stage of the pipeline, you must be on top of:

- Validation.

- Cleaning.

- Consistency.

Why it matters: Without clean, consistent data, you’re essentially flying blind. Bad data leads to bad decisions, and nobody wants that.

How to do it: Platforms like Skyvia make it easy by providing built-in features to clean and validate data while it’s being extracted and transformed. This way, you can ensure everything is in tip-top shape before it reaches its final destination.
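If you validate in code rather than in a platform, a check like the following can sit between extraction and loading. It is a minimal sketch: the field names (`id`, `email`, `amount`) and the rules themselves are assumptions chosen for illustration.

```python
import re

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record):
    """Return a list of problems found in one record (an empty list means valid)."""
    problems = []
    if not record.get("id"):  # note: this also treats id 0 as missing
        problems.append("missing id")
    if not EMAIL_RE.match(record.get("email") or ""):
        problems.append("invalid email")
    try:
        if float(record.get("amount")) < 0:
            problems.append("negative amount")
    except (TypeError, ValueError):
        problems.append("amount is not a number")
    return problems

def split_valid_rejected(records):
    """Separate clean records from rejected ones, keeping the reasons for rejection."""
    valid, rejected = [], []
    seen_ids = set()
    for record in records:
        problems = validate(record)
        if record.get("id") in seen_ids:
            problems.append("duplicate id")
        if problems:
            rejected.append((record, problems))
        else:
            seen_ids.add(record["id"])
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with their reasons, instead of silently dropping them, gives the team something to review and fix at the source.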

5. Optimize Data Extraction for Efficiency

Unoptimized extraction is slow. Use incremental extraction to pull only the data that is new or updated rather than re-pulling everything.

Why it matters: Incremental extraction reduces unnecessary load on source systems and speeds up the pipeline.

Example: A company that moves from full batch extraction to incremental ETL, using its ETL system’s real-time sync, may see a drastic improvement in processing time and data accuracy.
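One common way to implement incremental extraction is a "high-water mark": remember the latest change timestamp you have seen and ask the source only for rows changed after it. The sketch below assumes a hypothetical `customers` table with an `updated_at` column stored as ISO-8601 text, and uses SQLite as a stand-in source.

```python
import sqlite3

def setup_source(conn):
    """Create a small hypothetical source table for the demo."""
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [
            (1, "Alice", "2024-01-01T10:00:00"),
            (2, "Bob", "2024-01-02T09:30:00"),
        ],
    )

def extract_incremental(conn, watermark):
    """Return rows changed after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # ISO-8601 timestamps in one format sort correctly as plain strings.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark

source = sqlite3.connect(":memory:")
setup_source(source)
rows, watermark = extract_incremental(source, "1970-01-01T00:00:00")  # first run: everything
rows, watermark = extract_incremental(source, watermark)              # second run: nothing new
print(rows)  # prints []
```

The watermark has to be persisted between runs (in a file or a control table), and the approach relies on the source updating `updated_at` reliably on every change.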

6. Implement Monitoring and Alerts

Monitor your ETL systems to catch errors early. Set up automated alerts that notify users when a process fails or a data pipeline slows down.

Why it matters: Monitoring helps teams avoid data downtime. It ensures that your systems stay up and running without manual checks.

How to do it: Most ETL platforms offer dashboards for real-time monitoring and custom alerts, so users can stay on top of their ETL processes.
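For hand-written jobs, the same idea can be approximated with a small wrapper that times each run and raises an alert on failure or slowness. This is a sketch under assumptions: `run_monitored` and its parameters are hypothetical names, and `alert` is any callable you supply (for example, one that posts to a chat channel or sends an email).

```python
import logging
import time

logger = logging.getLogger("etl.monitor")

def run_monitored(job_name, job, alert, max_seconds=60.0):
    """Run job(), log its duration, and call alert(message) on failure or slowness."""
    started = time.monotonic()
    try:
        result = job()
    except Exception as exc:
        alert(f"{job_name} failed: {exc}")
        logger.exception("%s failed", job_name)
        raise  # re-raise so a scheduler still sees the failure
    elapsed = time.monotonic() - started
    logger.info("%s finished in %.2fs", job_name, elapsed)
    if elapsed > max_seconds:
        alert(f"{job_name} was slow: {elapsed:.2f}s (limit {max_seconds:.2f}s)")
    return result

# Usage: collect alerts in a list here; swap in a real notifier in production.
alerts = []
run_monitored("nightly_load", lambda: "ok", alerts.append, max_seconds=300)
```

Re-raising after the alert keeps the failure visible to whatever schedules the job, so the alert adds to the normal error handling rather than replacing it.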

7. Leverage Parallel Processing for Speed

Parallel processing runs multiple data transformations or loads at the same time, which can drastically improve the speed of ETL pipelines.

Why it matters: Speed is critical when you’re working with large datasets. Parallel processing ensures your system handles heavy loads efficiently.

How to do it: Many ETL platforms handle parallel processing automatically when syncing or transferring large amounts of data.
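In custom Python pipelines, a thread pool is one simple way to process independent partitions at the same time. The partition layout and the `transform_partition` function below are hypothetical placeholders for real per-partition work.

```python
from concurrent.futures import ThreadPoolExecutor

def transform_partition(partition):
    """Hypothetical per-partition work: here, just total the amounts."""
    name, amounts = partition
    return name, sum(amounts)

def run_parallel(partitions, max_workers=4):
    """Process independent partitions concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(transform_partition, partitions))

print(run_parallel([("eu", [1, 2, 3]), ("us", [10, 20])]))
# prints [('eu', 6), ('us', 30)]
```

Threads mostly help when the work waits on I/O, such as API calls or database reads. For CPU-heavy transformations in Python, `ProcessPoolExecutor` from the same module is usually the better fit.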

8. Choose the Right Data Storage Options

Make sure the destination (DWH or cloud storage) supports the types of queries you need to run.

Why it matters: Storing data in the wrong format or on the wrong system can slow down processing and make analysis harder.

How to do it: Choose a data warehouse like Snowflake or Google BigQuery for storing large, structured datasets, and leverage cloud storage like AWS S3 for more flexible, unstructured data.

9. Use Version Control for ETL Code

To ensure that workflows stay organized and any changes are easily tracked, the ETL scripts need to be versioned.

Why it matters: With version control, teams may roll back to previous versions of the ETL processes if something breaks. It keeps a clean record of how your processes evolve.

No more wondering where things went wrong or who made the last update.

How to do it: Set up Git repositories to store ETL scripts. Each time you make a change, commit it with a clear message.

Platforms like GitHub or GitLab offer collaboration features that make it easy to:

- Track changes.

- Work together.

- Manage code reviews.

This method keeps everything clean and helps ensure you’re always working with the most up-to-date version.

10. Document Your ETL Processes

Documenting your ETL processes helps everyone on your team understand the architecture, making it easier to maintain and troubleshoot.

Why it matters: Clear documentation reduces errors and improves collaboration between teams.

How to do it: Use documentation tools like Confluence or store the workflow diagrams in a shared folder.

11. Build a Strong Data Governance Framework

Data governance is all about implementing the proper controls. It:

- Keeps the data pipeline secure.

- Ensures privacy.

- Allows you to meet compliance standards.

Why it matters: With privacy regulations like GDPR tightening up, strong data governance is a must to avoid legal headaches and ensure you’re doing everything by the book.

How to do it: To get started:

- Set clear rules for who can access what data (access control).

- Track the origin and flow of data (data lineage).

- Keep detailed records of who did what and when (audit trails).

These simple steps will help you stay secure and compliant as the data moves through the pipeline.
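Two of the steps above, access control and audit trails, can be sketched in a few lines. The roles, dataset names, and in-memory log are hypothetical; real systems typically keep permissions in an identity or catalog service and write audit records to durable storage. Data lineage is not covered here.

```python
from datetime import datetime, timezone

# Hypothetical role-to-dataset permissions.
PERMISSIONS = {
    "analyst": {"sales"},
    "admin": {"sales", "patients"},
}

AUDIT_LOG = []  # stand-in for an append-only audit store

def read_dataset(user, role, dataset):
    """Check access for a role and record the attempt, allowed or not."""
    allowed = dataset in PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "dataset": dataset,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{user} ({role}) may not read {dataset}")
    return f"rows from {dataset}"
```

Logging denied attempts as well as successful ones is a deliberate choice: the refused requests are often the entries auditors ask about first.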

12. Plan for Data Security at Every Layer

From the moment data is extracted to when it’s loaded into a final destination, make sure it stays secure.

Why it matters: Data breaches can be costly and damaging. Security should be embedded in your ETL architecture from the start.

How to do it: Use

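- Encryption (AES-256 for data at rest, TLS for data in transit) to keep data protected both in storage and on the wire.

- Access controls (OAuth 2.0 for delegated authorization, SAML for single sign-on) to manage secure authentication and access.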

- Secure transfer protocols (HTTPS, FTPS, or SFTP) to ensure data is encrypted while being transferred across networks.

Challenges in Building ETL Architecture

Even the most well-designed ETL pipeline isn’t immune to failures. Many things, from sluggish performance to messy integrations with legacy systems, can go wrong if you’re not careful.

These challenges can feel overwhelming, especially when they all show up at once, but with the right tools and a solid plan, most of them are totally manageable.

Below are some of the biggest roadblocks teams run into and how to steer around them without breaking a sweat.

1. Data Latency

The headache. Delays between when data is created and when it becomes available for analysis. This is especially painful if your business relies on up-to-the-minute insights.

The fix. Use tools that support incremental loads or streaming ETL. Platforms like Skyvia, Fivetran, or Kafka can reduce the gap between data creation and availability by continuously syncing changes instead of waiting for the next batch.

2. Scalability

The headache. As your business grows, so does your data. And suddenly, your ETL setup that worked fine last year is crawling.

The fix. Design with scale in mind. Cloud-based platforms like Snowflake, BigQuery, and Skyvia let you scale computing and storage independently. Also, use parallel processing where possible to keep things fast as volumes grow.

3. Massive Data Volumes

The headache. Working with millions (or billions) of records can slow everything down (from extraction to load times).

The fix. Break the load into chunks. Use partitioning, indexing, and incremental extraction. Tools like Apache Spark, Airbyte, and Skyvia are built to handle large volumes without melting down.
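Chunking can be sketched as follows: rows are read lazily and written in fixed-size batches, so memory use stays flat no matter how large the source is. The `events` table, the chunk size, and SQLite as the destination are illustrative assumptions.

```python
import sqlite3
from itertools import islice

def chunked(iterable, size):
    """Yield lists of up to `size` items without reading the whole input at once."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk

def load_in_chunks(conn, rows, chunk_size=1000):
    """Insert rows batch by batch, committing after each batch; return the batch count."""
    conn.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT)")
    batches = 0
    for chunk in chunked(rows, chunk_size):
        conn.executemany("INSERT INTO events VALUES (?, ?)", chunk)
        conn.commit()
        batches += 1
    return batches

conn = sqlite3.connect(":memory:")
rows = ((i, f"event-{i}") for i in range(2500))  # generator: rows are produced on demand
print(load_in_chunks(conn, rows, chunk_size=1000))  # prints 3
```

Committing per batch also means a failure partway through loses at most one chunk of work, at the cost of the load no longer being a single all-or-nothing transaction.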

4. Legacy System Integration

The headache. Older systems weren’t built with modern data architecture in mind. Connecting them can be like forcing a square peg into a round hole.

The fix. Use ETL tools with pre-built connectors or API bridges. Skyvia, for example, connects older systems like QuickBooks Desktop or custom SQL servers to modern destinations without custom code. Where APIs don’t exist, you can still work with flat files or ODBC drivers.

5. Real-Time Processing Requirements

The headache. Stakeholders want real-time dashboards and alerts. But your ETL was designed for daily batch jobs.

The fix. Consider streaming ETL with platforms like Kafka, Google Dataflow, or Snowflake’s Snowpipe. If your use case doesn’t need true real-time, scheduled near-real-time syncs (every 5-15 minutes) via Skyvia or Stitch can hit the sweet spot between performance and cost.

Top Solutions for Better ETL Architecture

There’s no one-size-fits-all tool when we talk about ETL. But that’s good news. Whether you’re a startup trying to get dashboards up and running fast or an enterprise wrangling terabytes of data from 20 different systems, your ETL setup needs to fit your own workflow, not the other way around.

Below is a quick breakdown of some of the most popular ETL platforms out there.

| Tool | Best For | Key Features | Ease of Use | Pricing | Scalability |
|---|---|---|---|---|---|
| Skyvia | No-code automation for teams with mixed skill levels. | Integration, backup, data sync, SQL querying, 200+ connectors. | Drag-and-drop UI, beginner-friendly, no coding needed. | Free plan + usage-based. | Auto-scaling, cloud-native. |
| AWS Glue | Data engineers needing deep AWS integration. | Serverless, event-driven ETL, integration with S3, Redshift, Athena. | Requires Python/Scala scripting and AWS ecosystem knowledge. | Pay-as-you-go. | Highly scalable on AWS. |
| Fivetran | Fast deployment & managed pipelines. | Prebuilt connectors, automated schema migration, and strong ELT support. | Easy to set up, but limited customization. | Higher cost, per row. | Enterprise-grade. |
| Apache NiFi | Flow-based programming, complex routing needs. | Real-time processing, visual flow designer, robust data routing. | Visual but complex; has a learning curve. | Free (open source). | Scales horizontally. |
| Talend | Enterprises needing full control. | Advanced transformations, data quality tools, on-prem/cloud hybrid. | GUI with coding options, better for technical users. | Subscription model. | Scalable with resources. |
| Hevo Data | Startups and midsize businesses. | Real-time sync, minimal setup, integrations with BI tools. | Clean UI, quick to deploy, minimal setup needed. | Flat monthly pricing. | Scalable cloud model. |

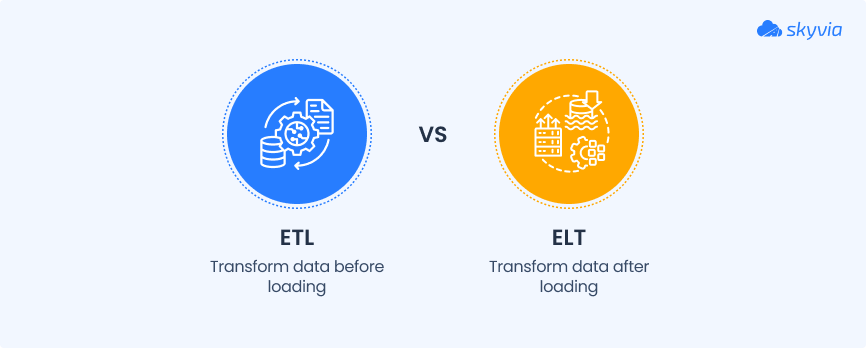

ETL vs. ELT: Which Is Best for Your Architecture?

When building a data pipeline, one of the first questions you’ll bump into is this: ETL or ELT? Same letters, different order. And that order makes a big difference.

Let’s break it down:

- ETL (Extract, Transform, Load) pulls data out of source systems, transforms it on the way in, and then loads it into a destination (like a data warehouse).

- ELT (Extract, Load, Transform) flips the script. It extracts raw data, loads it straight into the target system, and does the heavy lifting (transformation) once it’s already there.
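The ELT ordering can be illustrated with a small sketch: raw rows are landed untouched, and the cleanup then runs as SQL inside the destination. SQLite stands in for a cloud warehouse here, and the table names and sample rows are assumptions.

```python
import sqlite3

# Hypothetical raw extract: everything arrives as text, including one bad amount.
RAW_ROWS = [("1", " alice ", "10.50"), ("2", "BOB", "7"), ("3", "carol", "oops")]

def run_elt(conn, raw_rows):
    # Extract + Load: land the data as-is, with every column stored as text.
    conn.execute("CREATE TABLE raw_orders (id TEXT, name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)
    # Transform: clean and cast inside the destination, using its own SQL engine.
    conn.execute("""
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER) AS id,
               LOWER(TRIM(name)) AS name,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE amount GLOB '[0-9]*'  -- keep rows whose amount starts with a digit
    """)
    conn.commit()

conn = sqlite3.connect(":memory:")
run_elt(conn, RAW_ROWS)
print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
# prints [(1, 'alice', 10.5), (2, 'bob', 7.0)]
```

Unlike ETL, the unparseable row is still available in `raw_orders` after the run, so the transformation can be revised and replayed later without going back to the source.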

When to Use ETL

- Best suited when data transformations are complex and must occur before loading into the destination.

- Ideal for traditional relational databases that lack large-scale transformation capabilities.

- Fits teams that prioritize strict control over data quality throughout the pipeline.

- Effective for small to medium data volumes that require preparation prior to loading.

Common in: healthcare, banking, insurance (anywhere with strict compliance and structured workflows).

Tools: Skyvia, Talend, Informatica.

When to Use ELT

- Works best for large datasets that need to be loaded quickly before transformation.

- Ideal for modern, cloud-native data warehouses (e.g., Snowflake, BigQuery, Redshift) that support scalable in-warehouse processing.

- Enables faster time-to-value by making raw data available sooner.

- Supports analytics teams that prefer flexible modeling directly from raw data.

Common in: SaaS, e-commerce, and marketing (fast-moving environments where speed and flexibility rule).

Tools: Fivetran, dbt, Stitch, Snowflake (native features).

Which One Should You Use?

- ETL is a strong fit for traditional data stacks where teams need full control over data preparation before loading.

- ELT is better suited for cloud-based environments dealing with large volumes of raw data.

- ELT offers faster access to data and scales easily with modern platforms.

Tip: Some platforms (like Skyvia) are flexible enough to support both approaches, so you can start with one and evolve as your architecture grows.

Real-World ETL Use Cases

These aren’t just abstract scenarios. Real companies are using smart ETL architecture to solve real data headaches and unlock serious business value.

Fintech: Building Smarter Analytics at CashMe

What wasn’t working: CashMe, a fast-scaling fintech player, was drowning in siloed data from Microsoft Dynamics, marketing platforms, and more, making it tough to get the full picture for reporting.

How they fixed it: They brought in Skyvia to pull all those scattered data points into Snowflake, setting up smooth pipelines that plugged right into Power BI.

The result: Cleaner dashboards, real-time insights, and way less manual data wrangling. That meant faster decisions and better strategy across the board.

Cybersecurity: A Unified Customer View for TitanHQ

What wasn’t working: TitanHQ was juggling customer info across SugarCRM, Maxio, and support platforms. The team needed a reliable way to stitch it all together and use it to drive retention.

How they fixed it: With Skyvia, they automated the entire flow, pushing everything into Snowflake and linking it up with Tableau for reporting.

The result: No more hand-coded scripts. Just clean, synced data, ready to analyze. TitanHQ now has a full 360° view of its customers and is using it to keep clients happy and sticking around longer.

Retail: Redmond Inc. Tackles Inventory Chaos

What wasn’t working: Redmond Inc. runs over 10 brands, and they were struggling to sync order and inventory data between Shopify and their ERP (Acumatica). Stock levels were messy, and fulfillment was getting bumpy.

How they fixed it: They turned to Skyvia to handle data replication and real-time sync between systems.

The result: Inventory became accurate, orders flowed smoothly, and the team saved a ton of time (and headaches) previously spent fixing errors. Operational efficiency? Big time.

Healthcare: Keeping Patient Data in Sync

What wasn’t working: A healthcare provider needed all departments on the same page, literally. Patient records weren’t syncing in real-time, and care coordination was taking a hit.

How they fixed it: They rolled out a real-time ETL setup to constantly update records across systems.

The result: Everyone from nurses to admin had access to current patient data, leading to faster and more effective care.

E-commerce: Smarter Marketing, Less Guesswork

What wasn’t working: An online retailer wanted to run smarter campaigns, but customer data lived in too many places: their CRM, email tools, and analytics dashboards didn’t talk to each other.

How they fixed it: They used an ETL process to funnel everything into one central warehouse, which enabled better segmentation, targeting, and personalization.

The result: Engagement and conversions went up. And marketing had real numbers to work with instead of best guesses.

Conclusion

ETL architecture isn’t just about moving data from A to B. It’s about building something reliable, flexible, and smart enough to keep up with your business as it grows. That covers real-time syncs and cloud scaling as well as cleaning up messy data and integrating legacy systems. Every step matters.

We covered a lot:

- Why getting your architecture right saves time, money, and many headaches.

- 12 field-tested best practices to keep your pipelines clean and scalable.

- Real-world case studies that prove this stuff works.

- A toolkit of top platforms (like Skyvia) that make the heavy lifting feel lighter.

So what now?

If you’re ready to stop duct-taping the data workflows together and start building something future-proof, it’s time to give Skyvia a spin. Whether you need no-code simplicity or enterprise-level horsepower, it’s got your back because clean data leads to clean decisions.

F.A.Q. for ETL Architecture Best Practices

What is the difference between ETL and ELT architectures?

ETL transforms data before loading it; ELT loads raw data first and transforms later using the destination system’s power. ELT is best for modern, cloud-based setups handling big data.

How can I scale my ETL architecture to handle large data volumes?

Use parallel processing, distributed systems, and cloud platforms like AWS or Google Cloud. Break workflows into modular stages and add partitioning where needed.

What are common challenges in ETL architecture, and how do I overcome them?

Scalability, legacy integration, latency, and messy data. Use automation, cloud-native tools, strong governance, and monitoring to stay in control.

What tools can help improve my ETL architecture?

Skyvia, AWS Glue, Apache NiFi, and Fivetran are top options. Choose based on the use case, skill level, and how much control you need.