Moving information from one system to another might seem like a straightforward IT task, but it’s actually one of the trickiest and most important jobs for any business today. Whether you’re migrating to the cloud, changing your CRM, or cleaning up old legacy systems, choosing the right solution can save countless hours and prevent costly mistakes. With so many data migration tools available, including cloud-based, on-premises, and open-source ones, it’s tough to figure out which one genuinely fits your needs in 2025.

That’s why we put together this guide. It walks you through 10 of the most popular tools, highlighting what they do best, where they fall short, and what types of projects they’re made for.

Table of contents

- What Is Data Migration?

- What are Database Migration Tools?

- Different Types of Database Migration Tools

- 4 Best Cloud-based Migration Tools

- Top 3 On-premise Migration Tools

- Top 3 Open Source Migration Tools

- How to Select the Right Data Migration Software?

- Data Migration Best Practices

- Conclusion

What Is Data Migration?

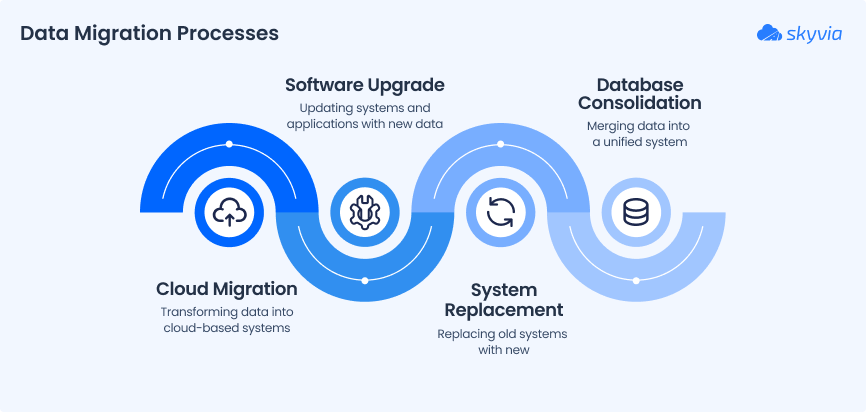

Moving information from one platform, format, or storage location to another is called “migration.” We use it during:

- Cloud migration.

- Software upgrade.

- System replacement.

- Consolidating multiple databases into a single source of truth.

Sounds simple, but it means reformatting, validating, cleaning, and securing data, especially when moving between platforms that don’t speak the same “language.”

Done right, such a process helps users streamline operations, reduce redundancy, and improve data accuracy.

Done wrong, it leads to broken systems, lost data, and a lot of trouble. That’s why having the right tools and a well-thought-out plan matters more than ever.

What are Database Migration Tools?

Database migration tools are software solutions that move data between different databases or platforms quickly, safely, and with minimal disruption. Whether you’re upgrading to a newer system, switching to a different database engine, or moving from on-prem to the cloud, they take the heavy lifting off your plate.

These systems handle many tasks, from schema conversion and data transfer to validation and synchronization. Many also provide users with automation, scheduling, and monitoring features to avoid information loss, downtime, or compatibility issues.
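To make the schema conversion step more concrete, here is a minimal sketch of how a tool might translate column types from one engine to another. The type map, the function names, and the `customers` table are assumptions made up for illustration; real tools use much larger, engine-specific conversion rules.

```python
# Illustrative MySQL -> PostgreSQL type map (an assumption for this sketch,
# not an exhaustive or tool-specific conversion table).
TYPE_MAP = {
    "TINYINT(1)": "BOOLEAN",
    "INT": "INTEGER",
    "DATETIME": "TIMESTAMP",
    "DOUBLE": "DOUBLE PRECISION",
    "LONGTEXT": "TEXT",
}


def convert_column(name: str, source_type: str) -> str:
    """Return a target column definition for one source column."""
    normalized = source_type.strip().upper()
    # Types with no entry in the map (e.g. VARCHAR(255)) pass through unchanged.
    target_type = TYPE_MAP.get(normalized, normalized)
    return f"{name} {target_type}"


def convert_table(table: str, columns: list[tuple[str, str]]) -> str:
    """Build a CREATE TABLE statement for the target database."""
    body = ", ".join(convert_column(name, col_type) for name, col_type in columns)
    return f"CREATE TABLE {table} ({body});"


# Hypothetical source table definition.
ddl = convert_table(
    "customers",
    [
        ("id", "int"),
        ("active", "tinyint(1)"),
        ("email", "varchar(255)"),
        ("created_at", "datetime"),
    ],
)
print(ddl)
```

Keeping the mapping in a plain dictionary makes it easy to review and extend before a migration runs, which is roughly what the schema conversion reports in commercial tools let you do.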

Depending on the project, you might need something lightweight and simple, or something enterprise-grade and deeply customizable. That’s where understanding the different types of tools matters.

Different Types of Database Migration Tools

Not all migration software is built in the same way. Depending on each business’s setup and goal, companies choose a solution that matches their infrastructure and resources.

- Cloud-based systems are ideal for teams that want a quick setup, automated workflows, and scalability without worrying about hardware or updates.

- On-premise software is perfect for businesses with strict security requirements, complex legacy systems, or limited internet access.

- Open-source tools are often free to use but may require more technical expertise to set up and maintain.

Let’s walk through the top tools in each category to help you find the best fit.

4 Best Cloud-based Migration Tools

Cloud-based migration software is a go-to choice for modern teams that require:

- Flexibility.

- Speed.

- Minimal setup.

Since these tools run in the cloud, users don’t have to worry about installation, updates, or infrastructure; they just connect data sources and let the tool handle the rest.

They’re handy for SaaS-heavy environments, distributed teams, and businesses scaling quickly.

Most offer automation, scheduling, and real-time syncing, making them great for ongoing data workflows, not just one-time transfers.

Next, we’ll look at four of the most popular cloud-based migration tools in 2025: Skyvia, Fivetran, Xplenty, and AWS Data Pipeline.

Skyvia

Skyvia is a no-code cloud data integration platform that supports:

- ETL.

- ELT.

- Reverse ETL.

- Replication.

- Synchronization.

- Data Flow

- Control Flow.

It provides over 200 connectors, including MySQL, Salesforce, HubSpot, Google Sheets, and many more.

Skyvia’s clean interface and drag-and-drop logic make it easy for non-technical users while offering advanced features for more complex pipelines.

It’s perfect for businesses that want fast results without relying on developers. Plus, its cloud-based infrastructure means users can manage everything from a browser. The tool offers a flexible range of pricing plans, starting with a free tier for light use, followed by paid plans beginning at $79/month. Higher-tier options are available for businesses needing more rows, connectors, or advanced scheduling.

Fivetran

Fivetran is a fully managed ELT tool built for speed and reliability. It connects with 500+ sources and automatically syncs data into the destination warehouse or database.

Once set up, it handles schema changes and pipeline maintenance with little human input.

Fivetran is a good choice for data teams that want hands-off syncing and high-volume reliability, but it comes at a premium price, especially at scale.

Fivetran’s pricing is based on monthly active rows (MAR), meaning the number of new or updated records in a given month. Plans start around $300/month, but the cost scales quickly with usage. A free plan is available for light use (up to 500K MAR/month).

Xplenty

Xplenty, now part of the Integrate.io platform, is a user-friendly ETL tool that helps businesses move data between cloud sources, databases, and SaaS apps. It balances simplicity and customization with a visual interface, low-code options, and built-in monitoring.

It’s a solid choice for teams that want powerful workflows without the infrastructure headache.

The platform offers custom pricing based on data volume and connector usage, with most packages starting around $1200/month. A free trial is available.

AWS Data Pipeline

AWS Data Pipeline is Amazon’s native tool for moving and transforming data within the AWS ecosystem. It integrates well with services like S3, Redshift, RDS, and EMR, and supports complex job scheduling.

While it’s extremely powerful, it’s more suited for teams already familiar with AWS architecture and comfortable managing infrastructure-as-code.

AWS Data Pipeline charges based on pipeline activity. It starts at $1/month per pipeline, but costs vary depending on frequency, region, and AWS resource usage.

Pros of Cloud-based Migration Tools

- Quick to deploy. No installation or local setup is required.

- Scalable and flexible. Easily handle growing data needs across multiple cloud platforms.

- Automation-friendly. Most offer built-in scheduling, monitoring, and error handling.

- Accessible from anywhere. Great for distributed teams and remote operations.

- User-friendly interfaces. Many support no-code/low-code workflows for business users.

- Reduced infrastructure maintenance. The vendor manages uptime, updates, and scalability.

Cons of Cloud-based Migration Tools

- They can get expensive at scale. Especially for tools that charge by data volume or usage.

- Limited control. Less customization compared to self-hosted or code-first solutions.

- Internet-dependent. Relies on strong and stable connectivity for large transfers.

- Vendor lock-in risk. Migrating away from a cloud-based solution later can be tricky.

- Less suited for sensitive on-premise-only environments. Not ideal for companies with strict data residency or security regulations.

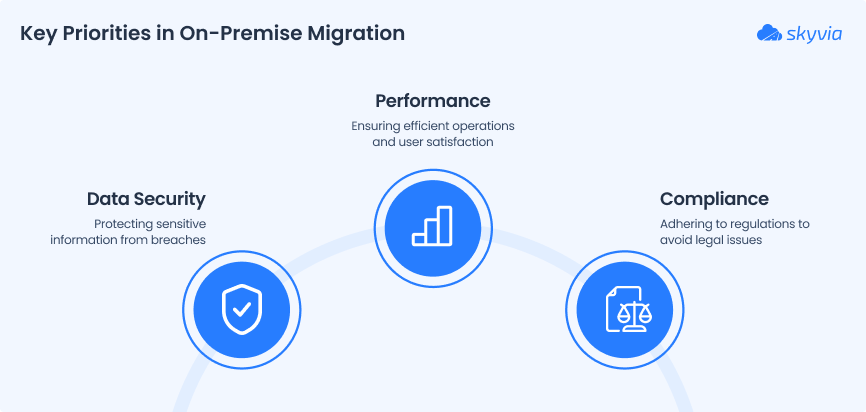

Top 3 On-premise Migration Tools

While cloud-based tools are popular for their flexibility, many businesses, especially large enterprises or those in regulated industries, still prefer on-premise migration tools. These platforms are installed and managed locally, offering more control over:

- Data Security.

- Performance.

- Compliance.

They’re particularly useful when dealing with sensitive legacy systems or strict internal policies that prevent data from moving through the cloud. Though they require more setup and IT involvement, their robustness and customizability make them a solid choice for complex or high-stakes migrations.

Three of the most widely used on-premise data migration tools in 2025 are Informatica PowerCenter, Talend Data Fabric, and Oracle Data Integrator.

Informatica PowerCenter

Informatica PowerCenter is a powerful, enterprise-grade data integration platform known for its stability, scalability, and broad connector support. It is built for large, mission-critical migration projects that require high availability and strong governance.

PowerCenter is packed with tools for:

- Transformation.

- Profiling.

- Workflow design.

- Performance monitoring.

Custom pricing is based on enterprise requirements and deployment size. Generally, it starts in the five-figure range annually.

Talend Data Fabric

Talend Data Fabric bundles several products into one data integration platform. It connects to most data sources and lets you manage data both on-site and in the cloud.

It offers data cleaning, data governance, and data integration features, and supports over 1,000 connectors.

The pricing starts at $1,170/month, depending on the edition and deployment type. An enterprise plan is available for large-scale deployments.

Oracle Data Integrator (ODI)

Oracle Data Integrator is a general-purpose data integration platform. It can connect to multiple sources to perform tasks like:

- Data synchronization.

- Transformation.

- Quality management.

- Data loading across heterogeneous systems.

As an Oracle product, it also integrates more easily with other Oracle products, such as Oracle GoldenGate for real-time data replication and Oracle Database services.

It provides documentation and an extensive knowledge base for users getting started with the platform. However, setup and customization often require skilled developers or database administrators.

Oracle doesn’t offer a free version of ODI. The Oracle Data Integrator Enterprise Edition is typically priced at around $23,000–30,000 per processor license, plus additional support and maintenance fees. Precise pricing depends on your Oracle licensing agreement.

Pros of On-premise Migration Tools

- Full data control. Everything stays behind your firewall, which is ideal for highly sensitive environments.

- Better compliance. Perfect for industries with strict regulatory or data residency requirements.

- Strong performance. Optimized for high-throughput, enterprise-grade workloads.

- Legacy system compatibility. Designed to work with older infrastructure or customized internal systems.

- Robust monitoring and auditing. Advanced tracking, logging, and error-handling features built in.

Cons of On-premise Migration Tools

- Expensive to implement. High upfront licensing, hardware, and maintenance costs.

- Slower to deploy. Installation and setup can be complex and time-consuming.

- Requires IT resources. Ongoing support, upgrades, and system maintenance depend on in-house teams.

- Less flexible. Scaling up or adapting to new tools often involves more manual work.

- Not cloud-native. May lack seamless integration with cloud apps or hybrid environments without customization.

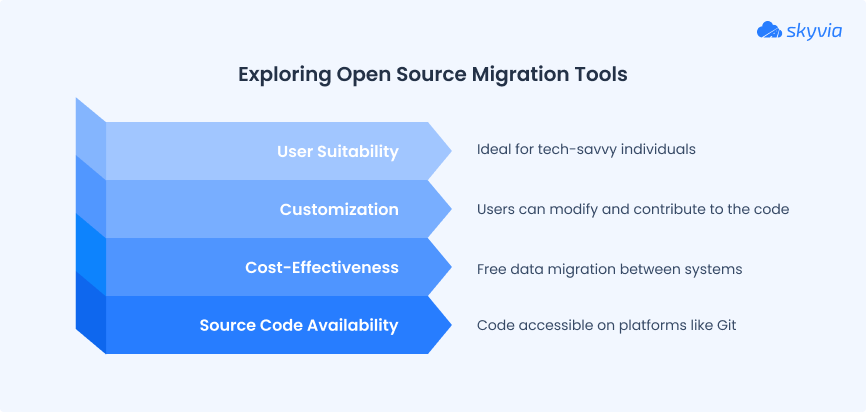

Top 3 Open Source Migration Tools

Open-source software is driven by developer communities that work together to create and improve the tools. It appeals to teams that prefer:

- Complete control.

- Transparency.

- The ability to customize workflows without vendor lock-in.

The source code of these tools is typically available in a public Git repository. Some commercial products also build on this code as the foundation for their software.

Open-source tools let you migrate data between different systems with no license cost. Users can also modify the code or contribute to it.

These tools suit technical teams who can read the source code and implement changes when required.

Let’s look at three popular open-source database and data migration tools: Apache NiFi, Airbyte, and Apache Airflow.

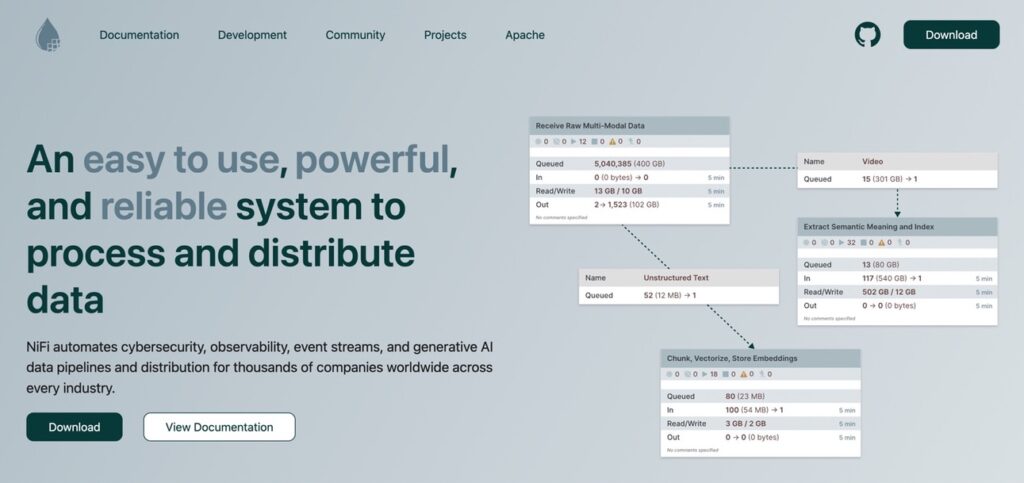

Apache NiFi

Apache NiFi is a robust data flow management tool that supports real-time and batch data movement between systems. It features a drag-and-drop interface, data provenance tracking, and fine-grained control over flow behavior.

The user interface represents each flow as a directed graph that is easy to understand, modify, and monitor. Flows can be changed at runtime, which makes the tool highly configurable.

It’s a good fit for teams who want to automate complex data workflows.

It’s free and open-source. Paid support is available through Cloudera.

Airbyte

Airbyte is a fast-growing open-source ELT tool that makes data integration accessible and community-driven. It offers over 300 connectors and allows users to build and deploy new ones easily. Airbyte is highly modular, supports cloud or local deployment, and integrates well with modern data stacks.

The tool comes in an open-source version and a commercial cloud version (Airbyte Cloud) that starts at around $2.50/credit, depending on usage.

Apache Airflow

Apache Airflow is an open-source orchestration tool widely used for managing complex data pipelines. It’s not a data migration tool per se, but it’s often used to schedule and monitor ETL/ELT jobs. Developers love its Python-based Directed Acyclic Graph (DAG) structure, flexibility, and integrations with cloud and on-prem tools.

It can also connect to popular data sources, which makes it possible to orchestrate migrations between different databases.
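To illustrate what a DAG buys you, here is a small sketch that orders migration steps by their dependencies. It deliberately uses only Python’s standard `graphlib` module rather than the Airflow API, and the step names are hypothetical; it shows the idea behind DAG-based scheduling, not how an Airflow pipeline is actually declared.

```python
from graphlib import TopologicalSorter

# Hypothetical migration steps, each mapped to the steps it depends on.
dependencies = {
    "extract_source": set(),
    "backup_target": set(),
    "clean_data": {"extract_source"},
    "load_target": {"clean_data", "backup_target"},
    "verify_counts": {"load_target"},
}


def run_order(deps: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order in which every step follows its dependencies."""
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
for step in order:
    print("run:", step)
```

Because the graph is acyclic, a scheduler can always find such an order, and steps that don’t depend on each other (here, the extract and the backup) can run in parallel.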

The solution is free and open-source. Managed services are available via providers like Astronomer and Google Cloud Composer.

Pros of Open Source Migration Tools

- Free to use. No license fees, making them ideal for startups and budget-conscious teams.

- Highly customizable. Developers can tweak the code, create custom connectors, and build tailored workflows.

- Community-driven. Backed by active open-source communities offering plugins, updates, and shared solutions.

- Flexible deployment. Can be hosted on-premise, in the cloud, or in hybrid environments.

- Ideal for tech-savvy teams. Complete control over pipeline behavior, scheduling, and orchestration.

Cons of Open Source Migration Tools

- Requires technical skills. Not beginner-friendly; setup and maintenance often demand developer involvement.

- Limited user interface. Most tools prioritize flexibility over visual, drag-and-drop features.

- No official support. Users are on their own for debugging or scaling unless using a managed service.

- Integration gaps. May lack pre-built connectors or require additional tools to cover full ETL needs.

- More time-consuming to configure. Expect longer implementation cycles compared to commercial platforms.

How to Select the Right Data Migration Software?

Every business needs smooth, efficient, and secure data transfer, but choosing the appropriate software can be a challenge. With so many options available, it’s essential to weigh your specific needs, business size, and long-term goals.

Organizations have to look at factors like performance, integration capabilities, and security to make sure the tool fits their technical requirements and budget.

The perfect migration software should meet your current needs and scale as the business grows.

To help you navigate through the options, let’s break down some key aspects to consider before choosing the right one.

Performance at Scale

When migrating large amounts of data, performance becomes critical. Whether you’re moving petabytes of data or syncing real-time updates across systems, the tool you choose must handle high-volume transfers without issues.

Look for software that offers batch processing, parallel data transfer, and load balancing to optimize performance.
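As a rough illustration of batch processing combined with parallel transfer, the sketch below splits rows into batches and hands them to a small thread pool. The `transfer_batch` function is a hypothetical stand-in for a real bulk write to the target system, and the batch size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def make_batches(rows: list, batch_size: int) -> list[list]:
    """Split rows into consecutive batches of at most batch_size items."""
    return [rows[i:i + batch_size] for i in range(0, len(rows), batch_size)]


def transfer_batch(batch: list) -> int:
    """Hypothetical stand-in for a bulk insert into the target system."""
    # A real implementation would write the batch here and raise on failure.
    return len(batch)


def parallel_transfer(rows: list, batch_size: int = 1000, workers: int = 4) -> int:
    """Transfer all batches using a thread pool and return the number of rows moved."""
    batches = make_batches(rows, batch_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        moved = list(pool.map(transfer_batch, batches))
    return sum(moved)


rows = [{"id": i} for i in range(10_500)]
total = parallel_transfer(rows)
print(total)  # 10500
```

Threads suit this kind of work because transfers are usually network-bound. The right batch size and worker count depend on what the source and target systems can absorb, so treat the numbers here as placeholders.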

It’s also essential to consider how the tool will perform with growing data volumes as the business expands. Tools that offer cloud-native architecture tend to scale better and provide elastic performance adjustments as needed.

Integration Flexibility

How well does the software connect to your existing systems and other third-party solutions? The more cloud apps, on-prem databases, or CRM platforms your migration tool can integrate with, the better.

Tools supporting multiple connectors, APIs, or custom integrations give users more options and flexibility.

If data is spread across multiple systems (e.g., Salesforce, MySQL, OneDrive), users need a solution that can easily pull data from various sources and push it to their target system.

Ease of Use

Data migration can get complicated, but it doesn’t have to be. Usability is key, especially for non-tech users.

Look for software with drag-and-drop interfaces, no-code or low-code options, and pre-built templates that make setup and configuration easy. The best data migration software will help streamline the process, not add to the complexity.

Whether you’re a small team or running an enterprise-scale migration, ease of use can significantly reduce deployment time and help ensure adoption across the team.

Security and Governance

When working with sensitive information, security and governance are must-haves. Ensure that the migration process doesn’t expose data to risks.

Opt for tools that offer encryption, secure connections, and compliance with regulations like GDPR, HIPAA, and CCPA.

The migration platform must also have strong audit logs, access controls, and data masking features to control who can view and edit the information. Always choose a tool that prioritizes security to avoid costly data breaches or compliance violations.
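Data masking can be pictured with a short sketch like the one below, which hides most of an email address and replaces an identifier with a keyed hash before the record leaves the source system. The field names, the key, and the masking rules are assumptions for illustration only; this is not a compliance-grade scheme, and real platforms offer configurable masking policies.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Keep the domain, hide the local part except its first character."""
    local, _, domain = email.partition("@")
    if not domain:
        return "***"
    return f"{local[:1]}***@{domain}"


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a truncated HMAC-SHA256 digest (same input, same output)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_record(record: dict, key: bytes) -> dict:
    """Return a masked copy of the record; the original is left untouched."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["national_id"] = pseudonymize(record["national_id"], key)
    return masked


# Hypothetical record and demo key.
record = {"id": 7, "email": "jane.doe@example.com", "national_id": "123-45-6789"}
masked = mask_record(record, key=b"demo-key")
print(masked["email"])  # j***@example.com
```

Using a keyed hash keeps the pseudonym stable across runs, so masked records can still be joined on that field in a test environment.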

Data Migration Best Practices

To ensure a successful data migration, it’s essential to follow these best practices throughout the process:

- Plan Thoroughly. Start by strategizing a detailed migration plan. Identify potential constraints and lay out a clear diagram of the data sources and destinations for better understanding.

- Address Data Issues Early. Before migration, carefully examine information for any quality issues, such as duplicates, missing values, or outdated records. Cleanse or transform the data as needed to avoid carrying over errors to the new system.

- Test with a Replica. Always test the migration on a replica of the target system before full implementation to identify potential issues and fine-tune the approach without risking the actual data.

- Backup Data. Always create a complete backup of data before migrating. A backup ensures you can recover quickly if something goes wrong during the migration.

- Verify Storage Capacity. Ensure the target system has sufficient storage space to accommodate all the migrated data. Double-check that the storage size aligns with the volume of data you’re moving.

- Break Down Data into Chunks. If there are network constraints, consider breaking the data into smaller chunks to avoid performance bottlenecks and to facilitate smoother migration.

- Post-Migration Review. After the migration is complete, reassess the data to confirm that nothing was lost and that everything was transferred accurately. Run integrity checks to confirm all records were moved as expected.
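Two of the practices above, moving data in chunks and running a post-migration integrity check, can be sketched together. The example uses two in-memory SQLite databases as stand-ins for the source and target; the `customers` table, its columns, and the chunk size are assumptions chosen for illustration.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection) -> tuple[int, str]:
    """Return (row_count, checksum) for the customers table, reading rows in id order."""
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute("SELECT id, name, email FROM customers ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def migrate_in_chunks(source, target, chunk_size: int = 100) -> int:
    """Copy rows chunk by chunk, paging on the id column."""
    last_id = 0  # assumes ids are positive integers
    copied = 0
    while True:
        chunk = source.execute(
            "SELECT id, name, email FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        ).fetchall()
        if not chunk:
            break
        target.executemany(
            "INSERT INTO customers (id, name, email) VALUES (?, ?, ?)", chunk
        )
        target.commit()  # commit per chunk so completed chunks survive a later failure
        last_id = chunk[-1][0]
        copied += len(chunk)
    return copied


SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(i, f"user{i}", f"user{i}@example.com") for i in range(1, 251)],
)
source.commit()

copied = migrate_in_chunks(source, target, chunk_size=100)
matches = table_fingerprint(source) == table_fingerprint(target)
print(copied, matches)  # 250 True
```

Comparing both a row count and a checksum catches missing rows as well as rows whose values changed in transit. For very large tables, the same comparison would typically be done per chunk or per key range rather than over the whole table at once.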

Conclusion

The right data migration tool ensures a smooth, efficient transition from one system to another. Whether you’re working with cloud-based, on-premise, or open-source platforms, each type offers unique advantages based on business needs and technical setup.

Understanding requirements like performance, integration flexibility, and security helps you select the best tool for the job. Following best practices, such as thorough planning, data quality checks, and testing, will further improve the odds of a successful migration project.

F.A.Q. for Top Migration Tools

How do I choose the right data migration software for my business?

When choosing data migration software, consider factors like ease of use, scalability, performance at scale, and compatibility with your existing systems. Cloud-based tools may be ideal for scalability and ease of deployment, while on-premise solutions might be better suited for highly secure or legacy environments.

What is the role of data transfer tools in data migration?

Data transfer tools facilitate the actual movement of data between systems, whether it’s between databases, cloud storage, or other applications. These tools ensure data is transferred efficiently, accurately, and securely, often with minimal manual intervention.

Can database migration tools handle large datasets effectively?

Yes, modern database migration tools handle large datasets effectively. They often include features such as parallel processing, incremental data migration, and automated error handling to ensure the data is moved without disruption, even for large or complex databases.

How do cloud data migration tools compare to traditional database migration tools?

Cloud data migration tools are typically more flexible and scalable. Unlike traditional database migration tools, which are often designed for on-premise or legacy systems, cloud tools can handle distributed environments and integrate seamlessly with modern cloud applications.