Summary

- Skyvia – A practical fit for warehouse-centric stacks that need integration, sync, backups, and the occasional CSV handled without turning data plumbing into a full-time role.

- Fivetran – A solid choice when ingestion needs to run quietly at scale, and cost is secondary to not thinking about pipelines once they’re live.

- Hevo Data – Works well when speed matters more than depth, and the goal is to get SaaS and database data into the warehouse with minimal setup.

- Airbyte – Appeals to teams who want freedom and flexibility, and are comfortable trading convenience for control by running and shaping things themselves.

- Estuary – Built for cases where data freshness matters, not just sounds good, and teams are ready to manage streaming behavior alongside batch work.

By 2026, the data stack pattern is universal: dozens to hundreds of systems, each performing its role adequately but not communicating with others in ways that reflect actual business needs. That’s not a design flaw in those systems, though; making them work together coherently is precisely what data integration tools are for.

Some of these tools offer straightforward ETL that runs reliably without attention. Others provide real-time data movement. Some extract information from legacy systems that predate the phrase “cloud strategy.” Finding the right needle in this integration haystack is quite a task.

We’ve tested and organized the most capable integration tools in 2026 based on what they demonstrably handle well, and this article is the result. Let’s choose the tool that will bring everything together for you.

Table of Contents

- Data Integration Meaning

- What are Data Integration Tools?

- How to Choose the Right Tool in 2026?

- Top 15 Data Integration Tools Compared

- Comparison Table: Skyvia vs. Competitors

- Why Skyvia is the Smart Choice for SMBs & Mid-Market

- Key Trends Shaping Data Integration in 2026

- Conclusion

Data Integration Meaning

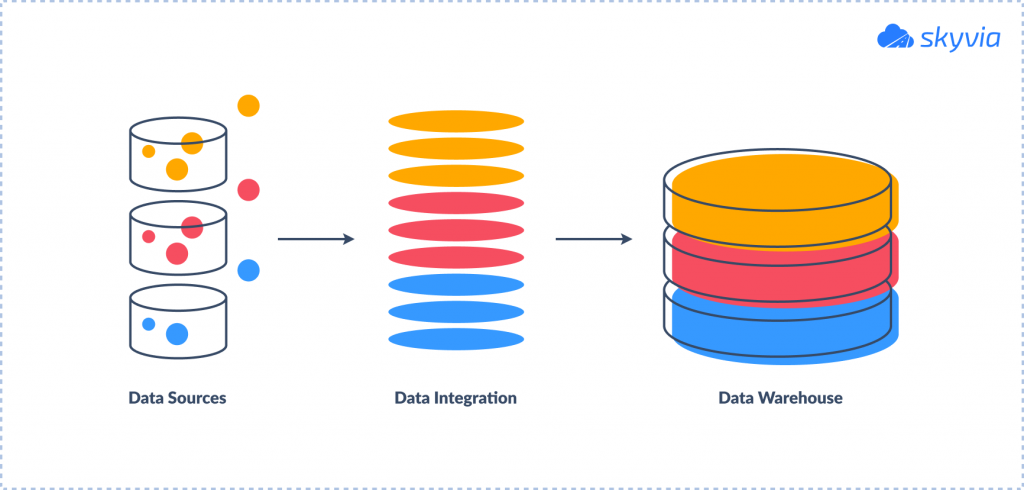

Data integration is the process of collecting data from multiple systems, transforming it into a consistent format, and delivering it to a single destination for analysis, reporting, or use by other applications.

As you can see, data integration sits between uncoordinated records and a structured warehouse that holds answers you can grab. As a result, teams get comprehensive visibility, decisions emerge from actual unified information, and being “data-driven” stops being something people only say in quarterly reviews.

What are Data Integration Tools?

Even though integration tools share the goal, the details matter because how the data moves shapes everything downstream.

Here are the three integration models that define modern stacks.

ETL vs. ELT

ETL is the traditional method. Data is cleaned and reshaped before it is loaded into the warehouse. That made sense when warehouses were slow, expensive, and somewhat erratic.

ELT does the opposite. Data lands in Snowflake or BigQuery first, raw and intact, and gets transformed there. Since modern warehouses are built to crunch data at scale, this approach is simpler to reason about, easier to debug, and far more flexible when requirements change.

Most cloud-first teams quietly moved to ELT because it removes much of the unnecessary choreography.
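
To make the ELT order of operations concrete, here is a minimal sketch. It assumes an in-memory SQLite database standing in for the warehouse, and the table names and sample rows are invented for illustration. The point is only the sequence: raw data lands first, and the cleanup happens afterward in SQL.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for the warehouse.
warehouse = sqlite3.connect(":memory:")

# Hypothetical raw rows as they might arrive from a source system.
raw_orders = [
    ("1001", " Alice@Example.com ", "19.99"),
    ("1002", "bob@example.com", "5.00"),
    ("1003", "ALICE@example.com", "30.01"),
]

# Extract + Load: land the data untouched, every column as text.
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, email TEXT, amount TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)

# Transform: clean and aggregate inside the warehouse with SQL.
warehouse.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT LOWER(TRIM(email))                  AS email,
           ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue,
           COUNT(*)                            AS orders
    FROM raw_orders
    GROUP BY LOWER(TRIM(email))
    """
)

customer_revenue = warehouse.execute(
    "SELECT email, revenue, orders FROM customer_revenue ORDER BY email"
).fetchall()
print(customer_revenue)
```

Because `raw_orders` stays in place, a wrong transformation can be dropped and rebuilt without going back to the source, which is much of what makes ELT easier to debug.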

Reverse ETL

Reverse ETL answers a simple question: why should insights live only in dashboards?

Instead of analysts pulling reports, cleaned warehouse data is pushed back into tools like Salesforce or HubSpot. Scores, segments, and metrics appear directly where sales and marketing teams already work.

In 2026, this isn’t a “nice extra.” It’s how data becomes operational instead of observational.
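
A rough sketch of the reverse ETL idea, under stated assumptions: SQLite stands in for the warehouse, the `lead_scores` table and the field names are invented, and `send` is a hypothetical callable in place of a real CRM client. No actual Salesforce or HubSpot API is shown here.

```python
import sqlite3
from typing import Callable

# Assumption: SQLite stands in for the warehouse; the table is illustrative.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE lead_scores (crm_id TEXT, score INTEGER, segment TEXT)"
)
warehouse.executemany(
    "INSERT INTO lead_scores VALUES (?, ?, ?)",
    [("003A", 87, "enterprise"), ("003B", 42, "smb"), ("003C", 91, "enterprise")],
)


def build_crm_updates(conn: sqlite3.Connection, min_score: int) -> list[dict]:
    """Read modeled warehouse rows and shape them as CRM field updates."""
    rows = conn.execute(
        "SELECT crm_id, score, segment FROM lead_scores "
        "WHERE score >= ? ORDER BY crm_id",
        (min_score,),
    ).fetchall()
    return [
        {"id": crm_id, "fields": {"lead_score": score, "segment": segment}}
        for crm_id, score, segment in rows
    ]


def push_updates(updates: list[dict], send: Callable[[dict], None]) -> int:
    """Hand each update to `send` (hypothetical CRM client) and count them."""
    for update in updates:
        send(update)
    return len(updates)


outbox: list[dict] = []  # collects payloads instead of calling a real API
updates = build_crm_updates(warehouse, min_score=80)
sent_count = push_updates(updates, outbox.append)
print(sent_count, [u["id"] for u in outbox])
```

Keeping the query and the delivery step separate means the same modeled table can feed several operational tools by swapping only the `send` function.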

Real-Time vs. Batch

Data integration tools have different movement patterns:

- Batch integration runs on a schedule. For reporting, analytics, and most business workflows, this calmness and predictability are perfect.

- Real-time integration flows constantly. It’s useful when timing matters more than comfort.

The smart stacks get the best of both worlds: real-time, where it earns its keep, and batch, where reliability should triumph.
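
One way to reason about the trade-off is to compare how stale data can get under each pattern. The sketch below is a simplified model, not a benchmark: the event times, the hourly schedule, and the two-second streaming latency are all assumed values, and an event created exactly at a run boundary is assumed to wait for the next run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    created_at: int  # seconds since the start of the observation window
    payload: str


def batch_delivery_times(events: list[Event], interval: int) -> list[int]:
    """Each event is delivered at the next scheduled run after it was created."""
    return [((e.created_at // interval) + 1) * interval for e in events]


def streaming_delivery_times(events: list[Event], latency: int) -> list[int]:
    """Each event is delivered a fixed (assumed) latency after it was created."""
    return [e.created_at + latency for e in events]


def worst_staleness(events: list[Event], delivered: list[int]) -> int:
    """Longest wait between an event happening and it reaching the target."""
    return max(d - e.created_at for e, d in zip(events, delivered))


events = [Event(5, "order"), Event(610, "refund"), Event(3590, "signup")]

batch = batch_delivery_times(events, interval=3600)    # hourly schedule
stream = streaming_delivery_times(events, latency=2)   # assumed 2 s latency

print(worst_staleness(events, batch), worst_staleness(events, stream))
```

If nobody acts on the data within the hour, the batch number is harmless, and the simpler schedule is usually the better choice.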

How to Choose the Right Tool in 2026?

Most tools look fine on day one. The issues show up when volumes grow. These are the criteria that matter.

1. Connector Availability

Be painfully specific here because there’s a big difference between “we integrate with databases” and “we integrate with Shopify, QuickBooks, NetSuite, Salesforce, SFTP, etc.”

If you rely heavily on SaaS apps, make sure the connectors are native, maintained, and not labeled as “beta.” Custom APIs sound flexible until you’re the one debugging them.

2. No-Code vs. Low-Code

The trouble with the low-code versus no-code choice is that many treat it as a technical question when it is really a question about people.

- Python or SQL-based tools reach their full potential if your pipelines are maintained only by data engineers.

- A no-code interface is a survival mechanism if ownership drifts toward RevOps, marketing, or analytics teams.

Ask yourself who will maintain the pipelines, not who sets them up once during a demo.

3. Pricing Models (Read This Carefully)

Many tools subtly lose their appeal at this point.

Certain platforms charge according to the amount of data or events they process. That is effective until your company expands, usage increases, or someone decides to log additional data “just in case.” Whether or not the value scales, costs do.

Others price according to workloads, rows, or connectors, which is typically more predictable and simpler to explain to finance. Surprises are less shocking, and you are aware of what you are paying for.

Before committing, model what pricing looks like six and twelve months out. The cheapest tool today is often the loudest invoice tomorrow.
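
Modeling this takes only a few lines. The sketch below compares a usage-based bill under compound growth against a flat plan. Every number in it (starting volume, growth rate, price per million rows, flat fee) is an invented placeholder, not any vendor's actual pricing, so substitute figures from real quotes.

```python
from typing import Optional


def project_usage_cost(
    start_rows: int,
    monthly_growth: float,
    months: int,
    price_per_million_rows: float,
) -> list[float]:
    """Usage-based bill for each month, assuming compound volume growth."""
    costs = []
    rows = float(start_rows)
    for _ in range(months):
        costs.append(round(rows / 1_000_000 * price_per_million_rows, 2))
        rows *= 1 + monthly_growth
    return costs


def first_month_exceeding(costs: list[float], flat_fee: float) -> Optional[int]:
    """First month (1-based) where the usage bill passes the flat fee."""
    for month, cost in enumerate(costs, start=1):
        if cost > flat_fee:
            return month
    return None


# Hypothetical inputs: 10M rows today, 10% monthly growth, $50 per million rows.
usage_costs = project_usage_cost(10_000_000, 0.10, 12, 50.0)
crossover = first_month_exceeding(usage_costs, flat_fee=900.0)

for month in (1, 6, 12):
    print(f"month {month}: ${usage_costs[month - 1]:,.2f}")
print("usage-based plan passes the flat fee in month", crossover)
```

With these placeholder inputs the usage-based plan starts cheaper and overtakes the flat fee in month 8, which is exactly the kind of crossover worth finding before signing.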

Top 15 Data Integration Tools Compared

Best All-in-One Cloud Data Platforms

1. Skyvia

Skyvia is a top no-code, freemium data platform that goes beyond ELT by combining integration with backups, querying, and bi-directional sync, so teams can move, protect, inspect, and reconcile data across systems from one visual interface.

G2 / Capterra Rating

- G2 – 4.8 out of 5 with 290 reviews.

- Capterra – 4.8 out of 5 with 109 reviews.

Best for

- Small and mid-sized teams that want production-ready pipelines without having to write volumes of code.

- Salesforce-centric stacks (Salesforce → warehouse, bidirectional syncs, backups).

- Hybrid setups mixing cloud apps with on-prem databases.

- Teams that care about incremental updates and Change Data Capture (CDC), not full reloads every night.

Key Features

- Provides a no-code wizard with a user-friendly interface, meaning that business users can configure data flows visually without extensive training.

- Enables failure alerts and detailed error logs.

- Offers more than 200 connectors covering cloud-based, on-premise, and hybrid ecosystems.

- Full and incremental replication with CDC – ideal for analytics pipelines where full scans waste resources.

- Bidirectional synchronization between apps and databases.

- Visual mapping with lookups, expressions, and relations.

- dbt Core support for modeling data in your warehouse or database, with Skyvia handling hosting and infrastructure.

- MCP server capability that allows AI tools to query and work with your connected data sources via a standardized protocol.

- Scheduling from daily down to 1-minute frequency on higher tiers.

- Data Flow and Control Flow for advanced integrations.
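
For readers new to the difference between full and incremental replication, here is a generic sketch of a timestamp-watermark incremental load. It is an illustration of the general pattern only, not a description of how Skyvia or any other vendor implements it. SQLite stands in for the source system, and the `contacts` table and its `updated_at` column are assumptions.

```python
import sqlite3

# Assumption: SQLite stands in for the source; updated_at is a change marker.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
)
source.executemany(
    "INSERT INTO contacts VALUES (?, ?, ?)",
    [(1, "Ada", 100), (2, "Grace", 105), (3, "Linus", 110)],
)

target: dict[int, str] = {}  # stands in for the destination table


def incremental_sync(
    conn: sqlite3.Connection, dest: dict[int, str], watermark: int
) -> tuple[int, int]:
    """Copy only rows changed since `watermark`; return (new watermark, rows read)."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM contacts "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    for row_id, name, updated_at in rows:
        dest[row_id] = name  # upsert by primary key
        watermark = max(watermark, updated_at)
    return watermark, len(rows)


# First run: nothing synced yet, so every row is read.
watermark, first_run_rows = incremental_sync(source, target, watermark=0)

# One row changes at the source; the next run reads only that row.
source.execute(
    "UPDATE contacts SET name = ?, updated_at = ? WHERE id = ?",
    ("Grace H.", 120, 2),
)
watermark, second_run_rows = incremental_sync(source, target, watermark)

print(first_run_rows, second_run_rows, target)
```

A timestamp watermark like this cannot see hard deletes, because a deleted row has no `updated_at` left to query. That gap is one reason log-based CDC exists.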

Pricing

Skyvia’s pricing is built around records, which makes it easier to connect usage to cost. There’s a free tier to test real pipelines, and paid plans start at $79 per month as data volumes and sync frequency increase. As you move up, the limits loosen rather than the model changing, and enterprise plans focus on security and performance rather than adding a new pricing scheme.

Strengths & Limitations

Strengths

- Very fast to set up.

- CDC-driven incremental loads reduce warehouse and API strain.

- Cost-effective and transparent pricing compared to volume-based tools.

- Strong fit for Salesforce-heavy analytics and sync scenarios.

Limitations

- CDC is schedule-based, not true real-time streaming.

- Not designed for extremely large, event-streaming workloads.

2. Fivetran

Fivetran is the market leader for ELT: a fully managed, warehouse-first ingestion platform that quietly keeps data flowing into Snowflake, BigQuery, and friends with minimal hands-on work.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 994 reviews.

- Capterra – 4.4 out of 5 with 25 reviews.

Best for

- Teams that want ELT to be invisible and are willing to pay for that comfort.

- Companies with large, growing data volumes where reliability matters more than flexibility.

- Organizations that don’t want engineers babysitting pipelines or chasing schema changes.

Key Features

- Log-based CDC for inserts, updates, and deletes with low source impact.

- 700+ prebuilt connectors for SaaS apps, databases, and event sources.

- Automatic schema drift handling without manual remapping.

- Tight integration with dbt for downstream transformations.

- Enterprise-grade security, roles, and compliance options.

Pricing

Fivetran’s pricing follows Monthly Active Rows (MAR) per connector. A free tier covers early testing, while costs scale with growing data volumes. It’s designed as a premium solution for teams with high data movement and the budget to support usage-based pricing at scale.

Strengths & Limitations

Strengths

- Extremely reliable “set it and forget it” ingestion.

- Best-in-class connector coverage.

- Handles CDC and schema changes with almost no manual effort.

Limitations

- Pricing can escalate fast as data scales.

- Transformations live outside the platform (dbt-heavy workflows).

- Less appealing for cost-sensitive teams or highly custom logic.

3. Stitch

Stitch is a lightweight, developer-friendly ELT tool that focuses on getting data out of common SaaS apps and databases and into your warehouse quickly, without trying to become a full data platform.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 68 reviews.

- Capterra – 4.3 out of 5 with 4 reviews.

Best for

- Teams that just want CRM and marketing data to show up in Snowflake or BigQuery so reporting can move forward.

- Startups and SMBs that don’t have the time or patience for a long rollout just to move data.

- People who are fine with shaping data later, as long as ingestion is stable and doesn’t need attention.

Key Features

- 130+ prebuilt connectors based on the Singer open-source ecosystem.

- Incremental loads and basic CDC for selected databases.

- Automated schema handling for common drift scenarios.

- Scheduled batch syncs (hourly or daily).

- dbt-friendly workflows for transformations outside the platform.

Pricing

Stitch is row-based, so the bill mostly tracks how much data you actually move. There’s a small free tier to kick the tires, paid plans start around $100/month, and it scales up with volume – usually more predictable than usage-by-activity models, but it can still creep up fast when you start replicating wide tables at high frequency.

Strengths & Limitations

Strengths

- Very fast to set up and easy to operate.

- Solid choice for standard SaaS-to-warehouse ELT.

- The open Singer ecosystem allows custom connectors if needed.

Limitations

- Batch-first approach with limited real-time capabilities.

- No native transformation layer inside the platform.

- Fewer enterprise-grade features compared to larger ELT vendors.

4. Matillion

Matillion is a cloud ELT tool focused on transforming data directly inside warehouses like Snowflake, BigQuery, and Redshift, built for teams that want hands-on control over SQL-driven transformation logic rather than just data ingestion.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 81 reviews.

- Capterra – 4.3 out of 5 with 111 reviews.

Best for

- Data engineers and analytics teams working primarily inside Snowflake, Redshift, or BigQuery.

- Organizations where transformations are complex, warehouse-centric, and closely reviewed.

- Teams that already have ingestion covered and need stronger orchestration and modeling inside the warehouse.

- Salesforce-to-warehouse pipelines where transformation logic matters more than setup speed.

Key Features

- Visual job builder for designing transformation pipelines without writing orchestration code.

- Warehouse-native ELT with pushdown execution for joins, aggregations, and business logic.

- Incremental loads and CDC support for supported databases and SaaS sources.

- Built-in scheduling and dependency management across jobs.

- Version control and team collaboration features suited to governed environments.

- Integration with dbt for teams standardizing on SQL-based modeling workflows.

Pricing

Matillion bills according to compute and cloud infrastructure usage. Entry plans run approximately $2/hour, while Standard and Enterprise tiers usually involve annual contracts starting around $10k+. Pricing scales with how frequently pipelines execute and the warehouse compute they use, offering cost predictability for mature operations.

Strengths & Limitations

Strengths

- Strong warehouse-first transformation model that scales with modern cloud platforms.

- Comfortable for SQL-heavy teams that want precise control over data logic.

- Solid support for versioning, reviews, and production workflows.

- Works across the major cloud warehouses without forcing a single-vendor bet.

Limitations

- Learning curve is real for non-SQL users or business teams.

- Pricing can feel steep compared to no-code platforms aimed at smaller teams.

- The connector catalog is narrower than ingestion-first tools.

- Best value shows up only when the warehouse is already central to the stack.

5. Celigo

Celigo is an iPaaS platform best for automating NetSuite and e-commerce operations, built for teams that need dependable, event-driven app-to-app flows rather than analytics-focused data pipelines.

G2 / Capterra Rating

- G2 – 4.6 out of 5 with 861 reviews.

- Capterra – 4.6 out of 5 with 56 reviews.

Best for

- Teams running NetSuite at the heart of their business, where orders, invoices, inventory, and customers constantly need to line up across systems.

- E-commerce operations that are tired of rebuilding the same Shopify → ERP → fulfillment logic every time volume or tooling changes.

- Ops-led teams who think in business processes first and data models second, and want integrations to react as events happen, not hours later.

Key Features

- A flow builder that lets you lay out real business processes end to end.

- Event-driven syncs that fire when something changes.

- Clear error handling with retries and dashboards that show where a flow stalled and why.

- Built-in support for APIs and EDI.

- Monitoring and governance features to run dozens of interconnected flows without losing track of ownership.

Pricing

Celigo pricing is built around endpoints and flows, which makes costs easier to anticipate as integrations grow. You’re not charged per task or transaction, so usage spikes don’t suddenly change the bill. For teams running steady, operational integrations, that simplicity makes budgeting far less stressful than volume- or activity-based plans.

Strengths & Limitations

Strengths

- Feels purpose-built for NetSuite and commerce workflows instead of generic app wiring.

- Prebuilt integrations save time where it usually gets wasted – recreating standard order, customer, and fulfillment flows from scratch.

- Reliably handles operational, event-driven automation across apps.

- Well-suited for multi-system workflows that go beyond simple data movement.

Limitations

- Cost ramps up quickly as integrations grow in number and complexity.

- Less focused on warehouse analytics or large-scale historical data movement.

- Connector strategy favors ERP and commerce over general-purpose data platforms.

- Overkill for teams that only need basic SaaS-to-warehouse syncing.

Best for Real-Time Streaming (CDC)

6. Estuary

Estuary is a real-time data platform built for moving changes as they happen, designed for teams that care about sub-second freshness and exactly-once delivery more than scheduled batch comfort.

G2 / Capterra Rating

- G2 – 4.8 out of 5 with 31 reviews.

- Capterra – not listed.

Best for

- Engineering teams that notice immediately when data is even a few seconds behind.

- Stacks where change data needs to show up fast enough to drive alerts, operational views, or ML features.

- Use cases where batch loads feel too blunt and hourly syncs arrive after the moment has already passed.

Key Features

- CDC that reacts immediately when rows change, not when a timer goes off.

- A single pipeline model that can behave like a stream or like a batch job, depending on where the data is going.

- Schema changes are picked up as they happen.

- Support for warehouses, queues, and logs, which makes it easier to fan data out to analytics, ops, and ML without re-ingesting it multiple times.

Pricing

Estuary pricing is tied to how much data moves through the platform. There’s a free tier for small proofs. For sustained, high-velocity CDC, this often works out cheaper than per-row models, especially when freshness matters more than raw volume.

Strengths & Limitations

Strengths

- Delivers changes fast enough to support operational and near-real-time use cases.

- Handles streaming and batch in one coherent pipeline instead of forcing an early choice.

- Clear delivery guarantees reduce downstream reconciliation work.

- The Bring Your Own Cloud (BYOC) option avoids being boxed into a single vendor’s economics.

Limitations

- This is not a point-and-click experience.

- Teams looking mainly for scheduled analytics loads will feel like they’re paying for speed they don’t use.

- The connector ecosystem is still growing compared to long-established vendors.

- Overkill for teams whose needs stop at hourly or daily analytics loads.

7. Hevo Data

Hevo Data is a no-code ETL/ELT tool focused on getting data into cloud warehouses quickly and reliably, aimed at teams that want near-real-time ingestion without building or maintaining pipelines themselves.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 276 reviews.

- Capterra – 4.7 out of 5 with 110 reviews.

Best for

- Teams that want their warehouse fed steadily without turning ingestion into a long-running internal discussion.

- Analytics and RevOps groups who need Salesforce, HubSpot, and product data to show up reliably, but don’t want to own pipeline logic day to day.

- Stacks where “close to real time” is good enough, as long as it stays predictable.

Key Features

- A no-code setup that lets teams spin up warehouse pipelines quickly and keep them running with minimal oversight.

- CDC that keeps dashboards current without reloading entire tables.

- Built-in transformations for light cleanup and enrichment before data lands in the warehouse.

- Automatic handling of schema changes so pipelines don’t break every time a source evolves.

- Monitoring and alerts that surface issues early.

Pricing

Hevo uses an event-based pricing model with a low barrier to entry. There’s a free tier for small volumes, paid plans start in the low hundreds per month, and costs scale as event counts increase. For moderate workloads, this stays reasonable.

Strengths & Limitations

Strengths

- Easy to adopt for teams without dedicated data engineering resources.

- Keeps warehouse data reasonably fresh without complex configuration.

- Good balance between automation and control for common analytics pipelines.

- Lower entry cost than enterprise-focused ingestion platforms.

Limitations

- Focused almost entirely on warehouse loading, without backup or app-level sync features.

- Transformation capabilities are limited compared to warehouse-native tools.

- Latency can vary under heavier loads compared to real-time platforms.

- Pricing becomes harder to predict as data volume and schema complexity increase.

Best Open Source & Custom Solutions

8. Airbyte

Airbyte is an open-source ELT platform built for teams that want maximum control and connector breadth, and are willing to own the infrastructure that comes with it.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 5 reviews.

- Capterra – 0 (no reviews yet).

Best for

- Engineering-led teams that prefer owning their pipelines.

- Companies that want to shape, extend, or fix connectors themselves rather than wait on a vendor roadmap.

- Teams comfortable running infrastructure and treating data movement as part of the core platform work.

Key Features

- A connector ecosystem that doesn’t stop at what’s officially supported.

- CDC built on Debezium, so when records change, replay, or fall behind, the behavior is familiar and debuggable instead of opaque.

- Sync modes that let you decide how fresh is fresh enough.

- Warehouse-first flows where raw data lands quickly, and modeling happens later, closer to the people who query it.

Pricing

The open-source core is free to run if you host it yourself. Airbyte Cloud adds usage-based pricing with a small free tier and paid plans that scale by data volume. For high-throughput pipelines, this often comes out cheaper than row-based tools, but the real cost shows up in engineering time if you go the self-managed route.

Strengths & Limitations

Strengths

- Unmatched connector breadth compared to most managed platforms.

- Strong appeal for teams that want transparency and extensibility.

- CDC support that fits well into modern event-aware stacks.

- No hard vendor lock-in at the core level.

Limitations

- Running Airbyte yourself means you’re signing up for upgrades, monitoring, and tuning, not just connectors.

- The Cloud version smooths out some edges, but it still assumes you’re comfortable thinking about pipeline lifecycle, not just results.

- Airbyte Cloud trades some control for convenience, but it’s still not instant streaming.

- Not a great fit for teams that want pipelines to disappear into the background.

9. Talend Open Studio

Talend Open Studio is a free, open-source ETL tool aimed at developers who want full control over complex data pipelines. However, it was discontinued in January 2024 and no longer receives updates.

Note: If you want to keep your integration process relevant and up to date, choosing a Talend alternative may be a smart decision.

G2 / Capterra Rating

- G2 – not listed.

- Capterra – 4.4 out of 5 with 14 reviews.

Best for

- Teams that look at a free, open-source ETL and want control more than convenience.

- Developers migrating data out of older databases and file dumps where nothing is clean, nothing is consistent, and the fastest path is a custom ETL job.

- When you need a sandbox to prove the pipeline logic works before spending money on a managed platform.

Key Features

- A visual job designer that turns drag-and-drop flows into generated Java or Perl code you can inspect and tweak.

- An extensive connector set that covers databases, files, and enterprise apps like Salesforce.

- Map transformations that handle joins, filters, and expressions.

- Support for incremental loads and basic CDC patterns, assuming you’re willing to wire things up yourself.

- Big data components for Spark and Hadoop workloads.

Pricing

Talend Open Studio doesn’t charge anything to use, but, in some sense, you pay in time and effort instead of license fees.

Strengths & Limitations

Strengths

- Zero licensing cost, which still matters in cost-sensitive environments.

- Very deep transformation capabilities for teams that like seeing exactly how data is handled.

- Large ecosystem of community components and long-standing documentation.

- Works well for complex batch ETL and learning how pipelines behave.

Limitations

- Not approachable for non-developers or teams without Java familiarity.

- Everything from deployment to scaling is on you in the open-source version.

- Real-time CDC is limited without custom work.

- The UI and workflow feel dated compared to modern warehouse-first tools.

Best for Enterprise & Legacy Systems

10. Informatica

Informatica is an enterprise-grade data integration platform built for organizations that accept high cost and long implementations in exchange for deep governance, control, and scale.

G2 / Capterra Rating

- G2 – 4.3 out of 5 with 549 reviews.

- Capterra – 4.1 out of 5 with 18 reviews.

Best for

- Large enterprises where data work happens inside formal processes, not ad-hoc projects.

- Organizations that already run dedicated data teams and expect integrations to go through design reviews and change management.

- Industries where compliance, lineage, and audit trails shape every technical decision.

- When data integration is infrastructure, and not something that should be quick or lightweight.

Key Features

- Handles messy coexistence of old on-prem systems and newer cloud platforms.

- Keeps a full paper trail of how data moves and changes.

- Built to move large volumes steadily over time, not spike-and-drop workloads.

Pricing

Informatica doesn’t publish its pricing, so prepare to talk to its sales team. However, as an enterprise-grade platform, its plans are relatively well-suited for companies operating at scale, rather than for those with modest data integration requirements.

Strengths & Limitations

Strengths

- Handles complexity that would overwhelm lighter tools.

- Strong fit for regulated environments where traceability matters as much as throughput.

- Fits naturally into long-lived data programs that value stability and consistency over speed.

Limitations

- Informatica is not something you “spin up” to test an idea; it assumes commitment before the first pipeline runs.

- The pace and structure make it a poor fit for teams that need to adjust quickly or experiment often.

- Cost structure excludes small and mid-sized organizations by design.

- Might feel rigid next to warehouse-first and real-time platforms built for modern stacks.

11. MuleSoft

MuleSoft is an enterprise integration platform built around API-led connectivity, designed for large organizations that treat systems and APIs as long-lived products rather than simple data pipes.

G2 / Capterra Rating

- G2 – 4.4 out of 5 with 713 reviews.

- Capterra – 4.5 out of 5 with 4 reviews.

Best for

- Organizations where integration is treated as shared infrastructure, owned centrally, and used everywhere.

- Companies that already think in APIs and expect teams to follow published contracts instead of improvising connections.

- Enterprises carrying a lot of history (legacy systems, on-prem middleware, custom services) that still has to coexist with Salesforce and cloud apps.

Key Features

- An API-led model that forces discipline early.

- DataWeave sits right in the middle of every flow, shaping payloads on the fly when systems disagree.

- APIs come with ownership rules, versions, and access controls baked in.

- Hybrid runtimes let older systems keep doing their job while newer services are layered on top, which is usually the only realistic path in large organizations.

Pricing

MuleSoft pricing plans start with core integration and API design, then expand to cover hybrid deployments, multi-cloud runtimes, and deeper monitoring as needs grow. Costs are tied to the number of flows you run and the amount of traffic that moves through them. If you want precise numbers, though, you will need to schedule a call with their sales team.

Strengths & Limitations

Strengths

- Excellent fit for organizations that treat APIs as shared infrastructure.

- Handles hybrid and cross-system orchestration reliably at scale.

- Strong alignment with Salesforce-centric enterprise environments.

- Governance and security features match enterprise expectations.

Limitations

- Far too heavy for teams looking for straightforward ETL or analytics pipelines.

- Pricing and licensing structure rule it out for most small and mid-sized teams.

- Implementation effort is significant even for experienced integration groups.

- Low-code elements don’t remove the need for specialist skills.

12. Oracle Data Integrator / IBM DataStage

Oracle Data Integrator and IBM DataStage are legacy enterprise ETL platforms designed for organizations that committed to Oracle or IBM stacks years ago and continue to build around them.

G2 / Capterra Rating

Oracle Data Integrator:

- G2 – 4 out of 5 with 19 reviews.

- Capterra – 4.4 out of 5 with 20 reviews.

IBM DataStage:

- G2 – 4 out of 5 with 72 reviews.

- Capterra – 4.5 out of 5 with 2 reviews.

Best for

- Enterprises that made their platform choices a long time ago and are still living with them.

- Running large, scheduled jobs that are expected to finish the same way every night, not adapt on the fly.

- Maintaining established data warehouses while newer stacks slowly grow alongside them.

Key Features

- Transformation logic that assumes the database is doing the real work, not the integration layer (Oracle Data Integrator).

- A processing engine built to split big jobs across many threads and keep pushing until the batch is done (IBM DataStage).

- Comfortable handling of classic enterprise inputs like internal databases and flat files, where formats rarely change (Oracle Data Integrator).

- Pipelines designed around long-running batch execution, with real-time capabilities added only when there’s a strong reason (IBM DataStage).

- Tight coupling with each vendor’s broader stack, which simplifies life if you’re already committed and complicates it if you’re not (both).

Pricing

Pricing for both IBM DataStage and Oracle Data Integrator follows an enterprise model built around long-term use. DataStage charges based on how much processing capacity you consume, which works well for stable, high-volume workloads but requires planning as scale increases. Oracle Data Integrator is priced through custom quotes tied to your environment and data volumes, typically assuming deep alignment with the Oracle stack.

Strengths & Limitations

Strengths

- Efficient warehouse-side transformations that reduce custom coding (Oracle Data Integrator).

- Strong performance for very large, parallel batch workloads (IBM DataStage).

- Mature platforms with decades of production use in enterprise environments (both).

- Tight integration with broader Oracle and IBM data stacks (both).

Limitations

- High licensing and infrastructure costs that rule out smaller teams (both).

- Setup and maintenance effort is significant and rarely quick (both).

- Bias toward the vendor’s ecosystem can limit flexibility (Oracle Data Integrator).

- Heavy infrastructure requirements make experimentation expensive (IBM DataStage).

13. Boomi

Boomi (formerly Dell Boomi, independent of Dell since 2021) is a general-purpose enterprise iPaaS built for organizations that need to connect a lot of systems, including on-prem ones, and are willing to pay for that flexibility.

G2 / Capterra Rating

- G2 – 4.5 out of 5 with 585 reviews.

- Capterra – 4.4 out of 5 with 273 reviews.

Best for

- Organizations that still rely on on-prem systems and know those systems aren’t going away.

- Ops and integration teams under pressure to connect a lot of moving parts fast, without negotiating every detail with engineering.

- Businesses with the budget to prioritize coverage and adaptability over lean pipelines.

Key Features

- A visual designer that lets teams sketch integrations quickly.

- A wide connector library.

- Native B2B and EDI (Electronic Data Interchange) handling that stops integrations from turning into custom side projects the moment partners and suppliers enter the flow.

- A centralized view of integrations to help users spot where things usually go wrong.

Pricing

Boomi’s pricing is flexible, but not simple. You can start with a narrow setup and add APIs, governance, environments, and throughput as needs grow. Each step unlocks real capability, but also another layer of cost. It works well when integration is a long-term platform decision, less so if you’re trying to keep scope and spend tightly contained.

Strengths & Limitations

Strengths

- Covers a wide range of integration scenarios without forcing teams into a single pattern.

- Handles on-prem and hybrid setups.

- Gets integrations live quickly, even when the underlying systems are anything but modern.

Limitations

- Advanced transformation logic is not its strongest area.

- Can feel oversized when pipelines are simple or analytics-focused.

- Costs rise steadily as integrations multiply.

14. SnapLogic

SnapLogic is a visual enterprise integration platform that leans on automation and AI assistance to help large teams build and maintain complex pipelines faster, without dropping fully into code.

G2 / Capterra Rating

- G2 – 4 out of 5 with 72 reviews.

- Capterra – 4.5 out of 5 with 15 reviews.

Best for

- Enterprises that want integrations to stay visual, even when the logic underneath stops being simple.

- Teams where ops and analytics are expected to build and adjust flows themselves, without filing tickets for every small change.

- Organizations that deal with a steady churn of apps, APIs, and data sources and need tooling that bends without snapping.

Key Features

- A visual builder that makes it possible to understand what a pipeline does at a glance, which matters when someone else has to troubleshoot it later.

- AI-assisted pipeline drafts that help you get moving quickly.

- Warehouse ingestion and prep steps that handle the routine shaping work, so teams don’t push half-baked data downstream by accident.

- Runtime and monitoring behavior that assumes pipelines will change often and focuses on keeping them running while they do.

Pricing

SnapLogic pricing isn’t publicly available. You’ll need a call with their sales team to get a quote and judge whether the cost fits your use case.

Strengths & Limitations

Strengths

- More approachable than many enterprise iPaaS tools thanks to its visual focus.

- Strong accelerator for teams that need to build and adjust integrations quickly.

- Works well across cloud and hybrid environments.

- Balances automation with enough visibility to understand what pipelines are doing.

Limitations

- Still firmly an enterprise tool in both cost and scope.

- Ecosystem depth trails long-established enterprise platforms.

- Less suitable for teams looking for simple batch ETL or lightweight analytics pipelines.

15. Jitterbit

Jitterbit is an API-led integration platform for teams that treat APIs as first-class citizens and use data movement mainly as part of larger application workflows.

G2 / Capterra Rating

- G2 – 4.5 out of 5 with 583 reviews.

- Capterra – 4.4 out of 5 with 45 reviews.

Best for

- Teams whose integration strategy starts with APIs and only then worries about where the data lands.

- Building reusable services around Salesforce, NetSuite, and internal systems instead of one-off pipelines.

- Hybrid environments where some systems still live on-prem and need to be exposed safely through managed endpoints.

- Companies that want low-code speed but still need proper API control, versioning, and visibility.

Key Features

- A visual studio that makes it obvious how apps and APIs are wired together.

- API gateway and proxy tooling that lets teams design integrations as reusable services instead of one-off connections that quietly pile up over time.

- Incremental and CDC-style syncs that keep systems aligned without moving more data than necessary.

- Private agents that sit close to on-prem systems.

- Monitoring and debugging tools that help trace what happened inside an API call.

Pricing

Jitterbit pricing isn’t publicly available, so you will need to contact sales. Plans are sold on annual contracts and scale as you add more systems, APIs, and runtime components.

Strengths & Limitations

Strengths

- Keeps API logic, data syncs, and app workflows close together instead of scattering them across tools.

- Works well in mixed environments where some systems stay on-prem, and others don’t.

Limitations

- Not designed for heavy warehouse-side transformations.

- Connector depth is uneven for less common systems.

- Support and advanced features are closely tied to higher-tier plans.

Comparison Table: Skyvia vs. Competitors

| Criteria | Skyvia | Fivetran | Hevo | Airbyte |
|---|---|---|---|---|
| Setup Time | Minutes to a few hours via no-code UI | Fast initial setup, tuning happens later | Fast, mostly no-code | Variable – quick for basics, longer if self-hosted |
| Pricing Model | Record-based, freemium | Usage-based (Monthly Active Rows) | Event-based tiers | Free open source or usage-based cloud |
| Real-Time Capable? | Near-real-time (scheduled, minute-level) | Near-real-time CDC | Near-real-time CDC | Near-real-time (true streaming needs engineering) |
| Reverse ETL? | Yes | Via add-ons / dbt workflows | Limited | Possible, but custom-built |
| Backup Included? | Yes | No | No | No |

Why Skyvia is the Smart Choice for SMBs & Mid-Market

Skyvia is built for teams that need reliable pipelines, predictable costs, and just enough flexibility to grow without dragging in unnecessary enterprise-level complexity. If your stack revolves around tools like Salesforce, cloud databases, and warehouses, and your team wants things to work without constant supervision, Skyvia fits that reality well.

- Stop paying for rows you don’t need

Row-based pricing sounds harmless until your data starts growing in directions you can’t control. A few new fields, a wider table, a couple of extra syncs, and suddenly you’re paying for activity that brings no new value.

Skyvia’s pricing matches how teams use data, not how spreadsheets imagine they might. Forecasts get easier and billing conversations get shorter because you pay for the records you move, on a schedule you choose.

- More than just a pipeline

Alongside ETL and ELT, you get backups and querying in the same environment, which usually would require extra tools. Backups matter when you need to roll something back. Queries matter when you need to check the source without spinning up a separate workflow.

Having these pieces in one place reduces friction and cuts down on tool sprawl, especially for smaller teams that don’t want to manage a stack just to keep data sane.

- CSV to cloud, without ceremony

CSV files still arrive every day, yet many platforms either leave them to custom scripts or treat them as an edge case. Skyvia handles CSV-to-database and CSV-to-warehouse flows directly, as a regular feature rather than a workaround. You map it, schedule it, and move on. For SMBs and mid-market teams, this isn’t a niche feature. It’s part of daily operations.
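For comparison, here is roughly what the custom-script route looks like when done by hand. This is a minimal sketch, not how Skyvia or any other platform works internally: it uses Python's standard `csv` and `sqlite3` modules, and the `contacts` table, the column mapping, and the `load_csv` helper are all hypothetical names made up for illustration.

```python
import csv
import io
import sqlite3


def load_csv(conn, table, csv_text, mapping):
    """Insert CSV rows into `table`, renaming columns via `mapping`.

    `mapping` maps a CSV header name to a target column name.
    Table and column names are assumed to be trusted, hard-coded values.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = ", ".join(mapping.values())
    placeholders = ", ".join("?" for _ in mapping)
    # Pick only the mapped CSV fields, in the same order as the target columns.
    rows = [tuple(row[source] for source in mapping) for row in reader]
    conn.executemany(
        f"INSERT INTO {table} ({columns}) VALUES ({placeholders})", rows
    )
    conn.commit()
    return len(rows)


# Hypothetical target table and sample file contents.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (full_name TEXT, email TEXT)")
sample = "Name,Email\nAda Lovelace,ada@example.com\nAlan Turing,alan@example.com\n"

loaded = load_csv(conn, "contacts", sample, {"Name": "full_name", "Email": "email"})
```

Even this toy version leaves out scheduling, error handling, type checks, and deduplication, which is the part that tends to turn a one-off script into ongoing maintenance.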

- Built for how SMB stacks evolve

Most SMBs don’t start with a finished architecture. They grow into one. Skyvia supports that progression without forcing an early commitment to heavy tooling or specialized roles. Non-technical teams can own basic pipelines. More advanced mappings and incremental loads are there when needed. You don’t have to re-platform just because your reporting got more serious.

Skyvia consistently works well for mid-market teams because of this balance: it minimizes overhead without sacrificing control. It is realistic about what teams need and built for steady expansion rather than theoretical scale.

If you’re choosing a tool that needs to work now and still make sense a year from now, Skyvia fits exactly that reality.

Key Trends Shaping Data Integration in 2026

In 2026, tools are changing because expectations changed, not the other way around.

AI-driven mapping is now about skipping the boring first 80 percent of the work. Tools are finally able to do a decent job of guessing how schemas line up, adjusting when fields move, and pointing out what needs attention. Yes, someone with a soul still has to review the result, but you’re not starting from scratch every time a new column is added.

Another shift is around data freshness. Batch jobs are still everywhere, but they’re no longer the default answer. Change Data Capture has become the normal way to keep systems reasonably in sync, with batch filling in where it still makes sense.
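To make the difference from a full batch reload concrete, the sketch below applies a short change log to a copy of a table instead of reloading every row. It is a simplified illustration under stated assumptions: the event shape (`op`, `id`, `data`) and the `apply_changes` helper are hypothetical, and real CDC tools read changes from database logs and also deal with ordering, retries, and schema changes.

```python
def apply_changes(target, changes):
    """Apply an ordered change log to `target`, a dict keyed by primary key."""
    for change in changes:
        op, key = change["op"], change["id"]
        if op in ("insert", "update"):
            # Merge the changed fields into the existing row, if there is one.
            target[key] = {**target.get(key, {}), **change["data"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical replica state and the changes captured since the last sync.
replica = {1: {"name": "Acme", "plan": "free"}}
change_log = [
    {"op": "update", "id": 1, "data": {"plan": "pro"}},
    {"op": "insert", "id": 2, "data": {"name": "Globex", "plan": "free"}},
    {"op": "delete", "id": 2},
]

apply_changes(replica, change_log)
```

Only three small events move here, no matter how many rows the source table holds. That is why CDC keeps systems closer to in sync at a lower cost than repeated full loads.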

iPaaS tools stopped being something only specialists dare to touch. Modern platforms are expected to work for smaller teams, hybrid stacks, and setups in transition, because if a tool needs a dedicated owner just to keep it stable, it’s already at a disadvantage.

And then there’s data fabric. Behind the grand architectural name, it is really a push for simplicity. Teams are tired of copying the same data into three places just to understand it. Better metadata, shared definitions, and automatic lineage mean fewer reloads and fewer questions.

All of these trends point to the same pressure: the integration process has to stop demanding constant attention. In 2026, nobody is impressed by elaborate pipelines. What matters is whether data stays usable as systems develop, without anyone having to nurse it along.

Conclusion

Come 2026, nobody’s debating whether data integration matters. The question becomes how much operational complexity you’re prepared to shoulder.

Throughout this piece, we’ve explored tools serving wildly different contexts:

- Enterprise platforms expecting patient rollouts and dedicated staff (Informatica, MuleSoft, IBM DataStage, Oracle Data Integrator).

- Warehouse-centric solutions engineered for volume (Fivetran, Matillion).

- API-driven systems centered on orchestration (MuleSoft, Boomi, Jitterbit).

- No-code platforms that fit warehouse-centric stacks while keeping setup and cost under control (Skyvia).

What becomes clear is that most teams don’t fail because they picked the “wrong” tool. They struggle because the tool they picked was designed for a different kind of organization. One with more people, more budget, or a higher tolerance for overhead.

Skyvia works well when you need data to move, sync, and stay safe without building a platform around it. It fits when CSVs still show up, Salesforce sits at the center of reporting, and predictability matters more than architectural ambition. It doesn’t try to win every category. It focuses on being usable, affordable, and steady as your stack grows.

If that sounds like your situation, Skyvia is worth spending time with.

Don’t get locked into expensive contracts or complex code. Start your free Skyvia trial today and unify your data in minutes.

F.A.Q. for Best Data Integration Tools

What is the difference between ETL and ELT?

ETL cleans data before it lands in the warehouse. ELT loads first and cleans it there. With modern warehouses, ELT usually feels simpler and more forgiving when things change.
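A small sketch can make the ordering difference visible. It uses an in-memory SQLite database as a stand-in for a warehouse, which is an assumption made purely for illustration, and the table names and the `clean` helper are hypothetical. Both paths end with the same cleaned rows; what differs is where the cleaning happens.

```python
import sqlite3

# Messy source rows: stray whitespace, mixed case, amounts stored as text.
raw_rows = [("  Ada@Example.com ", "42.50"), ("alan@example.com", "17")]


def clean(email, amount):
    """Transformation logic that runs outside the warehouse (the ETL path)."""
    return email.strip().lower(), float(amount)


def run_etl(conn):
    # Transform first, then load only the cleaned rows.
    conn.execute("CREATE TABLE orders_etl (email TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders_etl VALUES (?, ?)", [clean(e, a) for e, a in raw_rows]
    )


def run_elt(conn):
    # Load the raw rows as-is, then transform with SQL inside the warehouse.
    conn.execute("CREATE TABLE orders_raw (email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", raw_rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT lower(trim(email)) AS email, CAST(amount AS REAL) AS amount "
        "FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_result = conn.execute("SELECT email, amount FROM orders_etl ORDER BY email").fetchall()
elt_result = conn.execute("SELECT email, amount FROM orders_elt ORDER BY email").fetchall()
```

In the ELT path the raw table stays available, so if the cleaning rules change you can rerun the SQL without extracting from the source again. That is a large part of why ELT feels more forgiving.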

Do I need coding skills to use data integration tools?

Not necessarily. Many tools cover common cases without code. Coding helps when logic gets tricky, but most teams start without it and reach for code only when they need it.

Is open-source data integration better than cloud-based (SaaS)?

Open source gives you control, but also responsibility. SaaS trades that control for speed and less upkeep. It comes down to whether you want to run infrastructure or just move data.

What is Reverse ETL?

Reverse ETL takes data that’s already cleaned in the warehouse and sends it back into tools like Salesforce or HubSpot, so teams can act on it without opening a dashboard.
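As a rough illustration, the sketch below reads already-modeled rows from a warehouse table and shapes them into update payloads for a CRM. Everything specific here is an assumption: SQLite stands in for the warehouse, `customer_scores` is a made-up table, and `Lead_Score__c` is a hypothetical custom field name. The actual delivery step is left out on purpose, since it depends on the destination's API or on the integration tool you use.

```python
import sqlite3


def build_crm_updates(conn, min_score):
    """Select modeled warehouse rows and shape them as CRM update payloads."""
    rows = conn.execute(
        "SELECT email, lead_score FROM customer_scores "
        "WHERE lead_score >= ? ORDER BY email",
        (min_score,),
    ).fetchall()
    # One payload per contact; the field name is a hypothetical CRM custom field.
    return [
        {"email": email, "fields": {"Lead_Score__c": score}}
        for email, score in rows
    ]


# Hypothetical warehouse table that a modeling step has already produced.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_scores (email TEXT, lead_score INTEGER)")
conn.executemany(
    "INSERT INTO customer_scores VALUES (?, ?)",
    [("ada@example.com", 91), ("alan@example.com", 40)],
)

updates = build_crm_updates(conn, min_score=80)
```

Only the high-scoring contact ends up in `updates`, which is the point of reverse ETL: the warehouse decides what matters, and the operational tool receives only that.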