Redshift in 2026 isn’t “just a warehouse” anymore – it’s increasingly a hub that can pull in data in a few different ways:

- Classic batch loads.

- Near-real-time streams.

- AWS-native “zero-ETL” paths from services like Aurora, RDS, and DynamoDB.

That’s great news, but it also means the messy part has simply shifted: you still need a clean, reliable way to move data in, shape it, and keep it fresh without your pipelines turning into a constant fire drill.

That’s where Redshift ETL tools still earn their keep. Even if some sources can replicate straight into Redshift, you’ll usually have more than one system in play (SaaS apps, operational databases, files in S3), plus the usual real-life requirements like incremental loads, schema drift, monitoring, and sane scheduling. And if you’re dealing with event-style data, Redshift’s streaming ingestion options (Kafka/MSK/Confluent and friends) are powerful – but they don’t magically replace the need for end-to-end pipeline management.

One more shift worth calling out for 2026: more teams are blending warehouse and lake patterns, especially with formats like Apache Iceberg, which Redshift can query directly. That opens up flexibility, but it also raises the bar on how you organize and govern data flows.

In this guide, we’ll walk through the best ETL tools for Amazon Redshift in 2026 and, more importantly, how to pick one based on how your data actually behaves – batch vs incremental, warehouse-only vs warehouse + lake.

Table of contents

- Key Advantages of Amazon Redshift

- What is ETL? How It Transforms Data Management in AWS Redshift

- Top ETL Tools for Redshift in 2026

- Optimize Your Redshift ETL: Achieving Speed, Efficiency, and Cost-Effectiveness

- Conclusion

Key Advantages of Amazon Redshift

Redshift is a cloud data warehouse that is part of the Amazon Web Services ecosystem. It’s designed to store and analyze large-scale data sets efficiently.

Amazon Redshift is based on PostgreSQL, so it speaks familiar SQL and stores data in a relational schema. Under the hood, it combines columnar storage with massively parallel processing (MPP), which is what keeps queries fast for teams that need answers quickly.

Key features:

- Cloud-based. Being hosted on the cloud, Amazon Redshift doesn’t require any on-premises installations.

- Scalability. The warehouse can scale to absorb unexpected data spikes or unusually large data sets.

- Elasticity. Capacity can be provisioned automatically to match current needs.

- Integration. Redshift connects easily to other AWS services and can integrate with other apps through Redshift ETL tools.

What is ETL? How It Transforms Data Management in AWS Redshift

ETL stands for Extract, Transform, Load: an approach originally used to convert transactional data into formats supported by relational databases. Today, it’s mainly used to move data between apps or to consolidate it in a database or data warehouse (DWH).

You can populate Redshift with hand-written ETL scripts, but those are complex to build and maintain. That’s why it makes sense to use AWS Redshift ETL tools, which usually offer a user-friendly graphical interface.

ETL tools bring each step of the concept (extraction, transformation, and loading) to life:

- Extract – ingesting data from a certain app or database.

- Transform – applying filtering, cleansing, validation, masking, and other transformation operations to data.

- Load – copying the extracted and transformed data into a destination app or data warehouse.

Together, these steps make up an ETL pipeline, which also covers source and destination connections, field mapping, filtering, and validation.
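The three steps can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool works: the sample records, the `orders` table, and the masking rule are all made up for the example, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3

def extract():
    """Extract: stand-in for reading rows from a source app or database."""
    return [
        {"id": 1, "email": " Ann@Example.com ", "amount": "19.90"},
        {"id": 2, "email": "bob@example.com", "amount": "5.00"},
        {"id": 3, "email": "", "amount": "7.25"},  # fails validation below
    ]

def transform(rows):
    """Transform: validate, cleanse, and mask each record."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()  # cleansing
        if not email:                          # validation: skip rows without an email
            continue
        user, _, domain = email.partition("@")
        masked = user[0] + "***@" + domain     # masking: hide most of the address
        cleaned.append((row["id"], masked, float(row["amount"])))
    return cleaned

def load(conn, rows):
    """Load: write the prepared rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # assumption: SQLite stands in for the warehouse
load(conn, transform(extract()))
loaded = conn.execute("SELECT id, email, amount FROM orders ORDER BY id").fetchall()
print(loaded)
```

Only two of the three sample rows reach the destination, because the transform step drops the record that fails validation.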

Even though ETL pioneered data integration, its successors, such as Reverse ETL and ELT, are gaining momentum. Both are also applicable to Redshift:

- Reverse ETL loads data from a DWH back into apps for data activation, so warehouse insights become usable in day-to-day operations.

- ELT loads data into a DWH before transforming it, which often makes ingestion faster than with ETL. This matters when large, fast-flowing data sets have to land in the DWH.
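To make the ELT ordering concrete, here is a small sketch: raw records are loaded first, and the transformation runs afterward as SQL inside the database. SQLite stands in for Redshift, and the `raw_events` and `purchases_by_user` tables are hypothetical names chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # assumption: SQLite stands in for Redshift

# Load: land the raw records untouched in a staging table.
conn.execute("CREATE TABLE raw_events (user_id INTEGER, event TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        (1, "purchase", "10.50"),
        (1, "purchase", "4.50"),
        (2, "refund", "3.00"),
        (2, "purchase", "8.00"),
    ],
)

# Transform: reshape the data inside the warehouse with plain SQL.
conn.execute(
    """
    CREATE TABLE purchases_by_user AS
    SELECT user_id, SUM(CAST(amount AS REAL)) AS total
    FROM raw_events
    WHERE event = 'purchase'
    GROUP BY user_id
    """
)

totals = conn.execute(
    "SELECT user_id, total FROM purchases_by_user ORDER BY user_id"
).fetchall()
print(totals)
```

Because the raw table is kept, the transformation can be changed and rerun later without going back to the source.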

Top ETL Tools for Redshift in 2026

Because ETL has two modern variations, some ETL tools also come with built-in ELT and Reverse ETL capabilities. Below is a list of Redshift ETL tools, with their features and pricing models.

Skyvia

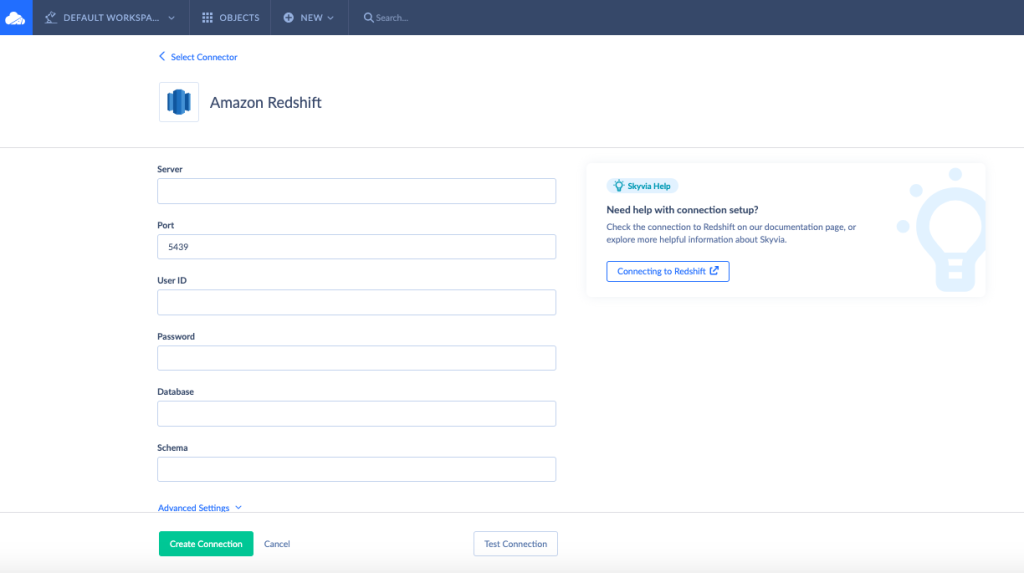

Skyvia is a Redshift ETL tool that lets teams load, transform, and sync data from 200+ cloud apps and databases into Amazon Redshift through a no-code interface, without getting pulled into h

Key features

Skyvia is the best ETL tool for Redshift because it covers the full spectrum of functions needed to make data warehousing simple yet powerful.

- The Replication component embodies the ELT concept: it copies data from the chosen app or database into a DWH and can even create the tables for that data automatically. This scenario is ideal for AWS Redshift and other DWHs because it allows scheduling regular incremental data updates.

- The Import component connects AWS Redshift to other sources and loads data into a DWH (ETL) or back out of it (Reverse ETL).

- Data Flow and Control Flow tools. Data Flow enables complex data integration scenarios with compound data transformations. Control Flow orchestrates tasks by defining the order in which integration tasks run under specific conditions.
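For readers new to incremental updates, the general idea can be sketched with a "high-water mark": each run sends only the rows changed since the previous run. This is a generic illustration, not Skyvia's implementation; the `updated_at` field and the `incremental_batch` helper are hypothetical.

```python
from datetime import datetime

def incremental_batch(source_rows, last_watermark):
    """Return the rows changed after last_watermark, plus the new watermark."""
    changed = [r for r in source_rows if r["updated_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from the same point.
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark

source = [
    {"id": 1, "updated_at": datetime(2026, 1, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2026, 1, 2, 9, 0)},
    {"id": 3, "updated_at": datetime(2026, 1, 3, 9, 0)},
]

# A previous run covered everything through Jan 1; only later changes are sent now.
batch, watermark = incremental_batch(source, datetime(2026, 1, 1, 9, 0))

# A second run with no new changes sends nothing and keeps the watermark.
empty_batch, same_watermark = incremental_batch(source, watermark)
print([r["id"] for r in batch], watermark)
```

The watermark has to be stored between runs, which is one of the chores a managed tool typically takes care of.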

Skyvia also has other data integration components, as well as tools for workflow automation and for creating web API endpoints with no coding.

The biggest advantage of this service is that you can build all your data integration pipelines with no coding, using just the drag-and-drop visual interface!

Pricing

Skyvia offers a free plan with all principal features and connections available, though it limits integration frequency and the amount of data processed. The Basic plan extends these limits slightly, while the Enterprise and Advanced plans practically remove them.

The latter even offers complex integration scenarios where you can include more than three sources and specify task execution conditions.

AWS Glue

AWS Glue is Amazon’s serverless ETL service that prepares and moves data into Amazon Redshift using Spark under the hood, making it a natural fit for teams already deep in the AWS ecosystem who are comfortable trading simplicity for scale and flexibility.

Key features

- Supports ETL, ELT, batch, and streaming workloads.

- Connects to 70+ sources.

- Automatically recognizes the data schema.

- Uses ML algorithms to point out data duplicates.

- Schedules jobs.

- Scales on demand.

Pricing

AWS Glue follows a pay-as-you-go model: you are billed for the compute your jobs consume, measured in DPU-hours, so the cost depends on how long each job runs and how much capacity it uses. Try out the pricing calculator to get an approximate cost, or get a quote from Sales.
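As a rough back-of-the-envelope check, a job's cost can be estimated as capacity multiplied by runtime multiplied by the hourly rate. Glue measures capacity in DPUs (data processing units). The rate in this sketch is an assumed example value, not a quoted price, since actual rates vary by region and job type; the job size and schedule are made up too.

```python
def glue_job_cost(dpus, runtime_minutes, rate_per_dpu_hour):
    """Estimate the cost of one job run: DPUs x hours x hourly rate."""
    return round(dpus * (runtime_minutes / 60) * rate_per_dpu_hour, 2)

ASSUMED_RATE = 0.44  # assumption: example USD rate per DPU-hour, check current pricing

# Hypothetical nightly job: 10 DPUs running for 15 minutes.
nightly = glue_job_cost(dpus=10, runtime_minutes=15, rate_per_dpu_hour=ASSUMED_RATE)
monthly = round(nightly * 30, 2)  # the same job run once a day for 30 days
print(nightly, monthly)
```

The estimate ignores billing minimums and extras such as crawlers or the Data Catalog, so treat it as a starting point rather than a forecast.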

Apache Spark

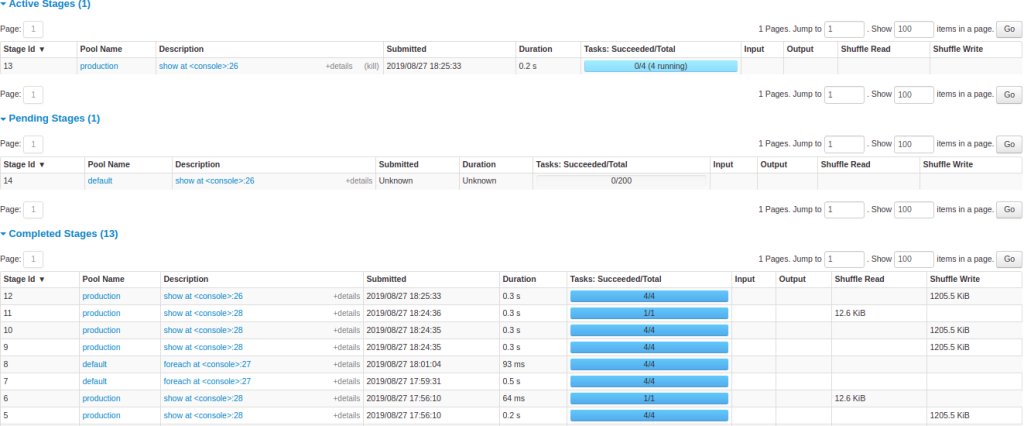

Apache Spark is a distributed data processing engine often used for large-scale Redshift ETL workloads, where teams need to transform and prepare massive datasets before loading them into Amazon Redshift with full control over performance and logic.

Key features

- Supports real-time data streaming and batch data.

- Executes ANSI SQL queries and builds ad-hoc reports.

- Trains machine learning models with large datasets.

- Works with structured and unstructured data.

Pricing

As an open-source project, Apache Spark is free to use and has no pricing model of its own. The total cost depends on the infrastructure it runs on and on the installation and configuration work performed by specialists.

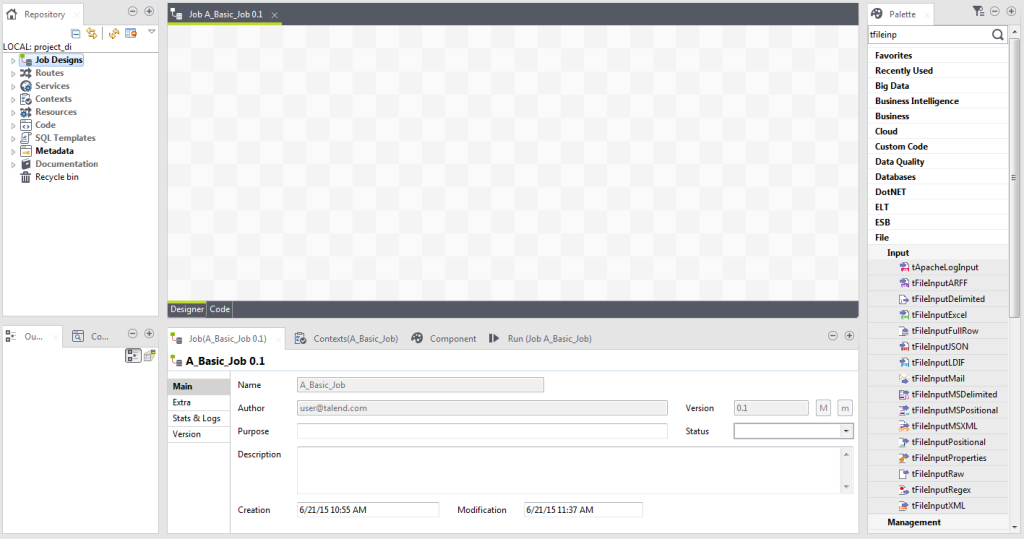

Talend

Talend is an enterprise-grade Redshift ETL platform that helps teams design, transform, and govern complex data pipelines feeding Amazon Redshift, especially when data quality, compliance, and scale are part of the equation.

Key features

- Ingests, transforms, and maps data.

- Designs and deploys pipelines with the possibility to reuse them.

- Prepares data either automatically or with self-service tools.

- Ensures data monitoring and advanced reporting.

Pricing

Talend uses enterprise subscription pricing with no public rates, so costs are quoted on request and typically require working directly with the sales team.

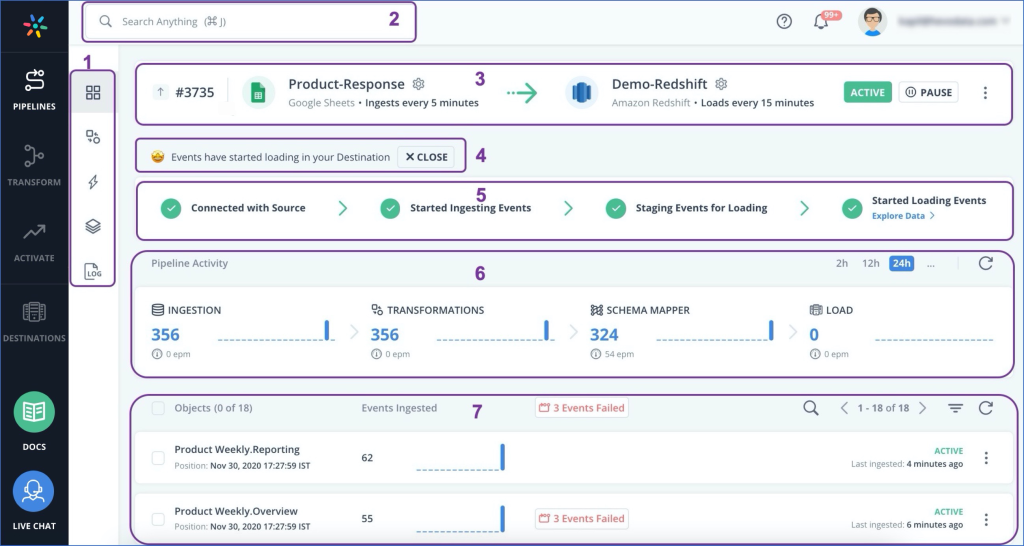

Hevo

Hevo Data is a no-code Redshift ETL tool that streams data from popular cloud sources into Amazon Redshift in near real time, handling schema mapping and incremental updates automatically so teams can move fast without writing pipelines from scratch.

Key features

- Provides complete visibility of how data flows across the data pipelines.

- Cleans and transforms data before it’s loaded into a DWH.

- Ensures data security with end-to-end encryption and 2-factor authentication.

- Complies with SOC 2, GDPR, and HIPAA standards.

- Sends notifications about any delays and errors.

- Pre-aggregates and denormalizes data for faster analytics.

Pricing

Hevo Data uses a tiered subscription model based on data volume or event usage, with pricing details available on request or via their sales team for larger plans.

Integrate.io

Integrate.io is a no-code Redshift ETL platform that pulls data from SaaS apps and databases into Amazon Redshift, handling transformations and monitoring along the way so teams can focus on analysis instead of babysitting pipelines.

Key features

- Data transformation with a low-code approach, suitable even for non-engineers.

- Integrate.io’s REST API support lets users connect to any other source that exposes a REST API.

- Data security is provided by SSL/TLS encryption and firewall-based access control.

- Compliance with SOC 2, GDPR, CCPA, and HIPAA.

Pricing

The monthly cost of the plan for building ELT pipelines starts from $159.

Stitch

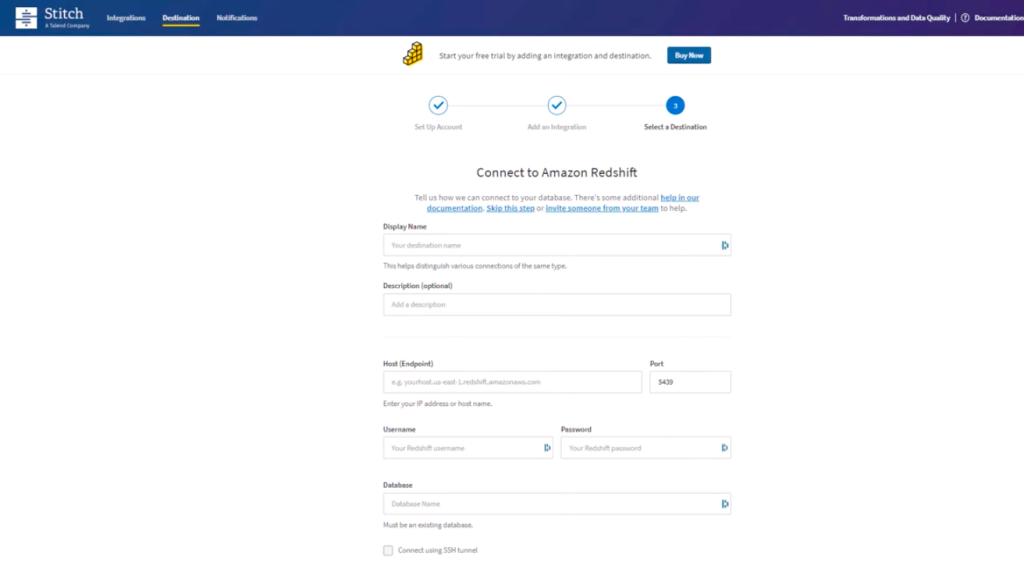

Stitch makes it easy to load data from everyday sources into Amazon Redshift, handling the loading step with minimal setup, so teams can start working with the data without diving into ETL plumbing.

Key features

- API key management for adding connections programmatically.

- Logs and notifications on any errors.

- Advanced scheduling options.

- Automatic scaling that adjusts to the actual data load.

Pricing

Stitch uses a tiered subscription pricing model based primarily on row volume, with transparent plan levels and the option to work with sales for higher-volume or enterprise needs.

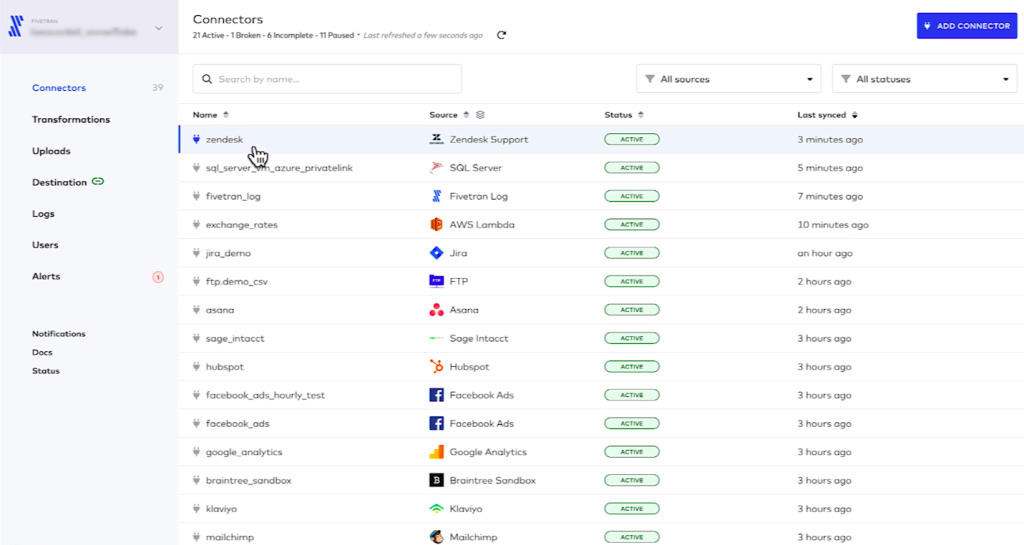

Fivetran

Fivetran is a managed Redshift ELT tool that continuously pulls data from a wide range of sources into Amazon Redshift, handling incremental updates and schema changes so teams can focus on analytics rather than pipeline upkeep.

Key features

- Automatic data cleansing and duplicate removal.

- Preliminary data transformations before the data is loaded into a DWH.

- Data synchronization on schedule.

- Masking for protecting sensitive data.

Pricing

Fivetran uses a usage-based pricing model driven by Monthly Active Rows (MAR), with costs scaling as data change volume grows and enterprise plans typically handled through the sales team.

Optimize Your Redshift ETL: Achieving Speed, Efficiency, and Cost-Effectiveness

Even refined ETL pipelines that deliver ready-to-use data into AWS Redshift need to account for this DWH’s architecture to get the best results.

Here are several tips for optimizing your Redshift ETL to speed up decision-making and make the process more efficient and cost-effective.

Load Data in Bulk

Amazon Redshift is a DWH designed to operate on petabytes of data using massively parallel processing (MPP). This applies not only to the compute nodes but also to Redshift Managed Storage (RMS).

Loading data in bulk ensures that DWH resources are used efficiently, with no nodes sitting idle. Keep this in mind whether you ingest data directly within AWS Redshift or through external ETL tools.
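In practice, bulk loading usually means splitting the data into several similarly sized files in S3 and loading them all with a single COPY command, so the work is spread across the cluster. The sketch below only builds the pieces; the table name, bucket, and IAM role are hypothetical placeholders, and both helper functions are written for this example.

```python
def split_evenly(rows, parts):
    """Split rows into `parts` chunks of near-equal size, one per output file."""
    size, extra = divmod(len(rows), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(rows[start:end])
        start = end
    return chunks

def build_copy_sql(table, s3_prefix, iam_role_arn):
    """Build one COPY statement that loads every gzip CSV file under an S3 prefix."""
    return (
        f"COPY {table} "
        f"FROM '{s3_prefix}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS CSV GZIP;"
    )

# Ten sample rows split into four files of near-equal size.
chunks = split_evenly(list(range(10)), 4)

copy_sql = build_copy_sql(
    "analytics.orders",                                    # hypothetical table
    "s3://example-bucket/orders/2026-01-01/part-",         # hypothetical key prefix
    "arn:aws:iam::123456789012:role/ExampleRedshiftCopy",  # hypothetical role
)
print([len(c) for c in chunks])
print(copy_sql)
```

One COPY over many files is generally preferable to many single-row INSERT statements, which leave most of the cluster waiting.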

Perform Regular Table Maintenance

With incremental updates, fresh rows are appended to the unsorted region of the table, while the rows they replace are only marked for deletion.

The space occupied by those deleted rows isn’t reclaimed right away, and user query performance can degrade significantly as the unsorted region grows. To fix this, run the VACUUM command against the table.
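One way to decide which tables need attention is to read the `unsorted` and `stats_off` percentages from the `SVV_TABLE_INFO` system view and generate maintenance statements only for tables past a threshold. The sketch below assumes the rows have already been fetched; the 10 percent thresholds, the sample tables, and the `maintenance_sql` helper are illustrative choices, not recommendations from the article.

```python
def maintenance_sql(tables, unsorted_threshold=10.0, stats_threshold=10.0):
    """Return VACUUM/ANALYZE statements for tables past the given thresholds."""
    statements = []
    for t in tables:
        name = f'{t["schema"]}.{t["table"]}'
        # `unsorted` can be NULL (None) for tables without a sort key.
        if (t["unsorted"] or 0) > unsorted_threshold:
            statements.append(f"VACUUM FULL {name};")
        if (t["stats_off"] or 0) > stats_threshold:
            statements.append(f"ANALYZE {name};")
    return statements

# Rows shaped like the result of:
#   SELECT "schema", "table", unsorted, stats_off FROM svv_table_info;
sample = [
    {"schema": "public", "table": "orders", "unsorted": 35.2, "stats_off": 4.0},
    {"schema": "public", "table": "events", "unsorted": 2.0, "stats_off": 25.0},
    {"schema": "public", "table": "dim_country", "unsorted": None, "stats_off": 0.0},
]

statements = maintenance_sql(sample)
print(statements)
```

Redshift also runs some vacuum and sort work automatically in the background, so a check like this is mostly useful for tables that take heavy incremental loads.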

Use Workload Manager

Amazon Redshift has a built-in feature for managing workload queues inside the DWH: workload management (WLM). It lets you define several queues, each dedicated to a specific workload.

To keep all processes running efficiently, the overall concurrency across all queues shouldn’t be greater than 15. In particular, WLM helps to improve ETL runtimes.
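A quick sanity check on a manual WLM setup might look like the sketch below. It reads the limit of 15 as a cap on total concurrency slots summed across queues, which is an interpretation made for this example. The queue layout and user groups are hypothetical; the `query_concurrency` and `memory_percent_to_use` keys follow the format of the `wlm_json_configuration` parameter.

```python
import json

# Hypothetical manual WLM layout: an ETL queue, a BI queue, and a default queue.
wlm_config = json.loads("""
[
  {"user_group": ["etl"], "query_concurrency": 3, "memory_percent_to_use": 40},
  {"user_group": ["bi"], "query_concurrency": 8, "memory_percent_to_use": 40},
  {"query_concurrency": 2, "memory_percent_to_use": 20}
]
""")

def total_concurrency(queues):
    """Sum the concurrency slots configured across all queues."""
    return sum(q.get("query_concurrency", 0) for q in queues)

def within_guideline(queues, limit=15):
    """Check the summed concurrency against the guideline limit."""
    return total_concurrency(queues) <= limit

slots = total_concurrency(wlm_config)
ok = within_guideline(wlm_config)
print(slots, ok)
```

Giving ETL its own small queue, as in this layout, keeps long-running loads from competing with interactive queries for the same slots.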

Choose the Right ETL Tool

The key to fast, high-performing Redshift ETL is choosing the right ETL tool. Data load frequency, transformations, and the number of sources are the most critical criteria in making that choice.

Skyvia could be a perfect choice as it designs simple and complex ETL pipelines with no code, applies advanced transformations on data, performs data filtering, and ensures constant monitoring of their execution!

Conclusion

Amazon Redshift is the preferred data warehouse for many companies. Its cost-effectiveness, scalability, speed, and built-in tools make it a favorable environment for data storage and management.

Even though Redshift has its own ETL mechanisms, they might not always be the best option for populating a DWH. That’s where professional ETL tools come in: they connect to apps and databases, extract data, transform it, and copy it into a data warehouse. Skyvia is a universal Redshift ETL tool with everything necessary to populate your DWH.

F.A.Q. for Redshift ETL tools

Why should I use a dedicated ETL tool instead of Redshift’s built-in capabilities?

Tools simplify building and maintaining pipelines, handle schema changes automatically, and provide visual workflows, monitoring, and scheduling, so you spend time on insights, not data plumbing.

Do these tools support real-time data loads into Redshift?

Many modern ETL tools offer near-real-time or incremental loading, capturing only changes after the initial load, reducing costs, and keeping your Redshift data fresh for analytics.

How do I choose the right Redshift ETL tool for my team?

Think about your data volume, whether you need real-time updates, how much transformation you perform before loading, and your team’s skill level. Some tools are no-code, others require more engineering know-how.

Are these ETL tools expensive to run with Redshift?

Costs vary by tool and usage patterns. Cloud-native options often charge based on data volume or jobs run, while open-source engines may have infrastructure costs. Plan with expected data loads and refresh cadence in mind.

Can I transform data before it hits Redshift with these tools?

Yes, many tools let you clean, enrich, and shape data before loading. Some follow a classic ETL approach, while others lean toward ELT, loading raw data and transforming it in Redshift or another compute layer.