Businesses don’t just succeed by picking up any old data solutions and hoping for the best. They strike gold when they zero in on the right tool that can keep pace with their data’s constant motion. But here’s where it gets risky: the modern market offers numerous options, and it’s challenging to make the right choice, especially when talking about complicated solutions like Change Data Capture (CDC).

Yet a crowded market doesn’t have to make things harder for your business if you know where to look, what to anticipate, and how to slip past the barriers.

Ready to cut through the noise and find the perfect CDC tool? This article presents the 13 best CDC tools for 2026. One of them may be the missing piece your business needs. So, let’s explore.

Table of Contents

- What are the Change Data Capture (CDC) Tools?

- Why Do You Need CDC Tools?

- Benefits of Change Data Capture Tools

- Challenges and Considerations of CDC Tools

- Best CDC Tools for 2026

- Choosing the Best Change Data Capture Tool

- Conclusion

What are the Change Data Capture (CDC) Tools?

Change Data Capture (CDC) is a pattern that automatically detects and records modifications in databases (DBs) or other source systems as they occur (or nearly instantly), such as:

- New entries (inserts)

- Updates

- Deletions

This approach helps move high volumes of records across systems and keeps downstream targets, such as cloud databases and warehouses, up to date.

Take an online store that depends on an active DB (like PostgreSQL) to manage:

- Orders

- Customer details

- Inventory levels

- Pricing information

On the other end of the pipeline is a data warehouse (DWH) that holds a copy of the store’s records. The goal is to make sure the destination reflects all current database activity for quick decisions and accurate reports.

What can they do?

In short, implement a CDC solution to monitor the operational DB for any changes.

How does that work?

Here’s a closer look at what happens behind the scenes.

- A transaction happens (e.g., a customer places a new order, updates their shipping address, or product inventory levels go up or down), and the CDC tool captures these changes.

- Then, it temporarily stages the changed records.

- The staged changes make their way to the DWH in real time or nearly so. The CDC tool directs each update to its correct location.
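To make these three steps concrete, here is a minimal in-memory Python sketch. It assumes a hypothetical change-event shape (`op`, `table`, `key`, `row`) and uses plain dictionaries in place of a real database and warehouse; actual CDC tools read changes from the source (often its transaction log) and write to real targets.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ChangeEvent:
    # Hypothetical event shape: operation, target table, primary key, new row values
    op: str                     # "insert", "update", or "delete"
    table: str
    key: Any
    row: Optional[dict] = None  # None for deletes


@dataclass
class Warehouse:
    # Stand-in for the DWH: table name -> {primary key -> row}
    tables: dict = field(default_factory=dict)

    def apply(self, event: ChangeEvent) -> None:
        table = self.tables.setdefault(event.table, {})
        if event.op in ("insert", "update"):
            # Upsert: replaying the same event leaves the target unchanged
            table[event.key] = dict(event.row)
        elif event.op == "delete":
            table.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")


def run_pipeline(captured: list[ChangeEvent], target: Warehouse) -> None:
    staged = list(captured)   # step 2: stage the captured changes
    for event in staged:      # step 3: route each change to its target table
        target.apply(event)


# Step 1: changes captured from the store's operational DB
captured = [
    ChangeEvent("insert", "orders", 1001, {"customer": "c42", "total": 59.90}),
    ChangeEvent("update", "customers", "c42", {"address": "12 New St"}),
    ChangeEvent("update", "inventory", "sku-7", {"qty": 18}),
    ChangeEvent("delete", "orders", 1001),
]
dwh = Warehouse()
run_pipeline(captured, dwh)
print(dwh.tables)
```

Treating inserts and updates as upserts is a deliberate choice here: it keeps the target correct even if the same event is delivered twice.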

Real-time analytics and fresh reports become an everyday reality, not a prize at the end of an excruciating marathon of manual work, comparisons, and doubt at every click.

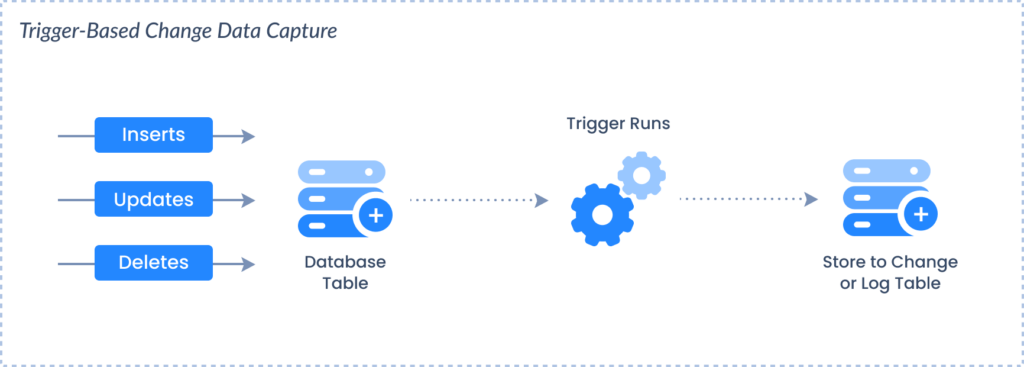

The walkthrough above shows the general flow, but CDC methods vary in how they catch and mirror data activity. These include trigger-based, log-based, timestamp-based, snapshot-based, and hybrid CDC. For more info on each method’s strengths, weaknesses, and ideal use cases, check out our full article on what CDC is.
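As a rough illustration of one of these methods, timestamp-based CDC, the sketch below polls a table for rows whose `updated_at` value is newer than the last high-water mark. It uses Python’s built-in `sqlite3` module and a hypothetical `orders` table.

```python
import sqlite3


def fetch_changes(conn: sqlite3.Connection, last_seen: str) -> tuple[list[tuple], str]:
    """Return rows modified after `last_seen` and the new high-water mark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    # Advance the watermark to the newest timestamp seen in this batch
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "new", "2026-01-01T10:00:00"), (2, "new", "2026-01-01T10:05:00")],
)

# First poll: both rows are newer than an empty watermark
batch, mark = fetch_changes(conn, "")
print(len(batch), mark)

# Order 1 ships, so its timestamp moves forward
conn.execute(
    "UPDATE orders SET status = 'shipped', updated_at = '2026-01-01T11:00:00' WHERE id = 1"
)

# Second poll: only the modified row comes back
batch, mark = fetch_changes(conn, mark)
print(batch)
```

This method cannot see hard deletes (a deleted row simply stops appearing), and a strict `>` comparison can miss rows that share the watermark’s timestamp, so real implementations often add a tiebreaker column or switch to log-based capture.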

Why Do You Need CDC Tools?

Every business, sooner or later, experiences the moment when its data becomes its biggest bottleneck – CDC tools are designed to fix exactly that problem. Before diving into specific tools, it’s worth understanding what CDC solutions can do.

| Requirement | Business Case | How CDC Delivers |
|---|---|---|
| Real-Time Data Synchronization | Teams depend on up-to-the-minute data flowing between systems to stay razor-focused. | CDC tools capture every update as it occurs and push it to all connected systems right away. |
| Efficient Data Integration | With old-school batch processing, records are stale by the time they arrive. | By moving only the changes, CDC slashes transfer loads and makes integration far more efficient. |
| Operational Efficiency | Manual copying is time-consuming and error-prone. | CDC automates the process, so no one wastes time on tedious tasks. |
| Support for Event-Driven Architecture | Companies want systems that react quickly to record updates without their components being tightly coupled. | CDC continuously monitors for modifications and emits an event the moment anything is updated. |
| Data Consistency and Quality | Accurate analytics and operations require consistent data across all systems. | CDC propagates every change from source to target, preserving consistency and improving data quality. |
| Regulatory Compliance and Auditing | Businesses need to chronicle every twist and turn of their data. | CDC tracks and documents alterations, helping businesses stay compliant and making life simpler when auditors come knocking. |

Benefits of Change Data Capture Tools

It’s a win-win when technology’s strengths outweigh its drawbacks, but this fragile and complex balance varies from case to case. First, here’s why CDC solutions are usually worth the effort.

- Minimized Impact on Source Systems. Because they move only modifications rather than full extracts, CDC tools keep source load, performance degradation, and operational disruption to a minimum.

- Optimized Network and Storage Usage. When only changed records are transferred, network capacity requirements and storage consumption are lower.

- Better Analytical and Reporting Abilities. Fresh data ripens into the perfect recipe for pinpoint insights and instant reporting.

- Event-Driven Architecture Support. CDC tools can trigger downstream actions when specific changes occur, leading to more responsive and dynamic application behaviors and better customer experiences.

- Reduced Costs and Resource Requirements. They decrease the need for manual data babysitting and batch processing.

- Enables Data Modernization and Cloud Migration. CDC is an effective strategy when companies need to modernize their data infrastructure without interrupting operations.

- Scalability. Modern implementations can grow together with data frameworks.
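A back-of-the-envelope calculation shows why the network and storage savings can be large. The figures below are hypothetical (a 10-million-row table, 1 KB per row, 0.5% of rows changing per day), not benchmarks.

```python
# Hypothetical workload figures, for illustration only
rows_total = 10_000_000      # rows in the source table
row_size_kb = 1              # average row size in KB
daily_change_rate = 0.005    # 0.5% of rows inserted, updated, or deleted per day

# 1 GB = 1,000,000 KB (decimal units)
full_extract_gb = rows_total * row_size_kb / 1_000_000
changed_rows = int(rows_total * daily_change_rate)
cdc_gb = changed_rows * row_size_kb / 1_000_000

print(f"Full daily extract: {full_extract_gb:.1f} GB")
print(f"CDC daily delta:    {cdc_gb:.2f} GB ({changed_rows:,} rows)")
print(f"Reduction:          {full_extract_gb / cdc_gb:.0f}x")
```

Real savings depend on row size, change rate, and per-event overhead such as change metadata, which this estimate ignores.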

Challenges and Considerations of CDC Tools

Change Data Capture platforms deliver impressive results, but they’re not bulletproof. Here’s what you need to watch out for.

| Challenge | Impact | How to Overcome It |
|---|---|---|
| Data Consistency & Integrity | Risk of data drift, loss, or duplication in distributed systems. | Pick CDC tools that keep data accurate when many changes happen at once. Test the tricky edge cases where your records could end up inconsistent. |
| Performance | CDC adds load to source systems, which might cause delays. | Monitor system performance closely during testing and adjust settings when necessary. Opt for solutions that minimize the impact on the source system. |
| Schema Evolution/Drift | Pipelines fail after schema changes. | Hunt for tools that adapt like chameleons to schema changes or provide frameworks to manage and propagate these changes quickly. |
| Operational Complexity | You must manage a lot of moving parts, which can become messy when working with multiple systems. | Planning and working with CDC specialists helps ensure the solution fits the existing setup like a glove and can handle future expansion. |
| Scalability | As data volumes grow, the CDC system might not scale properly. | Select systems that can adjust their processing power as needed and distribute work to prevent bottlenecks. |
| Security & Access | Log-based CDC may require elevated database privileges, which widens the attack surface. | Encrypt data in motion and at rest. Confirm that the CDC platform meets the required compliance standards, and audit user permissions carefully and regularly. |
| Technical Expertise | Implementation and day-to-day operation require specialized technical knowledge and practical experience. | Train your team or partner with providers that offer quality support and hands-on experience. |
| Latency & Event Ordering | Busy or geographically distributed systems can deliver events out of sequence or late, creating chaos for downstream processing. | Process events in the correct order, deduplicate them, and buffer out-of-order records when needed. |
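The ordering advice in the last row can be sketched as a small reorder buffer. This is a generic illustration, assuming each event carries a hypothetical, gap-free per-source sequence number (real tools track log positions such as LSNs or offsets, which are not necessarily contiguous): duplicates are dropped, early arrivals are held back, and events are released strictly in sequence.

```python
import heapq


class ReorderBuffer:
    """Releases events in sequence order and drops duplicates (sketch)."""

    def __init__(self, next_seq: int = 1):
        self.next_seq = next_seq   # next sequence number we expect to emit
        self.pending = []          # min-heap of (seq, payload) that arrived early
        self.buffered = set()      # sequence numbers currently waiting in the heap

    def push(self, seq: int, payload: str) -> list[str]:
        if seq < self.next_seq or seq in self.buffered:
            return []              # already emitted or already waiting: a duplicate
        self.buffered.add(seq)
        heapq.heappush(self.pending, (seq, payload))
        released = []
        # Emit every event that is now contiguous with what has been processed
        while self.pending and self.pending[0][0] == self.next_seq:
            seq_out, payload_out = heapq.heappop(self.pending)
            self.buffered.discard(seq_out)
            released.append(payload_out)
            self.next_seq += 1
        return released


buf = ReorderBuffer()
out = []
arrivals = [(2, "update qty"), (1, "insert order"), (2, "update qty"), (3, "delete order")]
for seq, payload in arrivals:
    out.extend(buf.push(seq, payload))
print(out)
```

Event 2 arrives first and is held until event 1 shows up; its redelivered copy is discarded, so downstream code sees insert, update, delete in that order, exactly once.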

Best CDC Tools for 2026

Don’t let these challenges intimidate you: choosing the right CDC tool, one that adapts as your business grows, can prevent at least half of them.

Several more are easy to resolve if the tool offers scalability, monitoring, and flexible configuration options. That leaves you free to enjoy the benefits CDC brings to your data management.

Here’s a guide to 13 CDC tools and their significant features, strengths, and drawbacks to consider when selecting the right fit for your particular needs.

| Tool | Best For | Pros | Cons | Use Case |
|---|---|---|---|---|
| Skyvia | No-code data integration, SaaS apps, and DB sync | User-friendly, 200+ sources, scalable | Depends on internet connectivity, lacks video tutorials | Replication, transformation, and automation across cloud apps |
| Debezium | Real-time DB updates, event streaming | Open-source, Kafka integration, supports many DBs | Requires Kafka expertise, complex setup | Real-time streaming to analytics platforms, DB-to-DB replication |
| Oracle GoldenGate | Enterprise-grade replication across platforms | High performance, supports heterogeneous DBs | Expensive, complex setup | Data consistency in mission-critical systems, cloud-to-premises sync |
| Apache NiFi | High-velocity data ingestion, real-time processing | Scalable, flexible UI, secure data routing | Steep learning curve, resource-intensive | IoT data processing, log aggregation, and system mediation |
| Qlik Replicate | Fast, reliable data streaming from multiple sources | User-friendly, efficient data replication | Expensive, complex workflows require expertise | Big data replication, ML, and hybrid environments |
| IBM InfoSphere | Real-time data integration, analytics, governance | Reliable, supports various DBs, suitable for high throughput | Complex management, high TCO | Ongoing data sync across operational systems and DWHs |
| Striim | Real-time data integration and analytics | In-memory processing, comprehensive integration | Limited pricing transparency, steep learning curve | IoT, e-commerce, and real-time operational intelligence |
| StreamSets | Hybrid and multi-cloud data integration | Real-time integration, flexible, works with batch | Overkill for small projects, takes time to learn | Real-time data flow, cloud-to-cloud and on-prem data movement |
| Talend | Migration, transformation, cloud modernization | Open-source version, strong ETL capabilities | Costly enterprise version, steep learning curve | Cloud and big data integration, master data management |
| Hevo Data | No-code integration, real-time updates | Easy to use, automatic mapping, 150+ sources | Costly for high-volume data, limited transformations | Automated pipelines, real-time sync for data-driven decision-making |
| Fivetran | Automated ELT pipelines, real-time CDC | Saves engineering time, broad connector library | Expensive, lacks native reporting | SaaS data integration, marketing analytics, and customer 360 views |
| Airbyte | Open-source, scalable ELT, real-time sync | Extensive connectors, free community edition | Costly enterprise features, basic monitoring | Data lakes, real-time sync, AI/ML data prep |
| Estuary Flow | Low-latency, scalable CDC for AI and event-driven apps | Real-time processing, cost-effective for enterprises | Smaller ecosystem, learning curve for streaming concepts | Real-time analytics, AI-powered pipelines |

Skyvia

Skyvia is a leading no-code CDC platform that automates incremental data capture and loading across 200+ cloud apps, databases, and data warehouses, keeping systems continuously in sync without engineering overhead.

Best For

- Users looking for a no-code interface to connect their SaaS applications, databases, and cloud DWHs without deep technical expertise.

- Data replication and synchronization, including one-way and bi-directional sync between cloud sources.

- Data transformation and enrichment.

- Building complex data flows involving multiple sources and transformations with minimal effort.

Reviews

G2 Crowd – 4.8/5 (based on 222 reviews)

Both business users and tech teams love Skyvia for its user-friendliness and flexibility. People consistently mention how quickly they can connect systems and automate processes across numerous cloud tools and databases.

The standout customer service, quick response times, and one of the lowest prices for this type of functionality on the market also receive frequent mentions.

Key Features

- A simple wizard-based interface allows users to develop complex data automation.

- Handles the entire pipeline: extraction, transformation, and loading, as well as reverse ETL for sending processed records back to applications.

- Grabs changes in real time and automates workflows to guarantee constant updates.

- Features a query builder for easy access to insights without requiring deep SQL knowledge.

- Scalable to fit growing business needs, with a focus on secure, automated data management.

Pros

- No-code platform accessible to both technical and non-technical users.

- Can handle a variety of data-related tasks.

- Supports 200+ cloud apps, DBs, and services for seamless integration.

- Offers automated workflows and low-maintenance pipelines for continuous operations.

Cons

- Depends on internet connectivity.

- More video tutorials would be helpful.

- Its extensive features might be overwhelming for users unfamiliar with data management.

Pricing

Pricing includes a free tier; paid plans start at $79/month and scale with features and data volumes. The paid version lets you run tasks more often, schedule automatic updates, match data between systems, import information, split files, and much more.

A 14-day free trial lets users test everything, including scheduled integrations, source and expression lookup mapping, sync, data import, and file splitting, to see how Skyvia supports their operations.

Debezium

Debezium is an open-source CDC tool that captures row-level database changes in real time and streams them into Apache Kafka, turning transactional databases into event-driven data sources for distributed systems.

Best For

- Organizations that need live DB updates circulating through their warehouses, analytics platforms, and search systems in real time.

- Teams creating heavy-duty pipelines for analytics and reporting that can handle whatever volume they throw at them.

- Maintaining razor-sharp accuracy in search engines and caching layers so users always get current results.

- Businesses orchestrating data migrations and cross-environment replication without the usual headaches.

Reviews

Debezium isn’t listed on popular review aggregators, but here’s what users across the Internet say about the platform after working with it.

Debezium is known for being fast and reliable, operating at scale, and supporting real-time data streaming. However, teams with little or no experience running complex distributed systems find its hands-on configuration and demanding operations challenging.

Key Features

- Native integration with Apache Kafka for real-time data streaming.

- Supports numerous DBs, including PostgreSQL, MySQL, MongoDB, and SQL Server.

- Transparent handling of database schema changes.
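To show what streamed row-level changes look like downstream, here is a sketch that applies Debezium-style change events to an in-memory replica. It assumes the JSON value has already been read from a Kafka topic (the consumer is omitted) and follows Debezium’s documented envelope fields `before`, `after`, and `op`, with both the `schema`/`payload` wrapper and the schema-less form. The `id` key column and the sample events are hypothetical.

```python
import json
from typing import Optional


def apply_debezium_event(raw: Optional[str], replica: dict) -> None:
    """Apply one Debezium-style change event (JSON value) to a dict keyed by id."""
    if raw is None:
        # Tombstone: a null value emitted after a delete, used for Kafka log compaction
        return
    value = json.loads(raw)
    # When the JSON converter includes schemas, the envelope sits under "payload"
    event = value.get("payload", value)
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, update: "after" holds the new row state
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":
        # delete: "after" is null, so take the key from "before"
        replica.pop(event["before"]["id"], None)
    # Other operation types (e.g. truncate) are ignored in this sketch


replica = {}
events = [
    '{"payload": {"op": "c", "before": null, "after": {"id": 1, "status": "new"}}}',
    '{"op": "c", "before": null, "after": {"id": 2, "status": "new"}}',
    '{"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}}',
    '{"op": "d", "before": {"id": 2, "status": "new"}, "after": null}',
    None,
]
for raw in events:
    apply_debezium_event(raw, replica)
print(replica)
```

Because every event carries the full row state, the consumer only needs upsert and delete logic; what `before` contains for updates and deletes can depend on the source database’s configuration.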

Pros

- Wide database support.

- Consuming applications can be stopped and restarted as needed without missing events.

- It integrates well with Kafka ecosystems.

Cons

- It requires Kafka expertise.

- Setup can be complex.

Pricing

This solution is free since it’s open-source, but hidden costs (infrastructure scaling, cloud licensing nuances, support and maintenance, etc.) can still catch you off guard.

Oracle GoldenGate

Oracle GoldenGate is a mature, enterprise-grade CDC solution that keeps data moving in real time across on-prem, cloud, and hybrid environments, even when systems and databases don’t match neatly.

Best For

- Maintaining systems that need data consistency across various DBs, including both Oracle and non-Oracle systems.

- Sectors such as finance, e-commerce, and telecom that run mission-critical systems and cannot afford downtime.

- Easy synchronization of data between the cloud and on-premises systems.

Reviews

G2 Crowd – 3.9/5 (based on 34 reviews)

It’s often considered the gold standard for enterprise-grade CDC and replication, particularly when your whole system is built around Oracle. However, it’s best suited to large organizations that can handle its complexity and high cost.

Key Features

- Advanced conflict resolution mechanisms for complex replication scenarios.

- Detailed transaction data capture and delivery with minimal impact on source systems.
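GoldenGate’s own conflict handling is configured within the product and is not reproduced here. As a generic illustration of what conflict resolution means in bidirectional replication, the sketch below applies a simple "latest timestamp wins" rule, with a site-ID tiebreaker, to two versions of the same row. The field names and the rule itself are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RowVersion:
    key: int
    value: dict
    updated_at: float   # commit timestamp at the originating site (epoch seconds)
    site_id: str        # which replica produced this version


def resolve(local: RowVersion, incoming: RowVersion) -> RowVersion:
    """Last-writer-wins: keep the newer version; break ties by site_id."""
    if local.key != incoming.key:
        raise ValueError("versions refer to different rows")
    if incoming.updated_at != local.updated_at:
        return incoming if incoming.updated_at > local.updated_at else local
    # Deterministic tiebreaker so every site converges on the same winner
    return incoming if incoming.site_id > local.site_id else local


ny = RowVersion(7, {"balance": 120}, updated_at=1000.0, site_id="ny")
ldn = RowVersion(7, {"balance": 95}, updated_at=1002.5, site_id="ldn")
print(resolve(ny, ldn).value)   # the NY site receives London's change
print(resolve(ldn, ny).value)   # the London site receives NY's change
```

Both calls pick the same winner, which is the key property: each site resolves the conflict independently yet converges on one value. The trade-offs are that the rule relies on reasonably synchronized clocks and silently discards the losing write.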

Pros

- High-performance data replication and real-time data integration across heterogeneous databases.

- The solution supports various platforms beyond Oracle, including cloud services.

- It supports a broad array of databases.

Cons

- High cost.

- Complexity in setup and maintenance.

Pricing

Licensing is aimed at enterprise use and starts high; specific pricing requires a quote from Oracle.

Apache NiFi

Apache NiFi is an open-source dataflow automation tool with a visual, web-based UI that helps data engineers route, transform, and manage data movement between systems without wiring everything together by hand.

Best For

- Businesses that must ingest and process high-velocity data in real time, such as IoT devices, logs, and APIs.

- Centralizing logs from various sources, making it easier to monitor and troubleshoot systems in real time.

- Scenarios that need on-the-fly filtering, merging, and enrichment of data before it reaches its final destination.

Reviews

G2 Crowd – 4.2/5 (based on 25 reviews)

Users love this tool because it allows them to design dynamic data flows with ease. The drag-and-drop interface helps those without extensive coding skills manage complicated data pipelines.

However, some users report that there’s a steep learning curve to climb, and it can be pretty resource-intensive, particularly when you’re dealing with large-scale operations.

Key Features

- The tool supports a broad range of data sources and destinations through 300+ processors.

- It provides fine-grained data flow control through backpressure and prioritization mechanisms.
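In NiFi, backpressure is configured per connection through thresholds in the UI rather than written as code. The Python sketch below only illustrates the idea: a hypothetical bounded connection stops the upstream step from being scheduled once its queue reaches an object-count threshold, and lets it resume as the slower downstream step drains the queue.

```python
from collections import deque


class Connection:
    """Queue between two steps with an object-count backpressure threshold (sketch)."""

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.queue = deque()

    def backpressure(self) -> bool:
        return len(self.queue) >= self.threshold


def simulate(ticks: int, threshold: int, consume_every: int) -> list[int]:
    """Return the queue depth after each tick."""
    conn = Connection(threshold)
    produced = 0
    depths = []
    for tick in range(ticks):
        # Upstream is only scheduled while the connection is below its threshold
        if not conn.backpressure():
            conn.queue.append(f"flowfile-{produced}")
            produced += 1
        # Downstream is slower: it takes one item every `consume_every` ticks
        if tick % consume_every == consume_every - 1 and conn.queue:
            conn.queue.popleft()
        depths.append(len(conn.queue))
    return depths


depths = simulate(ticks=10, threshold=3, consume_every=3)
print(depths)
```

Without the threshold check, the queue would grow by roughly two items every three ticks; with it, the queue depth stays capped and the fast producer simply waits.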

Pros

- Flexible and scalable graphical interface for designing, running, and monitoring data flows.

- The solution facilitates secure data routing, transformation, and system mediation.

Cons

- Small projects might find the learning curve for the data flow approach not worth the trouble.

Pricing

The platform is open source, so you’re free to experiment without investment.

Qlik Replicate

Qlik Replicate is a data integration solution that helps teams move and keep data in sync across databases, data warehouses, and big data platforms using CDC, all through a straightforward, visual interface that doesn’t get in the way.

Best For

- Companies that need fast, dependable data streaming from systems like Oracle, SQL Server, or SAP to power their big data and ML initiatives.

- When teams need to move data between legacy systems and modern cloud platforms with minimal disruption.

- Keeping hybrid or multi-cloud systems synchronized.

- Automating metadata and schema management, especially during large-scale deployments.

Reviews

G2 Crowd – 4.3/5 (based on 109 reviews)

People genuinely appreciate the ease of use and Qlik Replicate’s performance, especially in moving large data volumes with minimal latency.

However, the pricing can make smaller businesses wince, and though the interface keeps things simple, complex workflows may still need a technical expert.

Key Features

- Automated schema conversion and validation.

- Broad support for data sources and targets, including major databases and data warehouses.

- Minimal performance impact on source systems.

Pros

- User-friendly graphical interface.

- Efficient data replication.

Cons

- The solution can be expensive for small to medium-sized businesses: take into consideration a $350 per-user license fee and an additional $77 for software updates and support.

- It might require additional training.

Pricing

Qlik Replicate’s pricing is typically based on an annual subscription license that varies with data volume, number of sources/targets, and deployment scale, often positioning it toward larger enterprise budgets rather than small, usage-based models.

IBM InfoSphere Data Replication

IBM InfoSphere Data Replication is an enterprise CDC and replication solution that moves data in real time across a wide range of sources and targets and fits best for teams already running on IBM tech who want tighter analytics and governance without reinventing their stack.

Best For

- Ongoing data synchronization across operational and analytical systems.

- Transferring data between different platforms or from on-premises to the cloud while everything keeps running smoothly.

- Feeding real-time data into DWHs or Master Data Management (MDM) systems to support analytics and decision-making.

Reviews

G2 Crowd – 4.8/5 (based on six reviews)

IBM InfoSphere Data Replication receives many thumbs up for stability and speed, especially when dealing with sophisticated, high-throughput scenarios.

However, many users find the price too high, and there’s a pretty intimidating learning curve that requires skilled professionals to tame.

Key Features

- Data transformations and enrichments during replication.

- Conflict detection and resolution for bidirectional replication.

Pros

- Reliable performance.

- Extensive data source support.

Cons

- It can be complex to manage.

- The total cost of ownership is high.

Pricing

There are no strict pricing plans, so you will need to contact IBM for details.

Striim

Striim is a real-time data streaming and CDC platform built for data in motion, making it a strong fit for use cases like IoT, e-commerce, and online services where insights need to happen as events are flowing, not after the fact.

Best For

- Smoothly migrating on-premises databases to the cloud with zero downtime.

- Building streaming data pipelines and performing SQL analytics in motion to provide operational intelligence and make timely decisions before the data even hits your target system.

- Connecting diverse data sources to form a real-time, unified data ecosystem.

Reviews

G2 Crowd – 5/5 (based on one review)

Striim users can’t get enough of its real-time and uninterrupted data access without burdening the source system. The wizard-based UI and SQL streaming capabilities stand out as time-savers, especially when compared to traditional ETL tools.

However, users note that streaming data isn’t exactly intuitive, and if you’re dealing with complex legacy infrastructure, you’ll want some serious technical muscle.

Key Features

- In-memory stream processing for fast data analysis and transformation.

- A library of 150+ pre-built connectors for cloud services, DBs, and DWHs.

- Data flow, analytics dashboards, and visualizations.

Pros

- Real-time performance.

- Comprehensive integration features.

Cons

- Limited pricing transparency.

- The learning curve for streaming concepts.

Pricing

Pricing is not publicly listed and requires engagement with Striim sales for a quote.

StreamSets

StreamSets makes it easier to build and keep an eye on batch and real-time data pipelines with minimal coding, and since joining IBM’s portfolio, it’s often used for analytics, data science, and operational workloads where reliability matters.

Best For

- Data movement between on-premises, cloud, and hybrid platforms.

- Processing data as it arrives to keep operations nimble and responsive.

- Managing diverse and complex data flows.

Reviews

G2 Crowd – 4/5 (based on 105 reviews)

The user-friendly visual interface is the first thing users acknowledge about StreamSets. Another highlight is its ability to handle both batch and streaming pipelines within a single platform.

However, many have pointed out that the monitoring and visualization tools could be more intuitive, at least when it comes to tracking previous jobs and performance indicators.

Other users mentioned problems with scalability when dealing with large datasets (e.g., more than 5 million records), which leads to performance issues.

Key Features

- Supports real-time data integration and CDC, ensuring your pipelines stay updated with minimal delay.

- Drag-and-drop interface.

- Centralized Control Hub for real-time monitoring, alerting, lineage tracking, and full operational visibility across all pipelines.

- Role-based access control, audit logging, and integration with enterprise security frameworks.

Pros

- Detects and adapts to schema changes.

- Works across on-premises, cloud, and hybrid environments, supporting platforms like AWS, Azure, Google Cloud, and private clouds.

- In-flight data transformations, cleansing, enrichment, and filtering.
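Detecting and adapting to schema changes is easiest to picture with a small sketch. Assuming hypothetical records that arrive as dictionaries, the function below compares each record’s fields with the known target schema, registers any new columns, and fills missing fields with `None` instead of failing. This is a generic drift-handling pattern, not StreamSets’ implementation; in a real pipeline, the "new column" step would translate into an `ALTER TABLE ... ADD COLUMN` on the target.

```python
def reconcile(record: dict, schema: dict[str, str]) -> tuple[dict, list[str]]:
    """Align a record with the target schema; return the aligned row and added columns."""
    added = []
    for column, value in record.items():
        if column not in schema:
            # New column in the source: record a simple type name and widen the schema
            schema[column] = type(value).__name__ if value is not None else "str"
            added.append(column)
    # Columns the record lacks (e.g. dropped upstream) become None instead of failing
    aligned = {column: record.get(column) for column in schema}
    return aligned, added


schema = {"id": "int", "email": "str"}

# The source starts sending a new field
row, added = reconcile({"id": 1, "email": "a@example.com", "loyalty_tier": "gold"}, schema)
print(added)

# A later record omits fields the target already knows about
row, added = reconcile({"id": 2}, schema)
print(row)
```

Widening the schema and tolerating missing fields keeps the pipeline running through additive changes; renames and type changes usually still need a human decision.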

Cons

- Overkill for simple needs.

- The tool requires time to learn.

Pricing

IBM StreamSets pricing plans start at $4,200 per month for departmental projects, scaling up to $105,000 for organization-wide deployments.

Pricing is based on the number of Virtual Processor Cores (VPCs) used, making it scalable as your data integration needs grow. A 30-day free trial is available for those looking to test before committing.

Talend

Talend is a broad data integration and data management platform with built-in CDC for major databases like Oracle, SQL Server, and MySQL, making it a solid fit for organizations juggling complex data landscapes and looking to centralize integration under one roof.

Best For

- Migrating to the cloud or modernizing DWHs.

- Companies looking for a platform that supports integration with a vast range of data sources, from traditional RDBMS and flat files to big data and cloud platforms.

- Businesses that require master data management.

Reviews

G2 Crowd – 4.4/5 (based on 68 reviews)

Talend stands out for its wide connectivity and robust data transformation capabilities. Users appreciate its user-friendly interface, allowing teams to manage complex data workflows with ease.

However, some mention a steep learning curve and pricing concerns, especially for larger enterprises.

Key Features

- 1000+ connectors for various sources and applications.

- It supports batch and real-time data processing.

- Open-source foundation with a strong community and enterprise support.

Pros

- Powerful ETL and data management capabilities.

- Open-source version available.

Cons

- The enterprise version can be costly.

- Steep learning curve.

Pricing

Pricing plans vary depending on a business’s needs, yet even the basic one is available only upon request.

Hevo Data

Hevo Data is a no-code CDC and data pipeline platform built for real-time integration, making it a good fit for teams that want to connect sources quickly, keep data fresh, and avoid heavy engineering work.

Best For

- Businesses that need to keep their records continuously synced across systems with near real-time updates.

- Automating data flows without the technical burden of complex coding.

- Automating the entire data lifecycle from collection to transformation to loading, speeding up the availability of quality data for analysis.

Reviews

G2 Crowd – 4.4/5 (based on 263 reviews)

Users love Hevo’s versatility in handling both simple and complex transformations. The platform’s codeless interface is a big win for teams without technical expertise.

However, some have pointed out onboarding challenges, which can leave new users feeling a bit lost at first, and there are also pricing concerns, with some calling for more transparent and competitive models.

Key Features

- Hevo supports 150+ sources, including databases, SaaS applications, and cloud storage.

- It offers transformations with Python code for custom processing needs.

- Real-time load with schema detection and automatic mapping.

Pros

- User-friendly, no-code interface.

- Automatically detects incoming data and its schema.

Cons

- Pricing can be steep for high-volume data.

- Limited custom transformation control.

Pricing

Hevo offers a 14-day free trial; paid plans start at $239 per month, based on event volume and features.

Fivetran

Fivetran keeps data flowing into cloud warehouses by continuously capturing changes at the source, quietly handling incremental updates and schema shifts so teams don’t have to think about pipeline maintenance day to day.

Best For

- Automating the process of extracting, loading, and transforming data.

- Bringing together records from various DBs, SaaS apps, and hybrid systems for analytics and reporting.

- Teams in need of marketing analytics, customer 360 views, or syncing financial records across multiple platforms for accurate decision-making.

Reviews

G2 Crowd – 4.2/5 (based on 430 reviews)

Fivetran earns praise for its ability to cut through the time-consuming pipeline work. Users value its extensive connector options and rock-solid, hands-off pipelines.

However, volume-based pricing can throw you some curveballs, and if you need to do fancy data transformations, you’ll have to bring in extra tools like dbt to pick up the slack.

Key Features

- Quick setup with minimal ongoing maintenance.

- Incremental data sync – only changed records (inserts, updates, deletes) are transferred.

- Tracks pipeline health and failures.

- You can build custom connectors for unsupported sources.

Pros

- More than 700 sources for various integration needs.

- Meets enterprise-level security and compliance standards.

Cons

- Lacks native data visualization or reporting capabilities.

- Some connectors are still in beta or have limited features.

Pricing

Pricing can fluctuate due to seasonal data spikes or business growth, and Fivetran offers an online pricing estimator to help businesses model expected costs. Annual contracts and volume discounts are also available.

Airbyte

Airbyte is an open-source ELT platform with CDC support that lets teams build and control their own data pipelines, with the flexibility to adapt and scale as data needs evolve.

Best For

- Pulling together data of any size from everywhere – databases, APIs, SaaS, files.

- Both live streaming and batch processing to keep reports and insights current.

- Data engineers and analysts who prefer drag-and-drop over coding.

- Enterprises looking to integrate AI/ML workflows and unstructured records into vector DBs for swifter analytics and machine learning.

Reviews

G2 Crowd – 4.4/5 (based on 62 reviews)

Users appreciate Airbyte’s open-source flexibility, extensive connector selection, and intuitive interface. Pipeline setup and the custom connector toolkit are often highlighted as key benefits.

However, users also point to a learning curve, costly enterprise tiers, and transformation capabilities that fall short.

Key Features

- 600+ pre-built connectors.

- The Connector Development Kit to quickly build custom connectors for unsupported sources.

- Real-time and scheduled sync.

- Normalization and transformation.

Pros

- Open-source and free to start, encouraging adoption and customization.

- An extensive number of connectors covering the most common and niche sources.

- User-friendly interface, suitable for both technical and non-technical users.

- Efficient incremental sync, which reduces data transfer costs and improves performance.

- Strong community support and continuous updates from contributors.

Cons

- Enterprise features require paid licenses, which may be costly for smaller organizations.

- A learning curve exists when managing complex pipelines or building custom connectors.

- Monitoring and alerting features are basic compared to some commercial tools.

- Custom connector development can be challenging for highly specialized sources.

- Limited advanced transformation capabilities natively within Airbyte.

Pricing

Airbyte offers a free, open-source Self-Managed Community Edition, a good fit for smaller teams or those that want to host the platform themselves. For enterprise-grade features, the Self-Managed Enterprise and Airbyte Cloud plans are available with tailored, usage-based pricing.

The Cloud Teams edition adds role-based access control and team management features for growing organizations. Because pricing for these plans is usage-based, you will need to contact Airbyte for a quote.

Estuary Flow

Estuary Flow is a real-time data integration and CDC platform built for teams that need fast, scalable pipelines and want to keep data moving continuously as systems and workloads grow.

Best For

- Use cases requiring sub-100ms latency for fast operational insights, such as AI applications and event-driven architectures.

- Teams that want no-code connectors and reusable data collections for flexible, many-to-many data distribution and transformations.

Reviews

G2 Crowd – 4.8/5 (based on 14 reviews)

Users across finance, retail, and healthcare especially appreciate Estuary Flow for its real-time data processing and low-latency performance.

However, some users note a learning curve due to its unique architecture and streaming concepts, and some would like more community resources.

Key Features

- No-code connectors for easy integration across various sources.

- Private storage with secure, append-only transaction logs and encryption.

- ELT and ETL support.

- Flexible data movement using reusable collections across projects.

- Schema evolution and automated updates to maintain consistency.

Pros

- Low latency for real-time analytics and operational pipelines.

- Reported cost savings and productivity gains for large enterprises.

- Strong support for AI/ML use cases and operational analytics.

Cons

- Smaller ecosystem compared to legacy tools, limiting community-driven support.

- Some users face a learning curve adapting to its unique collection-based architecture and streaming concepts.

- Custom connector development can be complex for highly specialized sources.

Pricing

Pricing is usage-based, and Estuary offers a 30-day free trial with no credit card required, so you can explore the platform before committing.

Choosing the Best Change Data Capture Tool

Hunting down the right tool is like teaming up with a travel companion. You map the route, but your companion has to be able to handle it.

Here’s a concise guide to scouting for a partner who can go the distance, keep pace, and won’t give up at the foot of a hill.

1. Start by defining your business and technical requirements. This quick questionnaire helps clarify what the CDC tool needs to accomplish:

- Which databases, applications, and platforms do you need to integrate?

- How much data is involved?

- Are there frequent schema changes?

- Can your team manage the tool, or is a low-maintenance solution needed?

2. Next, compare the candidate tools' key features. Differences may look slight but can have a large effect later. At a minimum, make sure the tool supports:

- Log-based CDC for low-latency, minimal-impact replication.

- A broad range of connectors for different sources and destinations.

- Transformation capabilities to handle any processing requirements (e.g., integration with tools like dbt).

- Data delivery guarantees, such as exactly-once delivery for reliable replication.
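The last two points, log-based capture and delivery guarantees, can be illustrated with a minimal Python sketch of how a destination might apply change events read from a source's transaction log so that a redelivered batch is not applied twice. This models one common approach (at-least-once delivery plus idempotent writes keyed on the log position), not how any specific tool implements exactly-once delivery. The `ChangeEvent` type, its `op`/`lsn`/`key`/`row` fields, and the `apply_events` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ChangeEvent:
    # Hypothetical event shape, not any particular tool's format.
    op: str                               # "insert", "update", or "delete"
    lsn: int                              # position in the source's transaction log
    key: int                              # primary key of the affected row
    row: Optional[dict[str, Any]] = None  # new row image; None for deletes


def apply_events(table: dict[int, dict[str, Any]],
                 events: list[ChangeEvent],
                 last_applied_lsn: int) -> int:
    """Apply events in log order, skipping any at or below the watermark.

    Returns the new watermark. Replaying the same batch leaves the
    table unchanged, so duplicate deliveries have no effect.
    """
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_applied_lsn:
            continue  # already applied: a duplicate delivery
        if event.op in ("insert", "update"):
            table[event.key] = dict(event.row or {})
        elif event.op == "delete":
            table.pop(event.key, None)
        else:
            raise ValueError(f"unknown op: {event.op}")
        last_applied_lsn = event.lsn
    return last_applied_lsn


# Usage: an order is created and shipped; a second order is created and deleted.
events = [
    ChangeEvent("insert", 1, 101, {"status": "new"}),
    ChangeEvent("update", 2, 101, {"status": "shipped"}),
    ChangeEvent("insert", 3, 102, {"status": "new"}),
    ChangeEvent("delete", 4, 102),
]
orders: dict[int, dict[str, Any]] = {}
wm = apply_events(orders, events, 0)
wm = apply_events(orders, events, wm)  # redelivery of the same batch is a no-op
print(orders, wm)  # {101: {'status': 'shipped'}} 4
```

Persisting the watermark together with the applied rows (ideally in the same transaction) is what keeps the result correct across restarts; in this sketch the caller simply holds it in a variable.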

3. Pricing is another crucial factor. Many CDC tools use usage-based pricing, so make sure you understand how costs will scale with your data volume. Also, never underestimate the value of a free trial: test before you invest.

4. Consider user feedback. Look for tools with positive reviews for reliability, scalability, and customer support. Learn from the successes and mistakes of other teams.

Conclusion

Maintaining CDC infrastructure through hand-written code is labor-intensive, and with so many tools on the market, it is rarely necessary. A ready-made tool frees up a team's most valuable resource, its time, for other tasks and broader business improvements. That makes investing in a third-party CDC tool a win-win.

Of course, the right choice depends on your company's business scenarios. However, always weigh the balance between:

- Functionality.

- Usability.

- Cost savings.

Make full use of free trials, where available, to test how well each candidate meets your needs. You can start with Skyvia and see what it can do; at worst, you'll get a clearer sense of which features matter most to your team.

F.A.Q. for CDC Tools

How is CDC different from ETL batch processing?

CDC captures changes as they occur (in real time or near real time), while batch ETL extracts and loads data at set intervals, so the destination lags behind the source between runs.
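Latency is not the only difference. A small Python sketch shows a blind spot of one common incremental batch pattern: copying only rows whose `updated_at` is newer than the last run. Such a query sees only rows that still exist at run time, so a hard delete between runs never reaches the destination, while a log-based CDC feed records the delete as an event. The data, field names, and the `batch_sync`/`cdc_sync` functions are hypothetical; a full table reload would catch the delete too, at a higher cost.

```python
from typing import Any

Table = dict[int, dict[str, Any]]


def batch_sync(target: Table, rows: list[dict[str, Any]], last_run: int) -> None:
    """Timestamp-cursor batch sync: copy rows changed since the last run."""
    for row in rows:
        if row["updated_at"] > last_run:
            target[row["id"]] = {"status": row["status"]}


def cdc_sync(target: Table, events: list[dict[str, Any]]) -> None:
    """Replay captured change events in commit order, including deletes."""
    for event in sorted(events, key=lambda e: e["ts"]):
        if event["op"] == "delete":
            target.pop(event["id"], None)
        else:
            target[event["id"]] = dict(event["row"])


def initial_target() -> Table:
    # Destination state after the previous run at t=6: both rows were copied.
    return {1: {"status": "new"}, 2: {"status": "new"}}


# Source table when the next batch job runs: row 1 was updated at t=7,
# and row 2 was hard-deleted at t=8, so it no longer exists in the source.
source_rows = [{"id": 1, "status": "shipped", "updated_at": 7}]

# Events a log-based CDC tool would have captured since t=6.
change_log = [
    {"op": "update", "id": 1, "row": {"status": "shipped"}, "ts": 7},
    {"op": "delete", "id": 2, "row": None, "ts": 8},
]

batch_target = initial_target()
batch_sync(batch_target, source_rows, last_run=6)

cdc_target = initial_target()
cdc_sync(cdc_target, change_log)

print(batch_target)  # {1: {'status': 'shipped'}, 2: {'status': 'new'}}  (deleted row lingers)
print(cdc_target)    # {1: {'status': 'shipped'}}
```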

Do I need to be a developer to use a CDC tool?

Not necessarily. Many CDC tools offer no-code or low-code options that are accessible to business users, while technical users can take advantage of more advanced features.

How do I choose the right CDC tool for my needs?

Choose a CDC tool based on your data sources, latency needs, scalability, and ease of integration. Also, consider cost, maintenance, and whether it supports real-time or batch processing.

Can CDC tools work with cloud applications like Salesforce or HubSpot?

Yes. Many CDC tools, Skyvia among them, integrate with cloud applications like Salesforce and HubSpot, keeping CRM and other cloud platform data in sync in real time or near real time.