The rule in data mining is simple: the more, the better. The more material there is to analyze, the richer is the context for predictions. Modern mining tools, built to handle big data, can easily process terabytes – provided that this data is gathered and prepared.

Data integration – collecting information from different sources into a single dataset – is a foundational stage of the data mining pipeline. It creates the raw material for mining: the piles of ore that have to be sifted through to get the grains of insight – the true gold of today.

In this article, we’ll focus on key integration techniques for data mining, highlighting best practices and common pitfalls every miner should be aware of.

Table of Contents

- Why Data Integration is the Backbone of Successful Data Mining

- Key Data Integration Techniques for Data Mining

- Data Integration Approaches: Tight vs. Loose Coupling

- Common Challenges in Data Integration for Data Mining

- How Skyvia Streamlines Data Integration for Data Mining

- Best Practices for a Winning Data Integration Strategy

- The Future of Data Integration in Data Mining

- Conclusion

Why Data Integration is the Backbone of Successful Data Mining

The short answer is – because it enables the process itself by providing the material for mining. However smart your algorithms are, without integrated data, you’re just groping in the dark. Integration is what connects the dots, weaving them into a coherent canvas to picture your analytics. And the benefits it brings are enormous.

Unified View

As companies go for hybrid and multicloud deployment models, their data becomes increasingly scattered – CRM here, ERP there, cloud tools everywhere.

Integration solves this problem by combining everything into a single, unified view. It gives you the broader context needed to understand your data and lays a solid foundation for discovery.

Improved Data Quality and Accuracy

Good mining starts with good data. The accuracy of your predictions rises in sync with the quality of the input feeding your mining tools.

Integration processes like mapping, transformation, and validation help clean up inconsistencies, fix missing values, and align schemas – so you’re not mining noise.

Enhanced Analytics and Richer Insights

Integration allows you to ask deeper questions – and actually get answers. By integrating data from different sources, you’re quite literally putting together pieces of a puzzle – and patterns that were invisible in isolated datasets start to emerge.

Increased Efficiency

Human resources are precious – and limited. You can spend them on manual data wrangling, or on high-value tasks like interpreting insights.

Integration helps you automate all messy parts like extraction, cleaning, transformation, thus freeing your analysts for more creative tasks.

Key Data Integration Techniques for Data Mining

The five techniques outlined below all serve the same purpose – integrating data from various sources – but they take different paths to get there. Eventually, the one you choose will depend on:

- Your end goal;

- How quickly you need results;

- How precise those results must be.

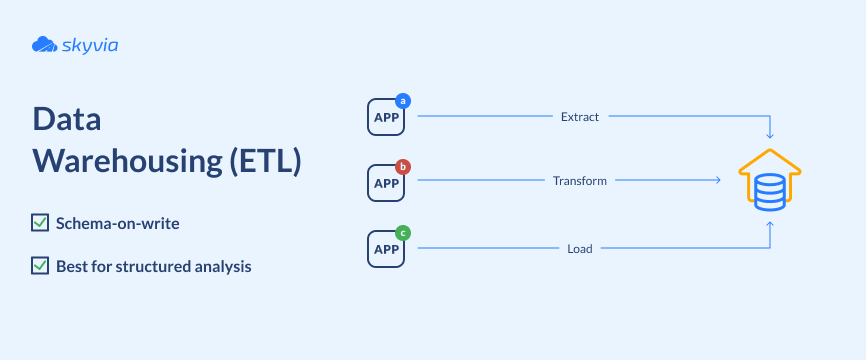

1. Data Warehousing

Data warehouse (DWH) integration is the process of collecting data from multiple sources into a centralized repository, where it gets cleaned and stored in a consistent format. The purpose of DWH is simple but powerful: to serve as a single, reliable “source of truth” for analytical tools.

It is worth noting that you can also use a data lake for this purpose. However, mining algorithms and predictive models require structured features to deliver accurate results. The cleaner and more organized the data is, the closer your predictions will be to reality.

DWH enforces this by applying a schema-on-write approach: data must be structured and validated before it is loaded – otherwise, it simply cannot persist in the warehouse.

The process that feeds the warehouse is known as ETL:

- Extract: pulling relevant information from various operational systems, databases, or external sources.

- Transform: cleansing, standardizing, and reformatting the extracted data for compatibility and quality.

- Load: loading the transformed data into the warehouse.

Because warehousing enforces a strict structure on incoming data, it is particularly suited for in-depth mining tasks such as trend detection, predictive modeling, and historical reporting.

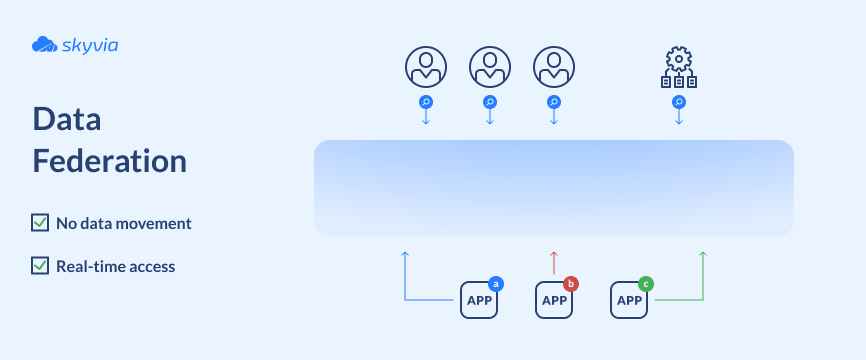

2. Data Federation (Virtualization)

Unlike DWH, this method is about virtual, not physical, consolidation. Instead of physically moving data into a central repository, powerful virtualization platforms visualize it as a unified view across multiple sources.

Through a common interface, users or mining tools can query and analyze data as if it were all stored in one place.

The key advantage of this approach is incredibly fast access and the ability to query in real time. This is of enormous importance in cases when information changes on the fly – such as stock trading platforms or IoT sensor streams. Or, when duplicating massive volumes of data in storage is cost-inefficient, like in cases with log and event records.

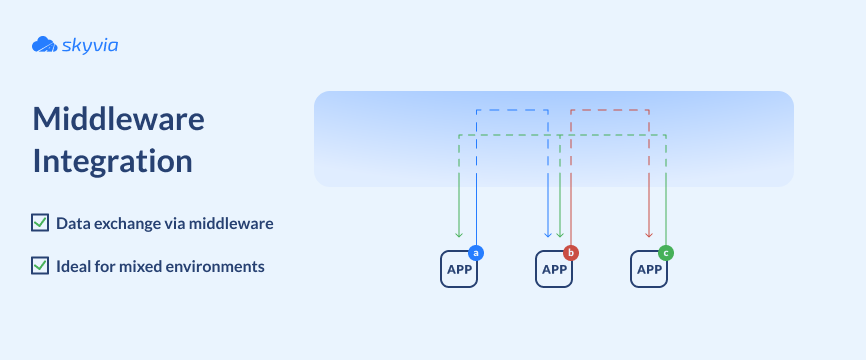

3. Middleware Integration

If the data abstraction layer is like a “window” to all your sources, middleware integration is more like a distribution network.

Middleware software creates a programmatic layer that allows different systems to “talk” to each other and exchange information.

How this layer is implemented depends on the type of middleware used:

- Enterprise service bus (ESB) middleware – acts like a central hub for routing data between multiple systems.

- Message-oriented middleware (MOM) – enables asynchronous communication between systems, with applications sending data as messages to a broker, which queues and delivers them.

- Database middleware – enables communication between heterogeneous DB systems.

- Integration Platforms as a Service (iPaaS) – cloud-based middleware that simplifies integration between SaaS applications, databases, and APIs.

Thanks to this flexibility, middleware integration is an excellent choice for heterogeneous environments that contain both legacy and modern systems.

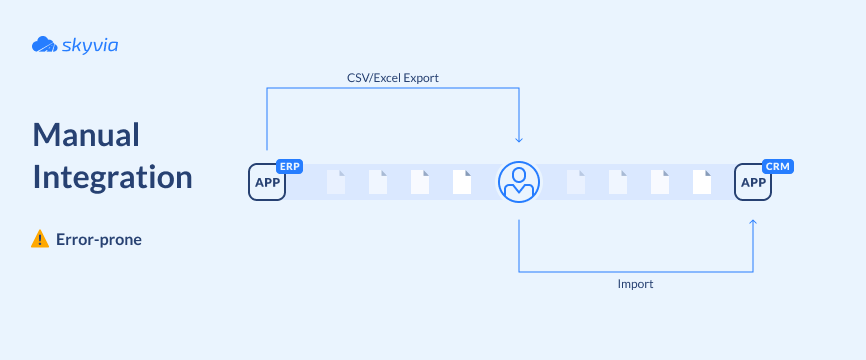

4. Manual Integration

This is a traditional and time-tested approach that comes down to simply exporting data from one source and importing it into another, often via CSV or Excel files.

Despite its outdated nature and many drawbacks, it is still used today – especially for small datasets and one-off tasks. However, in the context of mining, this method is the least suitable because:

- Mining tools expect terabytes: human operators simply cannot process that much data efficiently.

- Manual operations are highly error-prone, which can undermine the accuracy of predictive models and analytics.

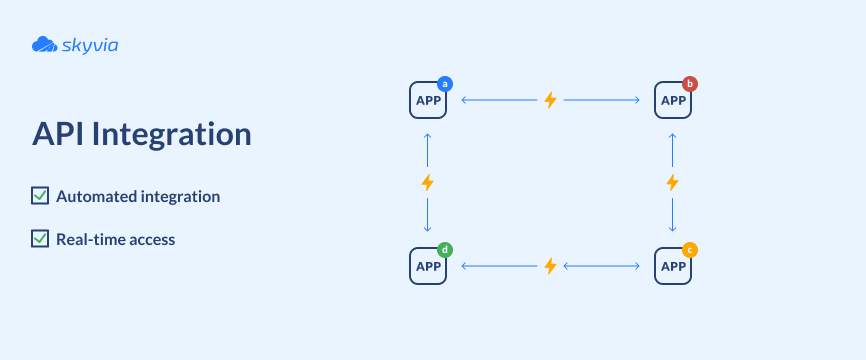

5. Application-Based Integration

Same as middleware integration, this method also enables system-to-system communication but does it in a different way: it creates direct point-to-point connections between systems through their Application Programming Interfaces (APIs).

API integration is one of the most efficient ways to transfer data between applications: it is fast, reliable, supports real-time mode, and requires minimal human intervention. The flexibility and scalability of this method make it the backbone of many data-centered workflows, including mining.

Data Integration Approaches: Tight vs. Loose Coupling

If tight coupling is about control, loose coupling is about agility – and in today’s data-driven world, you often need both. The table below will help you nail their key differences by outlining their pros, cons and best-for use cases.

| Method | Tight Coupling | Loose Coupling |

|---|---|---|

| Explanation | Involves physically consolidating data into a single repository. | Virtual, on-demand access to data without moving it. |

| Integration technique | Data warehousing | Data federation |

| Pros | Data consistency; faster queries. | High flexibility; real-time data access; lower storage costs. |

| Cons | Initial setup can be costly and time-consuming. | Slower query performance; data consistency issues. |

| Best for | Enterprise-level BI, predictive modeling, and large-scale reporting. | Real-time analytics and cost-efficient integration at scale. |

Common Challenges in Data Integration for Data Mining

Heterogeneity

Having multiple information sources is great. It means more material to analyze and a broader external context to correlate your data with. But with variety comes heterogeneity as each system tends to store data in a different format.

One source gives you neat, tabular records with rows and columns. Another delivers a tangled JSON blob through an API. Somewhere else, a legacy system spits out XML files that feel prehistoric. Reconciling them can be like trying to merge completely different languages into a single conversation.

Data mining thrives on consistency. If formats, schemas, and types don’t align, you risk wasting hours just cleaning and reshaping data before you can even start analyzing it.

Quality Issues

As the number of sources grows, so do the potential risks of data quality issues: inconsistent naming, missing values, and mismatched fields. To make things worse, data from different systems can duplicate and contradict itself – like two customer records with the same contact details but different birthdays.

Mining models rely on patterns. If the underlying data is inconsistent, incomplete, or filled with noise, those patterns become unreliable – and so do your predictions.

Schema Integration and Mapping

Even if your data comes in the same format, it doesn’t mean it speaks the same language. One system calls it “CustomerID,” another just “ID”, and a third splits it into two separate fields. A human can see instantly that they all refer to the same thing, but mining tools can’t – they either misinterpret the data or fail outright.

If you’re correlating sales data from two systems and their “Product ID” fields don’t match, you’ll end up mining thin air.

Scalability

Data mining is only as good as the pipeline that feeds it. How many sources can your integration layer handle? A few of them would be easy.

Ten or a dozen might still be fine, but what if you’re dealing with hundreds of sources? The real-world load is merciless: it can easily break ideal workflows that run like clockwork in a proof-of-concept environment.

Design for scale from the start. If your integration layer can’t keep up with growing data volumes, you may as well say good-bye to real-time insights. And yes, to good relationships with your analysts, too.

Privacy and Security

Sensitive information runs through most data like veins in marble. In fact, it’s rare to find an integration pipeline that doesn’t touch it in one way or another. Think customer records, financial details, or confidential logs – integrating these kinds of data is more about handling trust than just moving bytes.

Leaks or unauthorized access can breach privacy laws, and then things can turn really bad for your company. Never underestimate these risks – make security and compliance a non-negotiable part of the process.

How Skyvia Streamlines Data Integration for Data Mining

Skyvia, a powerful cloud data integration platform, is designed to effectively meet all the challenges that make data integration for mining complex.

It has over 200+ prebuilt connectors to integrate with cloud applications, databases and data warehouses. And if the system you need isn’t on the list, you’re not stuck – Skyvia Connect lets you create API endpoints to establish a custom connection with almost any source.

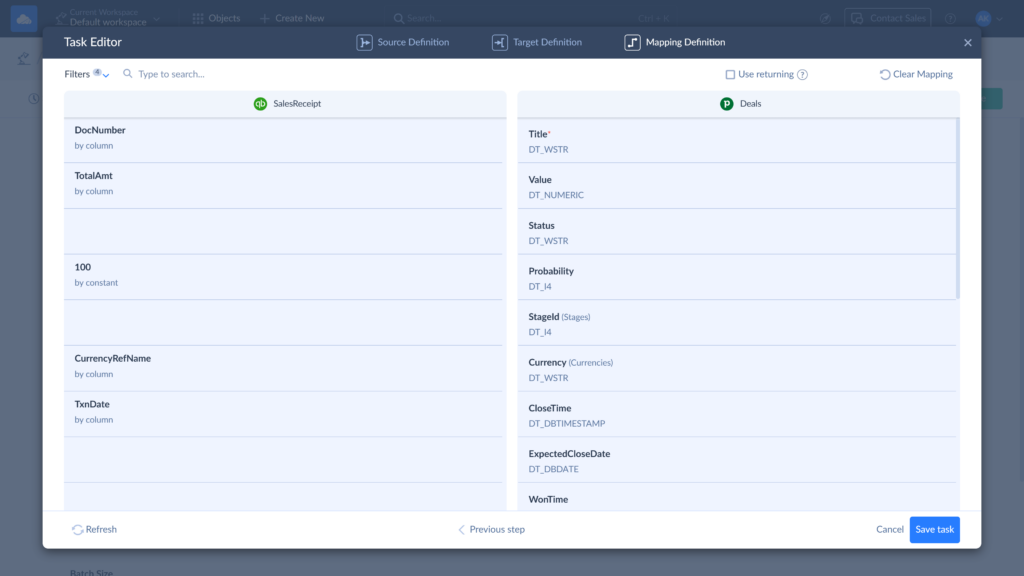

Skyvia’s powerful mapping and transformation tools make data heterogeneity and schema mismatch far less of a headache. You can match columns, run lookups, or even write expressions to convert data types and merge fields.

The platform also helps improve data quality during integration. Inconsistent field names between systems? No worries – map Title in Pipedrive Deals to DocNumber in QuickBooks SalesReceipts and move on. Missing values? You can set default values for target fields so that integrations don’t fail mid-run. Also, in the mapping editor, you can preview data before loading and catch potential problems early.

Scalability is not a problem with Skyvia’s flexible subscription plans. From mid-sized businesses to enterprise-level workloads, it can process over 200 million records per month. The built-in notification system ensures that you remain within your plan limits.

Finally, Skyvia takes security seriously. Your data is protected with:

- Encryption in transit and at rest.

- Secure hosting in Microsoft Azure, compliant with SOC 2, ISO 27001, GDPR, HIPAA, and PCI DSS.

- OAuth authentication with encrypted tokens.

- Granular access control with options to restrict API endpoints, limit IP ranges, and manage user accounts individually.

Best Practices for a Winning Data Integration Strategy

Integration can be your greatest ally in data mining – or a silent saboteur, slipping a spanner into the works. The difference? Strategy. Here’s how to get it right from the start.

Define Clear Objectives: Keep the End Goal in Mind

At first glance, one might think, “How could integration change just because the goal is different? Isn’t it always about pulling data from different places and consolidating it?” Not quite. The way you integrate depends heavily on what you’re trying to achieve.

A pipeline for syncing two systems in real time looks nothing like the one for building an ML training dataset. The table below shows how different end goals influence the process – beyond simply choosing different sources.

| End Goal | Priority | Integration Technique | Use Case |

|---|---|---|---|

| BI & reporting | Structured, historical data | Batch ETL into a centralized DWH | A retail company wants to analyze quarterly sales trends. |

| Real-time monitoring | Low latency | Data federation (loose coupling) or streaming pipelines | A logistics company needs to track shipments live. |

| ML or predictive modeling | Clean training datasets with labels and consistent formatting; feature engineering | Data warehousing (tight coupling) | An insurance provider wants to predict churn. |

| Data sync between systems | Data freshness and bidirectional sync | API-based or middleware integration | A company syncing data between a CRM and a marketing automation platform. |

Invest in Profiling: Get to Know Your Data

Before merging anything, understand exactly what you’re working with. Data profiling exposes potential trouble spots like invalid data types, missing values, and inconsistencies. Fixing these issues early is far easier than erasing them after your systems have been connected.

Prioritize Data Governance and Quality: Divide and Rule

Just as every parish has its priest, every dataset needs a clear owner. Clear understanding of who owns what allows setting unified standards for naming conventions, data types, and access across departments and units.

Choose the Right Tool: Drive Nails with a Hammer

There’s no universal integration tool that’s perfect for everyone. On one scale there are your requirements shaped by your data, your goals, and your infrastructure. On the other – the realities of available platforms, each with its own trade-offs.

The balance lies in between keeping costs in check, complexity manageable, and results on target. With every deviation, you risk ending up overspending, overcomplicating, or underdelivering.

Monitor and Optimize: No “Set It and Forget It”

Integration is a living system – and living systems change. Business needs evolve: maybe you start tracking new KPIs or adding new tools. Even if your goals stay steady, changes in connected systems can throw you off. APIs get updated, fields in SaaS apps get renamed or deprecated, and suddenly mappings break.

A good monitoring dashboard will flag issues early, so pipelines don’t collapse unnoticed. And regular reviews, especially after major system changes, will keep your integrations healthy and relevant.

The Future of Data Integration in Data Mining

AI and Machine Learning

AI is being increasingly used not only for the analysis itself but also during the integration stage: for automating schema mapping, detecting data quality issues, and suggesting transformations. And the payoff is real: faster deployment, fewer mistakes, and smarter pipelines.

Real-Time Integration

The demand for real-time data is only going to grow. This trend is largely driven by the following factors:

- The dynamics of modern businesses that require quick decisions on the spot.

- The rise of event-driven architectures: these systems trigger actions based on live events and thus rely on instant data flows to function correctly.

- The necessity of fresh input for automation tools and AI models, especially in areas like fraud detection, pricing optimization, or supply chain logistics.

So, batch processing is gradually giving way to real-time, streaming pipelines.

Cloud-Native Integration

As the shift to the cloud continues at full speed, providers catch up by offering cloud-native features like serverless ETL, managed data lakes, and auto-scaling pipelines.

The result? Modern integration tools that can handle hybrid environments, multi-cloud ecosystems, and massive data volumes without breaking a sweat.

Conclusion

Data integration is the foundation of data mining – the footing of a complex pyramid of processes, with those coveted insights at the very top. The more time and effort you invest here, the stronger your results will be.

Yes, integration comes with its share of pitfalls, but with the right tools and approach, you can navigate them successfully. When weighing your options, consider Skyvia – a platform that delivers an exceptional balance of productivity, efficiency, and cost.

F.A.Q. for Data Mining

What is the difference between data integration and ETL?

ETL is just one way to integrate data – by extracting, cleaning, and loading it into a system. Integration can also be done virtually or via APIs.

How does data integration improve data quality for mining?

It fixes messy bits – like duplicates, missing values, and mismatched formats – so mining tools work with reliable data.

Which data integration technique is best for real-time analysis?

Data federation or streaming pipelines – they let you tap into changing data instantly, without waiting for batches.

Why is schema integration a major challenge?

Because systems “speak” differently – one calls it CustomerID, another just ID. Lining these up takes smart mapping and sometimes a human eye.