Summary

- Zero ETL is a modern, pipeline-free approach to integration that enables instant data access and real-time analytics. This is made possible by a simplified architecture and cloud-native capabilities that support federated queries and built-in replication between services. Thanks to these features, Zero ETL is an ideal choice for scenarios where immediacy and low latency are critical.

Modern businesses oriented toward big data and real-time analytics expect their integration systems to keep pace. They need fast, low-latency solutions. Zero ETL, a new approach to data integration, rises to this challenge. Designed with the cloud in mind, it works best where traditional ETL stalls, removing complex pipelines and their maintenance from your task list.

Zero ETL has been getting a lot of attention recently, as major cloud providers adopt it and invest in it. What hides behind this buzzword? How does this approach to data integration work, and what benefits can it bring to your business? You’ll find the answers in this guide.

Table of Contents

- What is Zero ETL? A Deeper Dive

- How Does Zero ETL Work?

- The Business Impact: Key Benefits of Adopting Zero ETL

- Common Use Cases for Zero ETL

- The “Gotchas”: Limitations and Considerations of Zero ETL

- The Future of Data Integration: Is Zero ETL the New Standard?

- Conclusion

What is Zero ETL? A Deeper Dive

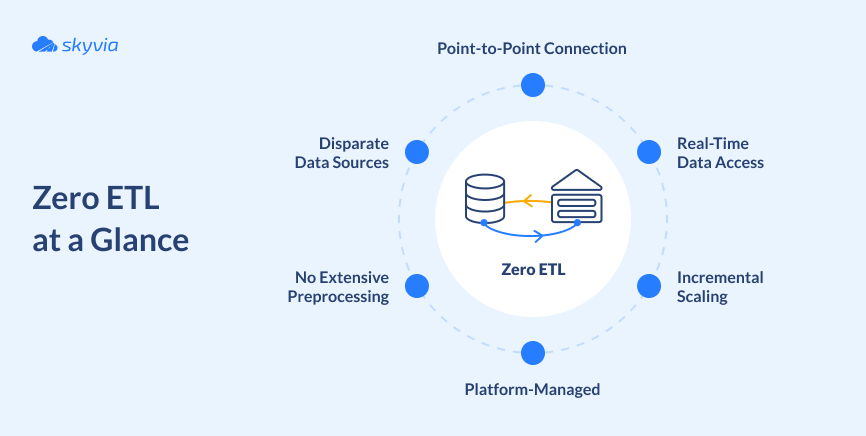

Zero ETL is an integration technique that enables direct, point-to-point data movement between a source and its target destination. There’s no need to configure external pipelines, write transformations, or stage data in intermediate storage such as Amazon S3: the flow is fully automated and managed by the cloud platform.

This is the key to understanding Zero ETL: it leverages native cloud capabilities to eliminate traditional ETL pipelines. All the required steps happen inside or between platforms automatically. Data can either be queried directly from its original location (federation) or replicated natively by the platform itself, without manual orchestration.

The diagram below displays core features that define a Zero ETL design.

Key Characteristics

Elimination of Intermediate Steps

Traditional ETL moves data through multiple stages: extraction, staging, transformation, and loading. Zero ETL, by contrast, flattens this pipeline and acts more like a native replication mechanism, automatically syncing data in its original format and structure. With fewer hops between source and target, data arrives faster, and there are no intermediate stages to build or maintain.

Real-Time or Near-Real-Time Data

Immediacy is a hallmark of Zero ETL. There’s no need to wait for scheduled ETL jobs to complete: your data is available for analysis almost as soon as it’s created or updated, hot off the skillet.

Cloud-Native and Scalable

In traditional ETL setups, growing data volumes often require re-engineering your pipelines. Not so with Zero ETL. Cloud-native by design, it thrives on elasticity, scaling automatically as workloads expand. So if your setup suddenly starts syncing millions of records instead of thousands, you probably won’t notice it at all: the platform adjusts seamlessly.

How Does Zero ETL Work?

Zero ETL workflows rely heavily on cloud capabilities to deliver the speed and simplicity they promise. Let’s take a closer look at the technologies and processes that make these integrations possible.

Core Technologies

Virtualization/Federation

Here, the system queries data in place. The data stays where it is – in multiple databases, buckets, or services. The query engine acts like a federated layer that understands how to reach each source and merge results at query time.

Tools like BigQuery Omni, Amazon Athena, or Denodo connect to data lakes, operational databases, or even other warehouses, without copying the data.
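Federation can be illustrated at a very small scale with SQLite’s `ATTACH DATABASE`, which lets one connection query tables that live in separate databases without first copying them into a single store. This is only a toy stand-in for engines like BigQuery Omni or Athena, not a description of how they work internally; the `orders` and `crm.customers` tables are hypothetical.

```python
import sqlite3

# One connection plays the role of the federated query engine.
engine = sqlite3.connect(":memory:")

# A second, separate database (a hypothetical CRM source) is attached, not copied.
# SQLite refuses ATTACH inside an open transaction, so it runs before any writes.
engine.execute("ATTACH DATABASE ':memory:' AS crm")

# Seed each "source" with sample rows; in practice the data would already live there.
engine.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
engine.executemany(
    "INSERT INTO main.orders VALUES (?, ?, ?)",
    [(1, 10, 250.0), (2, 11, 80.0), (3, 10, 40.0)],
)
engine.execute("CREATE TABLE crm.customers (customer_id INTEGER, name TEXT)")
engine.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(10, "Acme"), (11, "Globex")],
)

# The join across both databases is resolved at query time.
rows = engine.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)  # [('Acme', 290.0), ('Globex', 80.0)]
```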

Change Data Capture (CDC)

Change Data Capture (CDC) is a technique for identifying and capturing database changes (inserts, updates, and deletes) in a source system as they happen. These changes are then delivered to targets such as data warehouses (DWH) or data lakes through native replication.

Since CDC moves only what has changed, the process is faster and lighter than repeatedly copying entire tables, which makes it ideal for low-latency updates.
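To make the incremental idea concrete, here is a minimal sketch of the receiving side, assuming a hypothetical event format of `(operation, key, row)`; real CDC tools each define their own. Once the target holds an initial snapshot, only change events travel, and the replica applies them in order.

```python
from typing import Any, Optional

# Hypothetical change-event format: (operation, primary key, new row or None).
ChangeEvent = tuple[str, int, Optional[dict[str, Any]]]


def apply_changes(replica: dict[int, dict[str, Any]], events: list[ChangeEvent]) -> None:
    """Apply inserts, updates, and deletes to a keyed replica, strictly in order."""
    for op, key, row in events:
        if op in ("insert", "update"):
            replica[key] = row  # upsert: the latest version of the row wins
        elif op == "delete":
            replica.pop(key, None)  # tolerate deletes for keys the replica never saw
        else:
            raise ValueError(f"unknown operation: {op}")


# The target already holds a snapshot; afterwards only three changes are shipped.
replica = {1: {"status": "new"}, 2: {"status": "new"}}
apply_changes(replica, [
    ("update", 1, {"status": "shipped"}),
    ("insert", 3, {"status": "new"}),
    ("delete", 2, None),
])
print(replica)  # {1: {'status': 'shipped'}, 3: {'status': 'new'}}
```

Order matters here: replaying an update after a delete for the same key would resurrect a row that no longer exists at the source.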

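Implementations vary, but the most common methods are:

- Log-based CDC: reads changes from the database’s transaction log (for example, the MySQL binlog or the PostgreSQL write-ahead log).

- Timestamp-based CDC: periodically queries rows whose last-modified timestamp is newer than the previous sync.

- Trigger-based CDC: database triggers write every change to a separate change table.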
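Of these, trigger-based CDC is the easiest to demonstrate in a few lines, since it needs nothing beyond the database’s own trigger support. The sketch below uses SQLite triggers to record every change to a hypothetical `customers` table in a `change_log` table that a downstream consumer could read in sequence order.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

    -- Change table filled by triggers; consumers read it in seq order.
    CREATE TABLE change_log (
        seq   INTEGER PRIMARY KEY AUTOINCREMENT,
        op    TEXT NOT NULL,
        id    INTEGER NOT NULL,
        email TEXT
    );

    CREATE TRIGGER customers_ins AFTER INSERT ON customers
    BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('insert', NEW.id, NEW.email);
    END;

    CREATE TRIGGER customers_upd AFTER UPDATE ON customers
    BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('update', NEW.id, NEW.email);
    END;

    CREATE TRIGGER customers_del AFTER DELETE ON customers
    BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('delete', OLD.id, NULL);
    END;
    """
)

# Ordinary application writes; the triggers capture each change as a side effect.
db.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
db.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
db.execute("DELETE FROM customers WHERE id = 1")
db.commit()

changes = db.execute("SELECT op, id, email FROM change_log ORDER BY seq").fetchall()
print(changes)
# [('insert', 1, 'a@example.com'), ('update', 1, 'b@example.com'), ('delete', 1, None)]
```

The trade-off is that every write to the source now does extra work inside the same transaction, which is one reason log-based CDC is usually preferred on busy systems.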

CDC can be either native or configurable: if the source system supports it, you simply enable it; if not, you can set it up through connectors or middleware. In modern Zero ETL environments, CDC is often completely abstracted by the platform.

Data Streaming

Streaming platforms such as Apache Kafka, Amazon Kinesis, and Google Cloud Pub/Sub act as data highways, moving events from many sources to many destinations at once. Although they are not mandatory components of every Zero ETL setup, they are powerful enabling technologies.

In Zero ETL architectures, they’re used when:

- You have multiple data producers (apps, IoT, APIs) sending continuous events.

- You need scalable, asynchronous delivery across systems.

- You want near-real-time analytics, alerts, or ML triggers.
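The “many sources to many destinations” idea rests on a publish/subscribe model: producers append events to a topic, and each consumer reads the topic independently at its own pace. The in-memory `Topic` class below is a hypothetical toy that mimics this with an append-only log and per-consumer offsets; it is not the API of Kafka, Kinesis, or Pub/Sub, and it has none of their durability or partitioning.

```python
from collections import defaultdict


class Topic:
    """Toy append-only event log with an independent read offset per consumer."""

    def __init__(self) -> None:
        self._log: list[dict] = []
        self._offsets: dict[str, int] = defaultdict(int)  # consumer -> next position

    def publish(self, event: dict) -> None:
        """Any producer can append an event to the end of the log."""
        self._log.append(event)

    def poll(self, consumer: str) -> list[dict]:
        """Return events this consumer has not seen yet and advance its offset."""
        start = self._offsets[consumer]
        batch = self._log[start:]
        self._offsets[consumer] = len(self._log)
        return batch


orders = Topic()

# Several producers write to the same topic.
orders.publish({"source": "web", "order_id": 1})
orders.publish({"source": "pos", "order_id": 2})

# Each destination reads the full stream without affecting the others.
dashboard_batch = orders.poll("dashboard")
fraud_batch = orders.poll("fraud-check")

orders.publish({"source": "api", "order_id": 3})
print(len(dashboard_batch), len(fraud_batch))  # 2 2
print(orders.poll("dashboard"))  # [{'source': 'api', 'order_id': 3}]
```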

At the same time, provider-managed service-to-service syncs, such as the Amazon Aurora zero-ETL integration with Amazon Redshift, rely on built-in replication mechanisms; you never see Kafka involved at all.

Schema-on-Read

Unlike traditional ETL, which imposes a strict, predefined structure on data before loading, Zero ETL handles the transformation step through the platform’s native capabilities. It lets data land in its raw form first, and applies the schema later, when queried. The engine automatically interprets the data at query time, deciding what fields exist, what types they are, and how to read them.

This approach lets you analyze semi-structured data such as JSON, or raw files such as Parquet, without lengthy preparation: an ideal setup for data lakes and machine learning.
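A minimal schema-on-read sketch, assuming raw events are stored as JSON lines exactly as they arrived: nothing is validated or reshaped at write time, and field names and types are worked out only when a query runs. The `infer_schema` and `query` helpers are hypothetical; real query engines do this with far more sophistication.

```python
import json

# Raw events land as-is; the records do not share one structure.
raw_lines = [
    '{"user": "ana", "amount": 12.5}',
    '{"user": "ben", "amount": 7, "coupon": "SPRING"}',
    '{"user": "ana", "device": "mobile"}',
]


def infer_schema(records: list[dict]) -> dict[str, set[str]]:
    """Collect every field seen and the Python type names observed for it."""
    schema: dict[str, set[str]] = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema


def query(lines: list[str], field: str) -> list:
    """Parse at read time and project one field, using None where it is missing."""
    records = [json.loads(line) for line in lines]
    return [record.get(field) for record in records]


records = [json.loads(line) for line in raw_lines]
print(infer_schema(records))  # "amount" is seen as both int and float
print(query(raw_lines, "amount"))  # [12.5, 7, None]
```

Note that the mixed `int`/`float` types and the missing `amount` in the third record surface only at query time; this is the flexibility schema-on-read buys, and also the cost.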

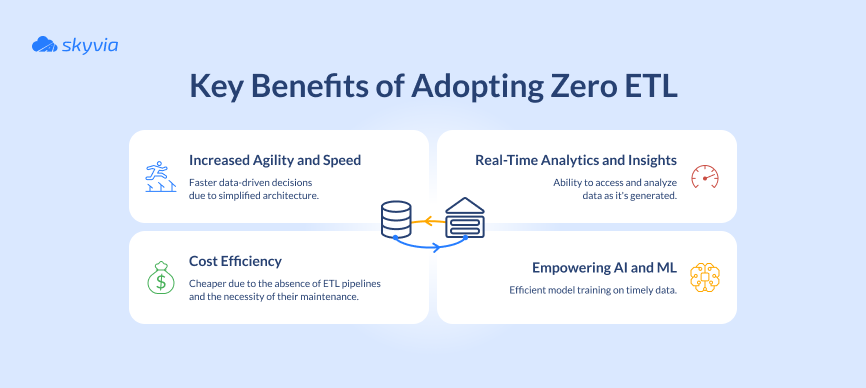

The Business Impact: Key Benefits of Adopting Zero ETL

As a modern approach, Zero ETL is designed to align with today’s realities, such as:

- rapid data aging,

- the explosion of operational data,

- the widespread adoption of cloud services, and

- the growing demand for timely data in AI and ML.

Its trademark is a simplified architecture that facilitates direct flow from source to target without passing through external tools or staging layers. Combined with cloud elasticity, this design enables the advantages Zero ETL is known for – highlighted in the diagram below.

Common Use Cases for Zero ETL

Before we explore real-life examples, it’s worth emphasizing that Zero ETL is primarily a design principle, not a single technology or component. You may find some examples overlap – in dashboards, streaming, or transactional syncs. That’s because they all represent practical implementations of the same core idea – a continuous, low-latency flow of data – but viewed from different perspectives:

- Real-Time BI: who consumes it.

- Operational Analytics: where it comes from.

- Data Replication to Cloud Warehouses: how it moves.

- AI/ML Applications: what it enables.

Real-Time Business Intelligence

Zero ETL powers dashboards and reports with up-to-the-minute information – and there’s hardly an industry where fresh metrics won’t make a difference. The table below highlights several real-world examples.

| Industry | Example Use Cases |
|---|---|
| Retail and E-commerce | Live sales dashboards; inventory and supply-chain tracking; dynamic pricing and promotions |
| Finance and Banking | Stock trade records and transaction monitoring; fraud detection; real-time P&L dashboards |
| Healthcare | Dashboards of patients’ vital-sign readings; operational dashboards in hospitals; claims and billing analytics |
| Telecommunications | Network-performance monitoring; customer experience analytics |
| Logistics and Transportation | Fleet-tracking dashboards; predictive maintenance analytics |
| SaaS/Tech | Product-usage analytics; user-behavior dashboards; subscription and churn analytics |

Operational Analytics

Operational systems are the transactional, process-driven, or event-producing platforms that record ongoing business activity: CRMs, ERPs, POS, IoT, logistics, or financial systems. Zero ETL ensures that this data becomes usable as soon as it’s generated. Example scenarios may include:

- POS systems: Monitor real-time sales and restock needs.

- CRM or support systems: Track ticket volume and customer response times.

- Sensors (IoT): Detect product anomalies in real time.

Data Replication to Cloud Data Warehouses

This is one of the core use cases for Zero ETL, making transactional data instantly available for analytics. Analyzing it is like keeping a finger on the pulse: it shows exactly what customers, systems, and employees are doing right now.

Whether detecting fraud within seconds of a suspicious payment, or rerouting deliveries during peak traffic – Zero ETL enables teams to respond fast by continuously synchronizing operational sources with warehouses.

Powering AI/ML Applications

Timely data availability is what makes AI apps responsive in user interactions, and ML models accurate and adaptive to real-world changes. Zero ETL enables that by ensuring that data is always current – minimizing drift, supporting continuous learning, and accelerating model responsiveness.

The “Gotchas”: Limitations and Considerations of Zero ETL

Reading this article may give you the impression that Zero ETL is all benefits and no drawbacks. Indeed, it’s often marketed as a simple, low-maintenance approach to integration. But in practice, there are tradeoffs beneath the surface that can bite you later if overlooked. So let’s pinpoint the flies in the ointment.

Limited Data Transformation Capabilities

Zero ETL removes pipelines, but not the logic behind those pipelines. Complex transformations don’t vanish; they only shift into other architectural layers.

In Zero ETL setups, transformations are handled either within the target platform (using SQL or built-in features) or applied at query time. While this works well for simple reshaping, complex workflows with multi-step logic or large-scale data cleaning may quickly overwhelm a Zero ETL design and stall your integration.
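One common way to handle transformation inside the target is a SQL view over the replicated raw table, so the reshaping runs whenever the view is queried. The SQLite sketch below stands in for a cloud warehouse; the `raw_orders` table, its columns, and the status codes are hypothetical.

```python
import sqlite3

wh = sqlite3.connect(":memory:")

# Replicated as-is from the source: amounts in cents, status as one-letter codes.
wh.execute("CREATE TABLE raw_orders (id INTEGER, amount_cents INTEGER, status TEXT)")
wh.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 1999, "P"), (2, 500, "C"), (3, 12000, "P")],
)

# Simple reshaping lives in the target as a view and is applied at query time.
wh.execute(
    """
    CREATE VIEW orders_clean AS
    SELECT id,
           amount_cents / 100.0 AS amount,
           CASE status
               WHEN 'P' THEN 'paid'
               WHEN 'C' THEN 'cancelled'
               ELSE 'unknown'
           END AS status
    FROM raw_orders
    """
)

paid = wh.execute("SELECT * FROM orders_clean WHERE status = 'paid' ORDER BY id").fetchall()
print(paid)  # [(1, 19.99, 'paid'), (3, 120.0, 'paid')]
```

A view like this handles renames, lookups, and unit conversions comfortably; chains of dependent cleansing steps are where the approach starts to strain.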

Data Governance and Quality Concerns

Traditional ETL pipelines enforce validation, cleansing, and schema checks before data reaches analytical systems. In Zero ETL, raw data arrives almost instantly, which means bad data arrives instantly too.

To compensate for this, quality checks are shifted to other layers: destination platform, query engine, native replication layer, or even downstream BI and ML systems. Governance still exists, but it’s distributed rather than centralized in a single pipeline.
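When checks move to the destination, one pattern is to validate records as they land and route failures to a quarantine area rather than rejecting the whole batch. The rules and field names below are hypothetical; on a real platform, such checks are often expressed as SQL constraints, data-quality rules, or tests in the transformation layer instead of application code.

```python
from typing import Any

# Hypothetical quality rules: field name -> predicate the value must satisfy.
RULES = {
    "order_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the names of fields that are missing or break their rule."""
    return [
        field
        for field, ok in RULES.items()
        if field not in record or not ok(record[field])
    ]


def land(batch: list[dict[str, Any]]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split an arriving batch into accepted rows and quarantined rows with reasons."""
    accepted, quarantined = [], []
    for record in batch:
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            accepted.append(record)
    return accepted, quarantined


accepted, quarantined = land([
    {"order_id": 1, "email": "a@example.com", "amount": 20.0},
    {"order_id": 2, "email": "not-an-email", "amount": -5},
    {"order_id": 3, "amount": 9.5},
])
print(len(accepted))  # 1
print([reasons for _, reasons in quarantined])  # [['email', 'amount'], ['email']]
```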

Not a One-Size-Fits-All Solution

Although Zero ETL shines when speed and simplicity are essential, there are other cases with different priorities. It is less effective when:

- Data is very messy or inconsistent.

- Complex transformations are required.

- Multiple sources must be blended before analysis.

- Integration spans systems outside the cloud provider’s ecosystem.

The Future of Data Integration: Is Zero ETL the New Standard?

Talking about a “standard” implies universality – something widely adopted and recommended in most contexts. Is that true for Zero ETL? Yes and no. On the one hand, it is becoming a standard within its niche of real-time, cloud-native workflows. On the other, the data landscape is far too diverse for any single pattern to become universal. So what comes next?

The Evolving Landscape

Recognizing the need for real-time integration, hyperscalers are heavily investing in Zero ETL and introducing native, low-latency workflows. These include pre-built, in-cloud connections (such as AWS’s Zero ETL services) as well as cross-cloud capabilities like BigQuery Omni for data federation and multi-cloud analytics.

Hybrid Approaches

Despite all the advantages, Zero ETL won’t replace traditional ETL entirely, and it doesn’t need to. A hybrid model lets organizations take the best of both worlds, selecting the right method for each scenario.

The Role of Data Integration Platforms

In fragmented data landscapes, integration platforms can serve as the central element that links all the components together. By supporting both traditional ETL/ELT and near-real-time integration patterns, solutions like Skyvia give organizations the flexibility to choose the most effective approach for each workload, without locking themselves into a single architecture.

Conclusion

Zero ETL is a modern, pipeline-free integration approach that reflects today’s priorities: speed, simplicity, and real-time access to data. Its power comes from a simplified architecture and cloud-native capabilities that support federated queries and built-in replication between services.

For organizations that value flexibility, Skyvia offers a broad range of integration methods across many platforms – from traditional ETL and ELT to automated, Zero-ETL-like data flows. Whether you’re building multi-step workflows, performing complex transformations, or incorporating real-time patterns, Skyvia provides the tools to handle it all with ease.

To see how Skyvia can support your integration strategy – today and as Zero ETL evolves – explore our full suite of integration solutions.

F.A.Q. for Zero ETL

How is Zero ETL different from traditional ETL?

Traditional ETL extracts, transforms, and loads data through multi-step pipelines. Zero ETL skips those stages and uses cloud-native services to sync or query data instantly.

What is the main advantage of using Zero ETL?

It delivers real-time or near-real-time access to data with far less complexity, letting teams analyze fresh information without managing pipelines.

Is Zero ETL a complete replacement for traditional ETL?

No. Zero ETL is ideal for real-time and low-latency use cases, while traditional ETL is still needed for complex transformations and large-scale data modeling.

What technologies are commonly used to enable Zero ETL?

Zero ETL often uses change data capture, data streaming, federated querying, and cloud-native replication features offered by services like AWS, Google Cloud, and Snowflake.

What are the main limitations or challenges of Zero ETL?

Limited transformation options, distributed governance, dependence on cloud ecosystems, and difficulty handling complex workflows can all pose challenges.