Manual tasks make up the lion’s share of processes in any business. While some could be creative, most are repetitive and require lots of time and attention. Luckily, software solutions come as lifesavers for automating such routine procedures.

One-fifth of all businesses have already experienced the power of workflow automation software. Gartner predicts that 70% of organizations will have implemented this kind of software by 2025.

Apache Airflow is a workflow orchestration solution aimed at accelerating routine procedures. This article describes Apache Airflow in detail as one of the most popular automation solutions. It also presents and compares alternatives to Airflow, their characteristic features, and recommended application areas. With that information, each business can decide which workflow automation tool would benefit it most.

About Apache Airflow

Some years ago, Airbnb needed to orchestrate its many complex workflows and created Airflow for that purpose. The open-source tool joined the Apache Software Foundation’s incubation program in 2016 and became a top-level Apache project in 2019.

Airflow aims to ensure effective workflow automation through the construction of data pipelines. Pipelines are written in Python by creating tasks and establishing dependencies between them. Such a structure of tasks and dependencies is known as a directed acyclic graph (DAG). Airflow’s scheduler and executor components respectively plan and carry out DAGs.
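To make the DAG idea concrete, here is a minimal sketch that uses only Python’s standard library rather than Airflow’s own API. The task names and the `plan` helper are hypothetical; the point is only that tasks plus dependencies yield a valid execution order, and that a cycle makes planning impossible.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps a task to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}

def plan(deps):
    """Return one valid execution order.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    which is why the graph has to be acyclic.
    """
    return list(TopologicalSorter(deps).static_order())

print(plan(dependencies))
```

In Airflow itself, the scheduler plays the role of `plan`, and the executor runs each task once its upstream tasks have finished.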

Advantages of Apache Airflow

This tool is used in over 200 companies worldwide, including famous cross-industry players like Yahoo, PayPal, Intel, and Stripe. If such well-known companies use Airflow, there should be reasons for that. So, let’s look at the advantageous features this service offers businesses.

- Easy to deploy. Building simple data transfer or complex machine learning workflows is done with Python. Moreover, Airflow makes it easy to modify and adjust data pipelines anytime.

- Real-time monitoring. This service implements an advanced alert system that notifies users about any error or interruption in a running task. Log files are easily accessed via the GUI and provide a detailed error description.

- Versatility. Airflow integrates with tools such as Zendesk, PostgreSQL, Docker, Kubernetes, GitHub, and others, so data workflows can be set up in the environment of one’s choice.

- Community support. Airflow is an open-source platform with a constantly expanding community. This contributes to the fast addition of new features and bug fixes as well as fruitful collaboration among community members.

Most Common Apache Airflow Use Cases

Apache Airflow is used within a variety of scenarios.

- Use Case 1. Scheduling and pre-scheduling workflows based on specific parameters. For instance, when a sales deal is made and registered in Dynamics CRM, this data should be transferred to HubSpot. Thanks to Airflow’s sensors, the workflow is triggered when the data arrives in Dynamics CRM, and the data is then sent to HubSpot.

- Use Case 2. Airflow is also suitable for designing ETL pipelines for working with batch data. That way, the data is extracted from one or several sources, needed data transformations are performed, and then data gets loaded to the destination platform.

- Use Case 3. Airflow is an excellent tool for orchestrating the training of machine learning models. Such models require large amounts of training data to make accurate predictions, and Airflow pipelines can gather and prepare that data before each training run.
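The batch ETL scenario from Use Case 2 can be sketched in plain Python. This is an illustration under stated assumptions, not an Airflow pipeline: the sample CSV data, the `deals` table, and the three function names are all hypothetical. In Airflow, each function would typically become its own task.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for a real source system.
RAW_CSV = "deal_id,amount,currency\n1,100.50,usd\n2,,usd\n3,75.00,eur\n"

def extract(text):
    """Read rows from CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Drop rows without an amount; normalize types and currency codes."""
    return [
        (int(r["deal_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
        if r["amount"]
    ]

def load(records, conn):
    """Write the cleaned records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS deals "
        "(deal_id INTEGER, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO deals VALUES (?, ?, ?)", records)
    conn.commit()

# An in-memory SQLite database plays the role of the destination platform.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM deals").fetchall())
```

Keeping the three stages as separate functions mirrors how an orchestrator retries or reruns a single failed stage without repeating the others.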

Being an open-source tool, Apache Airflow can’t boast powerful support options despite relying on a solid community. It might be an issue when learning how to use the tool and figuring out how its features work. Moreover, the Python coding requirement might not be suitable for all users. Therefore, we have prepared a list of Airflow alternatives that provide similar functionality but are easier to use at the same time.

Skyvia: Best Alternative to Airflow

Skyvia was initially designed as a cloud data integration platform offering such options as ETL data pipeline construction and ELT scenarios. However, Skyvia keeps developing and now provides other tools for working with data. One of those is the Automation solution, which allows building and managing workflows similar to Airflow’s. See the detailed comparison of Skyvia and Apache Airflow.

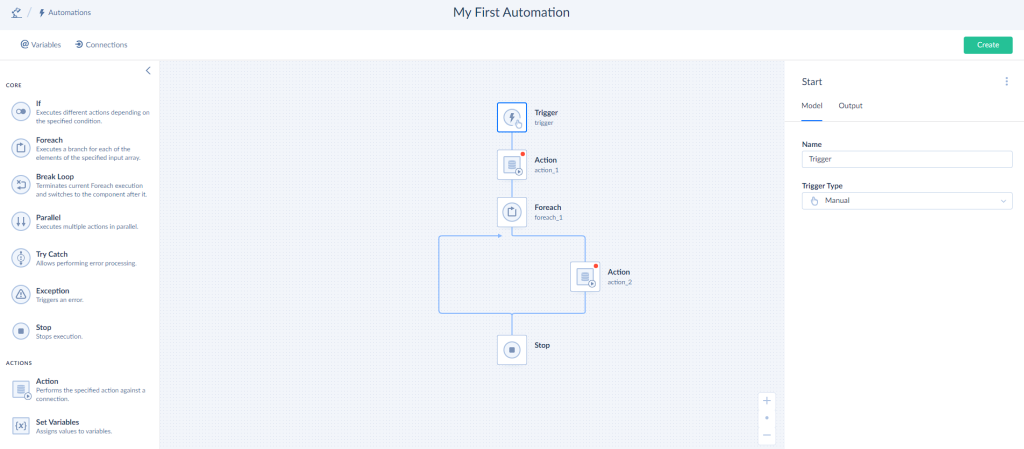

Building workflow automation with Skyvia is easy because everything can be done with a visual wizard without any knowledge of Python. First, configure the trigger, which can be of the Manual, Connection, Run on Schedule, or Webhook type, to specify the event that invokes the automation workflow. Then, add components to the diagram to define which input data should be considered and how it should be processed. As a result, the Automation tool by Skyvia offers high flexibility in planning and managing data automation processes.

Skyvia offers ETL, ELT, and Reverse ETL functionality for any data integration process. Set up the source and destination data platforms or apps to define the data path. Then, determine how data needs to be transformed and mapped. Skyvia also allows scheduling data integration processes so that new or updated data is transferred regularly. While simple scenarios are handled by Skyvia Import, more complex data-related tasks use the Data Flow and Control Flow tools. They make it possible to involve multiple data connectors and complex data transformation scenarios.

Key Features of Skyvia

We have presented how Skyvia copes with data workflows within Automation, Import, and Data Flow. However, apart from being a data integration service, Skyvia offers its users many other functions and features.

- User-friendly. The no-code approach in Skyvia makes it possible to construct workflow automation and data integration scenarios in minutes.

- Accessed via browser. Being a cloud-based platform, Skyvia is OS-independent and easily accessed from any browser. It’s incredibly convenient for remote teams as any collaborator could access or adjust data pipelines.

- Multifunctional. Apart from simple data import, Skyvia offers solutions for bi-directional data synchronization, data replication, data migration, etc.

- Affordable and Scalable. Start using Skyvia for free and pay only when an extended set of features is needed. With the Enterprise pricing plan, scheduling an unlimited number of integration and data workflow scenarios is possible.

Other Apache Airflow Competitors

Luigi

Spotify developed Luigi to manage Hadoop jobs, such as MapReduce, that require heavy computation across clusters. Similar to Airflow, Luigi has the task concept at its center: this is where the actual computation takes place. Luigi also introduces the ‘target’ notion, which stands for an external file that holds a task’s output.
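The task and target idea can be illustrated without Luigi itself. The sketch below is a plain-Python imitation, so `FileTarget`, `WordCount`, and `build` are hypothetical names and not Luigi classes. It shows the key consequence of targets: a task whose output file already exists does not need to run again.

```python
import os
import tempfile

class FileTarget:
    """Hypothetical stand-in for a target: a file holding a task's output."""
    def __init__(self, path):
        self.path = path

    def exists(self):
        return os.path.exists(self.path)

class WordCount:
    """Hypothetical task: counts words and writes the number to its target."""
    def __init__(self, text, out_path):
        self.text = text
        self.target = FileTarget(out_path)

    def complete(self):
        # The task counts as done once its target exists.
        return self.target.exists()

    def run(self):
        with open(self.target.path, "w") as f:
            f.write(str(len(self.text.split())))

def build(task):
    """Run the task only if its target is missing; return True if it ran."""
    if task.complete():
        return False
    task.run()
    return True

workdir = tempfile.mkdtemp()
task = WordCount("to be or not to be", os.path.join(workdir, "count.txt"))
first = build(task)   # target is missing, so the task runs
second = build(task)  # target now exists, so the task is skipped
print(first, second)
```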

Even though Airflow and Luigi have much in common (open-source projects, Python used, Apache license), they have slightly different approaches to data workflow management. The first thing is that Luigi prevents tasks from running individually, which limits scalability. Moreover, Luigi’s API implements fewer features than that of Airflow, which might be especially difficult for new users.

Prefect

Prefect, similarly to Airflow, is designed to help users orchestrate their workflows using Python. This service is web-based and can be easily set up with containerization tools, such as Docker, and deployed on Kubernetes clusters. As a result, Prefect offers a high degree of scalability to its users, which might be of particular value to rapidly growing companies.

Prefect uses the ‘flow’ concept to describe the tasks and dependencies between them. This term corresponds to the DAG concept in Airflow. Moreover, the service can cope with several running tasks executed concurrently within the same workflow and offers a user-friendly interface for monitoring them.

Unlike Airflow, which runs both on-premises and in the cloud, Prefect is more oriented towards the cloud environment. Thus, it would be suitable for companies that keep their infrastructure in a private or public cloud.

Prefect is used in a variety of industries: Finance, Healthcare, E-commerce, Retail, Logistics, etc. Due to its ease of use and flexibility, this software could be adapted to any company type.

Dagster

Similar to the above-mentioned tools, Dagster also runs on Python and works with tasks and their dependencies. However, it introduces a new concept of asset-centric development: companies focus on the data assets to be delivered rather than on executing individual tasks. This approach makes Dagster stand out from other data pipeline management tools on the market.
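The asset-centric approach can be sketched in plain Python. The `asset` decorator and `materialize` function below are hypothetical imitations written for this article, not Dagster’s implementation. Each function describes a piece of data to produce, and its parameter names declare which upstream assets it needs.

```python
import inspect

ASSETS = {}

def asset(fn):
    """Register a function as a named asset (hypothetical decorator)."""
    ASSETS[fn.__name__] = fn
    return fn

@asset
def raw_orders():
    return [120, 80, 200]

@asset
def order_total(raw_orders):
    return sum(raw_orders)

@asset
def order_report(raw_orders, order_total):
    return f"{len(raw_orders)} orders, total {order_total}"

def materialize(name, cache=None):
    """Build the requested asset, building upstream assets first.

    Upstream assets are the ones named as parameters of the asset function.
    Each asset is computed at most once per call thanks to the cache.
    """
    cache = {} if cache is None else cache
    if name not in cache:
        fn = ASSETS[name]
        upstream = inspect.signature(fn).parameters
        cache[name] = fn(**{dep: materialize(dep, cache) for dep in upstream})
    return cache[name]

print(materialize("order_report"))
```

The caller asks for the asset it wants (`order_report`) and the dependency order follows from the declarations, which is the shift in focus from tasks to deliverables.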

Unlike Airflow, which supports any production environment, Dagster concentrates on cloud services and supports modern data stacks. Being cloud-native and container-native, this solution makes the scheduling and execution processes easier. Dagster was created with specific goals in mind: designing ETL data pipelines, implementing machine learning pipelines, and managing data-driven systems.

Apache NiFi

Another product by Apache is called NiFi. Even though it’s also dedicated to data workflow management, it differs from Apache Airflow in many aspects. First of all, Apache NiFi is a completely web-based tool with a drag-and-drop interface and no coding. It’s easy to add and configure processors as the graph nodes of a data workflow, set up routing directions as graph edges, and specify operations on data (filtering, joining, etc.).

Apache NiFi is often perceived as an ETL tool for managing data in batches as well as data streams. The latter concept is not supported in Airflow, so NiFi is often chosen by those companies that need to deal with streaming data.

Azure Data Factory

While Apache Airflow focuses on creating tasks and building dependencies between them for workflow automation, Azure Data Factory is suitable for integration tasks. It would be a perfect fit for the construction of the ETL and ELT pipelines for data migration and integration across platforms.

In fact, Data Factory is more similar to Skyvia than to Apache Airflow. Both tools are hosted in the Azure cloud and thus offer excellent data security and protection to their customers. Each solution provides scalability and applies a pay-as-you-go pricing model. However, there are also some differences: Data Factory offers 90+ pre-built connectors (on-premises services as well as cloud apps), while this number is 160+ for Skyvia.

Google Cloud Dataflow

Another data orchestration solution is Dataflow, which is a part of Google Cloud. Its concept is slightly different from Airflow’s and Skyvia’s, though the objective remains the same – to organize data and take advantage of it.

Google Cloud Dataflow is highly focused on real-time streaming data and batch data processing from web resources, IoT devices, etc. Data gets cleansed and filtered in pipelines built with Apache Beam, which Dataflow uses to simplify large-scale data processing. The prepared data is then ready for analysis in Google BigQuery or other analytics tools for prediction, personalization, and other purposes.

AWS Step Functions

Step Functions is a solution designed by Amazon for orchestrating workflows. It introduces the concepts of ‘step’ and ‘state’ for data automation workflows. Steps define the dataflow logic, where each action corresponds to a specific process stage. States represent the condition of the dataflow process at each step and store a log of it.
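The step and state idea can be sketched as a tiny state machine in plain Python. This is a hypothetical illustration and does not use the AWS SDK or the format in which Step Functions workflows are actually defined. The `WORKFLOW` dictionary, the step names, and the `execute` function are all assumptions made for the example.

```python
# Hypothetical workflow: each step has an action and the name of the next step.
WORKFLOW = {
    "start_at": "Validate",
    "steps": {
        "Validate": {
            "action": lambda d: {**d, "valid": d["amount"] > 0},
            "next": "Charge",
        },
        "Charge": {
            "action": lambda d: {**d, "charged": d["valid"]},
            "next": None,  # None marks the end of the workflow
        },
    },
}

def execute(workflow, data):
    """Run steps in order, recording the state of the data after each one."""
    log = []
    name = workflow["start_at"]
    while name is not None:
        step = workflow["steps"][name]
        data = step["action"](data)
        log.append((name, dict(data)))  # snapshot of the state at this step
        name = step["next"]
    return data, log

result, log = execute(WORKFLOW, {"amount": 10})
print(result)
print([name for name, _ in log])
```

The log of per-step snapshots is what makes such workflows easy to inspect when a run fails partway through.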

This service suits many use cases, such as building ETL pipelines, orchestrating microservices, and managing high workloads. AWS Step Functions is particularly efficient when combined with other AWS solutions: Lambda for computing, DynamoDB for storage, Athena for analytics, SageMaker for machine learning, etc.

Summary

Businesses operating in various industries often experience a need to automate regular daily processes.

- Marketing departments may want to analyze leads and improve campaign management with automation solutions.

- Sales teams would benefit from streamlining NDA requests and RFP approvals with software solutions.

- Engineering specialists would take advantage of automatic feature request reception with further sorting and analysis.

All this is possible with workflow automation tools such as Skyvia, Airflow, Luigi, Dagster, Prefect, etc. Check the detailed comparison charts to see how the tools’ features and parameters stack up.

Consider Skyvia for workflow automation and other data-backed operations with no coding. Try Skyvia today!