You know that feeling when the desk is buried under papers, sticky notes, and half-finished to-do lists? That’s your data right now.

Scattered across:

- CRMs.

- Spreadsheets.

- Databases.

- Apps.

Imagine you’re moving ingredients from the fridge to the kitchen counter to cook something tasty. That’s like ETL.

Data integration is running the entire kitchen smoothly, so every dish (sales reports, dashboards, and customer insights) comes out perfect, on time, every time.

In this article, we’ll walk through:

- What sets ETL apart from full-scale data integration?

- Why modern ELT approaches are gaining ground.

- How to choose the correct tools for your stack.

- Practical implementation tips.

- What costs should you expect along the way?

Think of it as your data GPS: helping companies navigate the messy streets of information and get to clear, actionable insights without driving in circles.

Table of Contents

- What is Data Integration?

- Key Data Integration Methods

- What is ETL?

- Data Integration vs. ETL: The 7 Key Differences

- How to Choose the Right Approach: A 5-Step Framework

- The Data Integration Tool Landscape: A Detailed Comparison

- Implementation Best Practices & Common Challenges

- Security & Cost Considerations

- Conclusion

What is Data Integration?

This approach combines information from multiple sources into a single, unified view that’s accurate, consistent, and ready for analysis.

Without integration, it’s like having pieces of a jigsaw puzzle in ten different rooms. You may guess what the final picture looks like, but there will always be missing pieces. Data integration gathers all the pieces, arranges them neatly on one table, and lets the business see the full picture.

It’s more than just moving data around. It’s about creating a story from fragmented information. Sales numbers, marketing engagement, customer support tickets, and product usage tell a single, trustworthy narrative.

In real life, it’s like hosting a family dinner: everyone brings different ingredients, but only when they come together in one pot do you get a meal that actually satisfies.

Similarly, data integration ensures your business teams, from finance to marketing, are all looking at the same consistent truth and can act on it without confusion.

Key Data Integration Methods

ETL (Extract, Transform, Load)

The classic workhorse of data warehousing.

- Extract data from sources.

- Transform it into the right format.

- Load it into the data warehouse (DWH).

ETL is perfect for historical reporting and batch analytics. Unlike streaming, you prep everything first, so your final dish is reliable and consistent.

It’s the tried-and-true approach: prep all the ingredients before you start cooking.

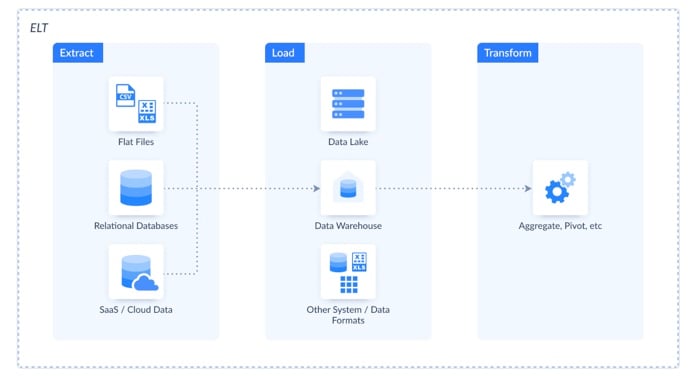

ELT (Extract, Load, Transform)

The modern, cloud-native twist.

Data is loaded first into a warehouse, then transformed as needed.

ELT is perfect for cloud analytics, large datasets, and adaptive transformations. Unlike ETL, you can adjust seasoning (transformations) while the dish is cooking.

It’s like tossing all ingredients into a smart oven that knows how to cook them perfectly. Flexible and scalable.
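The load-first, transform-later order can be sketched in a few lines. This is a minimal illustration, not a production pipeline: an in-memory SQLite database stands in for the cloud warehouse, and the table names (`raw_orders`, `customer_totals`) and sample rows are hypothetical.

```python
import sqlite3

# Hypothetical raw rows, exactly as they might arrive from a source system.
raw_orders = [
    ("1001", " Alice ", "19.99"),
    ("1002", "bob", "5.00"),
    ("1003", "ALICE", "7.50"),
]

# Assumption: an in-memory SQLite database stands in for the warehouse.
warehouse = sqlite3.connect(":memory:")

# Load: land the data untouched, every column stored as text.
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)

# Transform: performed afterwards, inside the warehouse, using SQL.
warehouse.execute(
    """
    CREATE TABLE customer_totals AS
    SELECT lower(trim(customer)) AS customer,
           round(sum(CAST(amount AS REAL)), 2) AS total_spent
    FROM raw_orders
    GROUP BY lower(trim(customer))
    """
)

customer_totals = warehouse.execute(
    "SELECT customer, total_spent FROM customer_totals ORDER BY customer"
).fetchall()
print(customer_totals)
```

Because the raw table stays in place, a different transformation can be written later without extracting the data again.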

Data Replication/Synchronization

This approach keeps operational systems in sync, including the CRM and marketing platforms. Unlike ETL, replication updates continuously.

It’s like making sure every station in the kitchen works from the same, continuously updated order list.

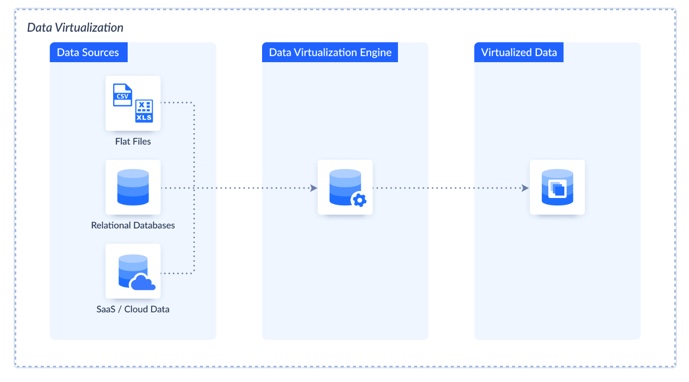

Data Virtualization

Creates a virtual layer of data without physically moving it. Useful when users need real-time access to multiple sources without building full pipelines.

It’s perfect for real-time access and ad hoc queries. Unlike ETL or ELT, you don’t move the ingredients; you just peek at them whenever you need.

Imagine having a magic window to the fridge where you see everything without opening the doors.
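To make the idea concrete, here is a small sketch of querying two sources in place. It assumes two attached in-memory SQLite databases (`crm` and `billing`, both hypothetical) as stand-ins for separate systems; real virtualization layers typically rely on a federated query engine rather than SQLite.

```python
import sqlite3

# One connection acts as the "virtual layer" over two separate databases.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS billing")

# Each source keeps its own tables; nothing is copied into a third store.
conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")]
)
conn.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)",
    [(1, 120.0), (1, 80.0), (2, 50.0)],
)


def revenue_by_customer(connection):
    """Join across both sources at query time, without moving any rows."""
    return connection.execute(
        """
        SELECT c.name, SUM(i.amount) AS revenue
        FROM crm.customers AS c
        JOIN billing.invoices AS i ON i.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


print(revenue_by_customer(conn))
```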

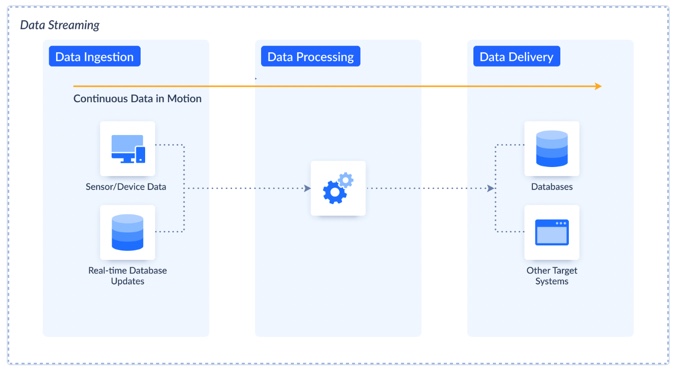

Data Streaming

This method delivers information continuously, as it’s generated, rather than in batches.

- Capture events as they happen from apps, IoT devices, or logs.

- Process or transform on the fly for immediate insights.

- Deliver to your warehouse, lake, or analytics platform without waiting for the next batch.

It’s perfect for real-time dashboards, monitoring, and fast decision-making. Unlike ETL, which preps everything before cooking, streaming lets users stir the pot while ingredients are arriving, so data is always fresh and ready to act on.

Think of it like a conveyor belt in the kitchen, where ingredients keep coming in live, so you can cook and serve in real time.
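The capture, process, deliver loop described above can be sketched with plain Python generators. The `event_source` function and its sensor readings are hypothetical; a real setup would read from a message broker or an event API instead.

```python
from collections import deque
from typing import Iterable, Iterator


def event_source() -> Iterator[dict]:
    """Hypothetical stand-in for events arriving from an app, device, or log."""
    readings = [21.0, 21.5, 35.0, 22.0]
    for seq, value in enumerate(readings):
        yield {"sensor": "oven-1", "seq": seq, "temp_c": value}


def enrich(events: Iterable[dict], window: int = 3) -> Iterator[dict]:
    """Process each event as it arrives, adding a rolling average."""
    recent = deque(maxlen=window)
    for event in events:
        recent.append(event["temp_c"])
        yield {**event, "rolling_avg": sum(recent) / len(recent)}


# Deliver: each record is handed on immediately, not held for a batch.
delivered = []
for record in enrich(event_source()):
    delivered.append(record)

print(len(delivered), "events delivered")
```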

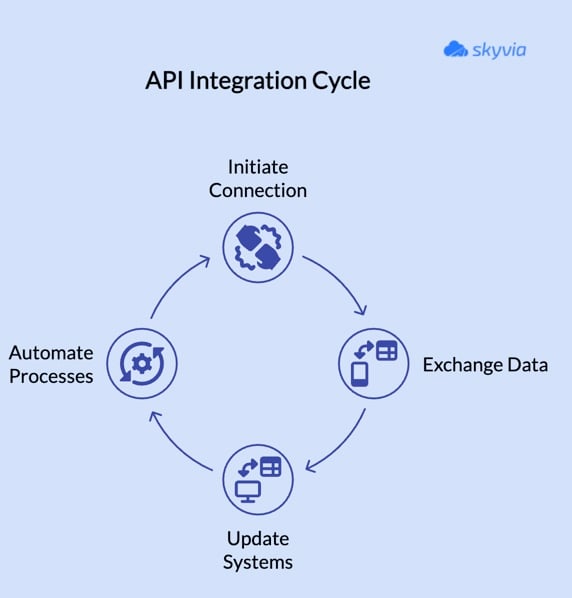

API Integration

Connect applications and services directly.

It’s a good fit for operational sync, automation, and near real-time updates. Unlike ETL, there’s no batch cooking. Data flows directly between apps as it’s produced.

Think of it as a handshake between different software tools, letting them pass information back and forth smoothly and automatically.

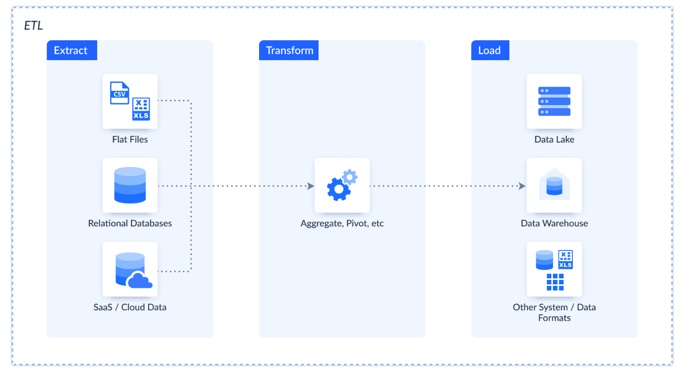

What is ETL?

ETL stands for Extract, Transform, Load: a three-step process for moving data from sources into a target system, such as a data warehouse, where users can analyze it.

Think of it like making a smoothie:

- Extract the ingredients from the fridge.

- Transform them into the right consistency.

- Load them into a glass to enjoy.

Without ETL, the data is raw and scattered; with it, everything is ready to drink… or in business terms, ready to analyze.

How ETL Actually Works

Extract

This is the first step: pulling data from its sources, such as databases, APIs, or flat files.

Techniques vary:

- Full extraction.

- Incremental extraction.

- Change data capture.

Here, imagine that you’re collecting all the ingredients before cooking.

- Everything fresh.

- All in one place.
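The difference between full and incremental extraction can be sketched as follows. The `customers` table, its `updated_at` column, and the watermark value are assumptions made for illustration; change data capture usually reads the database’s change log instead and is not shown here.

```python
import sqlite3

# Hypothetical source system with a last-modified timestamp per row.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Acme", "2024-01-01T09:00:00"),
        (2, "Globex", "2024-01-02T10:30:00"),
        (3, "Initech", "2024-01-03T08:15:00"),
    ],
)


def extract_full(conn):
    """Full extraction: read every row on every run."""
    return conn.execute(
        "SELECT id, name, updated_at FROM customers ORDER BY id"
    ).fetchall()


def extract_incremental(conn, watermark):
    """Incremental extraction: read only rows changed since the watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Advance the watermark to the newest timestamp seen, if any.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


all_rows = extract_full(source)
changed, watermark = extract_incremental(source, "2024-01-01T23:59:59")
print(len(all_rows), "rows in full extract;", len(changed), "rows changed")
```

Storing the returned watermark between runs is what lets the next run skip rows it has already seen.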

Transform

Here’s where the magic happens. Data is moved into a staging area, where it gets cleaned, standardized, deduplicated, and shaped according to the business rules.

Think of it like chopping, blending, and seasoning your ingredients so they’re consistent and ready to use.
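As a rough sketch of what cleaning, standardizing, and deduplicating can look like, the function below works on a list of staged rows. The field names and the business rule (drop rows without a usable email) are hypothetical examples, not rules from any particular tool.

```python
def transform(rows):
    """Clean, standardize, and deduplicate staged rows."""
    seen_emails = set()
    cleaned = []
    for row in rows:
        # Clean: trim whitespace and normalize case.
        email = row["email"].strip().lower()
        # Assumed business rule: skip rows without a usable email.
        if "@" not in email:
            continue
        # Deduplicate: keep the first row for each email.
        if email in seen_emails:
            continue
        seen_emails.add(email)
        cleaned.append(
            {
                "email": email,
                # Standardize: upper-case country codes, numeric amounts.
                "country": row["country"].strip().upper(),
                "amount": round(float(row["amount"]), 2),
            }
        )
    return cleaned


staged = [
    {"email": " Ana@Example.com ", "country": "us", "amount": "10.5"},
    {"email": "ana@example.com", "country": "US", "amount": "10.50"},
    {"email": "not-an-email", "country": "de", "amount": "3"},
    {"email": "li@example.com", "country": " de ", "amount": "7"},
]
result = transform(staged)
print(result)
```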

Load

Finally, the transformed data is loaded into the target warehouse or data mart.

This can be a full load (everything at once) or an incremental load (only new or changed data). It’s like pouring your finished smoothie into a glass. You can drink it now or store it for later.
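A full load and an incremental load might be sketched like this, again with an in-memory SQLite database standing in for the warehouse. The `dim_customer` table and its rows are hypothetical.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, name TEXT)")


def full_load(conn, rows):
    """Full load: replace the whole table with a fresh snapshot."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM dim_customer")
        conn.executemany("INSERT INTO dim_customer VALUES (?, ?)", rows)


def incremental_load(conn, rows):
    """Incremental load: insert new rows and overwrite changed ones by key."""
    with conn:
        conn.executemany("INSERT OR REPLACE INTO dim_customer VALUES (?, ?)", rows)


full_load(warehouse, [(1, "Acme"), (2, "Globex")])
incremental_load(warehouse, [(2, "Globex Corp"), (3, "Initech")])

final_rows = warehouse.execute(
    "SELECT id, name FROM dim_customer ORDER BY id"
).fetchall()
print(final_rows)
```

The incremental path touches only two rows here, which is why it tends to scale better than reloading everything on each run.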

Data Integration vs. ETL: The 7 Key Differences

ETL is like following a classic recipe step-by-step in the kitchen. Precise, predictable, and focused on one dish.

Data integration, on the other hand, is like running a busy restaurant where multiple chefs, ingredients, and dishes all need to come together seamlessly.

Both feed the same goal: delicious insights, but the approach is very different. Let’s break it down.

1. Scope

- ETL. A well-defined process: extract, transform, load. Like following a single recipe from start to finish. It’s focused on moving structured data from sources into a warehouse for analytics.

- Data Integration. A broader concept encompassing multiple processes, tools, and workflows to unify data across the organization. Think of it as running a whole kitchen: multiple dishes, chefs, and prep stations working together for one seamless experience.

2. Data Transformation

ETL. Here we’re talking about the staging area, where raw data is:

- Cleaned.

- Standardized.

- Deduplicated.

- Enriched.

- Validated before loading into the warehouse.

Typical operations include data type conversion, aggregations, business rule applications, and format validation. All prepped, like chopping and marinating ingredients before cooking.

Data Integration. Transformation can happen in the target system or on-the-fly. Modern tools handle:

- Real-time mapping.

- Conditional transformations.

- Merging multiple sources.

- Calculated fields.

It reduces the need for a separate staging area.

Think of it like adjusting seasoning while the dish is being served: dynamic, fast, and adaptable.

3. Timing

- ETL. Batch-oriented. Data is typically processed on a schedule (nightly, weekly). It’s like slow-cooking a stew: careful, methodical, and predictable.

- Data Integration. Can support batch, near real-time, or real-time processing. This is like running a live kitchen where meals are prepped and served on demand. Streaming APIs, CDC (Change Data Capture), and micro-batches keep systems synced continuously.

4. Target Systems

- ETL. Loads data into a data warehouse or data mart built for analytics. It’s like plating every dish for a single dining room.

- Data Integration. Delivers data to warehouses, lakes, applications, and operational systems. It’s like one kitchen serving the dining room, the takeout counter, and catering at the same time.

5. Data Type

- ETL. Mostly handles structured data (tables, rows, columns). Like working only with pre-cut, organized ingredients.

- Data Integration. Supports structured and unstructured data (logs, JSON, XML, multimedia). It’s handling raw, messy, or semi-structured ingredients while still delivering a cohesive dish.

6. Use Case

- ETL. Focused on historical business intelligence, aggregating and preparing data for reports and dashboards. It’s like prepping weekly meal kits. Everything is planned in advance.

- Data Integration. Supports BI, operational sync, and app-to-app communication. It’s like running a busy restaurant kitchen, keeping all orders and prep stations in sync while serving customers in real time.

7. Architecture

- ETL. Rigid, predefined flows. Data pipelines are fixed, like following a strict recipe. Changes require rewriting or significant adjustments.

- Data Integration. Flexible, often hub-and-spoke or mesh-based, allowing multiple sources and destinations to connect dynamically. Think of it as multiple chefs sharing ingredients and adjusting on the fly for different dishes simultaneously.

The table below summarizes the seven differences.

| Feature | ETL | Data Integration |
|---|---|---|
| Scope | Defined process | Holistic concept |
| Data Transformation | Staging area: pre-processing, cleansing, deduplication | Target system or on-the-fly; dynamic mapping, enrichment, calculations |
| Timing | Batch (nightly/weekly) | Batch, Near Real-Time, Real-Time |
| Target Systems | Data Warehouses | Warehouses, Lakes, Applications, Operational Systems |
| Data Type | Structured | Structured & Unstructured |
| Use Case | Historical BI | BI, Operational Sync, App-to-App |
| Architecture | Rigid, predefined flows | Flexible, hub-and-spoke or mesh |

How to Choose the Right Approach: A 5-Step Framework

Picking the right way to integrate or move data can feel like deciding what kind of kitchen to build:

- A small prep table.

- A full commercial kitchen.

- A smart automated setup.

The wrong choice can slow everything down or make your team pull their hair out.

The five steps below help you figure out the setup that actually works for your team and business.

Step 1: Define Your Use Case

First, ask yourself: what’s the goal? Are you building dashboards for BI, syncing operational systems in real time, or doing a one-time data migration?

Knowing the use case is like deciding whether you’re cooking a quick snack or preparing a full banquet. It sets the tone for every decision that follows.

Step 2: Assess Your Data Volume and Velocity

How much data are we talking about: gigabytes or terabytes? Do you need batch processing, or is streaming required?

This is like figuring out if you’re feeding a small family or a stadium crowd. The bigger and faster the flow, the more robust your integration tools and processes need to be.
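A quick back-of-the-envelope calculation can help here. The sketch below estimates the sustained throughput a batch job would need; the 500 GB daily volume and 4-hour window are made-up inputs, so substitute your own numbers.

```python
def required_throughput_mb_per_s(daily_volume_gb: float, window_hours: float) -> float:
    """Sustained MB/s needed to move a daily volume within a batch window."""
    megabytes = daily_volume_gb * 1024
    seconds = window_hours * 3600
    return megabytes / seconds


# Hypothetical example: 500 GB per day, loaded in a 4-hour nightly window.
nightly = required_throughput_mb_per_s(daily_volume_gb=500, window_hours=4)
print(f"{nightly:.1f} MB/s sustained")
```

If the figure looks unrealistic for your sources or network, that is a signal to consider incremental loads or streaming instead of one large batch.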

Step 3: Evaluate Your Data Sources and Target

Where’s your data coming from?

- Structured databases.

- SaaS APIs.

- Spreadsheets.

And where is it going? Data warehouses, lakes, or operational apps?

Think of it as knowing where the ingredients come from and which kitchen stations they need to reach. Some ingredients are delicate and need special handling; some are easy to toss in.

Step 4: Consider Your Team’s Technical Skills

Do you have SQL wizards, Python developers, or mostly analysts who prefer no-code solutions?

The team’s expertise dictates whether you can handle custom pipelines or should go with a managed, user-friendly tool.

It’s like choosing between cooking from scratch versus using a ready-made meal kit. Pick the method your team can actually manage.

Step 5: Factor in Your Budget and Scalability Needs

Finally, money talks, and so does growth. Can you afford dedicated dev time and infrastructure, or do you need a predictable subscription with minimal maintenance?

Also, think ahead: will your data volumes double next year? Scalability is like building a kitchen that can handle both weekday meals and holiday feasts without collapsing.

The Data Integration Tool Landscape: A Detailed Comparison

Choosing a data integration tool can feel like walking into an appliance store with a hundred different kitchen gadgets.

Some are big and powerful, some are sleek and fast, and some try to do everything at once. The right choice depends on the team, budget, and what kind of “kitchen” you need to run for the data.

Below, we’ll walk through four major categories:

- Enterprise Powerhouses.

- Modern cloud-native ELT tools.

- Flexible All-in-One Platforms.

- Open-Source Alternatives.

For each, we’ll highlight features, typical users, pricing, and pros and cons, helping you see which tool fits your business like the right knife in the drawer.

1. Enterprise Powerhouses

These are the heavyweights of the data world. In kitchen terms, think of them as professional-grade ovens:

- Powerful.

- Reliable.

- Built for large-scale operations.

But they require expertise and budget to run efficiently. They excel in complex, regulated environments with massive datasets and are often used by Fortune 500 companies.

| Tool | Features | Typical Customers | Pricing Model | Pros | Cons |
|---|---|---|---|---|---|
| Informatica | ETL, Data Quality, Data Governance, Metadata Management. | Large enterprises. | High-end, license-based. | Extremely powerful, enterprise-grade support, wide connectivity. | Expensive, complex setup, requires skilled staff. |
| Talend | ETL, ELT, Data Quality, API Integration, Cloud Connectors. | Large enterprises. | License-based. | Flexible, strong data governance, open connectivity. | Steep learning curve, high initial cost. |

2. Modern Cloud-Native (ELT-Focused)

If Enterprise Powerhouses are the professional ovens, modern cloud-native tools are like sleek, high-tech blenders. Fast, easy to use, and perfect for smaller kitchens.

These tools focus on ELT pipelines and are designed for startups and mid-market companies that need quick, reliable data movement without heavy setup or infrastructure.

| Tool | Features | Typical Customers | Pricing Model | Pros | Cons |
|---|---|---|---|---|---|
| Fivetran | Pre-built ELT pipelines, SaaS & database connectors, automated schema mapping. | Startups to mid-market. | Consumption-based. | Quick setup, low maintenance, automatic updates. | Can get expensive at high volumes, limited transformation options. |
| Stitch | ELT pipelines, SaaS & database connectors, simple transformations. | Startups to mid-market. | Consumption-based. | Cost-effective, easy to use, flexible for smaller datasets. | Limited enterprise features, less robust for complex workflows. |

3. Flexible All-in-One Platforms

These platforms are like all-in-one kitchen gadgets. They can chop, blend, cook, and even clean up afterward. Flexible, versatile, and user-friendly, they combine multiple integration capabilities (ETL, ELT, Reverse ETL, API sync) into a single solution.

Perfect for SMBs and enterprise teams that want speed, simplicity, and scalability without managing multiple tools.

| Tool | Features | Typical Customers | Pricing Model | Pros | Cons |
|---|---|---|---|---|---|
| Skyvia | ETL, ELT, Reverse ETL, API Sync, Cloud Integration. | SMBs to enterprise. | Tiered, user-friendly. | No-code, flexible, handles multiple use cases, easy setup. | Limited advanced analytics compared to enterprise-grade tools. |
| Integrate.io | ETL, ELT, Cloud connectors, API integration. | SMBs to mid-market. | Subscription-based. | Cloud-native, scalable, simple UI. | Can get expensive at higher volumes, fewer connectors than large enterprise tools. |

4. Open-Source Alternatives

Open-source tools are like DIY kitchen gadgets. You get flexibility and control at a low cost, but you also need to handle the assembly, maintenance, and troubleshooting.

These platforms are ideal for technically savvy teams that want to customize integrations without paying high licensing fees.

| Tool | Features | Typical Customers | Pricing Model | Pros | Cons |
|---|---|---|---|---|---|
| Airbyte | ETL/ELT pipelines, wide connector library, cloud & on-prem support. | Tech-savvy SMBs to mid-market. | Free (open-source), optional cloud hosting. | Highly flexible, rapidly growing connector ecosystem, free to use. | Requires DevOps/maintenance, community support only. |
| Meltano | ELT pipelines, orchestration, version control. | Developers, SMBs with in-house teams. | Free (open-source). | Fully customizable, integrates with CI/CD workflows, free. | Setup and maintenance overhead, limited enterprise support. |

Implementation Best Practices & Common Challenges

Imagine you’re the chef of a well-organized kitchen with a solid strategy, professional tools, and even a plan B.

However, without preparation, things can get messy fast.

Let’s explore some best practices to keep the data operations running like a well-oiled machine, along with common challenges and how to tackle them.

Best Practices for Success

1. Start with a Data Quality Framework

Before diving into integration, ensure data is:

- Clean.

- Consistent.

- Accurate.

It’s like prepping all the ingredients before cooking. For instance, Skyvia’s Query tool helps automate data validation and cleansing, ensuring only quality data flows through your pipelines.
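Independent of any specific tool, a basic quality check can be sketched in plain Python. The function name, the fields, and the sample rows below are hypothetical; the idea is simply to count duplicate keys and missing required values before data enters a pipeline.

```python
def quality_report(rows, key, required):
    """Summarize duplicate keys and missing required fields in a batch."""
    total = len(rows)
    keys = [row.get(key) for row in rows]
    duplicate_keys = total - len(set(keys))
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required
    }
    return {"rows": total, "duplicate_keys": duplicate_keys, "missing": missing}


# Hypothetical batch: one repeated id and one empty email.
batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
]
report = quality_report(batch, key="id", required=["email"])
print(report)
```

A pipeline could refuse to load a batch when these counts exceed a threshold the team has agreed on.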

2. Design for Scalability

Anticipate future growth by building flexible and scalable integration workflows. Cloud-based platforms allow users to scale their data operations effortlessly, handling increasing volumes without compromising performance.

3. Implement Robust Monitoring and Alerting

Set up monitoring to keep an eye on the data pipelines and catch issues early. Tools like Skyvia, Fivetran, and Stitch provide detailed execution logs and activity tracking, along with email notifications for errors or threshold limits.

While they don’t offer real-time alerts out of the box, these features help companies stay informed and address problems quickly, before they affect workflows. For organizations that need full real-time monitoring, integrating with third-party alerting tools is recommended.
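What a threshold check on a pipeline run might look like is sketched below. The function, its parameters, and the default thresholds (1% error rate, 15 minutes) are assumptions chosen for illustration, not settings from any of the tools mentioned.

```python
def check_run(rows_loaded, rows_failed, duration_s,
              max_error_rate=0.01, max_duration_s=900):
    """Return a list of alert messages for one pipeline run."""
    alerts = []
    total = rows_loaded + rows_failed
    if total == 0:
        alerts.append("no rows processed")
        return alerts
    error_rate = rows_failed / total
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    if duration_s > max_duration_s:
        alerts.append(f"run took {duration_s:.0f}s, limit is {max_duration_s}s")
    return alerts


# Hypothetical run statistics, e.g. parsed from an execution log.
healthy = check_run(rows_loaded=1000, rows_failed=0, duration_s=60)
troubled = check_run(rows_loaded=980, rows_failed=20, duration_s=1200)
print(healthy)
print(troubled)
```

The returned messages could then be forwarded to email or a third-party alerting service.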

4. Document Everything: Lineage, Transformations, Schedules

Maintain clear documentation of the data workflows, including data lineage, transformation logic, and scheduling. This practice ensures transparency and makes troubleshooting easier.

Tools with intuitive interfaces, such as Skyvia, Fivetran, Stitch, and Integrate.io, make it easy to track and document data processes.

Common Challenges & How to Solve Them

- Challenge: “My data sources are constantly changing (Schema Drift).”

- Solution: Choose tools with automatic schema migration. Skyvia’s dynamic schema mapping adapts to changes in your data sources, ensuring seamless integration without manual intervention.

- Challenge: “The integration process is slow and creates a bottleneck.”

- Solution: Optimize transformations and use incremental loads. Skyvia’s efficient data processing and support for incremental loading reduce latency and improve performance.
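For the schema drift challenge above, it helps to see what detecting drift involves. The sketch below compares an expected schema with the one actually observed; the column names and types are hypothetical, and it only detects changes rather than migrating anything.

```python
def detect_schema_drift(expected, actual):
    """Compare two {column: type} mappings and report the differences."""
    expected_cols, actual_cols = set(expected), set(actual)
    return {
        "added": sorted(actual_cols - expected_cols),
        "removed": sorted(expected_cols - actual_cols),
        "type_changed": sorted(
            col for col in expected_cols & actual_cols
            if expected[col] != actual[col]
        ),
    }


# Hypothetical schemas: what the pipeline was built for vs. what arrived today.
expected_schema = {"id": "INTEGER", "email": "TEXT", "plan": "TEXT", "phone": "TEXT"}
actual_schema = {"id": "INTEGER", "email": "TEXT", "plan": "INTEGER", "signup_source": "TEXT"}

drift = detect_schema_drift(expected_schema, actual_schema)
print(drift)
```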

Real-World Example

Cirrus Insight Data Integration with Skyvia

Cirrus Insight struggled with integrating Salesforce, QuickBooks, and Stripe, which slowed workflows and increased costs. Skyvia automated the data flows, improving operational efficiency, streamlining financial reporting, and reducing expenses.

Security & Cost Considerations

When we’re moving and integrating data, it’s not enough to get it from point A to B. We also need to protect it along the way and understand the real cost of the journey.

It’s like shipping delicate ingredients for a big dinner: you want them fresh, secure, and delivered without surprise expenses.

Security

- Data Encryption (In-Transit & At-Rest). Data should be protected while moving across networks (in-transit) and while stored on servers (at-rest). Cloud-based tools like Skyvia, Fivetran, and Stitch provide built-in encryption to keep data safe from prying eyes.

- Compliance (GDPR, HIPAA). Different ingredients have different handling rules. Personal data, health information, or financial records have regulations around storage and access. Choosing tools that comply with GDPR, HIPAA, and other standards ensures the organization isn’t accidentally serving up a compliance disaster.

- Access Control. Not everyone needs access to every dish in the kitchen. Role-based permissions and account management features make sure only the right people can touch sensitive data.
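Role-based permissions, as described in the last item, can be sketched as a simple lookup. The roles and permission names below are hypothetical; real platforms manage this through their own account and workspace settings.

```python
# Hypothetical mapping from role to the permissions it grants.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "finance": {"read:sales", "read:billing"},
    "admin": {"read:sales", "read:billing", "write:pipelines"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Allow an action only if the role explicitly grants it."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "read:billing"))
print(is_allowed("finance", "read:billing"))
```

Unknown roles get an empty permission set, so access is denied by default.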

The Total Cost of Ownership

The total cost of integrating data combines tools, infrastructure, and human effort.

- Tool Licensing/Subscription Fees. These are like buying your kitchen appliances. Enterprise platforms like Informatica come with a hefty upfront cost, while cloud-native or flexible platforms like Skyvia, Fivetran, and Stitch offer subscription-based pricing that scales with usage.

- Infrastructure/Cloud Compute Costs. That’s like the electricity and gas you pay to run the kitchen. Cloud-based integration pipelines consume compute resources, storage, and network bandwidth, especially for large volumes or frequent loads.

- Development & Maintenance Hours (Personnel Cost). Even with the best tools, someone needs to cook. Custom API pipelines require developers to maintain, troubleshoot, and optimize the flows. With no-code platforms, this effort is reduced, but some monitoring and management are still needed.

Conclusion

Let’s wrap this up: it’s not about ETL versus data integration, but about knowing when to use each.

- ETL is a powerful tool, perfect for preparing historical data for analysis. It’s just one part of a broader data integration strategy that keeps the organization running smoothly.

- The modern approach for most businesses is hybrid: use ETL for BI and reporting, and combine it with other integration methods (Cloud-native ELT, API syncing, no-code platforms) for operational needs, real-time updates, and app-to-app workflows.

Just imagine having both slow-cooked meals and quick stir-fries ready in the same kitchen.

You get reliability, speed, and flexibility all at once.

Ready to build your unified data strategy? Explore Skyvia’s flexible platform, handling everything from ETL to real-time sync, and see how easy it can be. Try it free today.

F.A.Q. for Data Integration and ETL

Can a small business use data integration tools?

Yes! No-code and cloud-native tools like Skyvia, Fivetran, and Stitch make integration accessible for small businesses without heavy IT teams.

How do I measure the success of my data integration project?

Track data accuracy, timeliness, and accessibility. Reduced errors, faster reporting, and better insights indicate your integration is delivering value.

What are the most important skills for a data integration specialist?

Strong SQL and ETL knowledge, an understanding of APIs, data modeling, problem-solving, and familiarity with integration tools. Communication is also key for cross-team collaboration.