The emergence of AI agents – intelligent software that can think, act, and learn from results – is reshaping the way we humans approach work. From small assistants handling routine tasks to near-teammates capable of pursuing long-term goals, AI agents keep taking on more roles, lifting the weight off our shoulders.

But there’s a catch – to be really productive, AI agents need to interact with other systems. Until recently, that was a challenge, since every integration required a custom-built connection. The Model Context Protocol (MCP) is changing that by introducing a universal standard for AI to connect with external systems.

This technology is nothing short of groundbreaking. It not only makes AI smarter and more autonomous, but also offers a unified standard for developers to build upon. What MCP servers are, how they work, and why they’re crucial for the future of AI – that’s exactly what we’ll explore in this comprehensive overview.

Table of Contents

- What is the Model Context Protocol (MCP)?

- Understanding MCP Servers: The Bridge Between AI and the Real World

- Why is MCP a Game-Changer for AI Development?

- Real-World Use Cases and Examples of MCP Servers

- How to Get Started with MCP

- Conclusion

“Enterprises want AI agents to have secure, real-time access to business data with the ability to take actions – not just generate answers,” said Oleksandr Khirnyi, Chief Product Officer at Skyvia. “MCP Endpoints provide teams with a secure, no-code way of letting AI assistants, such as Claude, discover, query, and act on data, while Skyvia handles the hard parts behind the scenes.”

What is the Model Context Protocol (MCP)?

The Model Context Protocol is an open-source standard that allows AI agents to interact with external data sources and tools — securely and efficiently.

Developed and open-sourced in November 2024 by Anthropic, one of the leading creators of frontier large language models (LLMs), MCP was originally designed to help models produce richer, more context-aware responses. But it quickly evolved into an industry standard defining how AI systems interact with real-world data and applications.

By connecting to various MCP servers, an AI app builds a list of tools that the language model (like Claude, GPT-5, or Gemini) can access whenever it needs them. When, during a conversation, the model decides to tap into one of these tools, the AI layer catches the request, routes it to the right MCP server, gets the job done, and brings the results back into the conversation – while keeping the dialog ongoing.

At its core, the protocol works much like a telephone switchboard operator, connecting callers by plugging cables into the right sockets. The same principle applies to AI: thanks to MCP, it knows exactly which “wire” to connect when a model needs to call a tool or a dataset.

Let’s have a closer look at these dynamics.

Understanding MCP Servers: The Bridge Between AI and the Real World

MCP servers are at the heart of the Model Context Protocol. They are the components that enable AI to finally move from words to actions – by providing context and mediating its interaction with external systems. How do they do that?

How MCP Servers Work

An MCP server is an individual implementation of the Model Context Protocol that follows its specification to make certain capabilities of data sources – such as tools, resources, or other functionality – available for AI clients to use.

In doing so, the server acts as a wrapper, making every connected system “look the same” to the AI, no matter what technology it uses underneath.

The main components of the MCP client-server architecture include:

- Host – the AI application (such as a chat assistant or an IDE) that needs information or actions from MCP servers and manages one or more clients.

- Client – the connection layer inside the host that maintains a dedicated connection to one server, sends requests, and retrieves the required context.

- Server – the program that exposes tools, resources, or prompts (context) for the host to use.

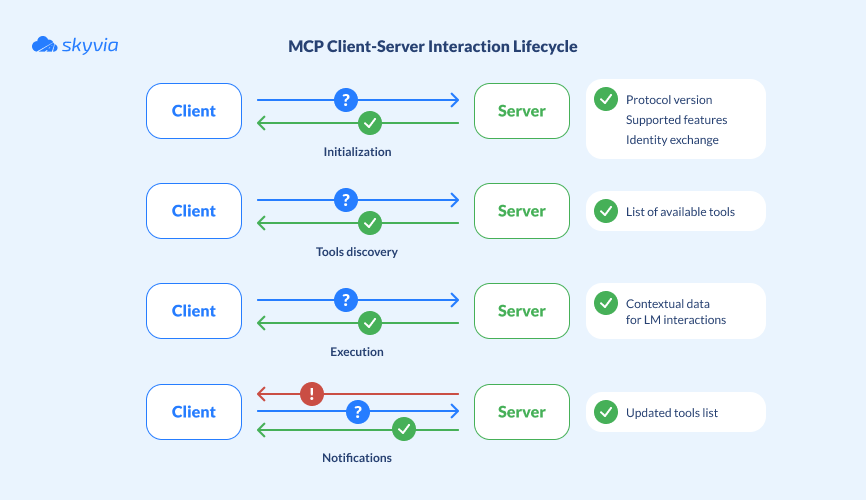

The diagram above illustrates the four key stages of client-server communication:

Initialization

In this stage, also known as the capability negotiation handshake, a client establishes a connection to a server by sending an initialize request. The server responds with details about its protocol version, supported features (capabilities), and identity.

This stage ensures that both client and server understand each other, and support all upcoming operations.
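The handshake described above can be sketched as a pair of JSON-RPC messages. This is a minimal illustration rather than a full implementation: the field names follow the public MCP specification as best understood here, while the client and server names, the version strings, and the helper functions are assumptions made up for the example.

```python
import json

# Assumption: "2025-06-18" is used as the protocol revision; check the spec for the current one.
PROTOCOL_VERSION = "2025-06-18"


def build_initialize_request(request_id: int) -> dict:
    """Client -> server: open the handshake and declare client capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            # Hypothetical client identity.
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def build_initialize_response(request: dict) -> dict:
    """Server -> client: reply with protocol version, features, and identity."""
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": request["params"]["protocolVersion"],
            "capabilities": {"tools": {"listChanged": True}},
            # Hypothetical server identity.
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


def handshake_ok(request: dict, response: dict) -> bool:
    """Both sides must refer to the same request and agree on a version."""
    return (
        response["id"] == request["id"]
        and response["result"]["protocolVersion"] == request["params"]["protocolVersion"]
    )


request = build_initialize_request(1)
response = build_initialize_response(request)
# After a successful handshake the client confirms with a notification (no "id").
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
print(json.dumps(response, indent=2))
```

A real server would compare the requested version against the versions it supports and could answer with a different one; the sketch simply echoes the client's choice.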

Tools discovery

Next, the client sends a discovery request to determine which tools are available on the server.

The AI application then compiles a list of tools from all connected servers and builds a unified registry that the language model can access during operation.
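As a rough sketch of that unified registry, the snippet below merges the tool lists returned by two servers into one lookup table. The server names, tool definitions, and the `server.tool` naming scheme are hypothetical; the protocol does not prescribe how a host namespaces tools, so treat the prefixing as one possible design choice.

```python
from typing import Dict, List

# Hypothetical results of a tool discovery request against two connected servers.
SERVER_TOOL_LISTS: Dict[str, List[dict]] = {
    "crm": [
        {
            "name": "update_record",
            "description": "Update a CRM record",
            "inputSchema": {"type": "object", "properties": {"id": {"type": "string"}}},
        },
    ],
    "billing": [
        {
            "name": "send_invoice",
            "description": "Send an invoice",
            "inputSchema": {"type": "object", "properties": {"customer": {"type": "string"}}},
        },
    ],
}


def build_registry(tool_lists: Dict[str, List[dict]]) -> Dict[str, dict]:
    """Merge per-server tool lists into one registry keyed by 'server.tool'."""
    registry: Dict[str, dict] = {}
    for server_name, tools in tool_lists.items():
        for tool in tools:
            # Prefixing avoids clashes when two servers expose the same tool name.
            qualified_name = f"{server_name}.{tool['name']}"
            registry[qualified_name] = {"server": server_name, **tool}
    return registry


registry = build_registry(SERVER_TOOL_LISTS)
print(sorted(registry))
```

Keeping the originating server next to each entry lets the host route a later tool call back to the right connection.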

Execution

After discovery, the client can send a request to invoke a specific tool. The server executes the action and responds with structured content or data that the LLM can integrate into its reasoning and conversation flow.

This process lies at the heart of MCP, demonstrating how AI agents turn requests into real-world actions.
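To make the execution step concrete, here is a small server-side dispatcher for a tool call. It is a sketch under assumptions: the `add` tool and the `handle_tools_call` helper are invented for illustration, and the message shape (a `tools/call` request answered with a `content` list and an `isError` flag) follows the public specification as understood here.

```python
from typing import Any, Callable, Dict


def add(a: float, b: float) -> float:
    """Hypothetical tool: add two numbers."""
    return a + b


# Hypothetical tool table; a real server would populate it during startup.
TOOLS: Dict[str, Callable[..., Any]] = {"add": add}


def handle_tools_call(request: dict) -> dict:
    """Run the requested tool and wrap the outcome in a JSON-RPC response."""
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # Unknown tool: reported as a protocol-level error (invalid params).
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    try:
        text, is_error = str(tool(**params.get("arguments", {}))), False
    except Exception as exc:
        # Failures inside the tool are returned as a result the model can read.
        text, is_error = f"Tool failed: {exc}", True
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": is_error},
    }


call = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}
reply = handle_tools_call(call)
print(reply["result"]["content"][0]["text"])
```

Separating protocol errors from tool failures lets the model see, and possibly recover from, a failed action instead of the whole request being rejected.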

Notifications

In this final stage, the server can proactively notify the client about any changes — such as updates to available tools or configurations.

In response, the client requests the updated tool list, allowing the AI application to refresh its registry and ensure the LLM always has access to the most current information.
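The refresh cycle can be sketched as follows. The `ToolRegistryClient` class and the `fetch_tools` callable are hypothetical stand-ins for a real client and its tool list round trip; the notification method name follows the public specification as understood here.

```python
from typing import Callable, List


class ToolRegistryClient:
    """Keeps a local tool list and refreshes it when the server says it changed."""

    def __init__(self, fetch_tools: Callable[[], List[str]]) -> None:
        # fetch_tools stands in for a real tool discovery request to the server.
        self._fetch_tools = fetch_tools
        self.tools = fetch_tools()

    def handle_message(self, message: dict) -> bool:
        """Return True if the message triggered a registry refresh."""
        is_notification = "id" not in message  # notifications carry no id
        if is_notification and message.get("method") == "notifications/tools/list_changed":
            self.tools = self._fetch_tools()
            return True
        return False


# Simulated server state that changes after the client has connected.
server_tools = ["search"]
client = ToolRegistryClient(lambda: list(server_tools))
server_tools.append("summarize")

refreshed = client.handle_message(
    {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"}
)
print(refreshed, client.tools)
```

The notification itself carries no tool data; it only tells the client that its cached list is stale, which is why the client fetches the list again.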

Key Functions of MCP Servers

As mentioned before, servers make certain functionality available for the AI to use. The protocol defines three primitives – core capabilities a server can expose to AI clients:

| Capability | Explanation | Example | Controlled by |
|---|---|---|---|
| Tools | Executable functions that enable AI agents to perform actual actions: update databases, modify files, call APIs, or execute commands based on user intent. | Send invoices; deploy code; generate reports; update CRM records | LLM |
| Resources | Read-only data sources that supply factual context to the AI. These typically include documents, tables, logs, or reference material. | Retrieve analytics data; access product catalogs; read internal documentation; view customer profiles | Application |
| Prompts | Predefined instruction templates that guide the LLM on how to interact with a user or a system. | Draft a project summary; prepare a budget proposal; create a meeting agenda; generate code snippets | User |
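The three primitives in the table above can also be written down as a small lookup structure. This is only an illustrative sketch: the method names are the ones used in the public specification as understood here, while the `PRIMITIVES` table and the `primitive_for` helper are assumptions made for the example.

```python
from typing import Dict

# Each primitive, who decides when it is used, and the requests that address it.
PRIMITIVES: Dict[str, dict] = {
    "tools": {"controlled_by": "LLM", "methods": ("tools/list", "tools/call")},
    "resources": {"controlled_by": "Application", "methods": ("resources/list", "resources/read")},
    "prompts": {"controlled_by": "User", "methods": ("prompts/list", "prompts/get")},
}


def primitive_for(method: str) -> str:
    """Return the primitive that a request method belongs to."""
    for name, info in PRIMITIVES.items():
        if method in info["methods"]:
            return name
    raise ValueError(f"Not a primitive method: {method}")


for method in ("tools/call", "resources/read", "prompts/get"):
    name = primitive_for(method)
    print(f"{method} -> {name} (controlled by {PRIMITIVES[name]['controlled_by']})")
```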

MCP uses a lightweight, low-overhead communication model to enable real-time AI behavior.

Server and client communicate through JSON-RPC 2.0 messages – a compact, structured JSON format that’s easy to process.

Messages travel over a transport such as stdio (for local servers) or Streamable HTTP (for remote ones). Both let the server send messages back to the client, so the exchange feels more like a live conversation than a series of emails. The result is faster, smoother AI-to-system communication.
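As a sketch of what this looks like on the wire, the snippet below frames JSON messages as one line each, which is how the stdio transport is described in the public specification as understood here. The `write_message` and `read_messages` helpers are hypothetical, and an in-memory buffer stands in for a real process pipe.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialize one message per line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield each non-empty line of the stream as a parsed message."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)

received = list(read_messages(pipe))
print(len(received), received[0]["method"])
```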

Why is MCP a Game-Changer for AI Development?

Standardization

Like any other protocol, MCP defines a common set of rules that govern how AI applications interact with external systems.

Being platform- and language-agnostic, it opens the door to a new level of connectivity where any client can talk to any server, as long as both speak MCP.

Scalability

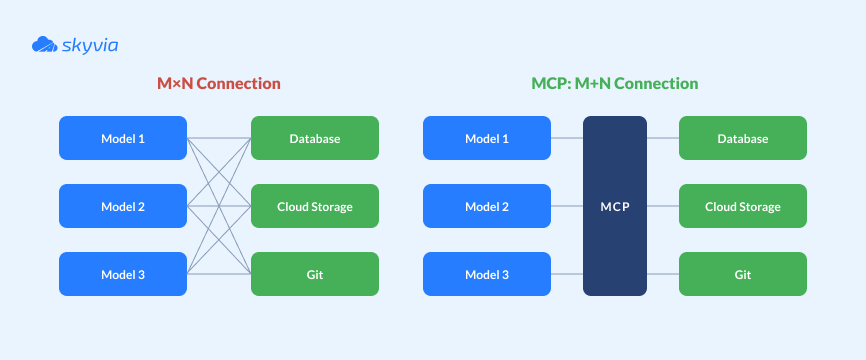

The protocol effectively solves the integration problem by turning the M×N equation into M+N. In the diagram above, M AI applications need to connect to N data sources and tools.

Without MCP, every new tool requires its own custom connection – forcing developers to write integration code again and again for each app-to-source pair, M×N integrations in total.

With MCP, developers only need to build M clients (for their applications) and N servers (for their tools). MCP acts as an intermediary layer between AI models and external systems, reducing the number of required integrations from M×N to M+N.
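The arithmetic is easy to check with a short calculation. The figures used here (5 applications, 20 data sources) are made up purely for illustration.

```python
def point_to_point_integrations(apps: int, sources: int) -> int:
    """Without a shared protocol: one custom integration per app-source pair."""
    return apps * sources


def mcp_integrations(apps: int, sources: int) -> int:
    """With a shared protocol: one client per app plus one server per source."""
    return apps + sources


apps, sources = 5, 20  # hypothetical numbers
print(point_to_point_integrations(apps, sources))  # 100
print(mcp_integrations(apps, sources))  # 25
```

The gap widens as either side grows: adding one more data source costs 5 new integrations in the first model and a single new server in the second.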

Security

The protocol provides a structural framework for safe interaction – a set of rules designed to make AI deployments more secure in real-world environments. These rules are based on:

- Clear boundaries between agents and tools – each server defines exactly which tools or data are available, what parameters can be used, and how results are returned.

- Permission-scoped access – developers can grant granular permissions: one agent might have read-only access to CRM data, while another can update records.

- Transparent logging – every tool invocation, data request, and response within MCP can be logged and traced, making it easier to see what the AI did, when, and why.

That said, the protocol doesn’t enforce these rules automatically – actual security depends on the implementation. So if an organization misconfigures an MCP server, the risk remains theirs – the protocol won’t save them.
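Because enforcement is left to the implementation, a server or gateway has to add its own checks. The sketch below shows one possible way to combine permission-scoped access with audit logging; the agent names, scope strings, and the `authorize_and_log` helper are all hypothetical and are not part of the protocol.

```python
import logging

logger = logging.getLogger("mcp.audit")

# Hypothetical permission model: which scopes each agent holds.
AGENT_SCOPES = {
    "analyst-agent": {"crm.read"},
    "sales-agent": {"crm.read", "crm.write"},
}

# Hypothetical mapping from tool name to the scope it requires.
TOOL_REQUIRED_SCOPE = {
    "get_customer": "crm.read",
    "update_customer": "crm.write",
}


def authorize_and_log(agent: str, tool: str) -> bool:
    """Allow a call only if the agent holds the tool's scope; log every decision."""
    required = TOOL_REQUIRED_SCOPE.get(tool)
    # Unknown tools and unknown agents are denied by default.
    allowed = required is not None and required in AGENT_SCOPES.get(agent, set())
    logger.info("agent=%s tool=%s allowed=%s", agent, tool, allowed)
    return allowed


print(authorize_and_log("analyst-agent", "get_customer"))
print(authorize_and_log("analyst-agent", "update_customer"))
```

Denying by default means a misconfigured or newly added tool stays inaccessible until someone grants it explicitly.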

Reduced Hallucinations

Unlike humans, AI agents rarely ask clarification questions – and when they don’t fully understand something, they can start hallucinating. It’s an annoying and risky flaw that MCP helps mitigate.

With MCP, when a user asks a question, the AI assistant doesn’t have to guess. Instead, it can call the appropriate tool and retrieve factual, up-to-date data directly through an MCP server.

Real-World Use Cases and Examples of MCP Servers

We’ve already discussed the significance of the protocol for the broader AI ecosystem – but what does it actually mean for businesses? What kinds of tasks can AI assistants, empowered by MCP, perform in real workflows?

Let’s look at some real-world examples.

Data Integration and Analytics

One of the most common applications of MCP is in data access and analysis.

Imagine a sales manager asking, “How did we perform last quarter?” – and an AI agent, connected via MCP to the company’s internal database, instantly delivers the answer – no manual queries, no dashboards.

Now picture that same agent plugged into enterprise data warehouses like BigQuery or Snowflake. The entire workflow – from SQL query generation to insight delivery – becomes automated and completed in just seconds.
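A toy version of such a data tool might look like the sketch below. It uses an in-memory SQLite database in place of a real warehouse, and the `sales` table, its figures, and the `quarterly_revenue` function are invented for the example; the result is wrapped in the text content shape that tool results use.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a few made-up sales rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (quarter TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("2024-Q4", 1200.0), ("2024-Q4", 800.0), ("2025-Q1", 500.0)],
    )
    return conn


def quarterly_revenue(conn: sqlite3.Connection, quarter: str) -> dict:
    """Hypothetical tool body: sum revenue for one quarter."""
    row = conn.execute(
        # A parameterized query keeps model-supplied input out of the SQL text.
        "SELECT COALESCE(SUM(amount), 0) FROM sales WHERE quarter = ?",
        (quarter,),
    ).fetchone()
    return {"content": [{"type": "text", "text": f"Revenue for {quarter}: {row[0]:.2f}"}]}


conn = make_demo_db()
result = quarterly_revenue(conn, "2024-Q4")
print(result["content"][0]["text"])
```

Exposing a narrow, parameterized tool like this is usually safer than letting the model run arbitrary SQL against production data.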

Business Process Automation

In the business sphere, MCP lays the foundation for smarter, context-aware automation.

Suppose you want your AI assistant to manage customer records in the company’s CRM. Through MCP servers, the agent can interact seamlessly with existing systems – such as Salesforce, HubSpot, or Zoho – along with all the other data sources that feed into them.

The CRM, exposed via MCP, provides structured access to essential information like customer records, leads, deals, and activity logs. Then, by drawing context from additional systems connected through other MCP servers, the AI assistant can perform a wide range of tasks:

- Email systems – track customer conversations, identify new leads, and update CRM entries accordingly.

- Calendar and scheduling tools – plan meetings, demos, and calls tied to customer accounts.

- Customer support platforms – summarize support tickets or complaints and attach them to the relevant CRM profiles.

- Billing and payment systems – monitor payment status, update deal stages, and notify account managers automatically.

Developer and IT Operations

This is one of the areas where MCP servers find their broadest use. From developer assistants like GitHub Copilot or Claude Code, which can connect to internal wikis to fetch the most up-to-date information, to autonomous AI systems that interact directly with cloud platforms – MCP serves as the bridge between intelligent agents and critical infrastructure.

How to Get Started with MCP

For Developers

For developers looking to expose their data through MCP servers or build AI-driven applications, there are two main approaches. The first is to build everything from scratch, following the protocol specification.

The second is to use an official SDK (software development kit). While the former is a great way to learn the protocol’s inner workings, the latter remains the preferred choice among developers.

That is hardly surprising: the SDK abstracts all the plumbing – message parsing, routing, and protocol compliance – leaving developers with the most exciting part: defining their application’s logic.
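To give a feel for the kind of plumbing an SDK takes off your hands, here is a deliberately tiny, standard-library-only imitation of a decorator-based tool registry. It is not the API of any official SDK: `MiniServer`, its methods, and the `greet` tool are hypothetical names invented for this sketch.

```python
import inspect
from typing import Any, Callable, Dict, List


class MiniServer:
    """Toy registry: collects tool functions and dispatches calls by name."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Callable[..., Any]] = {}

    def tool(self, func: Callable[..., Any]) -> Callable[..., Any]:
        """Decorator that registers a function as a tool under its own name."""
        self._tools[func.__name__] = func
        return func

    def list_tools(self) -> List[dict]:
        """Describe each tool using its docstring and parameter names."""
        return [
            {
                "name": name,
                "description": inspect.getdoc(func) or "",
                "parameters": list(inspect.signature(func).parameters),
            }
            for name, func in self._tools.items()
        ]

    def call_tool(self, name: str, arguments: dict) -> Any:
        """Route a call to the registered function."""
        return self._tools[name](**arguments)


server = MiniServer("demo")


@server.tool
def greet(name: str) -> str:
    """Return a greeting for the given name."""
    return f"Hello, {name}!"


print(server.list_tools())
print(server.call_tool("greet", {"name": "Ada"}))
```

With a real SDK the developer typically writes only the equivalent of the `greet` function; registration, schema generation, transport, and error handling come from the library.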

At the time of writing, the official MCP documentation lists SDKs for ten programming languages, including Python, TypeScript, and Go.

For Businesses

Businesses that are planning MCP adoption should start by identifying where AI already interacts with their data or tools – like CRMs, ERPs, databases, or analytics platforms. From there, they can choose among several implementation options:

- Use existing open-source MCP servers. The developer community has already built connectors for many systems, including databases (PostgreSQL, MySQL, MongoDB), APIs (Slack, GitHub, Notion, Google Workspace) and developer tools (Git, VS Code extensions, file systems). Deploying one of these servers is like adding an AI plug into your existing infrastructure – no need to write custom adapters.

- Connect through data integration platforms. Modern data integration tools like Airbyte, Fivetran or Skyvia are beginning to support MCP. With this feature, you don’t have to rebuild your pipelines – simply expose them via MCP to make them accessible to AI agents.

- Build custom MCP servers for proprietary systems. If your business uses custom tools or internal APIs, developers can build MCP servers to wrap those systems.

Conclusion

MCP is an open-source standard that redefines the way AI apps interact with external systems. Its straightforward architecture makes it easier for developers to connect LLMs to live data and tools.

Acting as a universal translator, the protocol ensures that any compliant application can connect to any compliant tool, fostering the creation of interoperable, LLM-friendly ecosystems.

For organizations ready to start with MCP, Skyvia offers MCP endpoints. Available for over 200 supported sources, these endpoints combine the flexibility of no-code integration, the low latency of direct API connections, and the convenience of automated workflows – all without writing a single line of code.

F.A.Q. for MCP Server AI

What is the Model Context Protocol (MCP)?

It is an open standard that defines how AI apps communicate with tools and data sources. It allows any compliant model to access any compliant service safely and efficiently.

How does an MCP server help reduce AI “hallucinations”?

By giving AI direct access to real, verified data via MCP servers, responses are based on facts – not guesses – significantly reducing the risk of hallucinations.

What are some real-world examples of MCP server use cases?

MCP servers let AI connect to CRMs, data warehouses, and cloud services to analyze data, update records, send emails, or automate workflows.

Is MCP difficult to implement for a business?

Not at all. Companies can start with ready-made MCP servers or use SDKs to build custom ones, connecting existing tools without complex coding or system changes.