HubSpot is the lifeblood of your business, a pulsating vein that sustains the daily operations of multiple departments. Sales teams use it to manage leads, contacts, and deals. Marketers depend on it to run email campaigns and track conversions. Support uses it to log tickets and maintain customer history.

Naturally, a platform of that scale simply cannot come without built-in analytics. But while HubSpot’s native reporting works fine for basic CRM needs, it’s not enough for serious, customizable analytics.

That’s why many companies export HubSpot data to a warehouse – to run real analytics using SQL or connect it with other systems. And PostgreSQL is an excellent choice. With native support for large datasets, complex queries, and custom data types, Postgres makes an ideal backend for HubSpot integration.

In this article, we’ll walk you through the benefits, methods, and best practices of integrating HubSpot with PostgreSQL, using Skyvia as one of the options to make it happen.

Table of Contents

- Why Integrate HubSpot with PostgreSQL?

- Methods for Integrating HubSpot with PostgreSQL

- Method 1: Manual Export/Import

- Method 2: Custom Scripting

- Method 3: Third-Party Integration Platforms

- Use Cases: Real-World Examples

- Best Practices for HubSpot to PostgreSQL Integration

- Conclusion

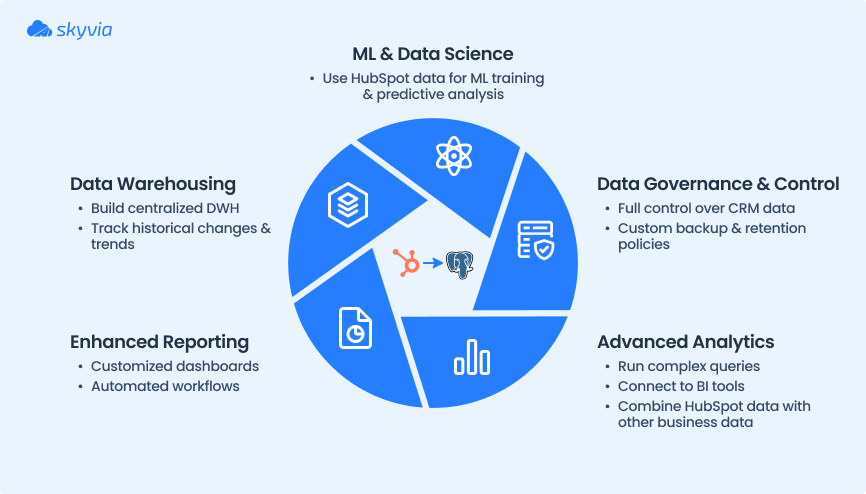

Why Integrate HubSpot with PostgreSQL?

The reason is simple: to turn your CRM data into a rich, queryable asset.

Integrating HubSpot with PostgreSQL helps overcome the key limitations of HubSpot’s built-in analytics, such as:

- It only works on data stored inside HubSpot.

- It’s designed for sales and marketing reporting.

- It lacks support for deep data slicing.

- It can’t be joined with data from other sources.

Apart from that, this integration brings in new value that really makes your data shine. The diagram below outlines the main advantages of this sync.

Methods for Integrating HubSpot with PostgreSQL

There’s no such thing as a “best tool” in absolute terms. What matters is your specific use case, your team’s technical skills, and your budget. That’s the input you should build your integration strategy around.

Below, we’ll outline the most common integration methods – from manual, old-school loads to fully automated ETL pipelines, to guide you in the right direction.

| Characteristics | Manual | Custom scripting | iPaaS |
|---|---|---|---|
| Key differences | Involves exporting data from HubSpot (usually as CSV) and importing it into PostgreSQL with COPY or tools like psql and pgAdmin; simple and non-technical. | Involves calling the HubSpot API to retrieve data; provides maximum flexibility and customization; secure and self-managed. | Offers a no-code approach to connecting data sources through prebuilt connectors; provides a visual UI and scheduling; requires minimal manual effort. |
| Tooling | None; may involve using psql or pgAdmin to import data into Postgres. | CloudQuery; CData JDBC + postgres_fdw; Airbyte | The platform of your choice. |
| Cost | None | Free by default, excluding development time and infrastructure costs. | Varies per tool; most platforms offer usage-based pricing and tiered subscription plans. |
| Entry barrier | Low | High; requires strong development skills. | Low |
| Customizability | Low | High | Medium |
| Automation | Not available | Possible via scripts, cloud functions, or DAGs. | Available |
| Best for | One-time or occasional loads of one or two objects. | Small, well-defined pipelines and straightforward integrations. | Frequent data updates and regular syncs. |

Method 1: Manual Export/Import

Manual integration is the simplest and most low-tech method. It gives you a decent level of control over the whole process, including:

- The records you choose to transfer.

- Which PostgreSQL table the data is imported into.

- How you transform and clean the data.

- How you handle duplicates.

- Field mapping and renaming.

But as you might guess, all of this must be done manually. Despite the many limitations of this method, it definitely has its time and place – especially for one-time exports, small datasets, or early-stage evaluation.

Best for

- One-time data migrations from HubSpot.

- Small subsets of records (e.g., filtered contacts).

- Testing and evaluating the HubSpot data structure before committing to automation.

- Infrequent syncs, such as monthly or quarterly updates.

- Teams with non-technical users.

Step-by-step Guide

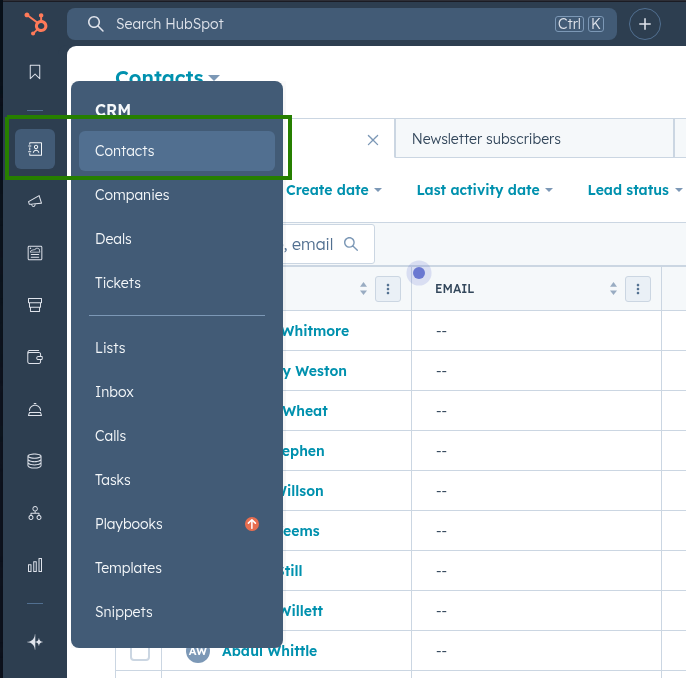

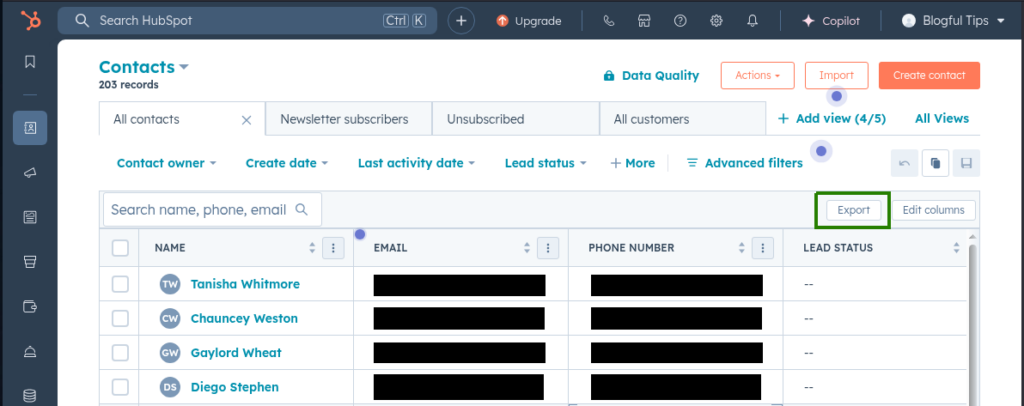

Step 1. Export from HubSpot

- In the CRM, go to the object of your choice (Contacts in this example).

- If needed, apply filters to narrow down the records you export.

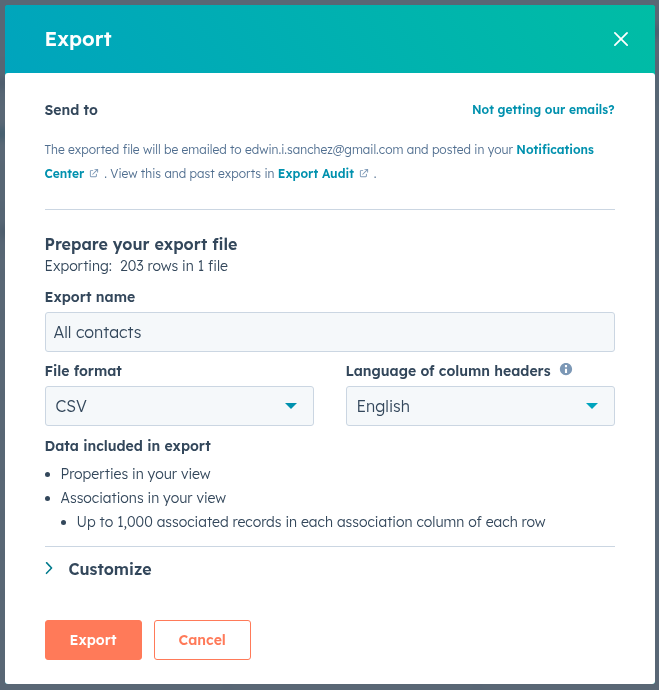

- Click Export.

- Choose CSV as your file format.

- Check your email to download the CSV file.

- Save the file on your computer.

Note: For more information on exporting records to CSV, see HubSpot’s knowledge base article on exporting records.

Step 2. Prepare the Postgres table

Note: Before importing the CSV into Postgres, check the exported records for consistency, date/time formatting, empty columns, or extra rows, and clean or transform them if needed.
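If you would rather script this check than inspect the file by eye, here is a minimal sketch that uses only Python’s standard csv module. It assumes the export has a header row with an "Email" column (adjust key_column to match your actual header). The function name clean_contacts_csv is hypothetical. The function trims whitespace, drops empty rows, and keeps the first row for each email address, comparing addresses case-insensitively.

```python
import csv
import io


def clean_contacts_csv(src: io.TextIOBase, dst: io.TextIOBase, key_column: str = "Email") -> int:
    """Trim cells, drop blank rows, and de-duplicate on key_column.

    Returns the number of data rows written (header excluded).
    """
    reader = csv.DictReader(src)
    # Write Unix-style line endings
    writer = csv.DictWriter(dst, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    seen = set()
    written = 0
    for row in reader:
        # Strip stray whitespace; ignore overflow cells that have no header (key None)
        row = {k: (v or "").strip() for k, v in row.items() if k is not None}
        if not any(row.values()):
            continue  # skip empty or separator-only rows
        key = row.get(key_column, "").lower()
        if key and key in seen:
            continue  # keep only the first row per key (case-insensitive)
        if key:
            seen.add(key)
        writer.writerow(row)
        written += 1
    return written
```

Open both files with newline="", as the csv module recommends, and import the cleaned file instead of the raw export. Keeping the first occurrence is an arbitrary choice. If later rows in your export are more recent, keep the last one instead.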

Create a new table or make sure the existing one matches the structure of your CSV:

```sql
CREATE TABLE contacts_manual_import (
    name TEXT,
    email TEXT,
    phone_number TEXT,
    lead_status TEXT
);
```

Step 3. Import using COPY

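Once the CSV is ready and the table is created, run:

```sql
-- COPY reads the file on the database server and needs superuser or pg_read_server_files rights;
-- the CSV columns must be in the same order as the column list.
COPY contacts_manual_import(name, email, phone_number, lead_status)
FROM '/path/to/contacts.csv'
DELIMITER ','
CSV HEADER;
-- From a client machine, psql's \copy takes the same arguments (on one line) but reads a local file.
```

Step 4. Deduplicate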

Check for duplicates by looking for repeated email addresses:

```sql
SELECT email, COUNT(*)
FROM contacts_manual_import
GROUP BY email
HAVING COUNT(*) > 1;
```

Pros

- Simple: no coding, scripting, or setup required.

- Secure and user-controlled.

- Good for audit trails as it provides a literal copy of the export file.

Cons

- Requires significant manual setup and repetition.

- Does not scale well for growing or frequent data needs.

- Lacks support for incremental updates (no delta or updatedAt tracking).

- Cannot be automated.

- Prone to human error during export, transformation, or import.

- No direct connection between HubSpot and PostgreSQL.

- Export formats are limited to spreadsheet-compatible types: .CSV, .XLS, .XLSX.

Method 2: Custom Scripting

This method involves building a bespoke data pipeline. A dedicated connector (either self-written or ready-made) calls the HubSpot API, pulls data in JSON format, parses it, and loads it into the appropriate PostgreSQL tables and columns.

Often referred to as custom scripting, this approach earns its name for a reason: of all the integration methods, it offers the most granular control over nearly every aspect of your HubSpot-to-Postgres flow:

- What data to pull: specific objects or fields.

- How data maps from HubSpot JSON to Postgres tables and columns.

- What transformations occur before writing to the database.

- How syncing works: on a schedule or in real-time.

- How errors, rate limits, and retries are handled.

Do you need to write code? That depends on the kind of connector you choose. Options range from plug-and-play platforms to DIY scripts. As you might expect, the level of customization grows with the amount of code you’re willing to write.

Best for

- Organizations with engineering resources to build pipelines.

- Teams that need strong control over data relationships, such as custom objects or advanced transformation.

- Organizations that prefer self-hosted and secure infrastructure.

Step-by-step Guide

Step 1. Define your schema

Technically, this isn’t a step but a set of preliminary actions that set your integration up for success. At this stage, you’ll plan how data from HubSpot will be structured and stored in your PostgreSQL database.

- Plan the Postgres tables you’ll need or already have. What columns should each table have? How do those columns match the fields coming from HubSpot? Which data types should you use?

- Decide what HubSpot objects you need to integrate and list their API endpoints.

- Define how each field in the JSON response maps to your database schema. Consider how to handle custom fields if you have them or plan to add them.

- Test the initial schema by loading a small dataset before proceeding to a full-scale integration.
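One way to pin down these decisions is to keep the field mapping in code. The sketch below assumes a hypothetical hubspot_contacts table and a handful of standard contact properties (email, firstname, lastname, phone, hs_lead_status). Swap in the objects and fields you actually need. It also adds hubspot_id, updated_at, and is_active columns, which are handy for upserts and soft deletes later on. The names CONTACT_COLUMNS, create_table_sql, and map_record are illustrative.

```python
from typing import Dict, Tuple

# Hypothetical mapping: HubSpot contact property -> (Postgres column, Postgres type).
# HubSpot's v3 API returns property values as strings, so TEXT is the safe default;
# cast to numeric/date types where you know the format.
CONTACT_COLUMNS: Dict[str, Tuple[str, str]] = {
    "email": ("email", "TEXT"),
    "firstname": ("first_name", "TEXT"),
    "lastname": ("last_name", "TEXT"),
    "phone": ("phone_number", "TEXT"),
    "hs_lead_status": ("lead_status", "TEXT"),
}


def create_table_sql(table: str, mapping: Dict[str, Tuple[str, str]]) -> str:
    """Generate a CREATE TABLE statement with bookkeeping columns plus mapped fields."""
    cols = [
        "hubspot_id TEXT PRIMARY KEY",              # HubSpot record ID
        "updated_at TIMESTAMPTZ NOT NULL",          # from the record's updatedAt
        "is_active BOOLEAN NOT NULL DEFAULT TRUE",  # for soft deletes
    ]
    cols += [f"{column} {pg_type}" for column, pg_type in mapping.values()]
    return f"CREATE TABLE IF NOT EXISTS {table} (\n    " + ",\n    ".join(cols) + "\n);"


def map_record(record: dict, mapping: Dict[str, Tuple[str, str]]) -> dict:
    """Flatten one HubSpot v3 object into a row dict keyed by Postgres column."""
    props = record.get("properties", {})
    row = {"hubspot_id": record["id"], "updated_at": record["updatedAt"]}
    for prop, (column, _) in mapping.items():
        row[column] = props.get(prop)  # missing or unset properties become NULL
    return row
```

Running create_table_sql against a test database and pushing a few mapped records through it is a quick way to validate the schema before the full load.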

Step 2. Authentication

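At this stage, you establish a secure, authenticated connection between your connector and the HubSpot API. HubSpot supports several authentication methods. For an integration that reads from a single HubSpot account, a private app access token is the recommended option. OAuth is intended for apps installed across multiple accounts.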
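With a private app token, each request carries the token in a Bearer Authorization header. Here is a minimal standard-library sketch. It assumes the token is stored in an environment variable; the name HUBSPOT_PRIVATE_APP_TOKEN is our own choice.

```python
import os
import urllib.request

HUBSPOT_BASE_URL = "https://api.hubapi.com"


def load_token(env_var: str = "HUBSPOT_PRIVATE_APP_TOKEN") -> str:
    """Read the private app token from the environment (never hard-code it)."""
    return os.environ[env_var]


def build_request(path: str, token: str) -> urllib.request.Request:
    """Build an authenticated GET request for a HubSpot API path."""
    return urllib.request.Request(
        HUBSPOT_BASE_URL + path,
        headers={
            # Private app tokens are sent as Bearer tokens
            "Authorization": f"Bearer {token}",
            "Accept": "application/json",
        },
        method="GET",
    )
```

Pass the request to urllib.request.urlopen, or use the HTTP client of your choice. Also make sure the private app has read scopes for the objects you plan to pull (for example, crm.objects.contacts.read).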

Step 3. Fetch data

This stage involves retrieving actual data from HubSpot with GET requests to the API endpoints of the desired objects (e.g., Contacts, Deals, Companies).

Because of HubSpot’s API rate limits, include the appropriate parameters (such as limit, properties, or after for pagination) to get the most out of each API call.
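HubSpot’s v3 list endpoints return at most 100 records per page. While more pages remain, the response includes a paging.next.after cursor. The generator below follows that cursor. It takes a get_json callable (a hypothetical helper that performs an authenticated GET and returns parsed JSON), so you can test the paging logic without network access. A simple urllib-based get_json is included as one possible implementation.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator, Sequence

HUBSPOT_BASE_URL = "https://api.hubapi.com"


def make_get_json(token: str) -> Callable[[str], dict]:
    """Return a helper that GETs a HubSpot API path and parses the JSON body."""
    def get_json(path: str) -> dict:
        req = urllib.request.Request(
            HUBSPOT_BASE_URL + path,
            headers={"Authorization": f"Bearer {token}"},
        )
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    return get_json


def iter_objects(
    object_type: str,
    properties: Sequence[str],
    get_json: Callable[[str], dict],
    limit: int = 100,
) -> Iterator[dict]:
    """Yield every record of object_type, following the paging.next.after cursor."""
    after = None
    while True:
        params = {"limit": limit, "properties": ",".join(properties)}
        if after:
            params["after"] = after
        page = get_json(f"/crm/v3/objects/{object_type}?" + urllib.parse.urlencode(params))
        yield from page.get("results", [])
        # The cursor is absent on the last page
        after = page.get("paging", {}).get("next", {}).get("after")
        if not after:
            return
```

For example, iter_objects("contacts", ["email", "firstname"], make_get_json(token)) streams contacts page by page. Requesting only the properties you need keeps responses small.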

Step 4. Load data into Postgres

At this stage, parsed data from HubSpot is structured and written into PostgreSQL tables, either with batch inserts or upserts for small volumes, or bulk loading via COPY for high-volume datasets.

You can also add code for any required cleaning and transformation before loading. Make sure to use HubSpot’s updatedAt field to avoid reloading unchanged records.
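For small and medium batches, an INSERT ... ON CONFLICT upsert keyed on the HubSpot record ID works well. Adding a WHERE clause on updated_at stops an older copy of a record from overwriting a newer one. The helper below only builds the SQL text and the parameter tuples. It assumes a table with hubspot_id as the primary key and an updated_at column. It also assumes you execute the statement with a DB-API driver that uses %s placeholders, such as psycopg2 (for example, cursor.executemany(sql, params)). The function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def build_upsert(table: str, columns: Sequence[str], key: str = "hubspot_id") -> str:
    """Build an INSERT ... ON CONFLICT statement that only overwrites older rows.

    Table and column names are interpolated directly, so pass trusted identifiers only.
    Assumes `columns` includes `key` and `updated_at`.
    """
    col_list = ", ".join(columns)
    placeholders = ", ".join(["%s"] * len(columns))
    updates = ", ".join(f"{c} = EXCLUDED.{c}" for c in columns if c != key)
    return (
        f"INSERT INTO {table} ({col_list}) VALUES ({placeholders}) "
        f"ON CONFLICT ({key}) DO UPDATE SET {updates} "
        # Skip the update when the stored row is already as new or newer
        f"WHERE EXCLUDED.updated_at > {table}.updated_at"
    )


def rows_to_params(rows: List[Dict[str, object]], columns: Sequence[str]) -> List[Tuple[object, ...]]:
    """Order each row's values to match the column list (missing keys become None/NULL)."""
    return [tuple(row.get(c) for c in columns) for row in rows]
```

For large volumes, COPY into a staging table followed by a single INSERT ... SELECT ... ON CONFLICT is usually faster than row-by-row upserts.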

Step 5. Handle updates and deletions

This step keeps your PostgreSQL database in sync with what’s currently in HubSpot, rather than holding a static snapshot. Use the updatedAt field to detect updated records and apply the changes accordingly.

Note that HubSpot doesn’t physically delete records by default – it archives them. To work with these records, include archived=true in your API requests and apply soft deletes locally (e.g., marking records as inactive in your PostgreSQL database).
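Here is a sketch of both ideas. It assumes each record is a dict shaped like HubSpot’s v3 object responses (with id, updatedAt, and archived keys), and that you store the timestamp of the last successful sync as a watermark. Note that this filters after fetching. To avoid downloading unchanged records at all, HubSpot’s CRM search endpoint can filter on the last-modified property on the server side. The soft-delete statement assumes a hypothetical hubspot_contacts table with hubspot_id and is_active columns.

```python
from datetime import datetime, timezone
from typing import Iterable, List


def parse_ts(value: str) -> datetime:
    """Parse HubSpot ISO 8601 timestamps like '2024-05-01T10:00:00.123Z' as UTC."""
    # fromisoformat only accepts a trailing "Z" from Python 3.11 on
    dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return dt if dt.tzinfo else dt.replace(tzinfo=timezone.utc)


def changed_since(records: Iterable[dict], watermark: datetime) -> List[dict]:
    """Keep only records modified after the last successful sync."""
    return [r for r in records if parse_ts(r["updatedAt"]) > watermark]


def next_watermark(records: Iterable[dict], current: datetime) -> datetime:
    """Advance the watermark to the newest updatedAt seen (or keep the current one)."""
    return max([current] + [parse_ts(r["updatedAt"]) for r in records])


def archived_ids(records: Iterable[dict]) -> List[str]:
    """IDs of records returned with archived=true, to be soft-deleted locally."""
    return [r["id"] for r in records if r.get("archived")]


# Soft delete: flag rows instead of removing them (%s receives a Python list -> Postgres array).
SOFT_DELETE_SQL = "UPDATE hubspot_contacts SET is_active = FALSE WHERE hubspot_id = ANY(%s)"
```

Persist the new watermark only after the load commits. Otherwise, a failed run could skip records on the next attempt.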

Step 6. Error-handling and rate limiting

There are many reasons why your HubSpot integration may fail, including API errors, network issues, schema changes, and exceeded rate limits.

Baking error-handling logic into your code makes your integration resilient when it runs into any of these issues. This logic defines rules for retrying failed requests, respecting rate limits, and validating data before it’s inserted into PostgreSQL.
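A common pattern is to retry transient failures with exponential backoff and jitter. HubSpot responds with HTTP 429 when you exceed a rate limit. Treating 429 and 5xx responses as retryable, and the specific delays below, are our own assumptions rather than HubSpot guidance. The sketch wraps any zero-argument call that raises urllib errors. with_retries is a hypothetical helper name.

```python
import random
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: treat rate limiting (429) and transient server errors as retryable.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying retryable failures with exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except urllib.error.HTTPError as exc:  # must come before URLError (its parent class)
            if exc.code not in RETRYABLE_STATUS or attempt == max_attempts:
                raise
        except urllib.error.URLError:  # network-level failure (DNS, refused connection, ...)
            if attempt == max_attempts:
                raise
        delay = min(max_delay, base_delay * 2 ** (attempt - 1))
        sleep(delay + random.uniform(0, delay / 2))  # 1s, 2s, 4s, ... plus up to 50% jitter
    raise RuntimeError("unreachable: the loop always returns or raises")
```

Non-retryable errors such as 400 or 401 are re-raised immediately. Those usually point to a bug or a bad token, and retrying will not fix them. Validation of records before insertion belongs in a separate step.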

Step 7. Automate and monitor

At this step, your one-time script becomes a reliable, production-ready system. This involves:

- Automating sync schedules with cron jobs, cloud functions, or DAGs, depending on the connector and environment.

- Logging everything that happens during each run, including API calls, errors, and record counts.

- Setting up alerts via email, Slack, or PagerDuty to catch failures or anomalies.

- Integrating observability tools such as New Relic, Datadog, or Sentry for performance monitoring and error tracking.
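Whatever scheduler you use, it helps if each run emits one machine-readable summary and exits with a non-zero code on failure. That way, cron, a cloud function runtime, or an orchestrator can raise the alert. Here is a minimal sketch with Python’s logging module. The step names, and the convention that each step returns a record count, are hypothetical.

```python
import json
import logging
import time
from typing import Callable, Dict

log = logging.getLogger("hubspot_sync")


def run_sync(steps: Dict[str, Callable[[], int]]) -> int:
    """Run each named step, log one JSON summary line, and return an exit code (0 = success)."""
    summary = {"records": {}, "errors": {}}
    started = time.monotonic()
    for name, step in steps.items():
        try:
            summary["records"][name] = step()  # each step returns its record count
        except Exception as exc:  # keep going so other objects still sync
            log.exception("step %s failed", name)
            summary["errors"][name] = repr(exc)
    summary["duration_s"] = round(time.monotonic() - started, 3)
    log.info("sync summary %s", json.dumps(summary))
    return 1 if summary["errors"] else 0
```

In the entry-point script, you might call logging.basicConfig(level=logging.INFO) and end with sys.exit(run_sync({...})). You could then schedule it hourly with a crontab line such as `0 * * * * /usr/bin/python3 /opt/hubspot_sync/run.py` (path hypothetical). The JSON summary line is easy to parse in Datadog, New Relic, or similar tools.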

Step 8. Maintain and adapt

The HubSpot platform keeps evolving, regularly adding new features and fields. Breaking changes are rare and typically announced well in advance, but it still pays to take precautions and spare yourself unpleasant surprises.

- Periodically check object metadata and compare it against your existing schema.

- Update your mappings to reflect new or changed fields.

- Build in tolerance for fields that may be missing, null, newly added, or unexpected in the JSON returned from HubSpot.

- Use schema introspection endpoints like /crm/v3/properties/{object} to programmatically detect schema changes.

- Follow the HubSpot developer changelog to stay informed about upcoming changes.
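The properties endpoint returns a results list in which each property has a name. Comparing those names with the properties your mapping expects is a cheap way to spot drift. The sketch assumes you have already fetched GET /crm/v3/properties/contacts as parsed JSON; detect_drift is a hypothetical name.

```python
from typing import Dict, Iterable, Set


def detect_drift(properties_response: dict, mapped_properties: Iterable[str]) -> Dict[str, Set[str]]:
    """Compare live HubSpot property names with the ones your mapping expects."""
    live = {p["name"] for p in properties_response.get("results", [])}
    mapped = set(mapped_properties)
    return {
        "new_in_hubspot": live - mapped,      # candidates to add to your mapping
        "missing_in_hubspot": mapped - live,  # mapped fields that were deleted or renamed
    }
```

In practice, HubSpot objects carry many default properties you will never map. You may prefer to compare against the property list saved from the previous run and alert only on the difference. Anything in missing_in_hubspot deserves immediate attention, because those columns will silently fill with NULLs.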

Pros

- Full control over schema & transform logic.

- The ability to get very granular data.

- No vendor lock-in.

- Cost-effective for simple integrations.

- Secure, self-hosted.

Cons

- High upfront and ongoing development and maintenance effort.

- You must handle rate limits, pagination, retries, and schema drift yourself.

- Performance optimization (bulk loading, batching) is required.

- Monitoring, alerting, and failover must be implemented.

Method 3: Third-Party Integration Platforms

This method involves connecting HubSpot and PostgreSQL (or other destinations) through integration platforms like Skyvia, Fivetran, Zapier, or Hevo. These tools connect data sources directly through prebuilt connectors. They offer a visual UI and automated pipelines for data extraction, transformation, and loading. As a result, they abstract away most of the technical complexity, so you can focus on results. A big bonus is the ability to schedule syncs, monitor jobs, and define error-handling logic without writing any code.

Best for

- Regular syncing of multiple HubSpot objects or large datasets.

- Teams that prioritize fast setup and quick deployment.

- Users who want a fully managed, hands-off solution.

Step-by-step Guide

In this example, we’ll use Skyvia to integrate HubSpot and PostgreSQL. To do so, you’ll need to follow three simple steps:

- Create a connection to HubSpot.

- Create a connection to PostgreSQL.

- Define the integration flow.

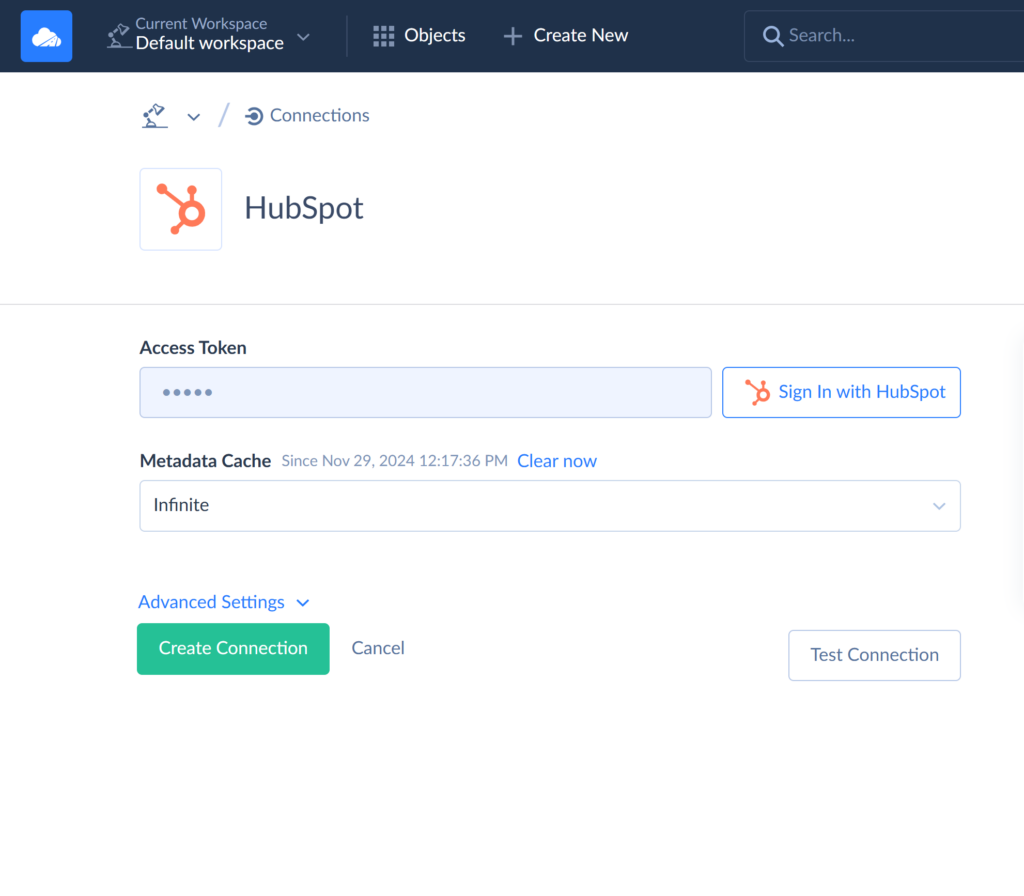

Step 1. Create a connection to HubSpot

- Sign in to Skyvia or, if you don’t have an account yet, create one for free.

- Click + Create New and choose Connection.

- Choose HubSpot from the list of connectors.

- Sign in with HubSpot and select the account to use.

Note: The connection is enabled via OAuth 2.0. By logging in, you authorize Skyvia to access your account and receive an access token to interact with the HubSpot API.

- Click Create Connection.

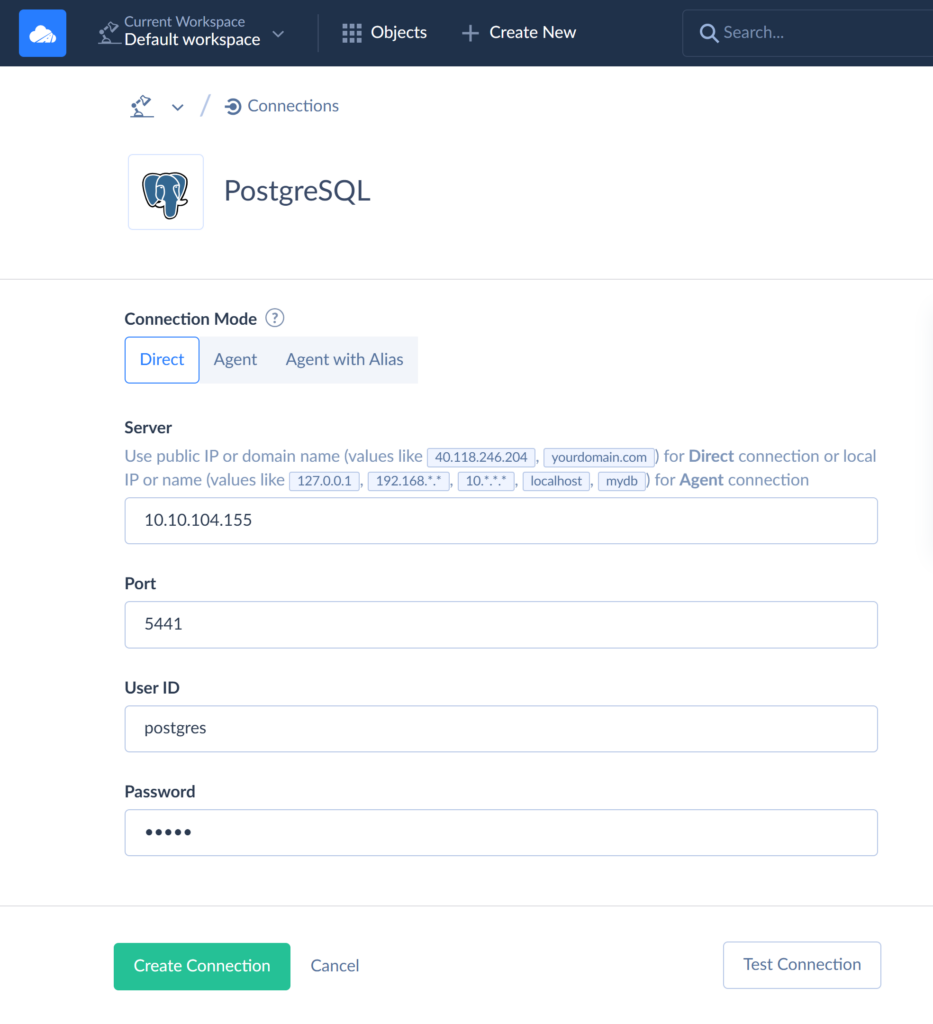

Step 2. Create a connection to PostgreSQL

Skyvia allows for TCP/IP, SSL and SSH connections to PostgreSQL. You can choose the required protocol in Advanced Settings. For this example, we’ll create a TCP connection.

- In your Skyvia account, click +Create New, select Connection, and choose PostgreSQL.

Note: Establishing a connection to a local PostgreSQL server requires it to be accessible from the internet. If it’s not, use a Skyvia agent connection instead.

- Provide the required connection parameters:

  - Server – name or IP address of the PostgreSQL Server host to connect to.

  - Port – PostgreSQL server connection port.

  - User Id – your user login.

  - Password – your user password.

  - Database – a PostgreSQL database to connect to.

    Note: Once you specify the server, Skyvia will automatically load the list of databases available on that host.

  - Schema – name of the PostgreSQL schema you want to connect to.

- Click Create Connection.

Step 3. Define the integration flow

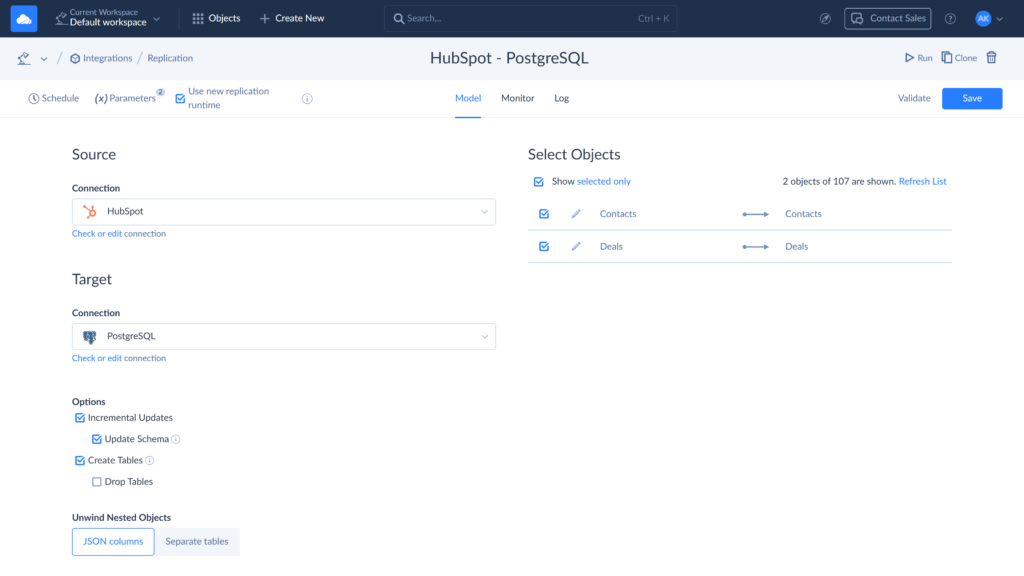

Skyvia offers several ways to transfer data between HubSpot and PostgreSQL. In this example, we’ll use Replication to create a copy of HubSpot’s Contacts and Deals objects in PostgreSQL.

- Click +Create New and choose Replication.

- Choose the HubSpot connection as Source and the PostgreSQL connection as Target.

Note: The first replication task is preconfigured with the Incremental Updates, Update Schema, and Create Tables options enabled. Clear the corresponding checkboxes to disable any of them.

- Choose the objects to replicate (Contacts and Deals in this example). If needed, apply filters to narrow down the list.

Note: By default, Skyvia replicates all fields of the selected objects. You can customize this by clicking Edit next to an object and excluding fields from replication.

- Save the task and run the integration.

- Use Schedule to configure automatic runs.

- You can check the integration results on the Monitor and Log tabs.

Pros

- Fast and simple no-code setup.

- The ability to automate and schedule data syncs.

- Easy start with prebuilt connectors.

- Visual tools for field mapping and transformation.

- Many platforms provide monitoring and logging out of the box.

Cons

- Can be expensive for frequent, data-heavy flows.

- Processing large datasets can slow down integration.

- Less room for customization when business logic is complex.

- Higher risk of vendor lock-in.

- Limited control over retry/backoff logic for HubSpot APIs.

Use Cases: Real-World Examples

Integrating HubSpot with PostgreSQL is a powerful step toward deeper insights and smoother data exchange across your organization. We’ve already covered the key advantages – now let’s look at how companies are applying this setup in real-world scenarios.

Building a 360-degree Customer View

Customer data that sits in HubSpot is priceless. But its value increases even more when combined with input from product usage, billing, and support systems.

Accumulating all this information in PostgreSQL allows you to create a unified customer profile – a single source of truth for marketing touchpoints, support history, account status, and product behavior.

Use case: A SaaS company joins HubSpot lifecycle data with in-app usage logs and Stripe billing data to power personalized onboarding flows.

Advanced Sales Pipeline Analysis

HubSpot does offer pipeline reporting, but it often lacks depth. PostgreSQL enables advanced breakdowns of deal velocity, win/loss trends, rep performance, and revenue forecasting – using custom SQL queries, cohort analysis, or time-based comparisons.

Use case: A B2B sales team tracks average deal size by industry segment and stage duration over time to optimize their sales process.

Powering Marketing Attribution Models

HubSpot does a solid job tracking campaign activity, but it falls short when it comes to complex attribution modeling that requires custom logic and blended data.

With everything aggregated in PostgreSQL, marketers can connect web and app analytics tools to HubSpot data and start building attribution models that are flexible, iterative, and tailored to their real funnel behavior.

Use case: A marketing team correlates HubSpot campaign data with Google Analytics and product signups to attribute conversions more accurately.

Best Practices for HubSpot to PostgreSQL Integration

Understand your data

HubSpot is a data treasure trove. When planning your integration, take time to identify the objects and specific fields that actually matter to your business. Pulling unnecessary data will only strain your API limits and clutter your database.

Define your sync frequency

The frequency of syncs determines how fresh your data is in the database. How often do you really need to integrate – real-time, hourly, daily? Align this with your business use case: a weekly performance report can rely on daily updates, while sales dashboards or task triggers may require real-time syncs.

Remember that this choice also affects your API load, system complexity, and cost. Choose the right cadence based on how your business uses the data – not just how often it changes.

Plan for data volume & scalability

Expecting large data volumes? You should. HubSpot enforces strict API rate limits (e.g., 100 calls per 10 seconds on some tiers), and processing large datasets can slow down everything else in your pipeline.

PostgreSQL can handle very large data volumes, but the real question is whether your integration method scales well enough to keep up.
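To put numbers on this: HubSpot’s v3 list endpoints return at most 100 records per page. A full pull of 50,000 contacts therefore takes at least 500 requests. At 100 requests per 10 seconds, that means at least 50 seconds of API time before any retries. A pipeline should pace its calls rather than rely on hitting 429 errors. In a custom script, a client-side sliding-window limiter is one way to do that. This sketch defaults to the 100-calls-per-10-seconds figure above; replace it with your own tier’s limit. The class name is hypothetical.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Allow at most max_calls within any window_s-second window (single-threaded use)."""

    def __init__(self, max_calls: int = 100, window_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock
        self.sleep = sleep
        self.calls: Deque[float] = deque()  # timestamps of calls inside the window

    def _evict(self, now: float) -> None:
        # Drop timestamps that have left the window
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()

    def acquire(self) -> None:
        """Block until another call is allowed, then record it."""
        now = self.clock()
        self._evict(now)
        if len(self.calls) >= self.max_calls:
            # Wait until the oldest call in the window expires
            self.sleep(self.window_s - (now - self.calls[0]))
            now = self.clock()
            self._evict(now)
        self.calls.append(now)
```

Call limiter.acquire() before every HubSpot request. Keep a retry-on-429 path as well, since other integrations on the same account share the same limit.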

Monitor and maintain

Your visibility depends on the method you use. iPaaS solutions offer native monitoring, while other methods usually call for third-party monitoring tools. Set up notifications and alerts to stay informed about integration status and react to failures promptly. Make it a habit to regularly review your mappings, schemas, and fields – things do change.

Ensure data security and compliance

Working with customer data almost always means handling sensitive information. A leak could be disastrous to your business. Protect the data:

- In transit: by using encrypted connections (HTTPS, SSL for PostgreSQL).

- At rest: by storing data in secure, access-controlled environments.
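For PostgreSQL, libpq’s sslmode parameter controls encryption in transit. Setting it to require only encrypts the connection, while verify-full also checks the server certificate and host name. Below is a small sketch that builds such a connection string; the host, database, and user names are placeholders, and the helper names are hypothetical.

```python
from typing import Optional


def _quote(value: str) -> str:
    """Quote a libpq conninfo value if it is empty or contains spaces, quotes, or backslashes."""
    if value == "" or any(ch in value for ch in " '\\"):
        return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"
    return value


def conninfo(**params: str) -> str:
    """Join keyword/value pairs into a libpq connection string."""
    return " ".join(f"{key}={_quote(str(val))}" for key, val in params.items())


def secure_dsn(host: str, dbname: str, user: str, port: int = 5432,
               sslrootcert: Optional[str] = None) -> str:
    """Build a DSN that requires TLS and verifies the server certificate and host name."""
    params = {"host": host, "port": str(port), "dbname": dbname, "user": user,
              "sslmode": "verify-full"}
    if sslrootcert:
        params["sslrootcert"] = sslrootcert  # CA certificate file used for verification
    return conninfo(**params)
```

Drivers such as psycopg2 accept this string directly (psycopg2.connect(dsn)). Keep the password out of the string and let libpq read it from ~/.pgpass or the PGPASSWORD environment variable.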

Conclusion

HubSpot – Postgres integration gives you the power to make your data work, instead of letting it sit idle in the depths of your CRM. Whichever method you choose – manual export, custom scripting, or a dedicated platform – the results are well worth the effort.

For those who want to make the process as effortless as possible, we suggest using Skyvia. In addition to all the benefits mentioned above, Skyvia’s dedicated HubSpot connector supports custom objects and offers a batch API option for the most efficient data loads in and out of HubSpot.

Sounds interesting? Start for free now!

F.A.Q. for HubSpot with PostgreSQL Integration

What HubSpot data can I sync to PostgreSQL?

You can sync standard and custom objects, including Contacts, Deals, Companies, Tickets, and more – with full control over fields.

Can I transform data during the integration process with Skyvia?

Yes. Skyvia allows mapping, expression-based transformations, and data filtering during integration.

What if I need to sync data from PostgreSQL back to HubSpot?

Skyvia supports two-way integration – you can load data into HubSpot using the Import or Data Flow tools.