Drowning in CSVs, SaaS exports, clickstream logs, “final_final_v7_really.xlsx,” and everything in between? That is often referred to as “big data,” but “big chaos” would be a more fitting term. You’re not alone in stitching datasets together with duct-taped scripts and midnight cron jobs.

Data orchestration can overcome these challenges by arranging what runs, where, and when, so you can forget about solutions that hang on by a thread and a team’s crossed fingers.

In this beginner-friendly 2026 guide, you’ll learn:

- What is data orchestration?

- Why does it matter today, and why will it matter tomorrow?

- The benefits it brings.

- And how to get started? *

* We promise to deliver all of these in plain English.

Grab a coffee and let’s turn your data from a garage band into a well-rehearsed orchestra – on tempo, on time, and on budget.

Table of Contents

- What is Data Orchestration?

- Why is Data Orchestration So Important Today?

- The 3 Key Steps of a Data Orchestration Workflow

- Top 5 Business Benefits of Effective Data Orchestration

- Data Orchestration vs. ETL vs. Data Integration: What’s the Difference?

- Popular Data Orchestration Tools and Platforms

- Conclusion

What is Data Orchestration?

Data orchestration is the automated coordination of data movement, transformation, integration, and delivery across systems, ensuring the correct data arrives in the proper format, on time, with quality and governance.

In practice, it means less hastily concocted solutions and more rhythm by:

- Connecting databases, SaaS apps, and lakes/streams, and routing records to the right targets.

- Sequencing steps and dependencies; kicking off transforms in the proper order.

- Validating/enriching data to keep schemas and quality consistent.

- Baking in access controls, lineage, and audit trails by default.

- Monitoring runs in real-time.

- Alerting you when something goes wrong.
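
To make "sequencing steps and dependencies" concrete, here is a minimal sketch in plain Python. The three tasks (extract, validate, load) and their data are hypothetical, and the standard library's `graphlib` stands in for the scheduler. Real orchestrators layer triggers, retries, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run every task once, only after all of its upstream tasks have finished."""
    # static_order() yields upstream tasks before the tasks that depend on them.
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        # Each task receives the results of everything that ran before it.
        results[name] = tasks[name](results)
    return order, results


def is_number(text):
    return text.replace(".", "", 1).isdigit()


# Hypothetical tasks, for illustration only.
tasks = {
    "extract": lambda done: [{"id": 1, "amount": "10.5"}, {"id": 2, "amount": "n/a"}],
    "validate": lambda done: [r for r in done["extract"] if is_number(r["amount"])],
    "load": lambda done: len(done["validate"]),  # pretend to load, return row count
}

# Maps each task to the set of tasks that must finish first.
dependencies = {"validate": {"extract"}, "load": {"validate"}}

order, results = run_pipeline(tasks, dependencies)
print(order, results["load"])
```

The dependency map is the important part: you declare what depends on what, and the run order falls out of it instead of being hard-coded in a script.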

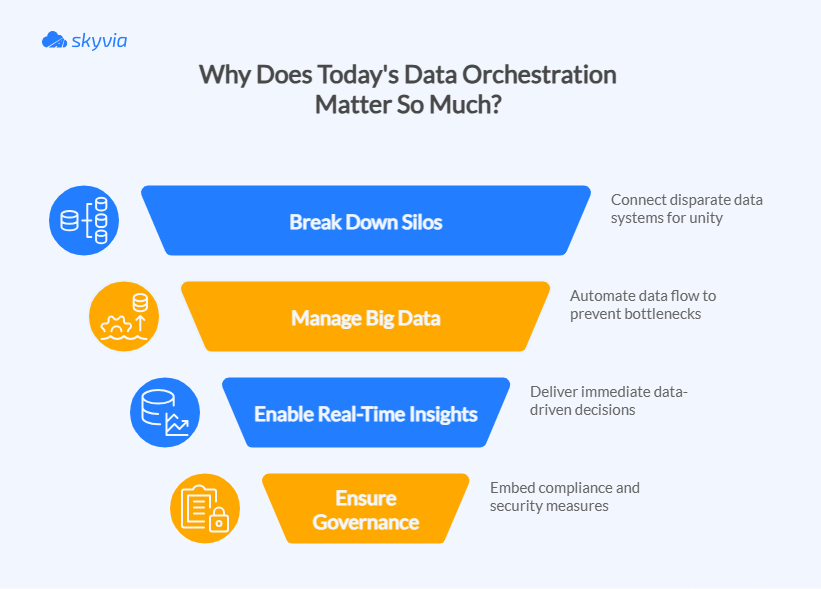

Why is Data Orchestration So Important Today?

Data used to trickle; now it torrents. Without orchestration, you’re waiting for a tsunami to hit your systems sooner or later (spoiler: sooner, if your project is ambitious). With it, your pipelines move in step.

The Problem of Data Silos

A sales team lives in a CRM, finance in an ERP, and product in event streams, each system guarding its own “truth,” rarely understanding what is going on in the others. That balkanization breaks analysis and breeds duplicate logic.

Orchestration connects apps, data lakes, and warehouses, coordinates handoffs, and standardizes formats. Goodbye swivel-chair integration; hello shared reality.

The Rise of Big Data and Complex Data Pipelines

Big data is defined by three Vs: volume, variety, and velocity. A single dashboard might depend on APIs, Kafka topics, object storage, and a warehouse, each with its own timing and quirks.

That brings a risk that someone might drop a plate. Orchestration automates schedules, dependencies, retries, and resource use, keeping massive, mixed datasets flowing without bottlenecks.

The Need for Real-Time Insights

Fraud checks, stockouts, surge traffic, and “you might also like” moments can’t wait for tomorrow’s batch. Orchestration keeps streams and micro-batches in lockstep, kicking off transforms the moment data lands and pushing results to apps and dashboards fast. Decisions stay live, not laggy; you steer with headlights, not a rear-view mirror.

Data Governance and Compliance

Everyone demands something from you: auditors want lineage, security wants controls, and customers want privacy, rightly so.

With one move – orchestration – you can satisfy them. It embeds governance into the flow: quality checks at every stage, policy-aware transformations, role-based access, masking of sensitive fields, retention rules, and an auditable trail of user activity.

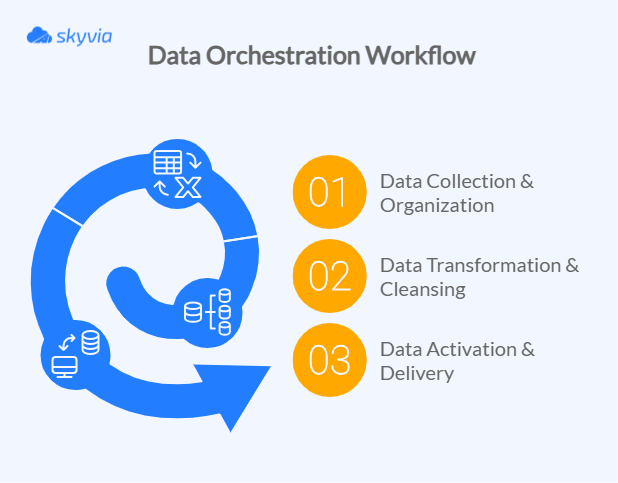

The 3 Key Steps of a Data Orchestration Workflow

Unlike many concepts in the data world, an orchestration strategy is relatively straightforward – comprising only three steps. However, fewer steps also mean less margin for error in each one.

Here’s what you will need to figure out, blueprint, and handle:

Step 1: Data Collection & Organization

To have something to orchestrate, we need to extract data from APIs, databases, cloud applications, object stores, streams, and wherever it resides, and centralize it first.

That is one task, not many, because orchestration tools hook into those sources and pull the data, leaving your hands free. They handle auth, scheduling, pagination, and retries so feeds don’t flake out the moment you turn away.

Then comes cataloging and tagging what arrives, to prevent redundancies and silos from the start:

- Standardize formats (CSV/JSON/Parquet).

- Align keys and time zones.

- Unify schemas so Sales, Finance, and Product aren’t speaking three dialects of “customer.”

Done well, this step kills silos and lays a clean, scalable foundation for everything downstream.

What “good” looks like:

- Reliable connectors with clear SLAs (a Service-Level Agreement is a contract that spells out what service quality a provider promises to deliver and what happens if they don’t).

- Central catalog/metadata, owners, and data contracts.

- Consistent naming, typing, partitioning, and retention rules.
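
As a rough illustration of "align keys and time zones" and "unify schemas," the sketch below maps two differently shaped feeds onto one customer schema with UTC timestamps. The field names (`CustomerID`, `SignupDate`, `cust_id`, `created`) are invented for the example and do not come from any real CRM or billing system.

```python
from datetime import datetime, timezone


def from_crm(row):
    # Hypothetical CRM export: ISO 8601 timestamp with a UTC offset.
    return {
        "customer_id": str(row["CustomerID"]).strip(),
        "signed_up_at": datetime.fromisoformat(row["SignupDate"]).astimezone(timezone.utc),
        "source": "crm",
    }


def from_billing(row):
    # Hypothetical billing export: Unix epoch seconds.
    return {
        "customer_id": str(row["cust_id"]).strip(),
        "signed_up_at": datetime.fromtimestamp(row["created"], tz=timezone.utc),
        "source": "billing",
    }


crm_rows = [{"CustomerID": " 42 ", "SignupDate": "2026-01-15T09:30:00+02:00"}]
billing_rows = [{"cust_id": 42, "created": 0}]

# One schema, one key format, one time zone, whatever the source looked like.
unified = [from_crm(r) for r in crm_rows] + [from_billing(r) for r in billing_rows]
for record in unified:
    print(record["source"], record["customer_id"], record["signed_up_at"].isoformat())
```

Each source gets its own small adapter, so adding a third system means writing one more function rather than touching downstream logic.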

Step 2: Data Transformation & Cleansing

Even once organized, raw records can be lumpy and tricky. All those duplicates, disparate timestamps, and missing values need to be smoothed out so that analytics and machine learning algorithms won’t choke. This step includes:

- Cleansing: drop duplicates, fix types, normalize units, standardize timestamps, and handle missing values sensibly.

- Validation: enforce business rules (e.g., order_date ≤ ship_date), check referential integrity, and flag outliers.

- Enrichment: join to reference data, geocode, derive KPIs/segments, and add labels your analysts actually use.

End goal: high-quality, consistent, analysis-ready data, so your dashboards don’t turn into abstract art and your ML models learn something useful.
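
Here is one way the cleansing and validation bullets could look in code. It is a simplified sketch with made-up order rows: it drops duplicate `order_id` values, fixes the `amount` type, and enforces the order_date ≤ ship_date rule mentioned above. Keeping the first duplicate and routing rule violations to a reject list are assumptions; your own policy may differ.

```python
from datetime import date


def cleanse(orders):
    """Drop duplicate order_ids (first occurrence wins) and cast amount to float."""
    seen, clean = set(), []
    for row in orders:
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        clean.append({**row, "amount": float(row["amount"])})
    return clean


def validate(orders):
    """Split rows by the business rule: an order cannot ship before it was placed."""
    valid, rejected = [], []
    for row in orders:
        if row["order_date"] <= row["ship_date"]:
            valid.append(row)
        else:
            rejected.append(row)
    return valid, rejected


# Hypothetical raw rows: one duplicate and one rule violation.
raw_orders = [
    {"order_id": 1, "amount": "19.99", "order_date": date(2026, 1, 5), "ship_date": date(2026, 1, 7)},
    {"order_id": 1, "amount": "19.99", "order_date": date(2026, 1, 5), "ship_date": date(2026, 1, 7)},
    {"order_id": 2, "amount": "5", "order_date": date(2026, 1, 9), "ship_date": date(2026, 1, 8)},
]

valid, rejected = validate(cleanse(raw_orders))
print(len(valid), "valid,", len(rejected), "rejected")
```

Rejected rows are kept rather than silently dropped, so someone can inspect them and fix the source.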

Step 3: Data Activation & Delivery

Now it’s time to plate and serve the polished records. Orchestration pushes them where they matter:

- Warehouses and/or lakehouses for analytics.

- Business Intelligence (BI) tools for dashboards.

- Reverse ETL into CRM/marketing apps.

- Event buses (components that pass events from sources to destinations) for real-time triggers.

- Microservices (an architectural approach that breaks applications into multiple independent services) that power customer experiences.

Activation is the “last mile”: materialized views are refreshed on schedule, CDC (Change Data Capture) streams are updated in near real-time, and datasets are packaged with clear contracts and lineage, allowing teams to trust and act quickly.

Activation wins:

- Correct data → right system → right time.

- Consistent SLAs for reports and models.

- Fewer manual handoffs; more decisions made while the moment still matters.
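
A tiny sketch of the delivery idea: one dataset fanned out to several targets, with a status recorded for each. The target names and sender functions are placeholders, and letting one failed target not block the others is a design assumption rather than a rule.

```python
def deliver(dataset, targets):
    """Send the same dataset to every target and report per-target status."""
    statuses = {}
    for name, send in targets.items():
        try:
            send(dataset)
            statuses[name] = "ok"
        except Exception as err:
            # Record the failure and keep going so other targets still get data.
            statuses[name] = f"failed: {err}"
    return statuses


# Hypothetical targets: in-memory lists stand in for a warehouse and a CRM.
warehouse, crm = [], []


def broken_event_bus(rows):
    raise ConnectionError("event bus unreachable")


targets = {
    "warehouse": warehouse.extend,
    "crm_reverse_etl": crm.extend,
    "event_bus": broken_event_bus,
}

statuses = deliver([{"customer_id": "42", "segment": "pro"}], targets)
print(statuses)
```

The returned status map is what you would feed into alerts and SLA reports.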

One last piece of advice (in brief): Collect neatly, transform wisely, deliver decisively. Nail these three steps, and orchestration turns scattered feeds into a dependable data product your business can actually run on.

Top 5 Business Benefits of Effective Data Orchestration

Now that the theory is covered, let’s see the payoff. Some of it (cleaner data, quicker insights, fewer fires, and room to grow without rewrites) becomes apparent fairly quickly.

Other benefits take longer to show, but if everything is executed properly, you will enjoy all of them.

1. Faster, More Reliable Insights

When orchestration schedules jobs, handles dependencies, and auto-retries failures, data becomes punctual, accurate, and reliable.

With orchestrated pipelines, dashboards update quickly, alerts fire when they should, and decisions aren’t stuck in last night’s batch. Sometimes that speed also lets you capitalize on opportunities that are still barely visible.

Why it works: The step from “hope it runs” to “know it ran” becomes a tiny one when you combine automation and monitoring (SLAs, alerts, retries).

2. Improved Data Quality and Consistency

In orchestration, faster doesn’t mean more errors. The whole concept is about introducing validation, cleansing, and standardization into every step.

Records get deduped, formats normalized, business rules enforced, and lineage tracked. And finally, the numbers deserve the teams’ trust because each dataset passes the same checks every time.

Why it works: Consistent rules, schema contracts, and lineage make quality measurable, not mythical.

3. Enhanced Data Governance and Security

If there were a Maslow-style pyramid for data, then governance and security would be its foundation. Thankfully, orchestration doesn’t mean more precautions.

On the contrary, it embeds access policies, masking, and audit trails within the flow rather than bolting them on afterward. You gain end-to-end visibility, much like being able to see whose fingerprints are on a dataset.

Additionally, orchestration produces logs that support GDPR/CCPA/HIPAA compliance without slowing delivery.

Why it works: Governance-as-code keeps policy enforcement repeatable and provable.

4. Increased Operational Efficiency and Reduced Costs

Manual work usually brings two friends, expensive and brittle: a chaotic duo. Orchestration automates the mundane tasks that kill creativity and frees engineers to tackle thorny problems and shine.

Intelligent scheduling and parallelism reduce idle compute and failed reruns, trimming cloud bills, on-call time, and “oops” incidents.

Why it works: Fewer handoffs, fewer errors, better resource utilization = lower TCO.

5. Greater Scalability and Agility

With modular, declarative workflows, you can plug in another SaaS app, stream, or region without rewriting half the stack. Need near-real-time instead of nightly? Tweak the cadence, not the whole architecture.

Why it works: Composable pipelines and configuration over code let you scale out and pivot fast, like swapping Lego bricks, not pouring concrete.
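
"Configuration over code" can be sketched like this: the pipeline is described as data, and a small builder turns that description into something runnable. The step names and the registry are hypothetical; real tools usually express the same idea in YAML or a visual designer.

```python
# Hypothetical registry of reusable steps; each takes rows and returns rows.
STEP_REGISTRY = {
    "strip": lambda rows: [r.strip() for r in rows],
    "drop_empty": lambda rows: [r for r in rows if r],
    "upper": lambda rows: [r.upper() for r in rows],
}


def build_pipeline(config):
    """Turn a declarative config into a callable that applies each step in order."""
    steps = [STEP_REGISTRY[name] for name in config["steps"]]

    def run(rows):
        for step in steps:
            rows = step(rows)
        return rows

    return run


# Changing behavior means editing this config, not the code above.
nightly = build_pipeline({"steps": ["strip", "drop_empty"]})
marketing = build_pipeline({"steps": ["strip", "drop_empty", "upper"]})

sample = [" ann ", "   ", "bob"]
print(nightly(sample), marketing(sample))
```

Two pipelines share the same bricks and differ only in configuration, which is the Lego effect described above.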

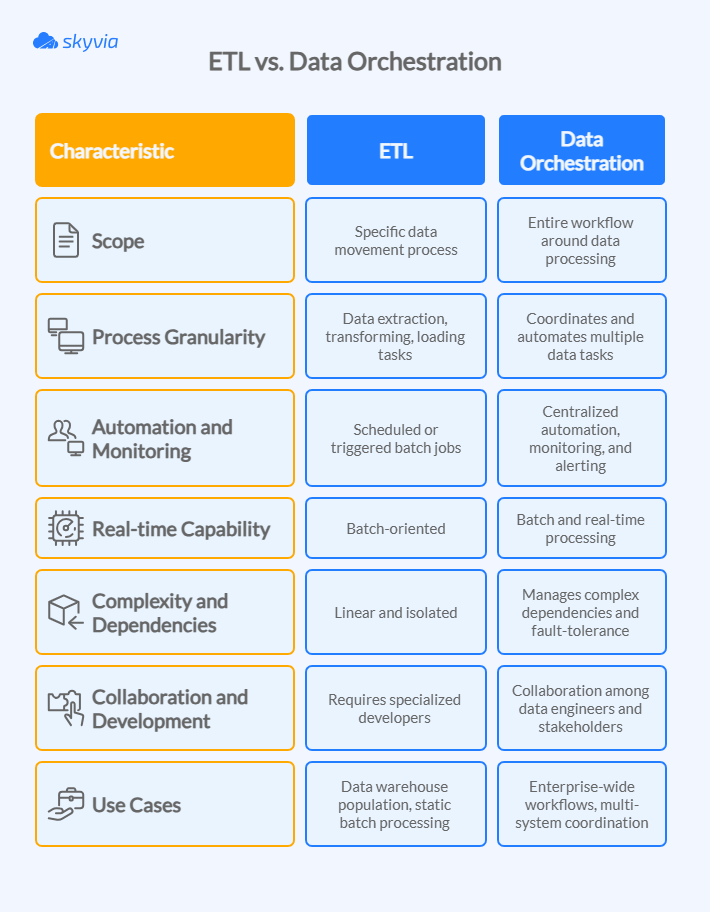

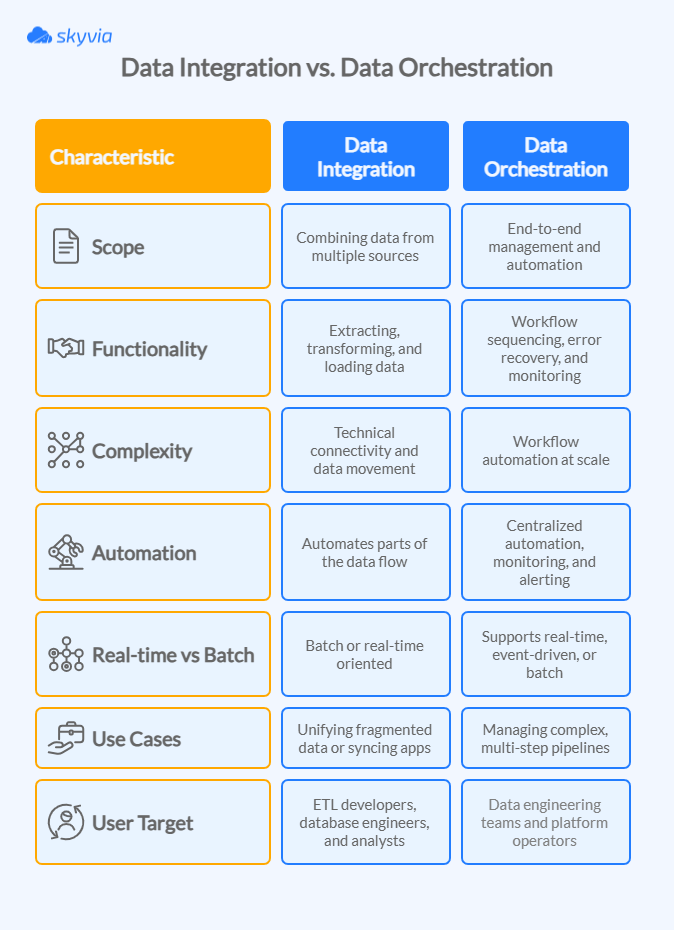

Data Orchestration vs. ETL vs. Data Integration: What’s the Difference?

These terms coexist in the same universe and overlap a bit, but they solve different problems:

- Data integration connects and combines data.

- ETL transforms and loads it.

- And orchestration runs the show.

The order is important because it helps to illustrate these concepts. Now, let’s see in detail how they compare.

| Aspect | ETL | Data Integration | Data Orchestration |
|---|---|---|---|
| Primary focus | Transform and load | Connect and unify | Coordinate and automate |
| Unit of work | One pipeline | Many sources/targets | Many pipelines + tasks |
| Mode | Mostly batch (some ELT) | Batch or real-time | Batch + real-time, event-driven |
| Governance | Pipeline-level checks | Varies by tool | Central policies, lineage, SLAs |
| Failure handling | Local to pipeline | Varies | Global retries, routing, alerts |
| Flexibility | Often platform-tied | Multi-source | Multi-platform, hybrid/cloud |

Data Orchestration vs. ETL

Key differences between data orchestration and ETL hide in three places: scope, granularity, and when things actually run.

What they are

- ETL (Extract, Transform, Load) is a pipeline pattern that moves data and can be eloquently described through a simple scheme:

source → transformation → target.

- Orchestration: The control panel that schedules and coordinates many pipelines (ETL, ELT, CDC, streaming), applies run conditions and SLAs, and watches for failures.

Scope & responsibilities

- ETL focuses on a single flow: mappings, transforms, load strategy, and performance of that pipeline.

- Orchestration manages end-to-end workflows: run order (e.g., dimensions before facts), backfills, dependency graphs, retries with backoff, alerting, and post-run tasks (e.g., refresh a semantic layer, notify a downstream app).
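
"Retries with backoff" is easier to picture in code. The sketch below is a generic pattern, not any particular tool's API: it retries a failing task and doubles the wait between attempts. The flaky task and the injected `sleep` function are assumptions made so the example runs instantly.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call task(); on failure wait base_delay, then 2x, 4x, ... before retrying."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the orchestrator
            sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical source that fails twice before succeeding.
calls = {"count": 0}


def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "42 rows"


delays = []  # record the waits instead of actually sleeping
result = run_with_retries(flaky_extract, sleep=delays.append)
print(result, delays)
```

In production you would keep the default `time.sleep` and usually add a cap and some jitter to the delay.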

Processing modes

- ETL traditionally = batch; modern tools also support micro-batch/ELT.

- Orchestration handles both batch and event/stream triggers in a single DAG (directed acyclic graph): a task network that flows in one direction, with no cycles that could create infinite loops. Nightly loads and real-time enrichments therefore live under the same guardrails.
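
The "no cycles" property is something an orchestrator can check before anything runs. As a small sketch using Python's standard `graphlib` (task names are illustrative), a valid graph yields a run order, while a cyclic one is rejected up front:

```python
from graphlib import CycleError, TopologicalSorter


def plan(dependencies):
    """Return a valid run order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # The detected cycle is the second element of the exception's args.
        raise ValueError(f"workflow is not a DAG, cycle found: {err.args[1]}") from err


# Dimensions must load before facts: a valid DAG.
run_order = plan({"fct_orders": {"dim_customers", "dim_products"}})
print(run_order)

# Two tasks waiting on each other: never runnable, so it is refused.
try:
    plan({"a": {"b"}, "b": {"a"}})
except ValueError as problem:
    print(problem)
```

The two dimension tasks have no dependency on each other, so their relative order may vary between runs; only "dimensions before facts" is guaranteed.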

Failure handling & observability

- ETL: pipeline-level error handling (e.g., row rejects, dead-letter queues).

- Orchestration: workflow-level recovery (skip/reattempt/prune), SLA tracking, lineage across tasks, central logs/metrics for the whole graph.

Example

- ETL job: transform orders.csv into fct_orders.

- Orchestrated workflow: wait for upstream file → validate schema → run orders ETL → refresh aggregates → warm BI cache → trigger reverse ETL to CRM → page on-call if SLA breaches.
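
The orchestrated workflow in this example can be sketched as an ordered list of steps plus an SLA check at the end. Everything here is a stand-in: the step bodies do nothing, the one-hour SLA is arbitrary, and `page_on_call` is a hypothetical hook where a real paging integration would go.

```python
import time


def run_workflow(steps, sla_seconds, page_on_call, clock=time.monotonic):
    """Run steps in order; page on-call if the whole run exceeds the SLA."""
    started = clock()
    completed = []
    for name, step in steps:
        step()  # an exception here stops the run and propagates to the caller
        completed.append(name)
    elapsed = clock() - started
    if elapsed > sla_seconds:
        page_on_call(f"SLA breached: {elapsed:.0f}s > {sla_seconds}s")
    return completed


def noop():
    pass


# Placeholder steps mirroring the workflow described above.
steps = [
    ("wait_for_upstream_file", noop),
    ("validate_schema", noop),
    ("run_orders_etl", noop),
    ("refresh_aggregates", noop),
    ("warm_bi_cache", noop),
    ("trigger_reverse_etl", noop),
]

pages = []
completed = run_workflow(steps, sla_seconds=3600, page_on_call=pages.append)
print(completed, pages)
```

The ETL job is just one entry in the list; the orchestration is everything around it.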

Data Orchestration vs. Data Integration

What they are

- Data Integration brings together records from multiple sources via connectors, mappings, CDC, ELT/ETL, and virtualization/federation.

- Data Orchestration: Automation and governance of how those integrations (and related tasks) run.

Scope & responsibilities

- Integration solves connectivity, schema mapping, and consolidation (e.g., data from Salesforce, Shopify, and PostgreSQL enters a warehouse. The ETL pipeline sighs, “Coming right up”).

- Orchestration stitches multiple integrations and tasks into a coherent plan: sequencing extractions, running quality checks, branching on conditions, publishing outputs, and triggering downstream jobs.

Governance & control

- Integration may provide basic validation and schema sync.

- Orchestration embeds governance-as-code: data quality tests, masking rules, access policies, lineage capture, approval gates, and auditable runs, applied consistently across pipelines.
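
As a loose illustration of governance-as-code, masking rules can live in one policy object that every pipeline applies the same way. The policy format, field names, and rule names below are invented for this sketch. Note that a plain unsalted hash is used only to keep the example short; it is not a strong anonymization technique on its own.

```python
import hashlib

# Hypothetical policy: field name -> masking rule.
MASKING_POLICY = {"email": "hash", "phone": "redact"}


def apply_policy(record, policy=MASKING_POLICY):
    """Return a copy of record with sensitive fields masked per the policy."""
    masked = dict(record)
    for field, rule in policy.items():
        if masked.get(field) is None:
            continue  # nothing to mask
        if rule == "hash":
            masked[field] = hashlib.sha256(str(masked[field]).encode("utf-8")).hexdigest()
        elif rule == "redact":
            masked[field] = "***"
        else:
            raise ValueError(f"unknown masking rule: {rule}")
    return masked


customer = {"name": "Ann", "email": "ann@example.com", "phone": "+1 555 0100"}
safe_customer = apply_policy(customer)
print(safe_customer)
```

Because the policy is data under version control, a change to it is reviewable and auditable like any other code change.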

Scalability & change

- Integration expands connectors and mappings as the number of sources grows.

- Orchestration scales the operational model: parallelizing runs, prioritizing workloads, coordinating compute across environments, and adapting schedules when volumes spike.

Example

- Integration: ingest customers from CRM and subscriptions from billing.

- Orchestration: run CRM and billing ingests in parallel → deduplicate customers → apply consent rules → update warehouse and feature store → notify ML service and BI refresh.
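
The first two hops of that orchestrated flow (parallel ingests, then deduplication) could look roughly like this. The ingest functions return hard-coded rows in place of real API calls, and matching customers on lowercased email is an assumption made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def ingest_crm():
    # Stand-in for a real CRM API call.
    return [
        {"email": "ann@example.com", "name": "Ann"},
        {"email": "bob@example.com", "name": "Bob"},
    ]


def ingest_billing():
    # Stand-in for a real billing API call.
    return [{"email": "ANN@example.com", "plan": "pro"}]


def run_ingests_in_parallel(ingests):
    """Start all ingests at once and return their results in submission order."""
    with ThreadPoolExecutor(max_workers=len(ingests)) as pool:
        futures = [pool.submit(fn) for fn in ingests]
        return [future.result() for future in futures]


def deduplicate_customers(batches):
    """Merge rows that share the same email (case-insensitive) into one record."""
    merged = {}
    for batch in batches:
        for row in batch:
            key = row["email"].lower()
            merged.setdefault(key, {}).update({**row, "email": key})
    return list(merged.values())


batches = run_ingests_in_parallel([ingest_crm, ingest_billing])
customers = deduplicate_customers(batches)
print(customers)
```

The integration work lives inside the ingest functions; the orchestration is the decision to run them side by side and only then deduplicate.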

In reality, mature stacks make use of all three: orchestration guarantees that the entire system operates predictably at scale, while integration and ETL/ELT handle the tedious work.

Popular Data Orchestration Tools and Platforms

Your options span a spectrum: from deeply programmable to delightfully visual. So, what would you like to work with?

| Tool | Type | Key Strengths | Typical Users |
|---|---|---|---|
| Apache Airflow | Open-source | Scalable, extensible, large ecosystem | Data engineers, enterprises |
| Prefect | Open-source/Cloud | User-friendly API, real-time monitoring | Data scientists, engineering teams |
| Dagster | Open-source | Data asset lifecycle, strong metadata & testing | Data teams focused on lineage |
| Skyvia | SaaS platform | No-code, broad integration, workflow automation, and orchestration | Business users, SMBs, non-tech |

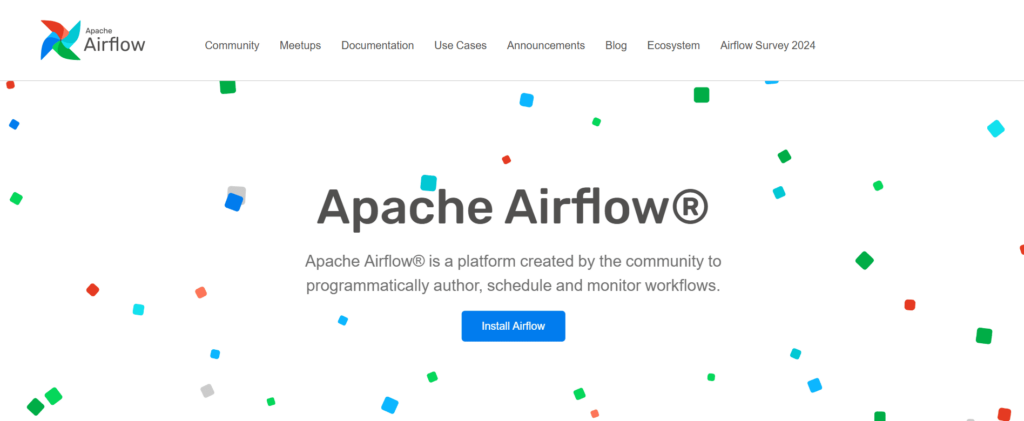

Apache Airflow

It helps to start with the classic that shaped how we schedule and monitor data jobs. Enter Apache Airflow.

Best for

- Engineering teams that want full control over code-defined DAGs and complex dependencies (self-hosted or managed).

Pros

- Massive open-source ecosystem and provider hooks; scales out with workers; battle-tested for batch ETL/ML.

Cons / Limitations

- Heavier ops overhead to run yourself; managed flavors reduce toil but add cloud costs.

Pricing

- Apache Airflow itself is free and open source; what you actually pay depends on how and where you deploy it.

Learning Curve

- Moderate to steep: Python first, DAG concepts, and infra basics required.

Prefect

Best for

- Python teams that want quick iteration, strong observability, and a lighter-weight developer experience.

Pros

- Friendly API, rich retries/alerts, excellent Cloud telemetry.

- Users can run open-source locally or use Prefect Cloud.

Cons / Limitations

- Newer ecosystem than Airflow.

- Fewer long-tail integrations out of the box.

Pricing

- Prefect Cloud tiers: Hobby (free), Starter ($100/month), Team ($400/month), and Pro (you will need to talk to sales to find out how much).

Learning Curve

- Low to moderate: fast to first flow; deeper patterns (blocks, deployments) take a beat.

Dagster

Best for

- Data teams treating data as software: asset-centric pipelines, type safety, testing, lineage.

Pros

- First-class data asset graph.

- Strong metadata and testability.

- Polished UI (the Dagster UI, formerly called Dagit).

Cons / Limitations

- Requires some learning for its domain-specific concepts.

- Less mature than Airflow.

Pricing

- Dagster Cloud offers a Solo plan ($10/month) and a Starter ($100/month) plan; enterprise tiers are available at a quote.

Learning Curve

- Moderate: pays off if you want powerful continuous integration-style practices in data.

Skyvia (integration + orchestration)

The tools we’ve already discussed can orchestrate data integration processes by triggering and controlling ETL or data integration jobs. Still, they do not natively provide data integration, which means you will need one more tool in your data stack. However, Skyvia does both, which means your stack can stay tighter.

It is a no-code cloud platform providing data integration (ETL/ELT, reverse ETL), backup, query, and orchestration features via visual tools called Data Flow and Control Flow.

Teesing, an e-commerce company, automated its data orchestration using Skyvia Data Integration to streamline gas and liquid control system data. They improved the reliability and synchronization of processes by orchestrating timely data flows from devices into centralized systems.

FieldAx, a Field Service Management (FSM) solution, centralized job-related data orchestration with Skyvia, importing records automatically to a MySQL repository. That reduced silos and provided a single source for analysis by orchestrating continuous imports and transformations.

Best for

- Business and mixed-skill teams that prefer automated orchestration, visual design, and scheduling without managing infrastructure.

Pros

- No-code pipelines (Data Flow, Control Flow).

- 200+ connectors.

- Powerful automation features, including scheduling, event triggers, and conditional execution.

- Supports CDC and incremental data loading.

- Scales effortlessly with data volume increases and adapts to dynamic workflow demands.

- Implements encryption, access control, and compliance protocols within orchestration workflows.

- Provides detailed monitoring and execution logs.

Cons / Limitations

- Fewer advanced developer-centric workflow features.

- Fewer video tutorials.

Pricing

- Skyvia offers a Free plan for projects with modest needs (10K records per month, one schedule per day, etc.), as well as Basic (~$79/month), Standard (~$159/month), and Professional (~$399/month) plans, all with annual discounts. An enterprise plan is available on request.

Learning Curve

- Low: drag-and-drop mapping, visual scheduling; minimal DevOps required.

Conclusion

Data orchestration is the difference between a tangled bunch of scripts and a data product you’d stake a KPI on. It has some impressive tricks up its sleeve:

- Breaks down silos.

- Hard-bakes quality checks.

- Speeds time-to-insight.

- Enforces governance.

- Trims operational toil.

- Scales as your sources and use cases multiply.

That’s already extraordinary, but there are even greater things to come. Expect more AI-assisted orchestration – pipelines that auto-tune schedules, predict failures before SLAs slip, recommend quality rules, and generate runbooks from data contracts. Add to that event-driven patterns, asset-centric lineage, and a tighter weave between batch, streams, and lakehouse stacks.

Need orchestration but allergic to coding? Skyvia opens that door with visual workflows that orchestrate 200+ sources, so you can forget about the code complexity and server monitoring anxiety.

F.A.Q. for Data Orchestration

What is a data orchestration framework?

It is a structured system composed of tools, components, and processes designed to automate, manage, and monitor the flow of data across diverse sources, transformations, and destinations within an organization.

How do you implement data orchestration?

Set outcomes and SLAs, inventory sources and targets, pick a tool, and design DAGs. Add tests, data contracts, and policies; set triggers and schedules; wire up logs and alerts. Then pilot and backfill, and finally iterate, document, and scale.

What is the difference between data orchestration and data automation?

Automation runs a task (e.g., an ETL job). Orchestration coordinates many automated tasks end-to-end.