Modern business revolves around data: every step of a company’s strategy involves analyzing information from various sources.

In a way, data ingestion resembles the work of bees:

- Collecting various data types (structured and unstructured) from many sources, such as databases, data lakes, IoT devices, cloud apps, etc.

- Storing it in one centralized destination for analysis.

You can ingest data in real time, in batches on a schedule, or combine both with a design pattern such as Lambda Architecture. Real-time ingestion is the fastest; a hybrid approach is fast on its real-time path but slower on its batch path.
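To make the hybrid approach more concrete, here is a minimal Python sketch of the Lambda Architecture idea: a batch layer periodically recomputes results over all historical data, a speed layer updates results as each new event arrives, and a serving layer merges both at query time. The page-view data and function names are hypothetical, and real deployments use dedicated batch and stream processing engines rather than in-memory counters.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical page-view events: (page, views).
HISTORICAL = [("home", 3), ("pricing", 1)]  # already stored in the master dataset
RECENT = [("home", 1), ("docs", 2)]         # arrived after the last batch run


def batch_view(events: Iterable[Tuple[str, int]]) -> Counter:
    """Batch layer: recompute totals from the full history on a schedule."""
    view: Counter = Counter()
    for page, views in events:
        view[page] += views
    return view


class SpeedLayer:
    """Speed layer: update totals incrementally as each new event arrives."""

    def __init__(self) -> None:
        self.view: Counter = Counter()

    def on_event(self, page: str, views: int) -> None:
        self.view[page] += views


def query(page: str, batch: Counter, speed: SpeedLayer) -> int:
    """Serving layer: merge the batch view with the real-time view."""
    return batch[page] + speed.view[page]


batch = batch_view(HISTORICAL)
speed = SpeedLayer()
for page, views in RECENT:
    speed.on_event(page, views)

print(query("home", batch, speed))  # 4
print(query("docs", batch, speed))  # 2
```

Once the next batch run absorbs the recent events, the speed layer’s view can be cleared. That is the trade-off the pattern makes: batch results arrive late, and the speed layer covers the gap.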

The type of data you work with determines which ingestion tool fits best. Here are the top 10 tools.

Table of Contents

- Skyvia

- Apache Kafka

- Apache NiFi

- Talend

- Apache Flume

- Amazon Kinesis

- Wavefront

- Precisely Connect

- Airbyte

- Dropbase

- Tools comparison

Skyvia

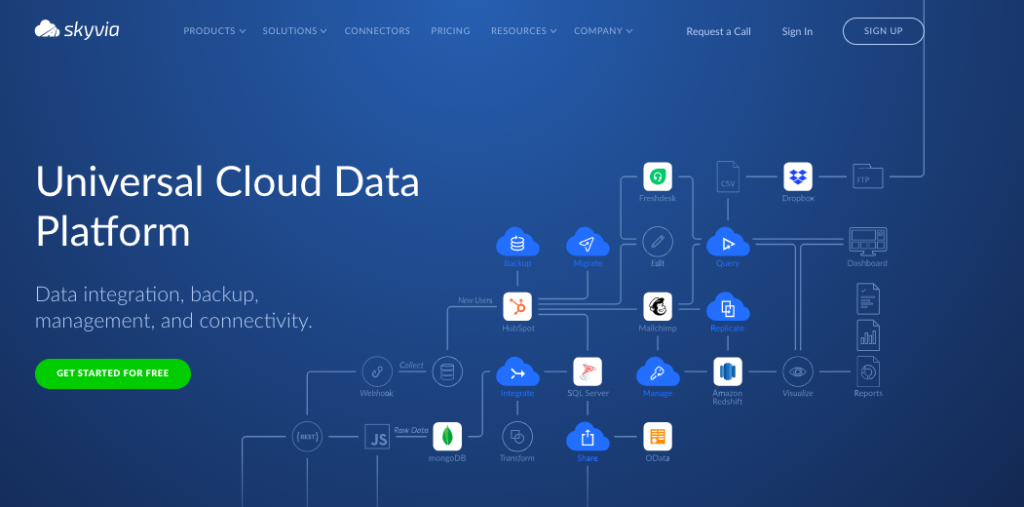

If you are looking for a compatible, scalable, and fast solution for data ingestion, Skyvia is first on this list. The tool is popular because of its usability, strong list of supported data sources and destinations, and attractive pricing with a Freemium model.

In other words, the tool strikes a good balance between the services it provides and the costs it saves. So, what can it offer?

- Wide range of data integration scenarios:

  - ETL, ELT, reverse ETL.

  - Data sync, automation, etc.

- Easy-to-use UI without coding.

- 180+ supported data sources and destinations, including HubSpot, Salesforce, Dynamics CRM, Shopify, and other cloud apps, as well as relational databases such as MySQL, PostgreSQL, Oracle, etc.

- Ability to handle both simple integration scenarios and more complex data pipelines involving multiple sources.

- Robust security options (HIPAA, GDPR, PCI DSS, plus ISO 27001 and SOC 2 by Azure).

Try Skyvia yourself, or schedule a demo.

Apache Kafka

Another competitive player in the market is Apache Kafka. It’s open source, so you can use it for free. Its main advantages are:

- The ability to stream large volumes of data quickly in real time.

- Easy connection to external sources and destinations for importing and exporting data.

- A wide range of tools and APIs for developers building custom data pipelines.

Although Apache Kafka is scalable and fast, it has a few drawbacks:

- The solution is unfriendly to non-technical users. You need a technical background to configure it and create brokers and topics. A broker is a server in a Kafka cluster that receives and processes data and has a unique ID. Kafka brokers store data in a directory on the disk of the server they run on, and each topic partition gets its own subdirectory named after the topic and partition.

- A lack of built-in management and monitoring capabilities.

- Although the platform itself is free, some connectors require a paid Confluent subscription.
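To illustrate the broker and topic concepts above, here is a toy, in-memory Python model. It is not Kafka’s client API: the `ToyBroker` class and the `/var/lib/kafka/data` path are hypothetical. It shows, in simplified form, that each broker has a unique ID, that a topic is split into partitions that are append-only logs with sequential offsets, and that each partition lives in a `<topic>-<partition>` subdirectory of the broker’s log directory (set with the `log.dirs` broker setting). For key-based partitioning it uses CRC32 as a stand-in; Kafka’s default partitioner actually uses a murmur2 hash.

```python
import zlib
from pathlib import PurePosixPath
from typing import Dict, List, Tuple


class ToyBroker:
    """In-memory toy model of a Kafka broker (not the Kafka API)."""

    def __init__(self, broker_id: int, log_dir: str) -> None:
        self.broker_id = broker_id  # every broker in a cluster has a unique ID
        self.log_dir = PurePosixPath(log_dir)
        self.partitions: Dict[str, List[List[bytes]]] = {}

    def create_topic(self, topic: str, num_partitions: int) -> None:
        # Each partition is modeled as an append-only list of records.
        self.partitions[topic] = [[] for _ in range(num_partitions)]

    def partition_dirs(self, topic: str) -> List[str]:
        # Kafka names each partition directory "<topic>-<partition>".
        count = len(self.partitions[topic])
        return [str(self.log_dir / f"{topic}-{p}") for p in range(count)]

    def produce(self, topic: str, key: bytes, value: bytes) -> Tuple[int, int]:
        logs = self.partitions[topic]
        # Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32.
        partition = zlib.crc32(key) % len(logs)
        logs[partition].append(value)
        offset = len(logs[partition]) - 1  # record's position within its partition
        return partition, offset


broker = ToyBroker(broker_id=1, log_dir="/var/lib/kafka/data")
broker.create_topic("orders", num_partitions=3)
print(broker.partition_dirs("orders"))
# ['/var/lib/kafka/data/orders-0', '/var/lib/kafka/data/orders-1', '/var/lib/kafka/data/orders-2']
print(broker.produce("orders", b"customer-42", b'{"total": 10}'))
```

Because the partition is derived from the key, records with the same key always land in the same partition, which is how Kafka preserves per-key ordering.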

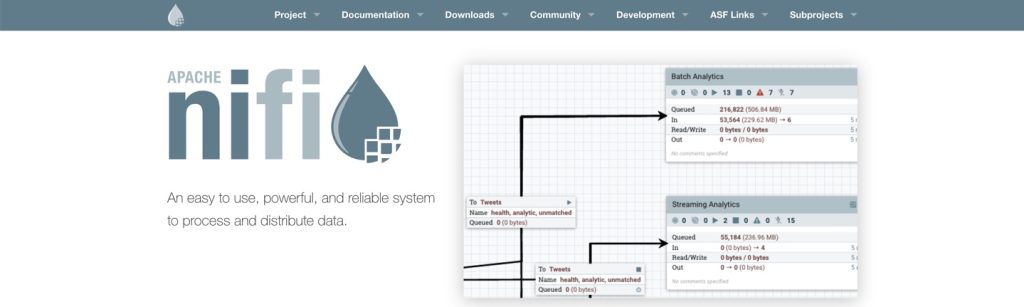

Apache NiFi

Like Apache Kafka, Apache NiFi is an open-source data ingestion platform in the Apache product line. How does it work? Imagine a delivery service like FedEx that automatically moves your order from the store to your home and tracks it from source to destination in real time. Apache NiFi does the same, but with your data.

NiFi is less complicated than Apache Kafka and provides 280+ processors, each responsible for one part of the work (Get, Put, Fetch, etc.). The key advantages of this tool are:

- Flexible scaling model.

- Flow management.

- Extensible architecture.

However, when working with large data volumes, the solution consumes a lot of processing power and memory, which makes it less cost-effective, especially for small businesses.
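NiFi flows are built visually rather than in code, but the idea of chaining small processors, each responsible for one step, can be sketched in a few lines of Python. The `FlowFile` class loosely mirrors NiFi’s notion of a piece of data plus attributes; the processor functions and the `history` list (a simplified nod to NiFi’s source-to-destination tracking) are hypothetical and are not NiFi’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FlowFile:
    """Toy stand-in for a NiFi FlowFile: content plus attributes."""
    content: str
    attributes: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # processors it passed through


Processor = Callable[[List[FlowFile]], List[FlowFile]]


def get_lines(lines: List[str]) -> List[FlowFile]:
    """'Get'-style step: bring raw data into the flow."""
    return [FlowFile(text, {"source": "memory"}) for text in lines]


def drop_empty(flowfiles: List[FlowFile]) -> List[FlowFile]:
    """Filtering step: discard FlowFiles with blank content."""
    return [ff for ff in flowfiles if ff.content.strip()]


def tag_length(flowfiles: List[FlowFile]) -> List[FlowFile]:
    """Enrichment step: record the content length as an attribute."""
    for ff in flowfiles:
        ff.attributes["length"] = str(len(ff.content))
    return flowfiles


def put_into(sink: List[str]) -> Processor:
    """'Put'-style step: deliver content to a destination (here, a list)."""
    def put(flowfiles: List[FlowFile]) -> List[FlowFile]:
        sink.extend(ff.content for ff in flowfiles)
        return flowfiles
    return put


def run_flow(flowfiles: List[FlowFile], processors: List[Processor]) -> List[FlowFile]:
    """Pass data through each processor in order, tracking where it has been."""
    for processor in processors:
        flowfiles = processor(flowfiles)
        for ff in flowfiles:
            ff.history.append(processor.__name__)
    return flowfiles


destination: List[str] = []
result = run_flow(get_lines(["a,1", "", "b,2"]), [drop_empty, tag_length, put_into(destination)])
print(destination)        # ['a,1', 'b,2']
print(result[0].history)  # ['drop_empty', 'tag_length', 'put']
```

Keeping each processor small and single-purpose is what makes such a flow easy to extend: a new step is just another function in the list.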

Talend

Talend Data Fabric, offered by Talend, is a mature solution that is better suited to large enterprises. The number of supported connectors is impressive: 1,000 different sources! It also supports a solid set of destinations like Google Cloud Platform, Amazon Web Services, Snowflake, Microsoft Azure, Databricks, etc.

Compared to other data ingestion platforms, the main advantages here are:

- The ability to build pipelines quickly with drag and drop.

- Data quality, error checking, and error correction functionality.

- The ability to manage large data volumes (in the cloud or on-premises).

However, the solution isn’t very user-friendly and takes time to learn, which might be a blocker for new users. Another possible drawback is unclear pricing: you can select a subscription plan, but the details aren’t public, so you’ll need to contact the sales team.

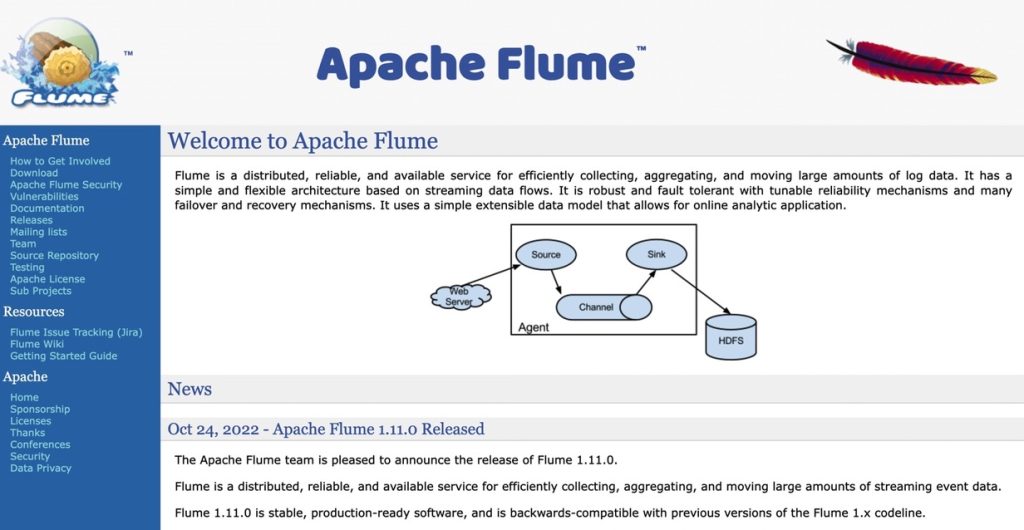

Apache Flume

Apache Flume is another Apache open-source solution, mainly used to ingest data from different sources into the Hadoop Distributed File System (HDFS). Its job is to collect, aggregate, and move large volumes of log data. Besides HDFS, you can set it up to work with HBase and Solr.

The main advantages of this tool are:

- Fault tolerance.

- Usability.

- Tunable reliability.

The product is free, but it’s complex to configure, especially for newcomers, and there is little technical documentation to rely on when issues arise. In addition, the solution is not very flexible, so customizing it for specific needs is not easy.

Amazon Kinesis

If your use cases require extracting, processing, and analyzing data as soon as it arrives, so you can make decisions right away instead of waiting for the full dataset, Amazon Kinesis is a great choice. This cloud service focuses on fast processing and analysis of real-time data and video streams. Its key advantages are:

- Impressive data processing speed (terabytes per hour).

- The ability to ingest high volumes of data from a wide range of sources (financial documents, IoT, social media, location tracking, telemetry, etc.).

Despite the pay-as-you-go pricing model, the service can become costly depending on the volume of data ingested and the level of throughput: the more you need, the more you pay. Configuring and setting up the service can be a challenge for first-time users. Finally, integration with non-AWS services is quite limited.

Wavefront

Wavefront’s strongest side is observability. If your use cases are about finding out what’s wrong and fixing it immediately, it might be worth a try. This cloud-based tool is built for all kinds of work with metric data: ingesting, storing, visualizing, monitoring, etc. It uses a stream processing approach invented at Google, which makes the platform scalable and ready for growing query loads. Its main advantages are:

- 200+ supported sources and services (DevOps tools, cloud service providers, data services, etc.).

- Easy real-time data manipulation: the solution can ingest millions of data points per second.

- Alerting based on data collected on custom dashboards, and even forecasting of potential problems.

The flip side of these advantages is complexity. You need a solid technical background to configure and set it up. Also keep in mind that a large number of unique metrics, sources, and tags complicates data point ingestion and slows the environment down.

Pricing depends on data points per second (PPS), starting from $1.50 per PPS per month. For instance, a host that sends 100 data points every 10 seconds (10 PPS) costs $15 per month.
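The pricing example above is simple arithmetic: 100 points every 10 seconds is 10 PPS, and 10 PPS at $1.50 each comes to $15. The short Python sketch below reproduces it. The function names are hypothetical, and the flat per-PPS rate and linear scaling across hosts are assumptions for illustration, not Wavefront’s published billing rules.

```python
def points_per_second(points: int, interval_seconds: float) -> float:
    """Convert 'N data points every T seconds' into a PPS rate."""
    return points / interval_seconds


def monthly_cost(pps_per_host: float, hosts: int = 1, price_per_pps: float = 1.50) -> float:
    """Estimate the monthly bill, assuming a flat monthly price per PPS."""
    return pps_per_host * hosts * price_per_pps


pps = points_per_second(100, 10)   # 100 points / 10 s = 10 PPS per host
print(monthly_cost(pps))           # 15.0
print(monthly_cost(pps, hosts=4))  # 60.0
```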

Precisely Connect

If your data zoo is spread across various sources like databases, clouds, and even legacy systems, Precisely Connect is a helpful tool for ingestion and integration. It lets you build streaming data pipelines and, importantly, share the data with colleagues so everyone sees the whole picture. The platform’s main advantages are:

- Strong data integration capabilities, including sources like RDBMS, mainframe, NoSQL, and cloud.

- Real-time data replication.

- Regular cleanup of data silos.

The downside of these benefits is the solution’s complexity: you need a strong technical background to configure and set it up. Besides, it’s not cheap. As with Talend, pricing isn’t public and depends on a number of factors, but be ready to pay from $99 to $2,000+ per month for the most popular ETL solutions.

Airbyte

Airbyte is a secure, open-source data ingestion tool focused on quickly extracting data from various sources and loading it into destinations like data warehouses, data lakes, and databases. The solution is intuitive and provides pre-built and custom connectors that let you set up replication in minutes. Its main advantages are:

- Scalability.

- Easy-to-understand UI.

- A large number of connectors (300+ SaaS apps, cloud apps, databases, etc.).

Despite the intuitive interface, setting up and configuring the tool can be a blocker for non-technical users. Another drawback is the limited technical documentation and support.

You can use the open-source version for free or choose pay-as-you-go pricing. There is also a 14-day free trial to help you decide which version fits your company.

Dropbase

If you work with offline data and need to turn it into live online databases, Dropbase is a good service for such operations and for workflow automation.

With its unified spreadsheet interface, you can quickly and safely edit, clean, and validate customer data across databases, web apps, and APIs.

In other words, Dropbase is essentially a data editor: you can import and export data in CSV, Excel, XML, and JSON formats and load it into a Postgres database for sharing.
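Dropbase itself is a hosted service, so the following is only a rough Python sketch of the import, clean, validate, and load workflow described above. It uses the standard-library `csv` and `sqlite3` modules, with an in-memory SQLite database standing in for Postgres; the sample data, table name, and validation rule are hypothetical.

```python
import csv
import io
import sqlite3
from typing import List, Tuple

# Hypothetical spreadsheet export; a real workflow would read a file instead.
RAW_CSV = """name,email,plan
 Alice ,alice@example.com,pro
Bob,,free
Carol,carol@example.com, FREE
"""

Row = Tuple[str, str, str]


def clean_rows(text: str) -> List[Row]:
    """Parse CSV, trim and normalize values, and skip rows failing validation."""
    rows: List[Row] = []
    for record in csv.DictReader(io.StringIO(text)):
        name = record["name"].strip()
        email = record["email"].strip()
        plan = record["plan"].strip().lower()
        if "@" not in email:
            continue  # assumed validation rule: an email address is required
        rows.append((name, email, plan))
    return rows


def load(rows: List[Row], conn: sqlite3.Connection) -> int:
    """Load cleaned rows into a table and return the resulting row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(name TEXT, email TEXT PRIMARY KEY, plan TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load(clean_rows(RAW_CSV), conn))  # 2
```

Using the email as the primary key with `INSERT OR REPLACE` makes re-running the load idempotent, which helps when the same spreadsheet is imported repeatedly; in Postgres, `INSERT ... ON CONFLICT` plays a similar role.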

The main advantage of this service is the ability to make offline data live and accessible in real time to all team members at different project levels.

The service has limited compatibility with other similar tools, which might be a bottleneck. However, if your company uses Apache Kafka and Apache NiFi, that’s not a problem.

Pricing ranges from standard to custom plans, starting from $150 per workspace per month, with no free plan.

Tools comparison

The table below compares the listed tools to help you choose the best fit. If you still haven’t found the right one for your business needs, check out the big ETL/ELT Tools Comparison by Skyvia, which compares 25+ services and platforms side by side.

| Tool | Usability | Scalability | Security | Pricing |
|---|---|---|---|---|
| Skyvia | Easy-to-use, visual wizard. | Yes | HIPAA, GDPR, PCI DSS, ISO 27001 and SOC 2 (by Azure). | Volume-based and feature-based pricing. The Freemium model allows you to start with a free plan. |
| Apache Kafka | Tech background is required. | Yes | SOC 1/2/3, ISO 27001, and GDPR/CCPA. | Open-source solution. |
| Apache NiFi | Depends on the level of tech expertise. May be difficult for beginners. | Yes | SOC 2, GDPR, HIPAA, CCPA, and TLS. | Open-source solution. |
| Talend | Tech background is required. | Yes | GDPR, HIPAA, SOC 2, CSA STAR. | Subscription-based pricing. 14-day free trial for cloud. Free Talend Open Studio. |
| Apache Flume | Partly. Requires experience in configuration and setup. | Yes | HITRUST, SOC 2, and ISO 27000 [2]. | Open-source solution. |
| Amazon Kinesis | Easy-to-use. | Yes | SOC 1/ISAE 3402, SOC 2, SOC 3, FISMA, DIACAP, and FedRAMP. | Pay-as-you-go pricing. |
| Wavefront | Easy-to-use. | Yes | HIPAA, PCI DSS, FedRAMP, ISO 27001/27017/27018, SOC 2 Type 1, and CSA. | PPS-based pricing. |
| Precisely Connect | Tech background is required. | Yes | CDP, CDC. | Flexible pricing depending on your needs. |
| Airbyte | Tech background is required. | Yes | SOC 2 Type 1/2, ISO 27001. | Pay-as-you-go model with a 14-day free trial. |
| Dropbase | Easy-to-use. | Yes | SOC 2 Type 2, ISO 27001, and HIPAA. | Flexible pricing without a free plan. |