Choosing between Amazon Redshift and Amazon S3 is more than a technical decision. You’ve got data that needs a home, queries that need answers, and a budget that, unfortunately, isn’t bottomless.

However, the choice becomes even harder when you find out that Redshift and S3 aren’t direct competitors. They’re more like Batman and Superman because each has unique talents for various circumstances:

- Redshift is the analytics speed demon, built to slice through structured data at lightning speed.

- S3 is the unflappable storage champion that’ll hold anything you throw at it – yes, even cat videos (for work, of course).

So, let’s reframe the decision: we don’t select which service is better, but which tool fits your specific job. Let’s strip away the marketing jargon and zero in on when to use what, how much it’ll cost, updates that may change everything, and why the most innovative organizations often use both.

Table of Contents

- At a Glance: Redshift vs. S3 Cheat Sheet

- Deep Dive: Amazon Redshift (The Analytics Powerhouse)

- Deep Dive: Amazon S3 (The Universal Data Lake)

- Head-to-Head Comparison: The Deciding Factors

- The “Better Together” Strategy: A Modern Data Architecture

- How Skyvia Simplifies Redshift and S3 Integrations

- Market Context: Redshift & S3 Alternatives

- Real-World Examples & Use Cases

- Conclusion

At a Glance: Redshift vs. S3 Cheat Sheet

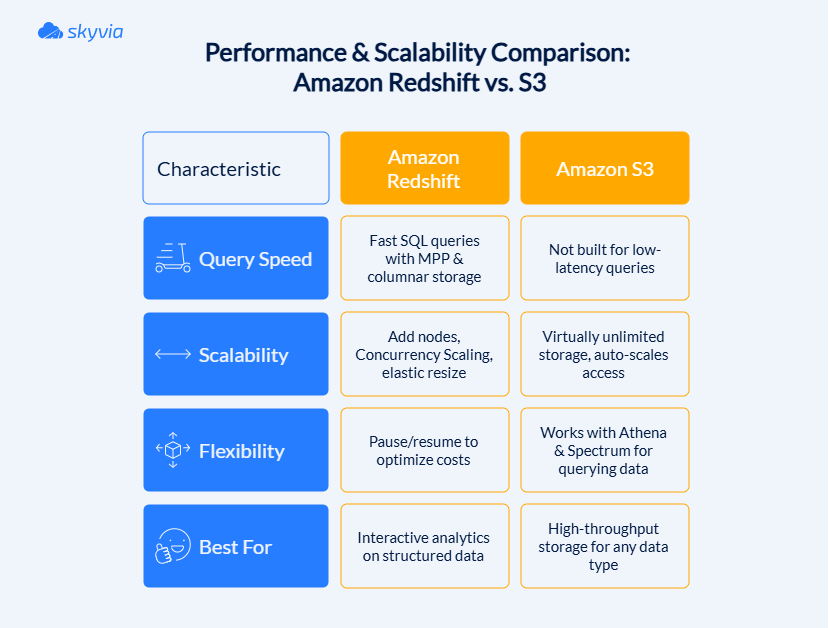

With only two options, the stakes feel higher. Start with this table as your lifeline, and below, we will discuss all the nuances and aspects in detail.

In short, Redshift is for turning raw data into insights, while S3 is your go-to for storing anything and everything. Use both when you want affordable data lake storage in S3 with hot analytical subsets in Redshift, reached via Spectrum or load jobs.

Deep Dive: Amazon Redshift (The Analytics Powerhouse)

What is it?

Amazon Redshift is a fully managed, petabyte-scale cloud data warehouse built for one thing: transforming complex analytical queries of structured data into results. Companies love it for many reasons, mainly because:

- That “fully managed” part is the best, i.e., AWS handles servers, backups, and all the behind-the-scenes grunt work.

- It speaks plain SQL and exposes itself directly to BI tools like Tableau, Power BI, and Looker.

- As part of the AWS ecosystem, it inherits all the security features of the cloud, including VPC (Virtual Private Cloud), encryption for data at rest and in transit, integration with IAM roles, users, and almost every popular service within the family.

- Additionally, it supports open data formats, such as Parquet and ORC (Optimized Row Columnar).

Core Architecture

A deft ensemble lies at the foundation of Redshift:

- A cluster is a collection of nodes that work under one score. You can start small and scale up if the performance demands it.

- Leader node – the maestro. It parses queries, sets up execution plans, and keeps the compute nodes playing in harmony.

- Compute nodes – the actual musicians. Each node stores chunks of data and runs queries in parallel.

- Node slices – each tackles a portion of the workload, guaranteeing that no CPU is left idle.

Note: Node limits depend on the ra3 node size. ra3.xlplus clusters can be created with up to 16 nodes and resized to 32; ra3.4xlarge can be resized to 64; ra3.16xlarge scales higher. There is no blanket “32-node” ceiling, so size to the workload rather than to a fixed node count.

| Node type | Architecture | Storage | Compute scaling | Use case |
|---|---|---|---|---|
| ra3 | Cloud-native | Managed | Independent | Large, rapidly growing datasets; flexible compute and storage scaling |
| dc2 | Legacy | Local SSD | Coupled | Smaller datasets; high performance but less flexible |
| ds2 | Legacy | HDD | Coupled | Extensive datasets with lower performance demands |

MPP (Massively Parallel Processing) spreads the workload across multiple nodes and slices, making even enormous queries run smoothly instead of one overloaded server struggling through everything like Frodo wearing the One Ring alone.

Redshift uses columnar storage, organizing data by columns rather than rows. It improves query performance and enables better data compression, reducing storage requirements and speeding up scans.
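To make the columnar idea concrete, here is a toy Python sketch. It is not Redshift's storage engine or one of its real encodings, and the sample rows and column names are invented for illustration. It pivots a few rows into columns and run-length encodes a low-cardinality column, which hints at why values stored side by side compress well and why a query that needs one column can skip the others.

```python
from itertools import groupby

# Hypothetical sample rows: (order_id, country, amount)
ROWS = [
    (1, "US", 20.0),
    (2, "US", 35.5),
    (3, "US", 12.0),
    (4, "DE", 80.0),
    (5, "DE", 15.25),
]
COLUMNS = ("order_id", "country", "amount")


def to_columns(rows, names):
    """Pivot row tuples into one list per column (a column-oriented layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(ROWS, COLUMNS)

# A repetitive column shrinks to a handful of pairs.
encoded_country = run_length_encode(columns["country"])

# An aggregate over one column reads only that column's values.
total_amount = sum(columns["amount"])

print(encoded_country)
print(total_amount)
```

Five country values collapse into two pairs here; on real tables with millions of repeated values, the same effect is what reduces storage and scan time.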

Tip for new builds: Prefer ra3 or Redshift Serverless for modern workloads; treat dc2/ds2 as legacy solutions.

Limitations

But before you get too excited, let’s talk about Redshift limitations:

- Concurrency bottlenecks: Too many queries at once can choke your cluster. Concurrency scaling spins up temporary clusters during spikes, but extra charges can bite into your budget.

- Storage ceilings: ra3 managed storage scales automatically, but each node type still has a per-node quota, and legacy nodes are capped by their local disks. Each dc2.large node brings only 160 GB to the table – bigger nodes pack more punch if you need space.

- Python UDF sunset: Time’s running out – Redshift stops supporting the creation of new Python user-defined functions after October 2025, and existing ones stop executing after June 30, 2026. Plan a migration to Lambda UDFs.

- Security lock-down: Starting 2025, Redshift goes secure by default – new or restored provisioned clusters default to no public access, encryption on, and secure connections enforced.

- Performance boundaries: Tables can only grow as big as your cluster allows, and overly complex queries will hit walls that force timeouts.

Best Practices for Large Datasets

Throwing hardware at the problem can be effective, but it’s rarely the cheapest win. That’s why we need tricks for managing Redshift at scale.

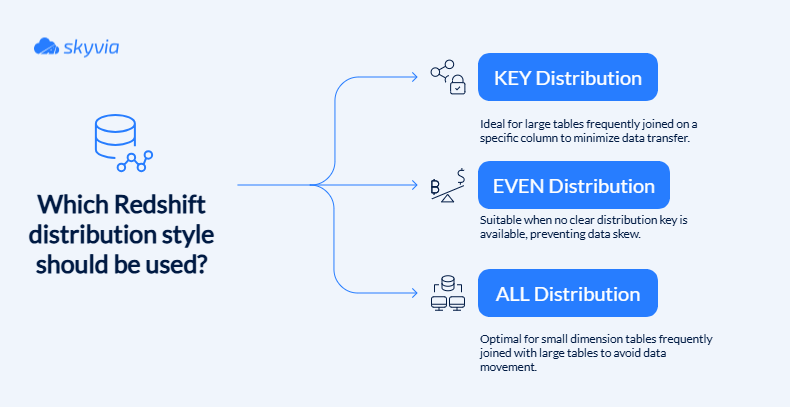

1. Choose the right distribution keys (DISTSTYLE)

Distribution controls the placement of data across cluster nodes. The correct key balances data loads and reduces data shuffling during joins and aggregations.

2. Use sort keys for query performance

- Compound Sort Keys: Data is sorted by multiple columns in order of priority. The first column is most important, so queries filtering by the leading columns perform best. Ideal for joins, GROUP BY, and ORDER BY operations when one column dominates filtering.

- Interleaved Sort Keys: All columns have equal sorting priority, making this ideal when queries filter on various column combinations across large datasets. Improves performance for restrictive filters on any column, but isn’t suitable for monotonically increasing values, such as timestamps.

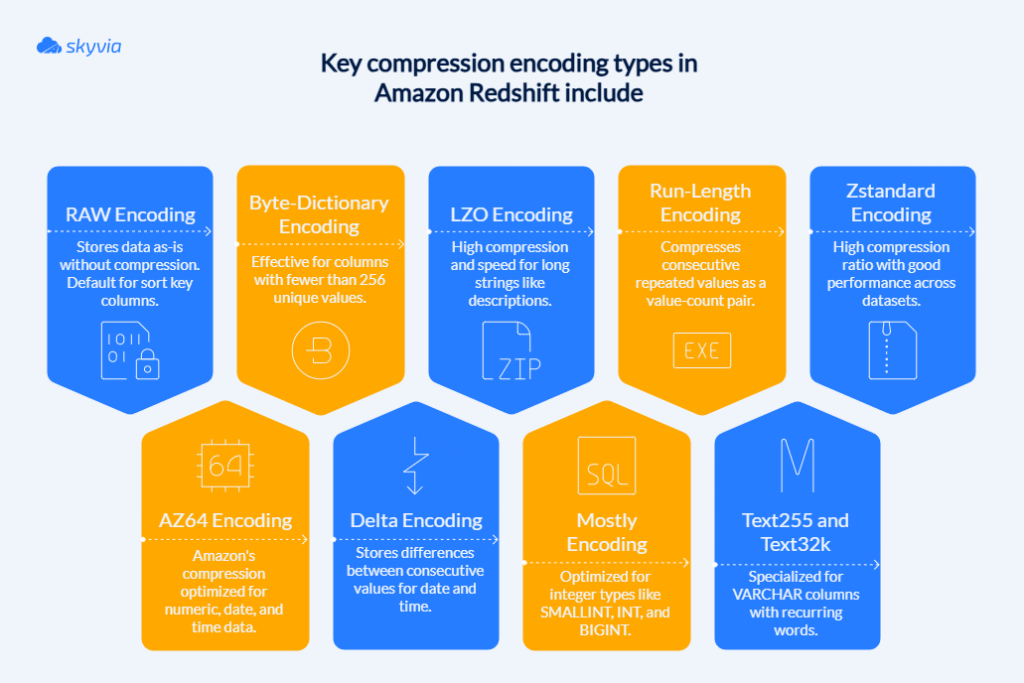
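The distribution and sort key choices above end up as clauses in a table definition. The following Python sketch builds such a statement as a string; the `sales` table, its columns, and the helper name `build_create_table` are hypothetical, and the right keys for a real table depend on its join and filter patterns.

```python
def build_create_table(table, columns, dist_key, sort_keys, sort_style="COMPOUND"):
    """Return a Redshift CREATE TABLE statement with DISTKEY and SORTKEY clauses.

    columns is a list of (name, sql_type) pairs; every name used here is illustrative.
    """
    if sort_style not in ("COMPOUND", "INTERLEAVED"):
        raise ValueError("sort_style must be COMPOUND or INTERLEAVED")
    known = {name for name, _ in columns}
    for key in (dist_key, *sort_keys):
        if key not in known:
            raise ValueError(f"unknown column: {key}")
    column_sql = ",\n".join(f"    {name} {sql_type}" for name, sql_type in columns)
    return (
        f"CREATE TABLE {table} (\n{column_sql}\n)\n"
        "DISTSTYLE KEY\n"
        f"DISTKEY ({dist_key})\n"
        f"{sort_style} SORTKEY ({', '.join(sort_keys)});"
    )


# Assumed workload: joins on customer_id, filters that lead with sale_date.
ddl = build_create_table(
    "sales",
    [
        ("sale_id", "BIGINT"),
        ("customer_id", "BIGINT"),
        ("sale_date", "DATE"),
        ("amount", "DECIMAL(12,2)"),
    ],
    dist_key="customer_id",
    sort_keys=["sale_date", "customer_id"],
)
print(ddl)
```

Passing `sort_style="INTERLEAVED"` switches the sort key type; as noted above, that option is a poor fit when the leading column is a timestamp or another steadily increasing value.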

3. Apply compression encodings

Amazon Redshift provides various compression encodings to reduce storage size and improve queries by minimizing disk I/O (input/output). Compression is applied at the column level as data is loaded into tables.

With ENCODE AUTO, the default configuration, Redshift automatically chooses the compression encoding for each column based on data patterns.

In practice, the best encoding depends on the data type and value distribution, so ENCODE AUTO is the recommended starting point for its ease of use and efficiency.

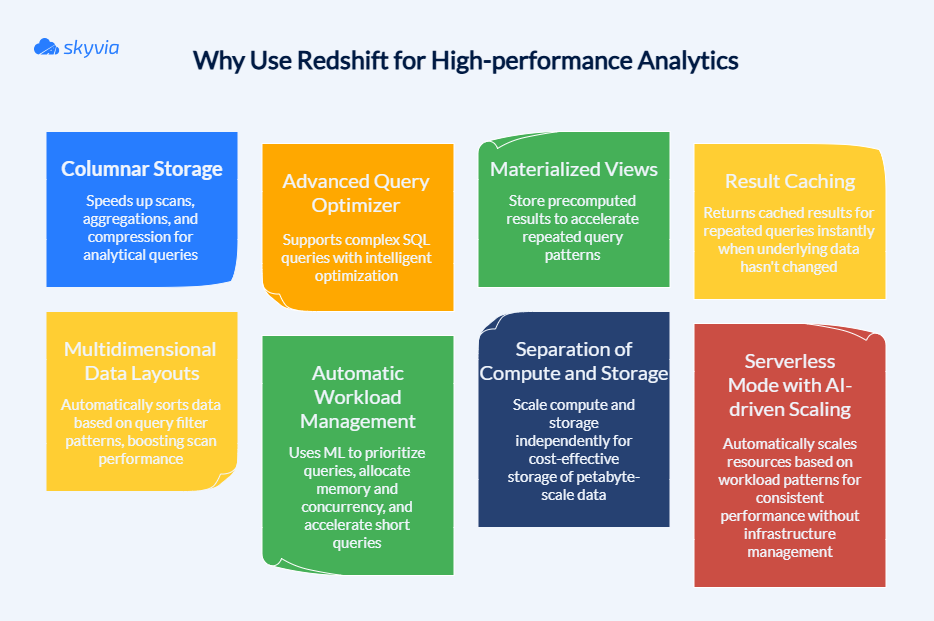

Modernize further with ra3 managed storage, materialized views, result caching, and a healthy VACUUM/ANALYZE cadence. Monitor WLM (workload management) queues for concurrency contention.

Deep Dive: Amazon S3 (The Universal Data Lake)

What is it?

Amazon S3, aka Simple Storage Service, is a highly scalable, web-based object storage service. With a simple user interface, it is capable of keeping nearly unlimited volumes of data, organized as objects inside buckets, and users can retrieve it on demand.

- S3’s pay-as-you-go model lets it handle everything from current data to deep archives, so it is often used as the foundation for data lakes, backups, archives, and content distribution systems.

- It is designed for 99.999999999% (11 nines) durability and, since 2020, has provided strong read-after-write and list consistency for new objects, overwrites, and deletes across all Regions.

- Amazon S3 provides data encryption at rest and in transit as well, and is compliant with various industry standards, including HIPAA and GDPR.

Core Concepts

At its foundation, S3 champions versatility in both data storage and access patterns:

- Object storage vs. file/block storage: Classic storage solutions either construct hierarchies or fixed blocks, whereas object storage saves data as objects with associated metadata and a unique identifier. That makes S3 ideal for handling enormous repositories of unstructured or semi-structured data.

- Buckets and objects: Buckets are the root containers, and objects are the actual files, plus metadata and version history. Together, they form a flat namespace that scales effortlessly.

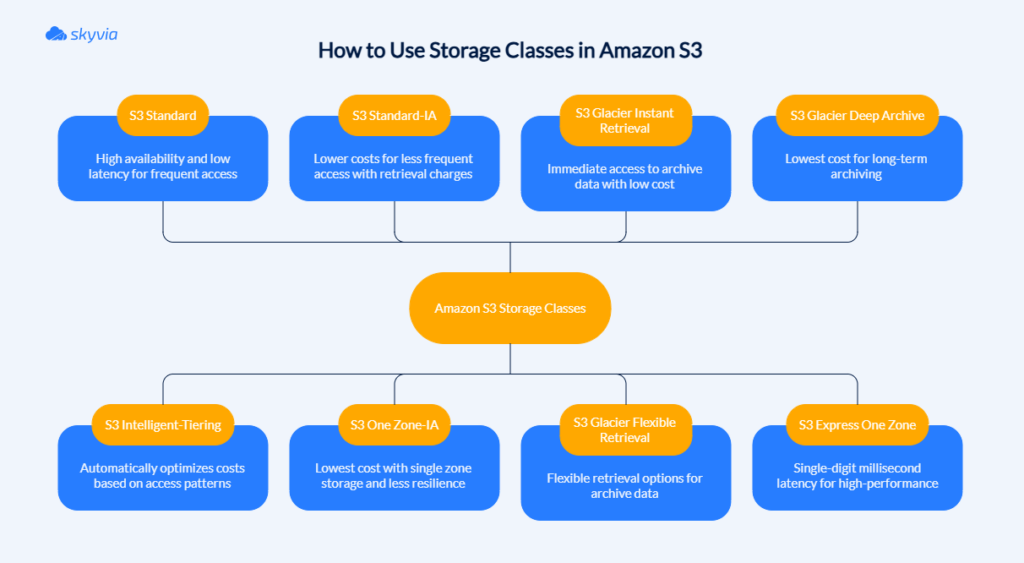

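- Storage classes: S3 features a tiered ecosystem built to balance your budget against access needs, from Standard and Intelligent-Tiering through the Infrequent Access classes to the Glacier archive tiers.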

Thanks to these strategic and tactical features, teams can sculpt their workflows to perfection, and no storage walls will be hit in the process.

Limitations

The S3 rose has its thorns as well:

- Object size ceiling: A single PUT tops out at 5 GB, while a multipart upload supports objects up to 5 TB. Use multipart for anything 100 MB or larger so you aren’t stuffing an elephant through a keyhole as files grow.

- Metadata constraints: Each object carries at most 2 KB of user-defined metadata. Not exactly spacious if you’re trying to cram in detailed descriptions, though you can keep rich context in catalogs like Glue or Lake Formation.

- Bucket boundaries: Users get up to 10,000 general-purpose buckets per account, with higher quotas available on request. Bucket names need to be globally unique – good luck snagging “data” or “backup” at this point.

- Request rate reality: S3 handles at least 3,500 write (PUT/COPY/POST/DELETE) and 5,500 read (GET/HEAD) requests per second per prefix, but you’ll need smart key naming to spread the load across prefixes. Poor design can bottleneck performance fast.

- Feature-specific API limits: Newer features bring their own quotas. S3 Vectors, for example, caps request payloads at 20 MB and 500 vectors per call, so larger batches have to be split across calls.

- Consistency caveats: Reads after new writes, overwrites, and deletes are strongly consistent within a Region, but cross-region replication is asynchronous, so replicas in other Regions can briefly lag behind the source bucket.
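The object size limits above translate into a small planning calculation. This Python sketch decides between a single PUT and a multipart upload and picks a part size. The 5 GB, 5 TB, and 100 MB figures come from the list above; the 10,000-part maximum and 5 MiB minimum part size are S3's documented multipart limits; the function name and the 64 MiB preferred part size are assumptions made for illustration.

```python
import math

MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MAX_OBJECT_SIZE = 5 * TIB          # multipart ceiling cited above
MULTIPART_THRESHOLD = 100 * MIB    # "use multipart for anything 100 MB or larger"
MIN_PART_SIZE = 5 * MIB            # S3 minimum for every part except the last
MAX_PART_SIZE = 5 * GIB            # S3 maximum part size
MAX_PARTS = 10_000                 # S3 maximum number of parts per upload


def plan_upload(size_bytes, preferred_part_size=64 * MIB):
    """Return (strategy, part_size, part_count) for an object of size_bytes."""
    if size_bytes <= 0 or size_bytes > MAX_OBJECT_SIZE:
        raise ValueError("object size must be between 1 byte and 5 TiB")
    if size_bytes < MULTIPART_THRESHOLD:
        return ("single_put", size_bytes, 1)
    # Smallest part size that still fits the whole object into 10,000 parts.
    needed = math.ceil(size_bytes / MAX_PARTS)
    part_size = min(max(MIN_PART_SIZE, preferred_part_size, needed), MAX_PART_SIZE)
    return ("multipart", part_size, math.ceil(size_bytes / part_size))


for size in (50 * MIB, 1 * GIB, 5 * TIB):
    print(size, plan_upload(size))
```

In practice an SDK's transfer utilities usually make this choice for you; the sketch only shows where the limits bite, for example that a 5 TiB object cannot use 64 MiB parts without exceeding the part count.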

Key Considerations

Using S3 well often comes down to a few smart habits:

- Know your latency profile

S3 is a data repository frontrunner rather than a split-second database, so expect measured rather than instantaneous responses. It reaches peak performance when artfully coordinating bulk data transfers through parallel processing.

Quick tip: When high-speed transactional demands tighten up the screws, use services like DynamoDB or in-memory caching solutions.

- Watch data transfer costs

Storage is affordable, but moving data isn’t always. Outbound transfers, cross-region replication, and frequent GET/PUT requests can quietly inflate your bill. Use lifecycle policies to archive rarely used data and consolidate requests where possible.

- Use the right storage class

Storage classes define how objects are stored, their durability and availability, and, of course, how much the storage will cost. The list of classes is quite long: Standard, Intelligent-Tiering, Standard-IA, One Zone-IA, Glacier Instant Retrieval, Glacier Flexible Retrieval, Glacier Deep Archive. So, use the cheat sheet from above to find what classes suit your workflow best.

- Lock down access

Buckets are private by default, but it’s always a good idea to check. Prioritize applying IAM roles and bucket policies over broad ACLs (Access Control Lists). Also, switch on server-side encryption (SSE-S3 or SSE-KMS). Make those compliance officers happy. Block Public Access is your safety net.

- Use monitoring tools

When logs stay quiet, you might feel too confident. Use S3 Storage Lens and CloudWatch to catch usage patterns before they cause costs to spike. Start monitoring right away.
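The lifecycle and storage class habits above can be written down as a lifecycle configuration. This Python sketch builds one as a plain dictionary in the shape the S3 API expects; the `logs/` prefix, the day counts, and the helper name are assumptions for illustration, and actually applying it requires an AWS SDK and credentials, which are left as a comment.

```python
import json


def build_lifecycle_config(prefix, ia_after_days=30, glacier_after_days=90,
                           expire_after_days=None):
    """Build an S3 lifecycle configuration that tiers, then optionally expires, objects."""
    if ia_after_days < 30:
        # S3 requires objects to be at least 30 days old before moving to Standard-IA.
        raise ValueError("Standard-IA transition needs at least 30 days")
    if glacier_after_days <= ia_after_days:
        raise ValueError("Glacier transition must come after the Standard-IA transition")
    rule = {
        "ID": f"tier-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        if expire_after_days <= glacier_after_days:
            raise ValueError("expiration must come after the last transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}


config = build_lifecycle_config("logs/", expire_after_days=365)
print(json.dumps(config, indent=2))

# Applying it (needs boto3 and AWS credentials; bucket name is a placeholder):
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=config)
```

Scoping the rule to a prefix keeps hot data in Standard while older log objects drift to cheaper tiers on their own schedule.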

Head-to-Head Comparison: The Deciding Factors

Time to put Redshift and S3 under the microscope. We will discuss cost, security, and performance – cornerstones for any intelligent decision.

Cost Comparison

Pricing is often the factor that outweighs everything else.

Redshift

- Costs hinge on cluster size, node type, and uptime. Provisioned (ra3) clusters are billed per node-hour, with managed storage billed separately. Serverless is billed for the compute used and scales automatically.

- Advanced features like concurrency scaling may incur extra charges; however, AWS includes free credits that cover many workloads.

- Reserved instances soften the blow with discounts for long-term commitments on steady workloads.

S3

- Pricing is refreshingly simple: you pay for what you store, starting around $0.023 per GB/month on Standard storage.

- Access costs are layered on top – every GET, PUT, COPY, or LIST request, as well as data transfers and retrievals.

- The free tier includes 5GB of storage and limited requests, but at scale, request and transfer charges can add up. Lifecycle policies and tiered storage classes (like Glacier) are the go-to levers for keeping S3 bills under control.

Now, let’s examine what happens when these seemingly straightforward numbers and pricing models meet the complexity of actual use cases and cease being abstract.

- Storing 1 TB in S3 Standard typically lands in the tens of dollars per month before access costs.

- Running frequent, complex analytics in Redshift can translate into meaningful daily compute spend depending on concurrency and node sizing.

- Serverless Redshift might optimize spend for unpredictable workloads, but could be pricier for sustained high usage.
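The arithmetic behind these scenarios is simple enough to sketch. The S3 rate below is the $0.023 per GB-month figure quoted above; the Redshift node-hour price and node count are placeholder assumptions, not quoted prices, so check current AWS pricing for your Region and node type before relying on the result.

```python
S3_STANDARD_PRICE_PER_GB_MONTH = 0.023  # figure quoted above; varies by Region and volume


def s3_monthly_storage_cost(stored_gb, price_per_gb=S3_STANDARD_PRICE_PER_GB_MONTH):
    """Storage-only cost per month; requests and data transfer are billed on top."""
    return stored_gb * price_per_gb


def redshift_daily_compute_cost(node_count, price_per_node_hour, hours_per_day=24):
    """Provisioned compute cost per day; managed storage is billed separately."""
    return node_count * price_per_node_hour * hours_per_day


# 1 TB expressed as 1,024 GB.
s3_cost = s3_monthly_storage_cost(1024)

# Placeholder rate for illustration only, not a quoted AWS price.
ASSUMED_NODE_HOUR_PRICE = 3.26
redshift_cost = redshift_daily_compute_cost(2, ASSUMED_NODE_HOUR_PRICE)

print(f"S3 Standard, 1 TB: ${s3_cost:.2f} per month")
print(f"Redshift, 2 nodes always on: ${redshift_cost:.2f} per day")
```

With these inputs, 1,024 GB × $0.023 comes to about $23.55 per month for S3, while the always-on cluster costs more than that every day, which is why pausing idle clusters or keeping cold data in S3 matters.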

Redshift – you pay for compute time (ra3 node-hours or Serverless RPU-seconds) plus managed storage. S3 – you pay per GB stored, plus requests and data transfer. Use Spectrum or Athena (a serverless interactive query service that allows users to analyze data directly stored in Amazon S3) to keep Redshift’s hot data small and scan cold data on demand.

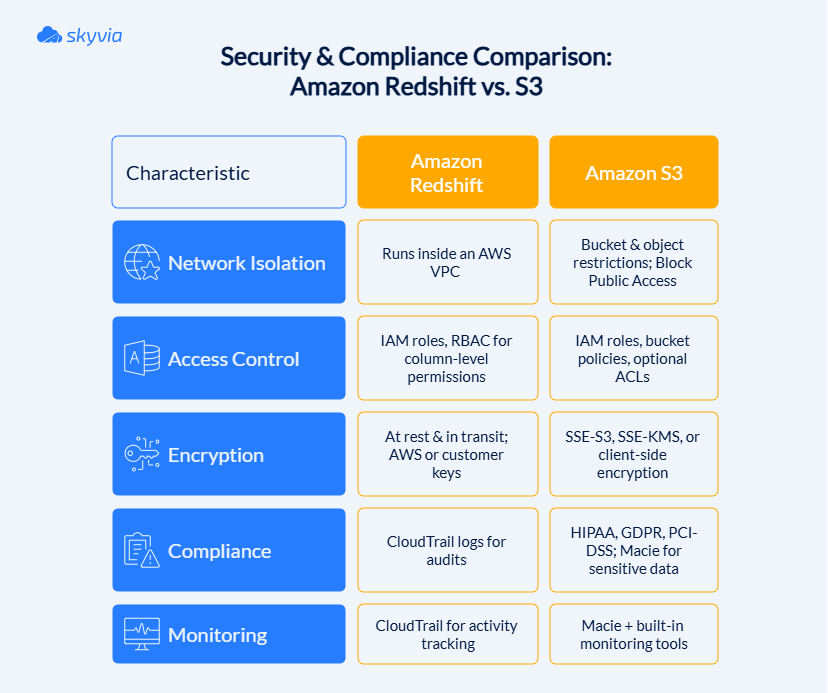

Security & Compliance

Both services take security seriously but apply it differently.

Redshift

- Private VPC isolation with security groups.

- IAM roles and policies handle user permissions.

- Role-based access control, or RBAC, allows for fine-grained management down to the individual column level.

- Whether your data is in transit or at rest, encryption protects it with AWS-managed or customer-managed keys.

- CloudTrail records every adjustment and touch for compliance monitoring.

S3

- Bucket-level and object-level controls are the foundation of the security model.

- Access is governed by IAM roles, bucket policies, and optional ACLs.

- Encryption options include SSE-S3 (AWS’s standard handling), SSE-KMS (your managed keys), and client-side encryption.

- Your oops-prevention barrier is S3 Block Public Access, which supports compliance with HIPAA, GDPR, PCI-DSS, and other regulations.

- Intelligent systems, such as Amazon Macie, automatically detect sensitive information for verticals with a governance focus.

Performance & Scalability

Here’s where the two services part their ways entirely.

Redshift

Built for interactive SQL analysis of structured and semi-structured data. Its distributed compute architecture and columnar storage deliver low-latency analytics on vast datasets.

Growth happens naturally: add nodes for more performance, or use Concurrency Scaling to spin up temporary clusters during demand bursts. Elastic resize and pause/resume controls keep costs in check when workloads swing unpredictably.

S3

The strength of S3 isn’t query performance. It’s throughput and scale. It handles virtually unlimited storage and thousands of requests per second. Although S3 isn’t known for its lightning reflexes, it becomes a practically bottomless source of queryable data when connected to services like Redshift Spectrum or Athena.

Choose Redshift when fast, complex, structured analytics with SQL are a must. Choose S3 when flexibility and low-cost storage of diverse data types are your top priorities. Choose both when you’re looking for the strengths of inexpensive storage at scale with powerful, high-performance analytic querying.

| Factor | Amazon Redshift | Amazon S3 |
|---|---|---|
| Primary Role | Cloud data warehouse for SQL analytics | Cloud object storage for data lakes, backup, and archiving |
| Pricing Model | Compute-based: cluster size, uptime, storage billed separately. Serverless billed per second of compute | Storage-based: per GB/month + costs for requests and data transfer |
| Example Costs | 1 TB storage + 100 queries/day ≈ $100+/day (provisioned cluster) | 1 TB storage ≈ $23/month (excl. access/transfer) |
| Security | VPC isolation, IAM policies, RBAC, encryption in transit/at rest, CloudTrail logging | Bucket policies, IAM roles, ACLs, encryption options (SSE-S3, SSE-KMS), compliance with HIPAA/GDPR |
| Performance | High-speed SQL queries with MPP and columnar storage; supports concurrency scaling | High throughput object retrieval; latency is higher, optimized for scale, not queries |
| Scalability | Scales by adding nodes or enabling serverless/concurrency scaling | Virtually unlimited storage capacity; thousands of requests per second |
| Best Fit | Interactive analytics, BI, and reporting on structured/semi-structured data | Cost-effective, durable storage for any data type, staging ground for analytics |

The “Better Together” Strategy: A Modern Data Architecture

Trying to pit Redshift against S3, users quickly conclude that they’re unstoppable when they work side by side. Why limit yourself when you can lean into an alliance? Let’s see how these two make sure your data is both safe in the vault and sharp on the dashboard.

S3 as the Source of Truth

All of the unstructured, raw, messy, and semi-structured data finds its initial home on Amazon S3. It is essentially an impenetrable fortress for bits and bytes due to its “eleven nines” of durability. Many businesses use S3 as their primary data lake, a single, immutable source of truth, due to its inexpensive storage costs and expansive capacity.

Anything can land here – clickstream logs, sensor feeds, and partner CSVs – before getting processed and sent along the pipeline.

Redshift for High-Performance Analytics

Redshift was built to satisfy companies’ needs for analytics on large datasets. Its architecture and features are geared toward fast, scalable, and cost-effective workloads. Here’s what it brings:

- Handles petabytes of data with fast query response times.

- Offers, according to AWS, up to 3x better price-performance than other cloud warehouses.

- Provides both provisioned and serverless options for workload elasticity.

- Supports SQL with familiar tools and BI integrations.

Separation of duties is the secret behind financial efficiency that doesn’t lead to losing muscle needed for dashboards, reports, and deep-dive analysis. Companies use S3 for the raw data, transferring only refined datasets to Redshift.

Introducing Redshift Spectrum

Redshift Spectrum lets you query data stored in S3 directly from Redshift. Spectrum does the heavy lifting; all you have to do is define external tables on top of S3 objects.

You are only charged for the quantity of data that is scanned. Frequently queried data stays in Redshift, while colder data sits in S3, still queryable when needed, no duplication required.
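Defining external tables on top of S3 objects comes down to two statements: an external schema that points at a data catalog, and an external table that points at an S3 location. The Python sketch below builds both as strings. The schema, database, table, bucket, and role names are placeholders, and Parquet with a single date partition is an assumed layout rather than a requirement.

```python
def build_external_schema(schema, catalog_database, iam_role_arn):
    """Return a CREATE EXTERNAL SCHEMA statement backed by the AWS Glue Data Catalog."""
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema}\n"
        "FROM DATA CATALOG\n"
        f"DATABASE '{catalog_database}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "CREATE EXTERNAL DATABASE IF NOT EXISTS;"
    )


def build_external_table(schema, table, columns, partition_columns, s3_location):
    """Return a CREATE EXTERNAL TABLE statement for Parquet files under s3_location."""
    if not s3_location.startswith("s3://"):
        raise ValueError("s3_location must start with s3://")
    column_sql = ",\n".join(f"    {name} {sql_type}" for name, sql_type in columns)
    partition_sql = ", ".join(f"{name} {sql_type}" for name, sql_type in partition_columns)
    return (
        f"CREATE EXTERNAL TABLE {schema}.{table} (\n{column_sql}\n)\n"
        f"PARTITIONED BY ({partition_sql})\n"
        "STORED AS PARQUET\n"
        f"LOCATION '{s3_location}';"
    )


# Placeholder identifiers; replace with your own role, database, and bucket.
ROLE_ARN = "arn:aws:iam::123456789012:role/ExampleSpectrumRole"

schema_sql = build_external_schema("spectrum_lake", "lake_db", ROLE_ARN)
table_sql = build_external_table(
    "spectrum_lake",
    "clickstream",
    [("event_id", "VARCHAR(64)"), ("user_id", "BIGINT"), ("url", "VARCHAR(2048)")],
    [("event_date", "DATE")],
    "s3://example-bucket/clickstream/",
)
print(schema_sql)
print(table_sql)
```

Because billing follows the data scanned, partitioning by a column that queries filter on (a date here) and storing files in a columnar format are the usual ways to keep each query's scan small.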

Data Migration Between Redshift and S3

This dynamic duo of operations makes data flow between S3 and Redshift feel effortless:

- COPY (S3 → Redshift): Parallelizes data movement from S3 into Redshift tables through multiple threads. It supports varied file formats while distributing processing weight across computing nodes, avoiding sluggish data transfers.

- UNLOAD (Redshift → S3): Dumps query results into S3, breaking them into chunks across different files to boost mobility. Supports various export types like CSV, Parquet, and compressed versions, with encryption thrown in for security. Ideal for backing up analytical findings or feeding data pipelines.
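For the S3 → Redshift direction, a COPY statement can be sketched as follows. The Python helper below only assembles the SQL text; the table, bucket, and role ARN are placeholders, and the helper name and its limited set of options (Parquet or CSV, optional header skipping and gzip) are simplifications chosen for illustration.

```python
def sql_literal(value):
    """Quote a string as a SQL literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_copy(table, s3_prefix, iam_role_arn, file_format="PARQUET",
               gzip=False, ignore_header=0):
    """Return a Redshift COPY statement that loads files under s3_prefix into table."""
    file_format = file_format.upper()
    if file_format not in ("PARQUET", "CSV"):
        raise ValueError("this sketch only handles PARQUET and CSV")
    if not s3_prefix.startswith("s3://"):
        raise ValueError("s3_prefix must start with s3://")
    lines = [
        f"COPY {table}",
        f"FROM {sql_literal(s3_prefix)}",
        f"IAM_ROLE {sql_literal(iam_role_arn)}",
        f"FORMAT AS {file_format}",
    ]
    if file_format == "CSV":
        if ignore_header:
            lines.append(f"IGNOREHEADER {ignore_header}")
        if gzip:
            lines.append("GZIP")
    elif gzip or ignore_header:
        # Header and compression options belong to text formats, not Parquet.
        raise ValueError("GZIP and IGNOREHEADER apply to CSV, not PARQUET")
    return "\n".join(lines) + ";"


ROLE_ARN = "arn:aws:iam::123456789012:role/ExampleCopyRole"  # placeholder

print(build_copy("sales", "s3://example-bucket/staging/sales/", ROLE_ARN))
print(build_copy("sales", "s3://example-bucket/staging/sales_csv/", ROLE_ARN,
                 file_format="CSV", gzip=True, ignore_header=1))
```

Pointing COPY at a prefix that holds many similarly sized files, rather than one large file, is what lets the compute nodes share the load in parallel.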

Now, the Redshift → S3 how-to:

- Grant IAM role with S3 permissions; attach to cluster.

- Choose format (CSV/Parquet), compression, and partitioning.

- Run UNLOAD ('SELECT…') TO 's3://bucket/prefix/' IAM_ROLE 'arn:…' FORMAT AS PARQUET PARTITION BY (…), then verify manifests.

- (Optional) Encrypt with SSE-KMS, enable manifest, and test Athena/Glue catalog.
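The UNLOAD steps above can be sketched as a small Python helper that assembles the statement. The query, bucket, role ARN, and KMS key ID are placeholders, and the helper name is hypothetical. One detail worth showing: the query is passed to UNLOAD as a quoted string, so any single quotes inside it have to be doubled.

```python
def sql_literal(value):
    """Quote a string as a SQL literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_unload(query, s3_prefix, iam_role_arn, partition_by=(),
                 manifest=False, kms_key_id=None):
    """Return a Redshift UNLOAD statement that writes query results to S3 as Parquet."""
    if not s3_prefix.startswith("s3://"):
        raise ValueError("s3_prefix must start with s3://")
    lines = [
        f"UNLOAD ({sql_literal(query)})",
        f"TO {sql_literal(s3_prefix)}",
        f"IAM_ROLE {sql_literal(iam_role_arn)}",
        "FORMAT AS PARQUET",
    ]
    if partition_by:
        lines.append(f"PARTITION BY ({', '.join(partition_by)})")
    if manifest:
        lines.append("MANIFEST")
    if kms_key_id:
        # Optional SSE-KMS encryption with a key you manage.
        lines.append(f"KMS_KEY_ID {sql_literal(kms_key_id)}")
        lines.append("ENCRYPTED")
    return "\n".join(lines) + ";"


ROLE_ARN = "arn:aws:iam::123456789012:role/ExampleUnloadRole"  # placeholder

unload_sql = build_unload(
    "SELECT * FROM sales WHERE region = 'EU'",
    "s3://example-bucket/exports/sales/",
    ROLE_ARN,
    partition_by=["sale_date"],
    manifest=True,
)
print(unload_sql)
```

After a run, listing the target prefix and reading the manifest is a quick way to confirm that every expected file landed before pointing Athena or a Glue crawler at it.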

Combined, COPY and UNLOAD maintain uninterrupted data movement, transforming S3 and Redshift from competing tools into synchronized allies, fluidly passing information to make sure data remains fresh, protected, and ready for insights.

How Skyvia Simplifies Redshift and S3 Integrations

While Amazon’s native integration tools get the job done, they might leave you puzzled and overwhelmed when the equation has more than two variables. A dead end? Not quite.

The Challenge

Using native methods to manage data connections between Amazon Redshift and S3 becomes quite a production. Although the COPY and UNLOAD commands provide basic functionality, they force you into a manual process that includes scheduling, script building, and ongoing error handling.

Any data migration requires careful file preparation, complex command execution, and cross-system synchronization. Add automated retries and integrity monitoring, and the procedure clearly demands a dedicated team of data engineers to carry out properly.

The Solution

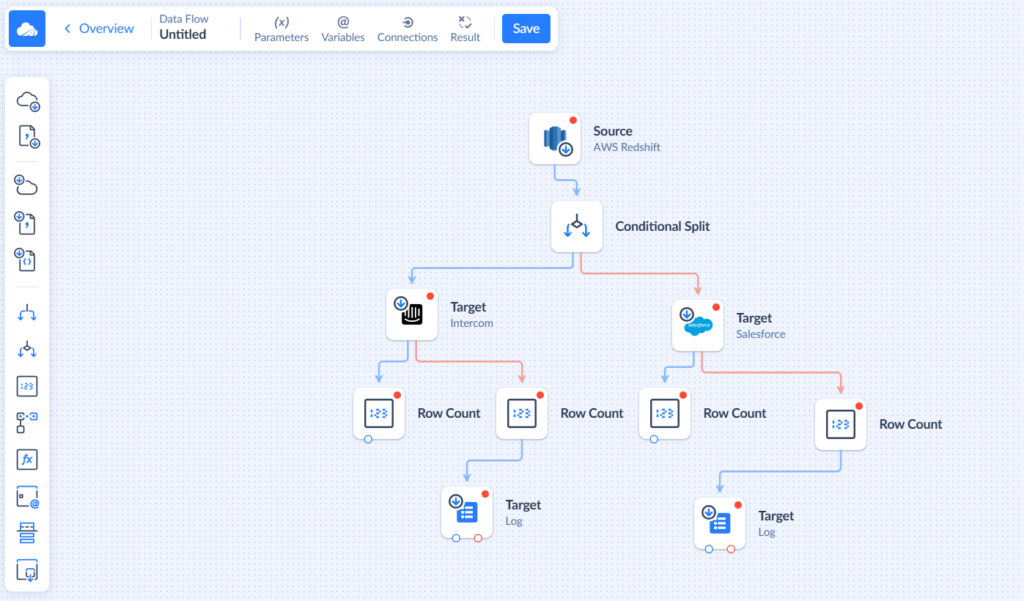

Skyvia transforms intricate ETL/ELT pipelines into visual, no-code processes. Your potent, personalized data transformations may look like this:

It extends Redshift–S3 flows across your wider stack with 200+ prebuilt connectors, scheduling, retries, alerts, and transformations without scripting.

The platform also offers multiple scenarios for various workflow needs:

| Skyvia Scenario | Description | Redshift to S3 Integration | Redshift/S3 to Other Platforms |
|---|---|---|---|
| Import | One-way data import, upsert mode for incremental loads | Simple batch loading from S3 to Redshift or vice versa | Basic incremental imports from SaaS or databases into Redshift/S3 with minimal processing |
| Export | One-way export to target, scheduled | Regular exports from Redshift to S3 (CSV/JSON) for archival or downstream use | Automate data export from Redshift/S3 to BI tools, file storage, or other systems |
| Replication | Near real-time replication/snapshot | Replicate data between Redshift and other DBs, with S3 as staging | Continuous replication from SaaS or databases into Redshift, with S3 as intermediate storage |
| Synchronization | Bi-directional sync, automated record detection | Keeps Redshift and S3 in sync with incremental loads; minimal transformation | Continuous sync between Redshift, S3, and systems like CRMs, ERPs, or marketing platforms |
| Data Flow (the image above the table) | Advanced ETL/ELT, custom SQL, row-level loading | Best for row-level incremental updates, CDC, and transformations between Redshift and S3 | Ideal for multi-step workflows and integrating Redshift, S3, and other apps |

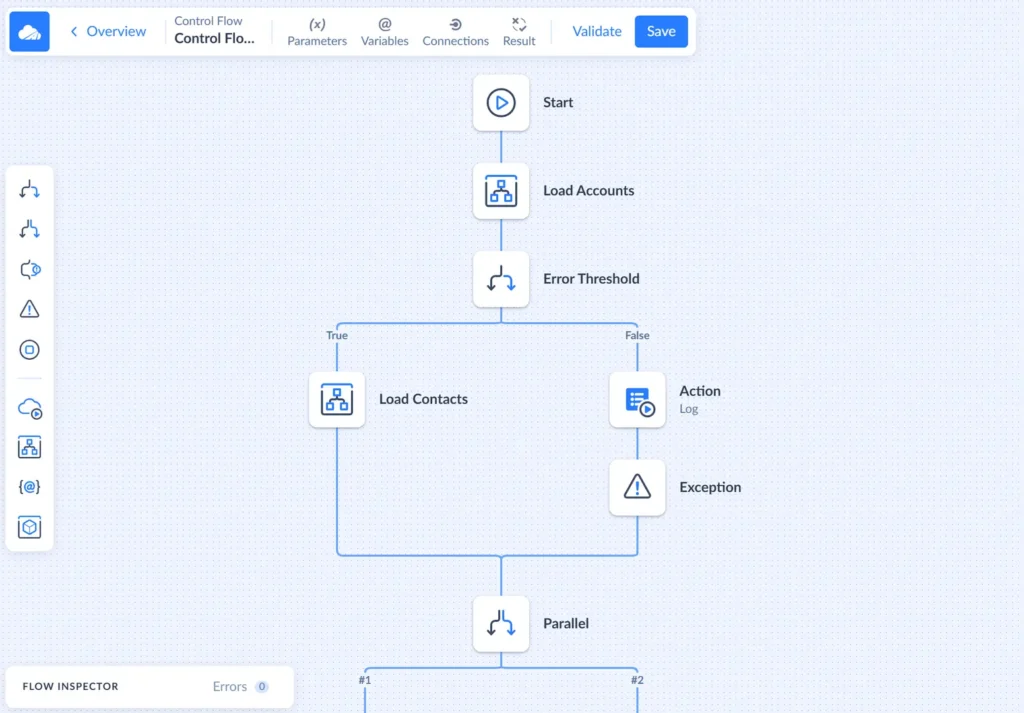

Then there’s Control Flow, the heavy artillery of orchestration. This tool manages and automates multiple data flows and integration tasks in a set sequence or based on conditions. It is especially useful when integrating Redshift and S3 data exchanges with other platforms or when automation is needed beyond a single data operation.

Key Benefits

Skyvia offers several advantages beyond addressing basic integration challenges:

- Visual workflow builder: Design complex pipelines using intuitive point-and-click wizards.

- Automated scheduling: Smoothly carries out data activities in the background while a team attends to urgent issues.

- Error logging and notifications: Catch and address system problems while they are still easy to manage.

- Flexible data mapping and transformations: Adapt content for compatibility with a target, including relationship mapping, schema drifts, and row-level transforms.

- Bi-directional data sync: Keep Redshift, S3, and apps continuously aligned.

Market Context: Redshift & S3 Alternatives

There are cases when Redshift and S3 can’t completely satisfy your needs. For example:

- You have extraordinarily complex data requirements.

- You run a small to medium-sized business or startup and want something gentler on the budget.

- Your enterprise prefers a multi-cloud or hybrid ecosystem.

This section is for those who, after the comparison, have realized they may need something else.

Data Warehouses

Snowflake

Snowflake has built its reputation on being cloud-agnostic, which means you won’t be locked into one ecosystem. Instead, you’re free to choose from AWS, Azure, and Google Cloud.

Redshift and Snowflake play completely different games. If you don’t like that Redshift uses traditional clusters and requires manual tuning and maintenance, then you can try Snowflake, with its storage and computation separation and fully managed automation for most optimization tasks. Many enterprises (Rakuten, for example) lean on it for isolated workloads where ease of use matters most.

Google BigQuery

BigQuery takes serverless to heart. You don’t spin up clusters, you just write queries, and Google does the rest. The price tag depends on the volume of data your queries scan plus storage, which turns efficient query design into tangible cost control.

With built-in streaming ingestion and smooth connections to tools like Looker and Google Sheets, BigQuery fits naturally into Google’s ecosystem. Industry leaders like Twitter and Toyota use it for real-time analytics and ML workloads where rapid response and massive scale drive success.

Azure Synapse Analytics

As part of the Microsoft analytics suite, Synapse works well with the rest of Azure. It provides serverless, pay-as-you-go options in addition to provisioned compute (the traditional data warehouse model). Its drag-and-drop pipelines and more than 100 connectors bring big data, machine learning, and warehousing under one roof.

| Feature | Redshift (AWS) | Snowflake | BigQuery (Google) | Synapse (Azure) |
|---|---|---|---|---|
| Deployment | AWS only; provisioned clusters or serverless | Multi-cloud | Serverless, multi-cloud | Serverless + provisioned |
| Scaling Model | Coupled on dc2/ds2; independent on ra3 and serverless | Compute & storage separated | Fully decoupled | Both options |
| Streaming | Kinesis Firehose | Snowpipe (add-on) | Built-in streaming | Spark ingestion |
| Semi-structured | JSON via SUPER type | Native JSON/Avro | SQL supports JSON | T-SQL + PolyBase |
| Pricing | Cluster/hour + storage | Per-second + storage | Per-query + storage | On-demand/reserved |

Object Storage

Google Cloud Storage (GCS)

GCS offers durability and scalability on par with S3. Its storage classes span a range of price points to match your data needs and budget. Strong global availability, IAM-based security, and tight integration with Google’s analytics and AI stack make it a solid choice for anyone already invested in BigQuery or Vertex AI.

Azure Blob Storage

Azure Blob Storage manages unstructured data throughout the Azure ecosystem. It offers access tiers from Hot to Archive for cost optimization, along with geo-redundant replication options.

The service integrates smoothly with Azure Data Lake, Synapse, and Power BI, easing adoption in Microsoft-centered ecosystems. For developers, it exposes multiple access methods (from REST APIs to Hadoop-compatible drivers), broadening its application scope well beyond analytics-only purposes.

| Feature | S3 (AWS) | GCS (Google) | Blob Storage (Azure) |
|---|---|---|---|
| Durability | 11 nines | 11 nines | 11 nines |
| Storage Tiers | Standard, IA, Glacier | Standard, Nearline, Coldline | Hot, Cool, Archive |
| Integration | AWS ecosystem | BigQuery + AI tools | Synapse, Power BI, ML |
| Access Control | IAM, bucket policies | IAM-based | Azure AD, RBAC |
| Best Fit | Universal data lake | Google-centric cloud | Microsoft ecosystems |

If object storage is the core need, take a look at GCS or Azure Blob. If you need an analytics warehouse, weigh all the pros and cons of Snowflake/BigQuery/Synapse for your case.

Real-World Examples & Use Cases

Redshift and S3 power the data engines of today’s most successful companies. For example, Yelp uses Redshift to run complex analytical queries over vast amounts of reviews and images to provide personalized suggestions.

For Pinterest, S3 is a foundation for managing storage for its visual discovery engine. And Netflix uses S3’s content delivery capabilities, which perfectly integrate with their analytics to store and transport vast volumes of video footage throughout the world.

Now, let’s examine how these technologies are being used in industries that need flexibility blended with reliability and scalability.

E-commerce

Data is the deciding factor in the e-commerce game. Here’s how companies are turning Amazon Redshift and S3 into their secret weapons for transforming messy data into money-making insights.

A social e-commerce platform based in Turkey noticed that its user base was growing faster than its ability to manage it.

They used Amazon Redshift to create a unified data warehouse and, as a result, they were also able to resolve several side quests related to their previous inability to handle large datasets, such as improved activity and sales trends analytics, as well as disaster recovery patterns.

Zalando, the top fashion and lifestyle online platform in Europe, set a goal – to minimize data silos and give its 400+ teams easier access to datasets.

They created a 15 PB data lake on Amazon S3, which is now a source of insights that improve customer experiences. As a nice and impressive bonus, Zalando also cut storage costs by up to 40% a year.

Gaming

There are approximately 3.32 billion active gamers right now, roughly 40% of the world’s population. That’s an exhausting amount of data to manage daily. On top of that, gaming companies are responsible for protecting that data and also need to use it for growth and producing new video games.

Naturally, they need storage that can handle both. Redshift and S3 definitely belong to this category, and here’s how businesses use them:

An Indian gaming giant relies on Amazon Redshift for handling its growing analytics needs. Using Redshift Data Sharing, they’ve cracked the code on allowing various teams to share the same data treasure chest without creating duplicate keys for everyone. Redshift Spectrum simplifies processing and storage by letting the team access S3’s data vault directly.

Another gaming giant traded its old Hadoop infrastructure for Amazon’s cloud platform, using S3 as the digital storage where mountains of player data from blockbuster titles like FIFA and Madden can be hoarded.

Versatile storage tiers and classes help to keep costs at the level planned in the annual budget, while data is easy to access, use, and orchestrate.

Finance

What are three things financial organizations can’t live without? Undeniably, data security, compliance, and real-time analytics are among the demands they share.

Even if you find two out of three in some storage platform, the stakes are still too high to risk. That’s why Redshift and S3 are so deeply loved by many institutions.

When Nasdaq decided its data infrastructure needed a serious upgrade, it went all-in with AWS, pairing Amazon Redshift with S3. Now they’re processing a mind-boggling 70 billion daily records while keeping their trading platforms working seamlessly even during the wildest market swings.

Decoupled compute and storage are key because Nasdaq can scale each resource separately when volatility strikes. Both internal analysts and external regulators benefit from faster regulatory compliance and more efficient operations.

This financial services heavyweight migrated from a legacy system to Amazon Redshift. The result was immediate – infrastructure costs got cut in half while performance increased due to easier access to data. The fact that its business units can now use machine learning on their data is another advantage.

They used S3 as both a consolidated data lake and a temporary staging area, and relied on AWS migration tools during the transfer. Redshift’s data protection features keep their private information secure, meeting compliance requirements and providing decision-makers with the analytical tools they need to handle the current unstable financial environment.

Conclusion

It turns out the real plot twist wasn’t picking sides in the Redshift-S3 debate, but discovering these two make an unbeatable team when they join forces.

Here’s what we’ve uncovered:

- Redshift shines when you need fast SQL queries and real-time analytics on structured data – a go-to, speedy solution for queries.

- S3 dominates the storage game with its rock-solid durability and wallet-friendly pricing for any data type – a reliable vault that never judges people’s hoarding habits.

- Cost strategy matters: Redshift bills for compute power and uptime, while S3 keeps it simple with storage-based pricing plus access fees.

- The “better together” approach wins big – use S3 as a data lake foundation and Redshift as an analytics powerhouse, connected through tools like Redshift Spectrum.

However, your data ecosystem probably doesn’t stop at just AWS. When Redshift and S3 need to interact with Salesforce, MySQL, Google Analytics, or any of the 100+ other systems in your tech stack, native integration tools can trap you with complex scripts and manual processes.

If you’re expanding beyond AWS, add an integration layer that doesn’t leave you babysitting scripts. Try Skyvia to orchestrate Redshift-S3 pipelines and connect them to Salesforce, MySQL, Google Analytics, you name it – no code.