Going through disparate systems that somehow never seem to match up to answer even a single question? Welcome to the Wild West of data silos. That is exactly why data warehouses aren’t just nice-to-have anymore; they’re mission-critical now.

But here’s the wrinkle: data warehouse systems design and implementation in 2026 isn’t your grandfather’s ETL pipeline. Right here, right now, data storage and business intelligence scripts are being rewritten by cloud native solutions, real-time processing demands, and smart AI analytics.

This guide will walk you through steps and strategies for building a data warehouse that delivers on its promises. Let’s set a foundation that can endure all tasks.

Table of Contents

- What is Data Warehouse Implementation?

- Key Components of Data Warehouse Design

- Data Warehouse Implementation Methodologies

- Best Practices for Data Warehouse Design and Implementation

- Data Warehouse Tools and Technologies

- Common Challenges in Data Warehouse Implementation

- Conclusion

What is Data Warehouse Implementation?

It’s the structured process of transforming an organization’s chaos of records into a centralized system, a single source of truth. You’re literally building a digital library where every book (data) is properly cataloged, easily findable, and ready to tell its story.

In this case, design and implementation are two interconnected processes, and their order is best followed. Design is like blueprinting a dream house. Implementation is breaking ground, pouring the foundation, and turning those blueprints into a system that people can walk into and use.

Swap them up, and you’re stuck in limbo during the whole construction.

Neglect the first one, and limbo will become your personal synonym for data warehouse (DWH).

Let’s see how it works in real life. A retail company has to deal with input from

- E-commerce platform.

- Brick-and-mortar POS systems.

- Inventory management.

- Customer service tools.

That’s a lot to juggle. So, they need a DWH, where everything will be consolidated. During the design phase, they determine how all this information should flow together:

- Which tables connect to which?

- How can customer data from different touchpoints be unified?

- What reporting structures make sense?

The implementation phase is where the rubber meets the road; it’s the bridge between having data and having actionable business intelligence:

- They build actual pipelines.

- Set up the servers.

- Write the code.

- Make sure everything works together.

If everything is done correctly (instructions are coming), analysts can stop playing referee between warring databases. Instead, they can spend their brainpower on pattern recognition and opportunity hunting, rather than engaging in endless reconciliation battles.

Key Components of Data Warehouse Design

Let’s break down the five critical layers that can create harmonious business intelligence.

| Layer | Main Purpose | Key Functions | Supporting Technologies |

|---|---|---|---|

| Data Ingestion Layer | Acquire raw records from diverse sources | Extraction, initial checks, batch/real-time data ingestion | ETL/ELT tools, data ingestion frameworks, API connectors |

| Data Transformation Layer | Cleanse and convert raw data into an analytic-ready form | Validation, enrichment, modeling, staging area | ETL/ELT pipelines, staging databases, transformation frameworks |

| Data Orchestration Layer | Coordinate and automate pipelines | Scheduling, monitoring, error handling, workflow logic | Workflow orchestration tools |

| Data Governance and Quality Layer | Maintain data trustworthiness and compliance | Data quality checks, lineage, security, metadata | Data catalogs, quality tools, IAM/security platforms |

| Destination Layer | Store and serve integrated data for consumption | Storage, indexing, OLAP, user access, dashboards | Relational DBMS, Cloud warehouses, OLAP engines |

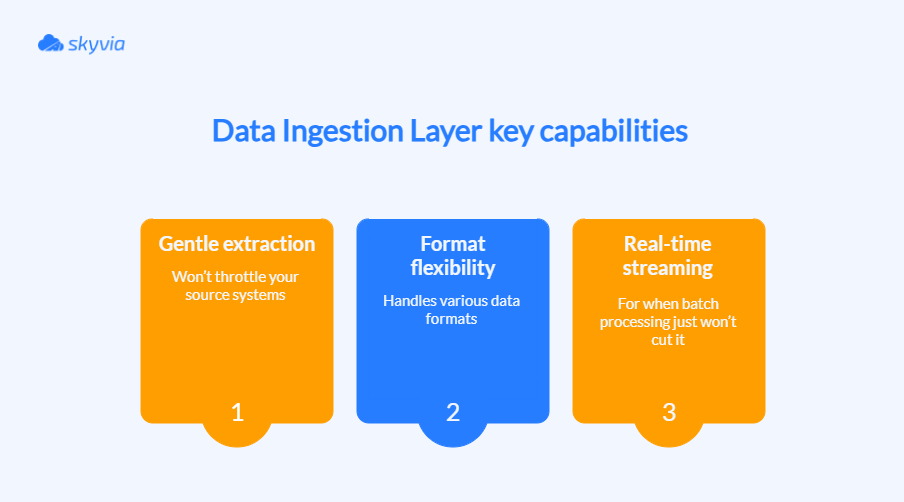

Data Ingestion Layer (Data Sources)

It’s a data customs checkpoint, where information immigrants arrive speaking different languages – this can include transactional databases (OLTP), ERP/CRM systems, IoT devices, external APIs, files (CSV, JSON), and logs.

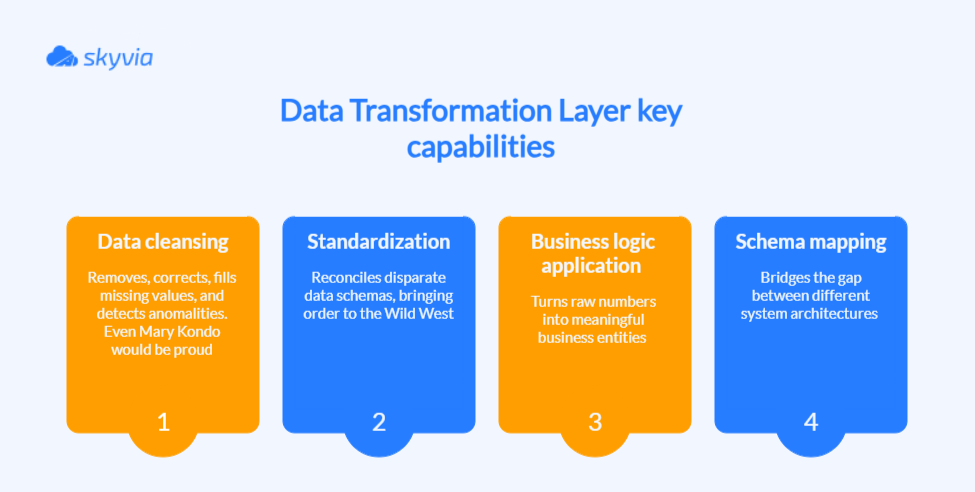

Data Transformation Layer

Raw input walks in looking rough around the edges, and leaves polished, standardized, and ready for its close-up in analytics dashboards.

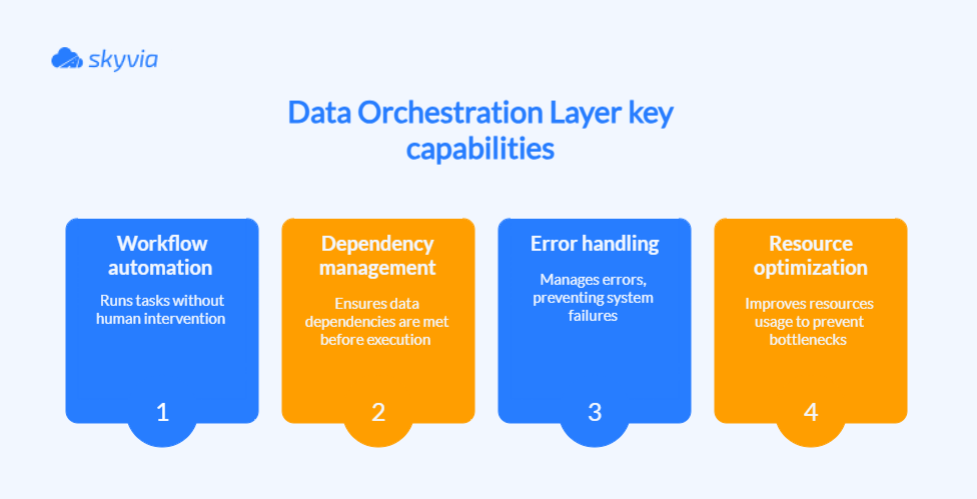

Data Orchestration Layer

In the background, this hero keeps your data flowing smoothly, rather than letting it spiral into a flash mob chaos.

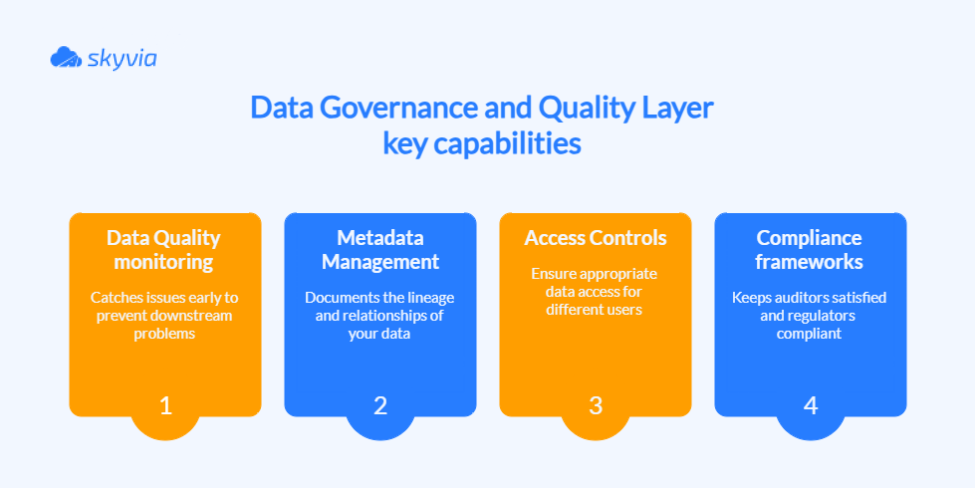

Data Governance and Quality Layer

How do you know your customer count is accurate? Who changed that critical calculation last month? Why does the marketing team’s revenue number never match finance’s? These are the questions that governance layers are built to answer.

Destination Layer

It is where your carefully ingested, transformed, orchestrated, and governed data finally gets to shine bright like a diamond.

Data Warehouse Implementation Methodologies

With the architecture in hand, it’s time to focus on how you will transport records from point A to point B. Think of these methodologies like delivery services: some race against the clock, others play it safe with quality control, and some are designed for those “drop-everything” rush orders.

Let’s dive into the three approaches that dominate modern data warehousing.

| Methodology | Best For | Key Benefits | Notable Challenges |

|---|---|---|---|

| ETL (Extract, Transform, Load) | On-premises/data marts | Data quality before storage, compliance | Latency, complexity at scale |

| ELT (Extract, Load, Transform) | Cloud warehouses/big data | High scalability, flexible, fast load | Data governance, transformation inside DW |

| CDC (Change Data Capture) | Real-time ETL/streaming/replication | Freshness, lower impact on sources, efficient sync | Integration, error handling, schema changes |

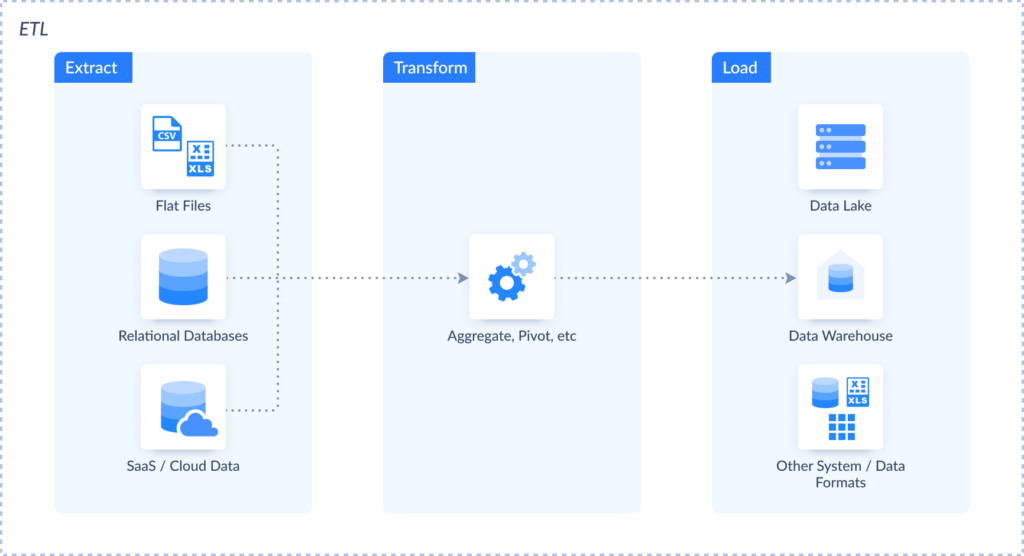

ETL (Extract, Transform, Load)

It carefully extracts data from your source systems without causing a ruckus. Then comes the transformation phase. Duplicates are deleted, formats are standardized, data is validated against business rules, and enriched with additional context. Only then does it earn a spot in the warehouse.

Your DWH establishes itself as a citadel of verified, high-quality information, with transformation processes serving as discerning data stewards. This comprehensive curation approach, however, involves performance considerations.

ETL operations can be more time-intensive and resource-hungry, especially when processing substantial datasets that can test the limits of transformation server capabilities.

ELT (Extract, Load, Transform)

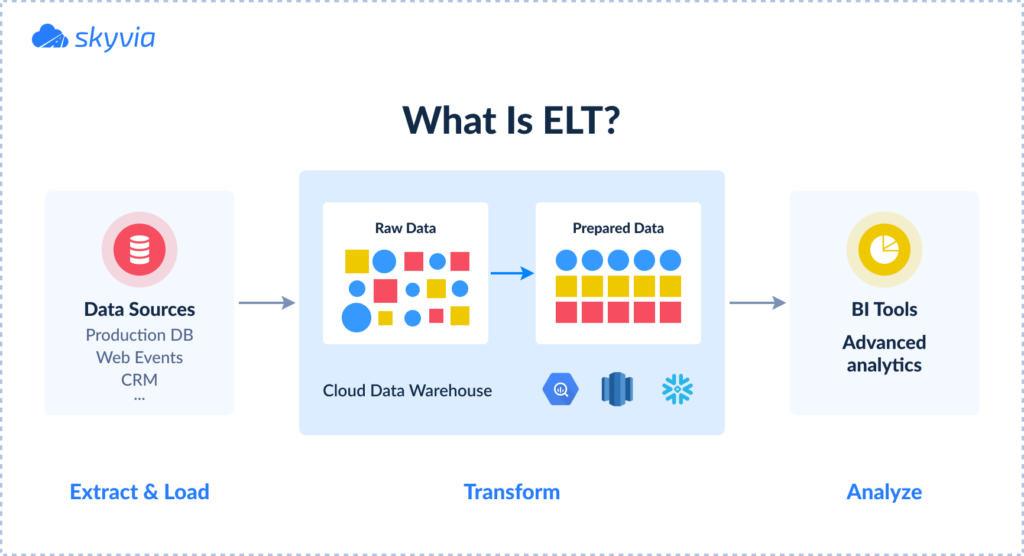

It’s ETL’s younger, more agile sibling, the one who moved to the cloud and discovered the power of parallel processing. Instead of transforming data before it enters the warehouse, ELT flips the script: it extracts data from sources with minimal fuss, loads everything raw into your cloud warehouse, then lets the warehouse’s compute power handle all the transformations.

The trade-off? Your warehouse temporarily becomes a bit like your garage. Everything gets dumped in first, then organized later. That means you need proper governance and security within your warehouse itself, as raw, potentially sensitive data is hanging around before transformation.

Change Data Capture (CDC)

Instead of the traditional “dump and reload” approach, CDC is more precise. It continuously tracks changes, picks up only what’s new or modified, and rushes it to the warehouse.

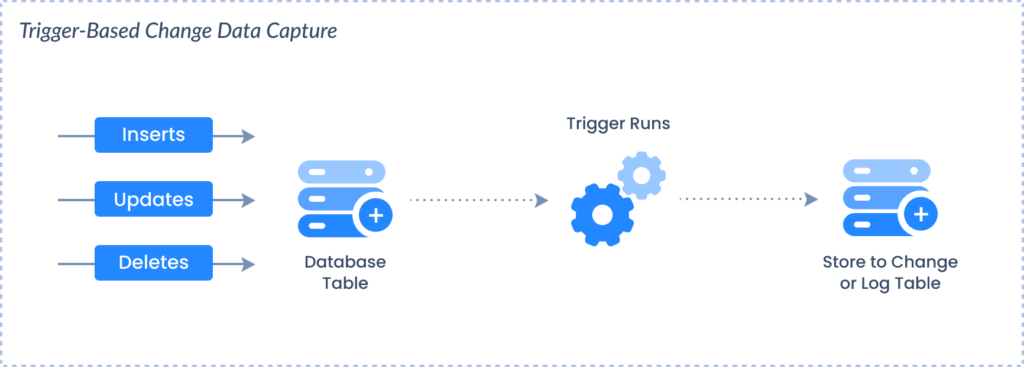

It can be of different kinds: trigger-based, log-based, timestrap-based, and snapshot-based. Here’s how the first one works (and if you’re curious about more examples, we’ve got a whole deep-dive article on the CDC waiting for you):

CDC’s superpowers include:

- Log-based monitoring that reads database transaction logs with minimal impact.

- Delta-only transmission that reduces network traffic and processing overhead.

- Real-time synchronization that keeps your analytics current with operational reality.

- Source system preservation that doesn’t bog down your production databases.

Best Practices for Data Warehouse Design and Implementation

Is building DWHs complex? Absolutely. Demanding? Without question. Yet you’re not a lonely pilgrim here. The path is pretty wide by now, and here’s a guide to light it.

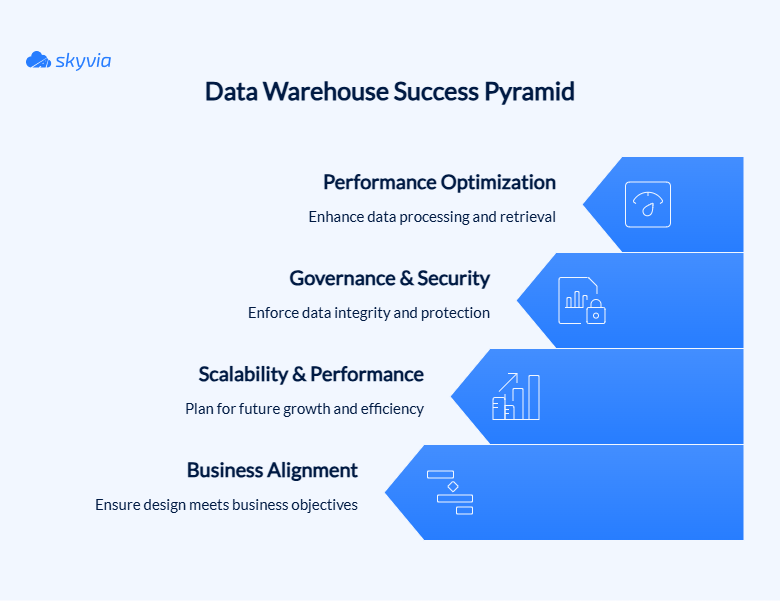

Align Data Warehouse Design with Business Goals

DWH must address real business questions; otherwise, it’s only a fancy accessory that brings no real assistance. Launch with clear business priorities in focus: your key success measurements, critical decision requirements, and operational headaches that strategic data can put an end to.

That means designing schemas around what’s useful, not just what is possible or easier. Also, no matter what schema you choose, keep your business needs at the heart of your design.

If possible, engage both business and IT teams in the process. A cross-functional team can collaborate to build a system that accommodates everyone’s needs.

Plan for Scalability and Performance

Asking and answering the right questions are part of the design stage. The main one here is: How much data will you have in a year, and then in five years? You may underestimate the power of efficient indexing, partitioning, and physical storage while the data is relatively small.

But once it reaches a significant size and you need to run a query, some doubts and suspicions might arise. Better to architect for tomorrow’s reality today.

Implement Data Governance and Security Measures

Engine running, so it’s time to protect what matters most: the data.

- Implement Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC).

- In addition to these, layer encryption, audit trails, and compliance measures such as GDPR and HIPAA.

Now, only the right people access the correct data, and it’s being used responsibly.

Optimize Data Warehousing Performance

By now, a DWH resembles a highway, and like every highway, even an expertly designed one, can experience gridlock without active traffic management.

- Begin with continuous query monitoring and tuning (it’s like having traffic cameras at every interchange to spot slowdowns before they become full-scale jams).

- Effective partitioning, indexing strategies, and aggregation functions are the traffic infrastructure. It’s comparable to having dedicated lanes, proper signage, and efficient on-ramps – every piece of data knows precisely where to go without causing congestion.

- Incremental loading and CDC are intelligent traffic routing. Rather than shutting down the entire highway for construction, redirect traffic around work zones, keeping data flowing while making necessary updates.

- Cloud elasticity provides variable capacity, adding express lanes during rush hour and scaling back to standard capacity during off-peak times.

- Automated orchestration and error handling become an innovative traffic management system, adjusting signals and routing automatically to maintain optimal flow without human intervention.

Voila! Every participant and every data snippet move without ever turning in the wrong direction or causing others distress, regardless of the tasks assigned.

Data Warehouse Tools and Technologies

The transformation from fragmented sources to coherent warehouse models demands more than hope and good intentions.

One side of the coin – data warehouse implementation methods – we’ve already covered. Now, let’s flip it to storm-tested tools that make the difference between data pros and data woes.

ETL/ELT Tools

Both ETL and ELT have their place in modern data architectures. Platforms like Skyvia don’t force you to pick sides – they support both approaches, letting you choose what makes sense for each specific use case. Additionally, Skyvia offers reverse ETL.

The most significant shift in the ETL/ELT landscape isn’t just about where; it’s about who. No-code platforms have democratized integration, putting powerful capabilities into the hands of business users who shouldn’t need to become data analysts just to move data around, while giving data analysts the ability to sculpt and transform data as they see it.

Skyvia exemplifies this flexibility:

- Simple or complex multi-source data gymnastics – either way, 200+ supported connectors make it possible.

- Through the Data Flow component’s visual interface, even sophisticated transformation scenarios become drag-and-drop exercises.

- Control Flow functionality steps in for heavy orchestration work, managing workflow complexity through conditional logic, error handling, and dependency chains.

- CDC means you can keep a warehouse synchronized with source systems in near real-time, while its scheduling and automation features ensure pipelines run reliably without constant monitoring.

Data Modeling Tools

Doing things is more tempting than theorizing. Many teams jump to build dashboards, but without a solid data model underneath, it’s all sandcastles. Here are the tools to avoid and address this lack of integrity:

- erwin Data Modeler offers a comprehensive approach to logical and physical modeling, allowing you to design data structures at a conceptual level and then automatically generate the actual database schemas.

- ER/Studio delivers enterprise-grade capabilities with a knack for taming complex, multi-platform setups. Whether you’re dealing with cranky legacy systems or sleek cloud analytics, cross-platform modeling keeps everything consistent.

- Also, there’s dbt, which can do something brilliant – merge data modeling directly into the transformation layer. Instead of using separate documentation that’s always outdated, you define semantic models right in your transformation code. Suddenly, your docs are executable, and models are testable.

Those who aren’t ready or don’t have enough resources for formal data modeling tools can try analytics or DWH platforms that provide schema management and have simple visual schema builders, like Zoho Analytics, Airtable, Amazon Redshift, or Snowflake, to start somewhere.

Now, to the challenges where these tools can’t help – collaboration. Data models created in isolation often don’t reflect the actual needs of business users, leading to endless rounds of revisions and frustrated stakeholders. Here are several tools designed to bring everyone and everything to the same table:

- SQLDBM addresses this by moving the entire modeling process to the cloud, enabling real-time collaboration between technical and business teams.

- Dataedo takes a different approach by focusing on documentation and data cataloging. It recognizes that in many organizations, the biggest challenge isn’t creating new data models – it’s understanding and documenting the models you already have.

- Another frustrating modeling issue is semi-structured and NoSQL data, which traditional tools weren’t designed to handle. Hackolade steps into this gap by supporting not just relational models, but also document databases, graph structures, and even JSON schemas.

Common Challenges in Data Warehouse Implementation

If DWH implementation were easy, every organization would have pristine, perfectly organized data. The reality? The statistics are very sobering – DWH projects have notoriously high failure rates (50% to 80%), not because the technology isn’t capable, but because the challenges are multifaceted and often underestimated. Understanding them upfront isn’t pessimistic – it’s strategic.

Let’s solve them one by one.

- Diverse input: The nightmare isn’t source diversity, it’s source incompatibility. These systems were built in isolation, like houses designed by architects who never met. Your CRM has one vision of reality, your ERP has another, marketing tools march to their own drummer, and custom apps? Pure chaos.

Solution: Use ETL to create a judgment-free zone where raw data can land in its original, quirky format before undergoing transformation. That gives you crucial discovery time to understand what you’re dealing with before making permanent schema commitments. Skyvia dominates this space by handling 200+ sources while its visual Data Flow component previews exactly how your disparate data streams will merge into warehouse perfection.

- Schema shuffle: Schema mismatches are like data’s cruel joke on sanity. You burn through hours playing matchmaker between “John Smith,” “J. Smith,” and “Smith, John” – three ways of saying the same thing that your systems treat as completely different people. It’s not just formatting chaos; it’s conflicting business logic that can blow up your entire analytics strategy.

Solution: Build for inevitable evolution instead of fighting it. Tools like Dataedo become your change historians, tracking modifications systematically while letting old and new schemas coexist during messy transition periods. Embrace flexibility from the start – schema-on-read data lakes and NoSQL patterns that bend without breaking when data shapes shift unexpectedly. CI/CD practices catch schema drift before it becomes a downstream disaster. Pair this with columnar storage in Snowflake and BigQuery, and schema management transforms from roadmap-killing emergencies into boring maintenance tasks.

- Real-Time conundrum: The real-time dream crashes into infrastructure reality fast. Your sources batch overnight, APIs choke under pressure, and legacy systems treat CDC like kryptonite.

Solution: Don’t rush to upgrade tech when honest conversations around timing needs can help. Does every metric really need instant updates, or can you get away with refreshing hourly? Sometimes the smartest move is showing stakeholders that “good enough” real-time solves 90% of their problems. Also, Skyvia’s scheduling and automation capabilities can help orchestrate the process, so data flows as frequently as the source systems allow without overwhelming them.

- Quality control: Data quality challenges are often just the visible symptom of deeper business process rot that’s been ignored for years. Careless data entry, missing validation controls, and conflicting departmental definitions all contribute to the quality catastrophe you’re inheriting.

Solution: Winning teams make quality a priority from the start (automated validation, profiling, clear exception handling) while also investigating the dynamics that create the issue, because fixing the technical mess without addressing why it happened just sets you up for repeat failures.

- Query issues: DWHs suffer from their own success in the cruelest way possible. Build something that works, and users will inevitably break it by asking harder questions and running wilder queries until a once-smooth system starts wheezing under pressure. Scaling compute is the easy part; predicting when your cloud bill will explode because someone casually cross-joined two massive tables is where things get interesting.

Solution: Smart scalability management starts with obsessive observation. Build transparency into query patterns, resource consumption, and user habits so you can spot trouble brewing instead of scrambling after everything’s already on fire.

- Ever-growing storage: A little secret about data growth: it’s never linear, always exponential, and perpetually surprising. Your modest pilot project starts innocently generating daily gigabytes, then quietly escalates to monthly terabytes while you’re busy with other fires. Storage capacity is just the warm-up. Lifecycle management is where decisions about retention, archiving, and aggregation create cascading cost and performance effects that are hard to fix later.

Solution: With Skyvia, you can choose the source that matches your budget and organize data traffic from it to other tools and vice versa without selling some data engineer or analyst’s kidney.

- Security and compliance: Building something simultaneously impenetrable and accessible may feel like architectural schizophrenia. Managing row security and data masking across massive table counts with complex user hierarchies turns every new integration into a permissions puzzle. Then regulations like GDPR crash the party, transforming simple data deletion into a multi-system engineering odyssey. The final twist? Building audit systems comprehensive enough to satisfy regulators without creating performance-killing log mountains.

Solution: Build security into your DNA. Establish end-to-end data visibility and flexible access management that evolves with your business, while maximizing cloud-native security tools that do the grunt work automatically. For GDPR, embed data lifecycle management from the ground up – trying to bolt on compliance after launch is like installing fire exits in a burning building.

- Budget black hole: DWH costs spiral fast. You start with innocent estimates – maybe $50K for a “simple” setup – then reality crashes the party. Suddenly, you’re seeing amounts that could fund a small country’s IT budget. However, the estimate is very fluid and depends on many side factors, including ETL, storage, BI, legacy integration, consulting, etc.

Solution: Some stages and processes allow for tools that are more cost-effective, while still beneficial to the whole DWH concept. For example, use tools like Skyvia to replace costly development hell with drag-and-drop victories. With its pre-built connectors, you’re not paying developers to reinvent the wheel. You can move data from diverse sources to a consolidated system more easily and with fewer labor resources. And the CDC will save a bit more by detecting and moving only what has been changed recently.

Integration of Legacy Systems

We’ve reached the final boss of DWH implementation issues – legacy systems. The puzzle now has hieroglyphs and ancient logic. Here’s what is waiting and how to overcome it:

- Documentation dilemma: Ever tried integrating systems built by ghosts? The creators disappeared years ago, documentation is purely oral tradition, and business logic lurks in code older than the internet?

Solution: Reconstruct data relationships from database designs, decipher business logic buried in procedures, and conduct oral history sessions with anyone who remembers the old ways. It’s archaeological work, but essential for preserving decades of encoded business intelligence.

- Format time warp: Legacy systems have a special talent for using formats that seemed reasonable at the time but feel increasingly archaic as technology evolves. Fixed-width text files, proprietary binary formats, and database structures that predate modern normalization principles all need to be accommodated in your modern DWH architecture.

Solution: Avoid the temptation to completely rewrite and modernize everything because legacy systems contain subtle business rules and exception handling logic that isn’t immediately obvious. A more successful approach is to build reliable bridges between the old and new worlds, preserving the business logic while making the data accessible to modern analytics tools.

- “It Works, Don’t Touch It”: Many legacy systems fall into the category of “critical but fragile.” A business runs on them, but they’re such undocumented, cobbled-together monstrosities that making changes feels like defusing a bomb. These systems often limp on aging hardware, use outdated operating systems, and depend on software licenses that may no longer be available.

Solution: Instead of modifying legacy systems directly, you can use Skyvia to establish secure, reliable data flows that extract information without impacting the source systems’ stability.

- Migration Tightrope: Nothing tests your sanity quite like the integration period when legacy dinosaurs and shiny new systems must cohabitate peacefully. You can’t just rip off the band-aid. Operations demand continuity while you painstakingly migrate features and cross your fingers that everything actually works.

Solution:

- Start with APIs and Wrappers

Modern API layers can provide clean interfaces to legacy functionality without requiring changes to the underlying systems. Existing business logic will be preserved while data is accessible to modern integration tools.

- Embrace middleware solutions

ESB (Enterprise Service Bus) platforms and slick integration tools like Skyvia play translator at the UN of your tech stack – they smooth-talk between your crusty old mainframes and shiny new APIs, converting formats and cleaning up messes on autopilot. It’s like having a diplomatic immunity card for your data transfers.

- Phase migration carefully

Bit-by-bit deployment with validation checkpoints is like having insurance for your data journey – you can course-correct without breaking a sweat or breaking production. This way, you’re not putting all your eggs in one basket while your stakeholders are breathing down your neck.

- Use data virtualization

It provides on-demand access to legacy data without requiring full migration when this migration can’t be organized due to different factors. This “coexistence” strategy can be particularly valuable when immediate replacement isn’t feasible or cost-effective.

These obstacles are already conquered territory – other data warriors have been there, debugged that, and shipped it. So can you. A bit of creativity, planning for growth, and the right tool can do the trick to make everyone happy.

Conclusion

Every successful data warehouse started as someone’s “impossible” project. Those legendary implementations you hear or read about? They began at the same Data Wild West you’re at right now.

Organizations with working warehouses aren’t the ones with unlimited budgets or fairy-tale architectures. They’re the pragmatists who:

- Plan strategically while leaving space for side quests.

- Build incrementally.

- Choose tools that adapt when (not if) requirements change mid-flight.

Try Skyvia for free to design and implement a warehouse success story. While it can’t replace all the tools you may need, especially if you’re building something grand, it can significantly optimize the toolkit for the sake of the team that will deal with it and the budget.

F.A.Q. for Data Warehouse Systems

How do you design a data warehouse architecture?

Prioritize business outcomes over technological fireworks. Map your data’s evolution from scattered inputs to executive eye-candy, scale for tomorrow’s appetite while building today, and forge alliances between old databases and nimble cloud platforms.

What is the difference between ETL and ELT in data warehousing?

ETL is the careful curator – clean first, store later. ELT is the pragmatic hoarder – dump everything in the cloud warehouse, then sort it out with massive compute power. Both get you there; choose based on your data’s personality and cloud budget.

Why is data governance important in data warehouse implementation?

No governance means your data becomes an unruly house party – everyone’s making modifications without permission, and quality control goes out the window. It’s the line between actionable insights and million-dollar mistakes.

What are the key challenges in data warehouse design and implementation?

The antagonists: formatting, legacy system integration, instant demands versus overnight delivery, quality assurance circus acts, and expenses that breed like wildfire.

What are the best practices for optimizing data warehouse performance?

Watch queries, slice data intelligently, place indexes with precision, and choose bite-sized updates over complete overhauls.