If you’ve ever found yourself watching reports crawl along or hitting dreaded size limits that make your visualizations worth nothing, you know what pain is.

Proper data storage is the bedrock of your entire Power BI universe. Choose wisely, and you’ll be building on solid ground that can scale. Choose without weighing the pros and cons, and, well, you’re likely to go back to what we described at the beginning.

Time to find the best way to store data for Power BI. Let’s get you sorted once and for all.

Table of Contents

- Understanding Power BI’s Data Connection Modes

- Common Data Storage Options for Power BI

- Advanced Data Storage Solutions in Power BI

- How to Choose the Right Storage Option for You: A Decision Framework

- Conclusion

Understanding Power BI’s Data Connection Modes

The storage options themselves are the reason we are all gathered here today. Yet, before we feast on them, we need to discuss how Power BI connects to data – an important part of any BI strategy. Here are the five main connection modes:

| Mode | Storage & Real-Time Data | Modeling Flexibility & Performance | Sources Support | Best For |

|---|---|---|---|---|

| Import | Stored in Power BI, not real-time | High flexibility, moderate performance | Most sources | Small/medium, complex modeling |

| DirectQuery | Real-time, no local storage | Moderate flexibility, performance depends on the source | Many databases, some cloud sources | Large/real-time, security needs |

| Live Connection | No storage in Power BI, real-time | Limited flexibility, high performance | SSAS, Azure AS, Power BI Datasets | Centralized enterprise models |

| Direct Lake | Not stored in Power BI, real-time syncing | High flexibility, optimized performance | Azure Data Lake (Fabric) | Big data, lakehouse analytics |

| Composite (Dual) | Combination of storage and real-time | High flexibility, excellent performance | Mixed | Hybrid, flexible scenarios |

Import Mode

It’s Power BI’s bread and butter. It pulls all data into its highly-compressed in-memory engine called VertiPaq. Once it’s there, everything runs like a dream.

Best for: Compact to mid-sized datasets where analytical depth matters more than live streaming, requiring sophisticated modeling horsepower.

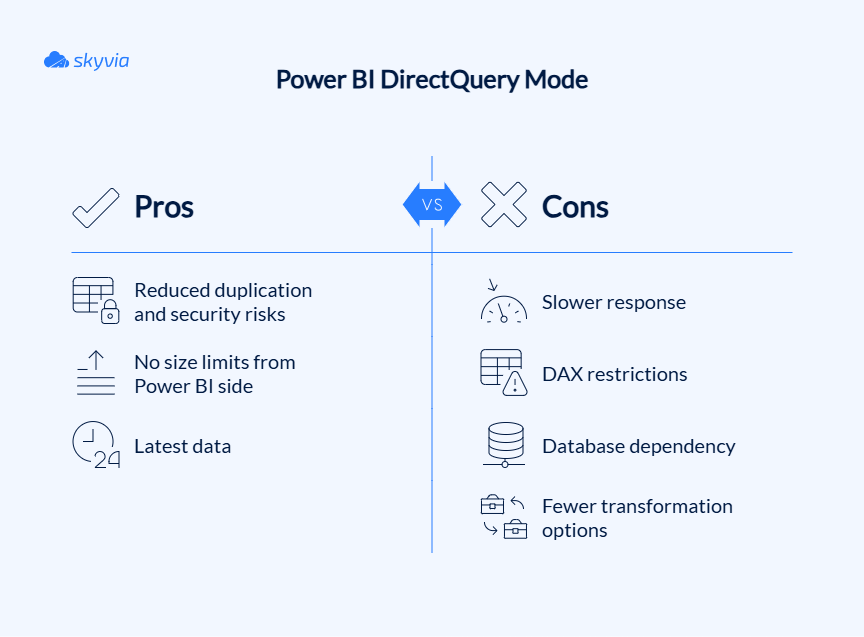

DirectQuery Mode

It summons Power BI to the data source every time someone starts a query.

Best for: Large or highly dynamic datasets where staleness is the natural enemy, or when security protocols forbid duplication.

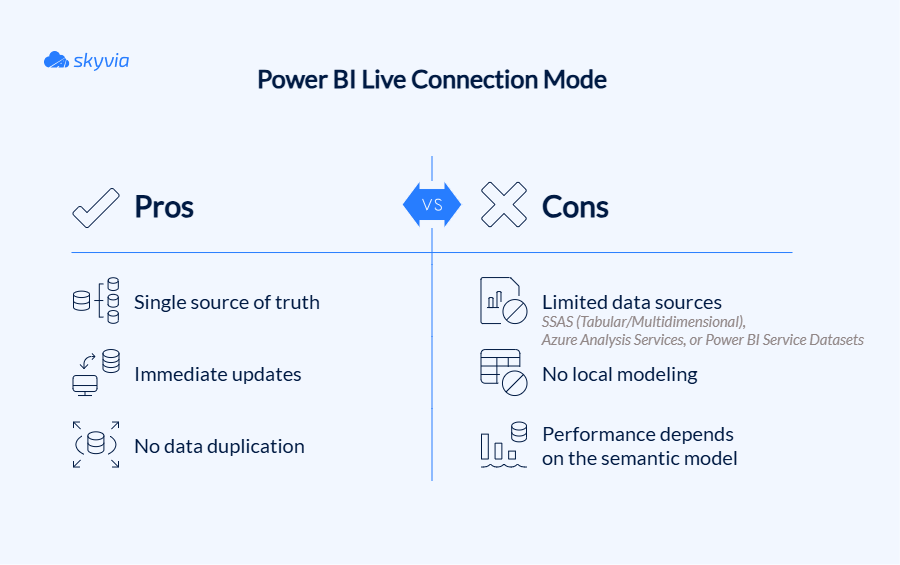

Live Connection Mode

It bypasses the raw data quarry entirely, instead letting Power BI plug straight into a ready-made interpretive layer within Power BI Service, Azure Analysis Services, or SQL Server Analysis Services (Tabular or Multidimensional).

Best for: Enterprise environments with established semantic models and strict governance requirements.

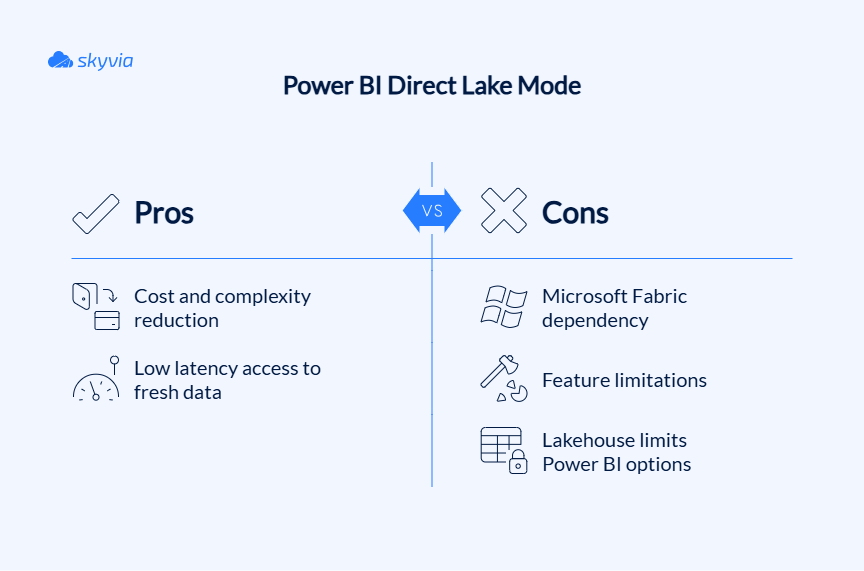

Direct Lake Mode

It’s designed specifically for Microsoft Fabric environments and promises breakneck-speed access to massive datasets in Azure Data Lake without importing or translating queries.

Best for: Organizations using Microsoft Fabric for big data analytics that need high-speed access to massive datasets.

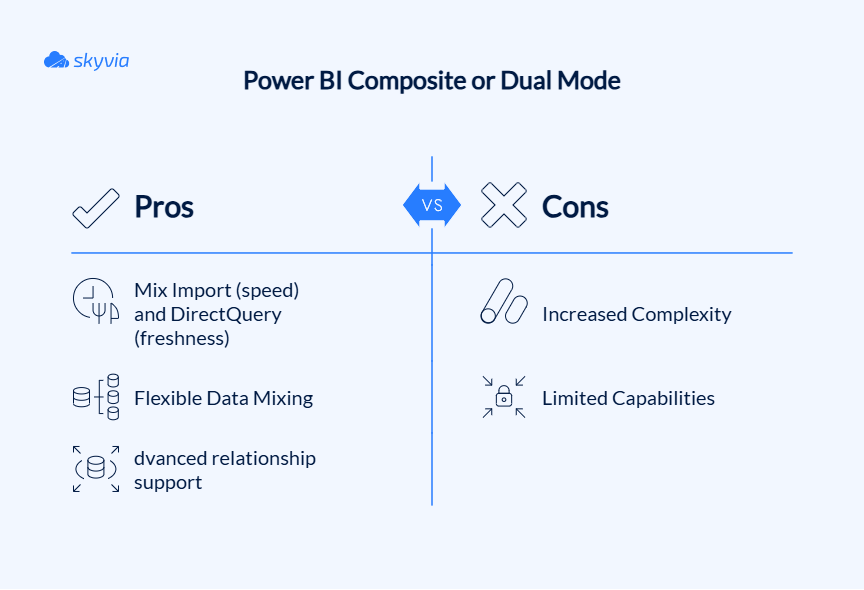

Composite Mode or Dual Mode

The name speaks for itself: it allows you to mix and match different connection modes within one Power BI model.

Best for: When you’re working with historical (for trend analysis) and real-time data (for current operations), or when you want to reduce storage costs.

Common Data Storage Options for Power BI

Finally, let’s discuss where your records reside. The storage landscape for Power BI is quite versatile, and everyone will find their cup of tea.

The beauty of Power BI lies in its source flexibility. But not all storage options are created equal, and your choice will ripple through everything from refresh schedules to user experience.

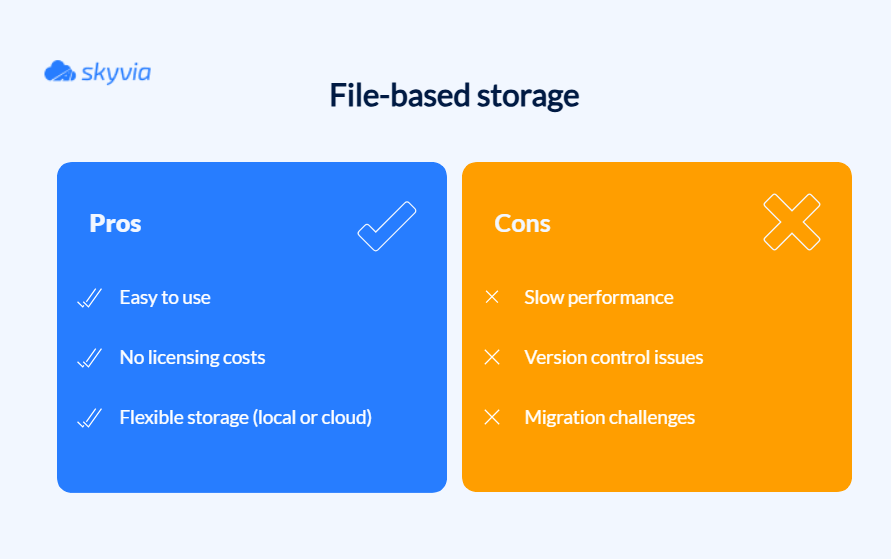

File-Based Storage (Excel, CSV, SharePoint)

This is where most analysts cut their teeth. Files are familiar, easy to understand, and perfect for getting your feet wet in the Power BI pool.

Excel files are old and reliable, sitting on virtually every business computer, and most people know how to work with them. CSV files are the universal donor of data formats – lightweight, fast to process, and compatible with practically everything. SharePoint takes these familiar formats and adds collaboration superpowers, letting teams work together without frequent follow-ups.

Best for: Small datasets, beginners who want to spring from zero to hero, and quick analyses that don’t require enterprise-level infrastructure.

File-based storage may seem a bit outdated compared to all the other options, yet it’s the alpha and omega of business information preservation. There is also a solution that makes these cons less likely to affect your operations, and those pros more impactful.

With tools like Skyvia, you can automate the ETL pipeline building. For example, it pulls records from your CRM, marketing platforms, and databases, then feeds them into SharePoint or OneDrive, where Power BI can access them.

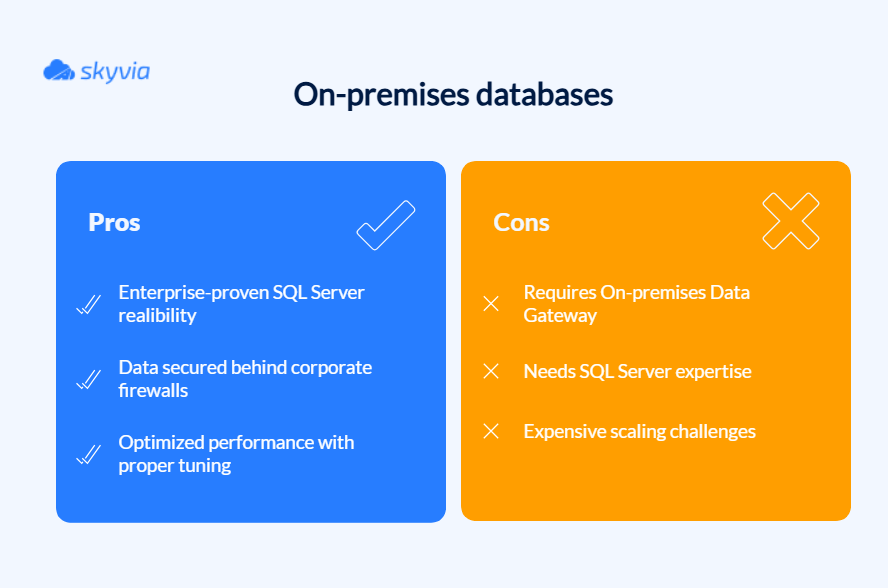

On-Premises Databases (SQL Server)

They are the backbone of many enterprise BI architectures. SQL Server is Microsoft’s flagship database that integrates with Power BI like peanut butter with jelly. It supports all connection modes, handles complex queries, and scales from small databases to enterprise data warehouses (DWH).

Best for: When organizations with existing on-premises infrastructure need more control over their data. If you’ve already got SQL Server boxes humming in your datacenter and a team that knows how to keep them happy, this path makes perfect sense.

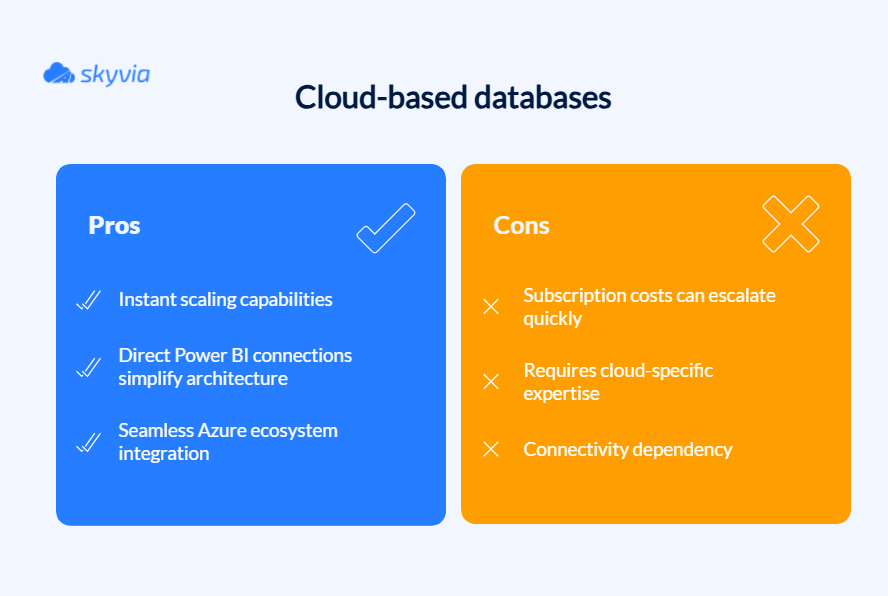

Cloud Databases (Azure SQL Database, Azure Synapse Analytics)

They represent the modern storage philosophy – scalable, elastic, and seamlessly woven into analytical ecosystems. Azure MySQL Database offers the beloved SQL Server experience with cloud scalability, automatic backups, and native intelligence. Azure Synapse Analytics can handle petabytes of data and supports both SQL and Spark workloads.

Best for: When you need to handle large and growing datasets, or build for the future, and want to access the newest analytics capabilities.

Also, storage mode and storage type correlate. So, you will need to align both of them:

| Storage Mode | File-Based Storage | On-Premises Databases | Cloud Databases | Key Characteristic |

|---|---|---|---|---|

| Import | Supported (files imported fully into the PBIX model) | Supported (data imported fully) | Supported (data imported fully) | Fastest query performance, supports full modeling, data refresh needed, size limits apply |

| DirectQuery | Limited support (mostly via cloud file formats like Parquet in Data Lake) | Supported (SQL Server, SSAS, etc.) | Supported (Azure SQL DB, Synapse, Fabric Lakehouse) | Real-time data access, limited modeling, performance depends on source, no duplication |

| Live Connection | Not applicable | Supported (SSAS models on-premises) | Supported (Azure Analysis Services, Power BI datasets) | No local storage, real-time queries, no local modeling in Power BI Desktop, centralized governance |

| Direct Lake | Supported (Parquet files in Fabric Lakehouse) | Not typical | Supported (Fabric Lakehouse, Azure Data Lake) | Near real-time analytics on large datasets, no data movement, requires a Microsoft Fabric environment |

| Composite (Dual) | Supported for dimension/reference tables from files | Supported | Supported | Combines the benefits of Import and DirectQuery, useful for composite models with mixed data freshness needs |

Advanced Data Storage Solutions in Power BI

If basic file and database storage are the foundation of a Power BI building, then Dataflows, Datamarts, and Data Fabric Warehouses are the smart home automation that makes everything work together. These advanced storage solutions are Microsoft’s answer to the age-old BI challenge: how do you create reusable, scalable, and governed data assets without turning your analysts into full-time data engineers?

As of mid-2025, Power BI Datamarts are being phased out in favor of Fabric Data Warehouse. Your current Datamarts will be lost, and all the reports that use them will break. Below, we will discuss the new player and how Skyvia can help you transfer the data to a newer, more modern house.

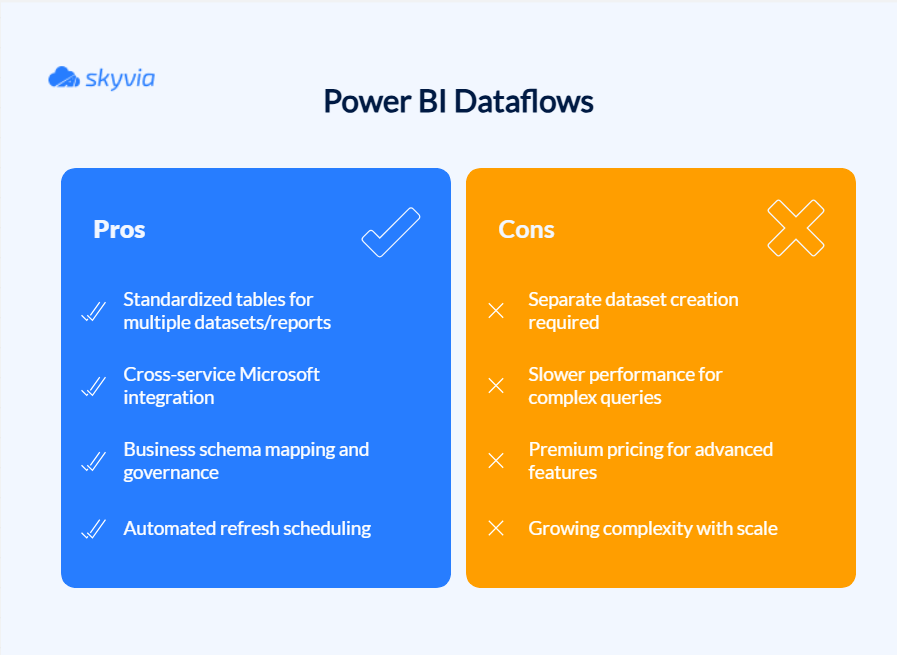

Power BI Dataflows

Think of them as a cloud, self-service ETL tool powered by the same Power Query engine that drives your beloved Power BI Desktop. But here’s the plot twist – instead of your transformation masterpiece being trapped in report silos, Dataflows set it free to roam across your entire Power BI ecosystem and other Microsoft services.

Best for: If you’ve ever found yourself thinking, “I wish I could just copy this cleaned customer table to five other reports without starting from scratch,” Dataflows are your new best friend. Also, it will help with centralizing and standardizing ETL logic for enterprise data.

Power BI Datamarts VS Power BI Data Fabric Warehouse

If Dataflows are transformation factories, then Datamarts are complete DWH solutions in a box. They combine a dataflow (for ETL), an Azure SQL Database (for relational storage), and a dataset (for semantic modeling), all wrapped up in one neat package. The only problem here is that they are available only until October 2025.

Users can migrate to Data Fabric Warehouse if they want to keep the data within the system or choose a third-party storage option.

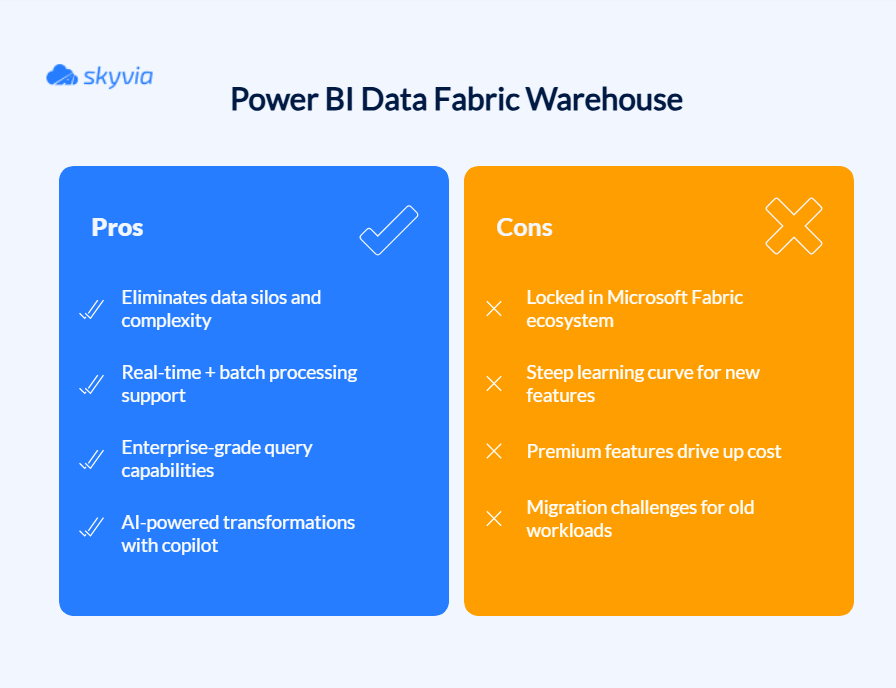

Power BI Data Fabric Warehouse is Microsoft’s modern cloud-native DWH that’s fully integrated into the broader Microsoft Fabric platform. It’s designed to unify, store, process, and analyze massive volumes of both structured and semi-structured data, all while using Delta Lake storage format on Azure Data Lake Gen2 (known as OneLake in Fabric).

Best For:

- Enterprise-scale analytics, when you need to consolidate row-level data from different sources and run large-scale analytical queries without breaking a sweat.

- It’s also a godsend for migration from legacy warehouses.

- For organizations wanting unified analytics and AI.

- Translytical scenarios, when both transactional and analytical processing must occur within the same system for real-time analytics needs.

- Finally, if compliance and governance keep you up at night, Fabric Data Warehouse comes with Microsoft-grade security and auditing baked right in.

How to Safeguard Data When Saying Goodbye to Datamarts

While Microsoft is rolling out the red carpet for their new Data Fabric Warehouse, sometimes the grass is greener on the other side – and that’s where third-party DWHs like Snowflake, Google BigQuery, and Amazon Redshift come into play. This option makes sense when:

- You’re afraid that Microsoft may decide to change something in the BI infrastructure again.

- Need to extensively transform data while moving.

- Want to work with a source that supports CDC.

- Business users will be responsible for the transfer.

- There’s no time to organize training for your team because there’s a strict deadline (October 1, 2025).

- Want to keep options open – move to the different targets within one tool, instead of using multiple Power BI connectors.

- Your data exceeds Power BI’s Import mode limits (1 GB compressed for Pro, up to 100 GB+ for Premium/Fabric).

Skyvia, in this scenario, is your friendly neighborhood data moving service – but instead of hauling furniture, it’s hauling business intelligence. This no-code platform takes the headache of data migration, making it accessible whether you’re a SQL wizard or someone who thinks ETL is a type of sandwich.

Let’s see how this can be organized using a hypothetical mid-sized organization that deposits and analyzes business data (e.g., sales, customer, or operational records, ~10-100GB) for reporting in Power BI Datamarts:

- First things first – pack your belongings before moving:

- Connect to your Datamart: Fire up SQL Server Management Studio (SSMS) and connect to your Datamart’s SQL endpoint.

- Export tables to CSV: For each table, run a SELECT query and export the results as CSV files.

- Stage your files: Upload these CSV files to a cloud storage service you prefer using Skyvia’s connectors.

- Reconnect Power BI – invite Power BI to the new home:

- Use native connectors: Power BI has built-in connectors for most major warehouses.

- Rebuild your semantic model: Recreate relationships and DAX measures.

- Test everything: Make sure your reports still sing the same tune.

- Validate and optimize – unpack and make sure everything’s in its right place:

- Compare record counts between old and new systems.

- Test your reports thoroughly.

- Add indexes for better performance.

- Set up monitoring to catch any issues or problems.

In the end, Skyvia is more cost-effective than native connectors due to reduced labor costs. Its no-code pipelines and OData endpoints minimize setup and maintenance costs, addressing native connector drawbacks (e.g., DirectQuery performance, manual refreshes).

How to Choose the Right Storage Option for You: A Decision Framework

We have already covered many storage options, scenarios, and even took a side quest to drive around the Data Fabric Warehouse migration, and this is the last stage of this search. A few more questions and one comparison table stand between you and the best way to store data for Power BI.

- How much does your data weigh, and how spaghetti-like are the connections holding it together?

File-based storage (Excel or SharePoint) or on-premises databases (SQL Server) match smaller datasets with clear-cut relationships like a glove. However, any data tends to grow, and over time, you will need something mightier, such as cloud databases (like Azure SQL or Synapse) that can scale to deliver butter-smooth results every time.

- Do you need your data delivered via express overnight shipping, or are you fine with standard weekly/daily mail?

If your business suffocates without real-time insights, Power BI’s cloud databases or Data Fabric warehouses are your oxygen supply. They’ll keep your reports fresh and current. But if your reports don’t need real-time updates, file-based storage or on-premises databases with scheduled refreshes will do just fine.

- What are the cost implications of each solution?

Cost is always a factor. Sometimes, the main one. The only reason it’s in the middle of this guide is that we wanted to focus on other aspects, too. File-based storage and local databases are the wallet-conscious choice, perfectly suited for smaller operations or businesses with tight budgets. When you graduate to enterprise scale, cloud databases and Data Fabric warehouses come at a premium, but they offer scalability, performance, and adaptability that pay dividends on every investment.

- Do you have the expertise to manage a database or a cloud environment?

If your team isn’t full of database experts, file-based storage or on-premises databases are great for keeping things simple. As your appetite expands, cloud titans like Azure SQL, Synapse, or Data Fabric serve up gourmet features, but they come with a bit more technical know-how.

- Are your data demands expected to snowball in the coming years?

If you’re just starting or working with modest datasets, file-based storage or on-premises databases will do the trick, for now. But if you aim for fast growth, cloud storage like Azure SQL or Synapse, and Data Fabric warehouses are a better option, delivering adaptive scalability and premium features to support your analytical objectives.

And let’s summarize all these facts:

| Storage Option | Data Volume & Complexity | Refresh Frequency | Budget | Scalability |

|---|---|---|---|---|

| File-Based Storage (Excel, CSV, SharePoint) | Small datasets, simple relationships | Daily/weekly | Low cost | Limited |

| On-Premises Databases (SQL Server) | Medium to large datasets, complex queries | Daily/Weekly | Medium cost | Moderate scalability |

| Cloud Databases (Azure SQL, Synapse) | Large datasets, growing complexity | Daily/Real-time | Medium/high cost | Highly scalable |

| Power BI Dataflows | Medium datasets, standardized transformation | Scheduled refresh (Daily/Weekly) | Medium cost | Moderate scalability |

| Power BI Data Fabric Warehouses | Very large datasets, complex data models | Real-time/Batch | High cost | Extremely scalable |

Conclusion

The truth is, there’s no one-size-fits-all answer, but you’ve probably started to suspect this a few chapters before. Your best way to store data for Power BI depends on:

- Data volumes

- Real-time needs

- Budget

- Team expertise

But here’s the thing – you don’t have to figure it all out in one go. Begin with what makes sense for your current situation. Maybe that’s Excel files and the comfort zone of Import mode.

Eventually, you will grow, and numerous new needs will knock on your door. However, whenever you need to transfer data somewhere (with or without transformations), Skyvia will handle the plumbing so you can focus on what really matters – turning data into insights. From pulling CRM data into SharePoint files to orchestrating seamless migrations to modern cloud warehouses, it’s the toolkit for your data integration needs.

Try Skyvia today and discover how smooth data integration can be when you’ve got the right tool in your corner of the ring.

F.A.Q. for Best storages for Power BI

When should I use DirectQuery instead of Import mode?

When the dataset is too big to import, or you require real-time updates. It works best in situations where the freshness of the data is important, but keep in mind that performance is dependent on the speed and capability of the source system.

How can I improve Power BI performance with large datasets?

Use Live Connection or DirectQuery for large datasets. If you want to keep using Import Mode, think about using incremental refresh and filtering out unnecessary records to reduce the size of the input.

What is the difference between Power BI Dataflow and Datamart?

Dataflow is a cloud-based ETL tool that transforms data for use across reports, while Datamart is a self-contained, structured dataset used for reporting, typically containing cleaned and transformed data from your DWH.

Do I always need a data gateway for my data sources?

A data gateway is only required if your source is located on-site. It ensures safe transfers by serving as a bridge between your on-premises data and Power BI. However, a gateway is not necessary for cloud-based sources.